Refining Properties with Feedback

Train prompt properties to match your quality standards by providing corrective feedback on their evaluations


Overview

Property feedback lets you refine custom LLM properties by correcting their evaluations on specific interactions. When you provide feedback indicating that a property's score doesn't match your expectations, Deepchecks uses your correction as an example to guide future evaluations. Over time, these corrections adapt generic evaluation criteria to your use case, quality standards, and domain-specific requirements.

How It Works

When you review an interaction and disagree with a property's evaluation, you can provide corrective feedback that includes:

- Your Corrected Score - Either a 1-5 rating for numeric properties or the correct category selection for categorical properties

- Reasoning - A detailed explanation of why your score or category selection reflects this interaction more accurately than the original evaluation

This feedback is stored and automatically incorporated into the property's evaluation process. The next time the property evaluates new interactions, your feedback examples are included in the LLM prompt alongside the property's guidelines, functioning as in-context learning examples that teach the evaluator how you want it to judge similar cases.
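To make the in-context mechanism concrete, here is an illustrative Python sketch of how stored corrections can be placed into an evaluation prompt: the property's guidelines come first, then each past correction with its reasoning, then the new interaction to judge. The prompt layout is an assumption for illustration, not the format Deepchecks uses internally, and `FeedbackExample` and `build_evaluation_prompt` are hypothetical names, not part of any Deepchecks SDK.

```python
from dataclasses import dataclass


@dataclass
class FeedbackExample:
    """Hypothetical stored correction: the interaction plus the user's judgment."""
    interaction: str
    corrected_score: str  # e.g. "2" for numeric or "Negative Feedback" for categorical
    reasoning: str


def build_evaluation_prompt(guidelines: str,
                            examples: list[FeedbackExample],
                            interaction: str) -> str:
    """Assemble an illustrative few-shot prompt: guidelines, past corrections, new case."""
    parts = [f"Guidelines:\n{guidelines}"]
    # Each correction becomes a worked example the evaluator can imitate.
    for i, ex in enumerate(examples, start=1):
        parts.append(
            f"Example {i}:\n"
            f"Interaction: {ex.interaction}\n"
            f"Correct evaluation: {ex.corrected_score}\n"
            f"Reasoning: {ex.reasoning}"
        )
    parts.append(f"Now evaluate this interaction:\n{interaction}")
    return "\n\n".join(parts)


prompt = build_evaluation_prompt(
    guidelines="Rate 1-5 how well the response answers the user's question.",
    examples=[FeedbackExample(
        interaction="Q: What are your pricing tiers? A: Our product has great features.",
        corrected_score="2",
        reasoning="Grammatically fine, but it answers about features, not pricing tiers.",
    )],
    interaction="Q: Do you offer an annual plan? A: Yes, billed yearly at a discount.",
)
print(prompt)
```

Placing corrections before the new interaction mirrors ordinary few-shot prompting: the more diverse the stored examples, the more cases the evaluator has to compare against.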

The Learning Process

Each piece of feedback acts as a training signal:

- Feedback Collection - You correct a property score on an interaction, explaining your reasoning

- Example Integration - Your correction becomes a reference example showing the correct judgment

- Improved Evaluation - When evaluating new interactions, the property references your feedback to make decisions more aligned with your expectations

- Continuous Refinement - As you provide more feedback across diverse cases, the property learns the nuances of your evaluation criteria

Unlike static guidelines, feedback-based refinement allows properties to adapt to edge cases, domain-specific terminology, and subjective quality standards that vary between use cases.

Providing Feedback

Accessing the Feedback Interface

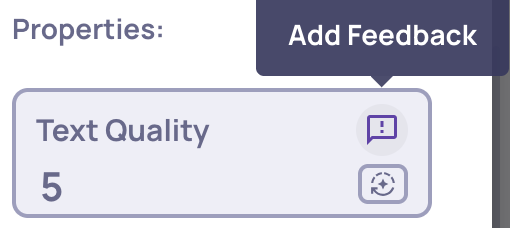

- Open any interaction in the Interactions view by clicking its row

- Navigate to the Properties section of the interaction details

- Find the property you want to refine (feedback is available only for custom LLM properties)

- Click the feedback icon next to the property score

Submitting Feedback

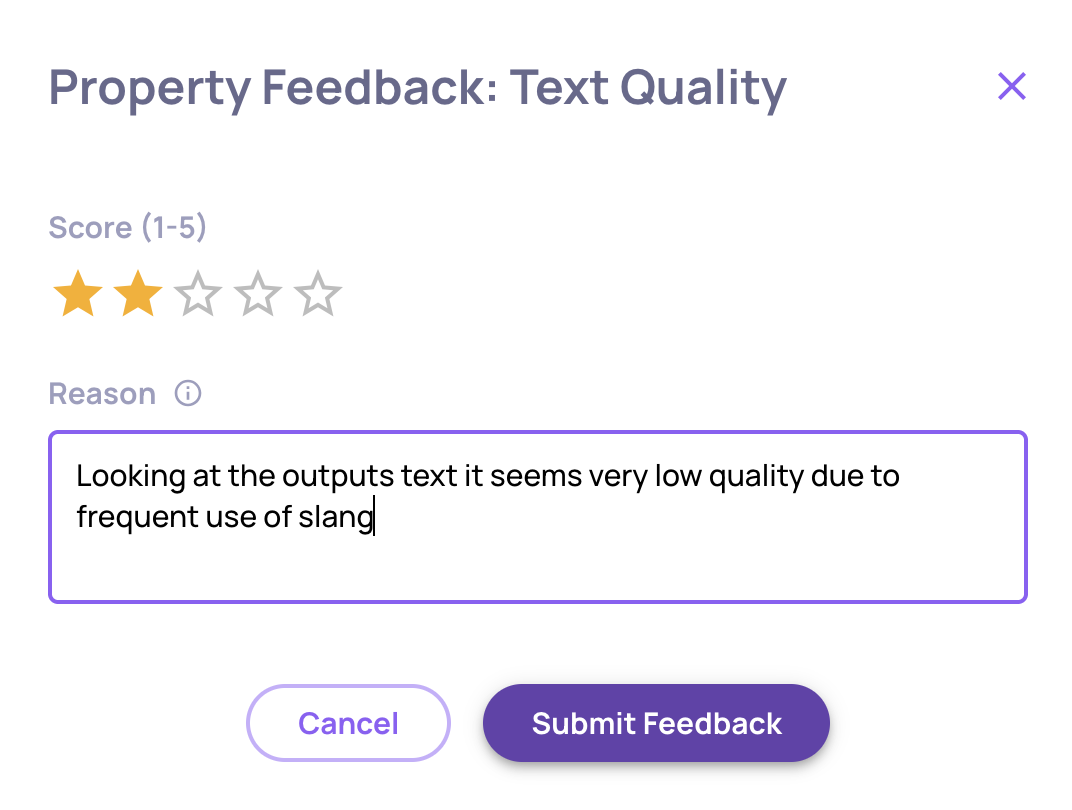

For Numeric Properties (1-5 scale):

- Select your corrected rating using the star selector

- Enter your reasoning explaining why this score is more accurate

- Example: "Rated 2 instead of 4 because, while the response is grammatically correct, it completely misses the user's actual question, which was about pricing tiers, not product features."

For Categorical Properties:

- Select the correct category from the dropdown (one or more, depending on the property's configuration)

- Explain why your selected category better reflects the interaction

- Example: "Changed from 'Neutral' to 'Negative Feedback' because the phrase 'I guess it works' clearly indicates disappointment, not neutrality."
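If you collect reviewer corrections in your own tooling before entering them in the UI, a small pre-check can catch incomplete feedback. The Python sketch below is hypothetical: `PropertyFeedback` and `validate_feedback` are illustrative names, not Deepchecks APIs. It assumes a numeric property takes an integer rating from 1 to 5 and a categorical property takes a single category unless it is configured for multiple selections.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PropertyFeedback:
    """Hypothetical record of one correction; not a Deepchecks SDK class."""
    reasoning: str
    score: Optional[int] = None  # numeric properties: corrected 1-5 rating
    categories: list[str] = field(default_factory=list)  # categorical properties


def validate_feedback(fb: PropertyFeedback,
                      allowed_categories: Optional[set[str]] = None,
                      multi_select: bool = False) -> list[str]:
    """Return a list of problems; an empty list means the feedback is complete."""
    problems = []
    if not fb.reasoning.strip():
        problems.append("reasoning is empty")
    if allowed_categories is None:
        # Numeric property: expect a whole-number rating from 1 to 5.
        if not isinstance(fb.score, int) or not 1 <= fb.score <= 5:
            problems.append("score must be an integer from 1 to 5")
    else:
        # Categorical property: one category, or several if the property allows it.
        if not fb.categories:
            problems.append("select at least one category")
        elif len(fb.categories) > 1 and not multi_select:
            problems.append("this property accepts a single category")
        unknown = set(fb.categories) - allowed_categories
        if unknown:
            problems.append(f"unknown categories: {sorted(unknown)}")
    return problems


numeric = PropertyFeedback(
    score=2,
    reasoning="Grammatically correct, but it answers about product features, not pricing tiers.",
)
categorical = PropertyFeedback(
    categories=["Negative Feedback"],
    reasoning="'I guess it works' signals disappointment, not neutrality.",
)
print(validate_feedback(numeric))  # []
print(validate_feedback(categorical, allowed_categories={"Neutral", "Negative Feedback"}))  # []
```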

Writing Effective Reasoning:

The reasoning field is critical: it is what teaches the property evaluator your judgment criteria. Effective feedback reasoning:

- ✅ Explains why your score is correct, not just what the correct score is

- ✅ Points out specific details in the interaction data that justify your rating

- ✅ Clarifies edge cases or ambiguous scenarios

- ✅ Highlights what the original evaluation missed or misinterpreted

Avoid generic explanations like "This score is wrong" or "It should be higher." Instead, provide specific context: "The response contains three factual errors (for example, it mentions 2023 when the input asks about 2024 data), which warrants a low accuracy score even though the response is well-written."
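The guidance above can also be turned into a rough self-check for reviewers. The Python sketch below is a hypothetical heuristic, not a Deepchecks feature: it flags the generic phrasings quoted above, very short reasoning (the 12-word threshold is an arbitrary assumption), and reasoning that quotes no text and cites no figures, using quotes or digits as a crude proxy for "points out specific details."

```python
import re

# Generic phrasings the guidance advises against (illustrative, not exhaustive).
GENERIC_PHRASES = ("this score is wrong", "it should be higher", "it should be lower")

# Quoted spans ('...' not part of a contraction, or "...") or any digit.
EVIDENCE = re.compile(r"(?<!\w)'[^']+'(?!\w)" + r'|"[^"]+"|\d')


def reasoning_warnings(reasoning: str, min_words: int = 12) -> list[str]:
    """Hypothetical pre-submit check; returns human-readable warnings."""
    text = reasoning.strip().lower()
    warnings = []
    if any(phrase in text for phrase in GENERIC_PHRASES):
        warnings.append("generic phrasing: explain why, not just that the score is off")
    if len(text.split()) < min_words:
        warnings.append(f"fewer than {min_words} words: add details from the interaction")
    if not EVIDENCE.search(reasoning):
        warnings.append("no quotes or figures: consider citing the exact text you are judging")
    return warnings


print(reasoning_warnings("This score is wrong"))  # three warnings
```

A heuristic like this cannot judge whether the reasoning is correct; it only nudges reviewers toward the specific, evidence-based style that gives the evaluator useful examples.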
