Uploading the Data

Follow the steps below to upload the data in one of two ways: with the Python SDK or through the Deepchecks UI.

We will create two types of interactions, Summarization and Feature Extraction, one for each objective. In this demo, we will compare the performance of three versions based on our evaluation set and then demonstrate production monitoring on the selected version.

Summarization

We will upload three versions of the evaluation dataset to the Deepchecks platform. Subsequently, we will also upload a second dataset to the Production Environment.

The versions differ in the prompt used to generate the summaries:

- Base Version: This is our initial prompt, which includes general summarization instructions. This version was annotated by a human expert based on the product requirements.

- Attractive Version: To address issues with the attractiveness of the summaries, we adjusted the prompt by incorporating background information about our use case and increased the "temperature" parameter to generate more appealing summaries.

- Balanced Version: This final version addresses various issues identified in earlier versions, striving to achieve a balance between the factual accuracy of the text and its attractiveness.

In order to evaluate both the summaries and the prompt used, we make sure each CSV contains the following columns:

| session_id | user_interaction_id | interaction_type | input | output | full_prompt | user_annotation | annotation_reason | product_category | started_at |
|---|---|---|---|---|---|---|---|---|---|
| Used to connect different interactions within the same session. | Must be unique within a single version. | The type of the interaction; in this case, "Summarization". | (mandatory) The original document. | (mandatory) The summary. | The full prompt to the LLM used in this interaction. | Was the pipeline response good enough? | The annotator's reason for the annotation. Available only for the base version. | A user-value property indicating the product category, for analysis purposes. | Timestamp at which the data was streamed into the system, formatted as YYYY-MM-DD HH:MM:SS+UTC. Available only for production. |
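Before uploading, it can help to check each CSV against the column list above. The following is a minimal sketch using only the Python standard library; the function name `validate_rows` and the specific checks (header presence, non-empty mandatory columns, unique `user_interaction_id`) are illustrative assumptions and not part of the Deepchecks SDK.

```python
import csv
import io

# Column names taken from the Summarization table above.
SUMMARIZATION_COLUMNS = [
    "session_id", "user_interaction_id", "interaction_type", "input", "output",
    "full_prompt", "user_annotation", "annotation_reason", "product_category",
    "started_at",
]


def validate_rows(csv_text, expected_columns=SUMMARIZATION_COLUMNS):
    """Return a list of problems found in the CSV text; an empty list means it passed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [col for col in expected_columns if col not in header]
    if missing:
        return [f"missing columns: {', '.join(missing)}"]

    problems = []
    seen_ids = set()
    for row_no, row in enumerate(reader, start=1):
        # "input" and "output" are marked mandatory in the table.
        for col in ("input", "output"):
            if not (row.get(col) or "").strip():
                problems.append(f"row {row_no}: empty mandatory column '{col}'")
        # user_interaction_id must be unique within a single version (one CSV here).
        uid = row.get("user_interaction_id")
        if uid in seen_ids:
            problems.append(f"row {row_no}: duplicate user_interaction_id '{uid}'")
        seen_ids.add(uid)
    return problems
```

To check a file on disk, read it with `open(path, newline="", encoding="utf-8").read()` and pass the text to `validate_rows`. Columns that are only populated for some datasets (`annotation_reason`, `started_at`) are checked for presence in the header only, not for values.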

Feature Extraction

We will upload one version of the evaluation dataset to the Deepchecks platform. Later, we will also upload a second dataset to the Production Environment, simulating production data being streamed over time.

In order to evaluate the feature extraction over time, we make sure each CSV contains the following columns:

Note: The session_id connects the two applications.

| session_id | user_interaction_id | interaction_type | input | output | full_prompt | percent_of_valid_values | product_category | started_at |
|---|---|---|---|---|---|---|---|---|
| Used to connect different interactions within the same session. | Must be unique within a single version. | The type of the interaction; in this case, "Feature Extraction". | (mandatory) The original document. | (mandatory) The generated metadata, formatted as JSON. | The full prompt to the LLM used in this interaction. | A user-value property computed offline: the percent of non-null values out of the total number of keys. | A user-value property indicating the product category, for analysis purposes. | Timestamp at which the data was streamed into the system, formatted as YYYY-MM-DD HH:MM:SS+UTC. Available only for production. |
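The `percent_of_valid_values` property is described above as the percent of non-null values out of the total keys in the generated metadata. A minimal sketch of one way to compute it offline follows. It assumes the value is expressed on a 0-100 scale and that only top-level keys of the JSON object are counted; neither detail is stated in this guide, and the function name and sample keys are hypothetical.

```python
import json


def percent_of_valid_values(output_json: str) -> float:
    """Percent (0-100, an assumed scale) of top-level keys whose value is not null."""
    metadata = json.loads(output_json)
    # Treat anything other than a non-empty JSON object as having no valid values.
    if not isinstance(metadata, dict) or not metadata:
        return 0.0
    non_null = sum(1 for value in metadata.values() if value is not None)
    return 100.0 * non_null / len(metadata)


# Example with made-up keys: two of the four values are null.
sample_output = '{"brand": "Acme", "color": null, "size": "M", "material": null}'
print(percent_of_valid_values(sample_output))  # prints 50.0
```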

Use Deepchecks' Python SDK (Option 1)

Open the Demo Notebook via Colab or Download it Locally

Click the badge below to open the notebook in Google Colab, or click here to download it. Set your API token (see below), and you're ready to go!

Get your API key from the user menu in the app:

If you are running locally, we recommend installing the Deepchecks client SDK in a Python virtual environment.
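When running the notebook locally, one common way to supply the API token is to read it from an environment variable rather than pasting it into the code. The sketch below illustrates this under stated assumptions: the variable name `DEEPCHECKS_API_TOKEN` and the helper `load_api_token` are hypothetical and are not defined by the Deepchecks SDK.

```python
import os

# Hypothetical variable name chosen for this sketch; not defined by Deepchecks.
TOKEN_ENV_VAR = "DEEPCHECKS_API_TOKEN"


def load_api_token(environ=os.environ) -> str:
    """Return the API token from the environment, or raise if it is not set."""
    token = environ.get(TOKEN_ENV_VAR, "").strip()
    if not token:
        raise RuntimeError(
            f"Set {TOKEN_ENV_VAR} to the API key copied from the user menu in the app."
        )
    return token
```

The returned string can then be used wherever the notebook asks for the API token.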

Or: Use the Deepchecks UI (Option 2)

Click here to Download the Demo Data

You'll find the three demo datasets used in this example there.

-

Click the Create New Application on the Applications page.

-

Create a New Application, name it "E-Commerce", and select a default Interaction Type of Summarization.

-

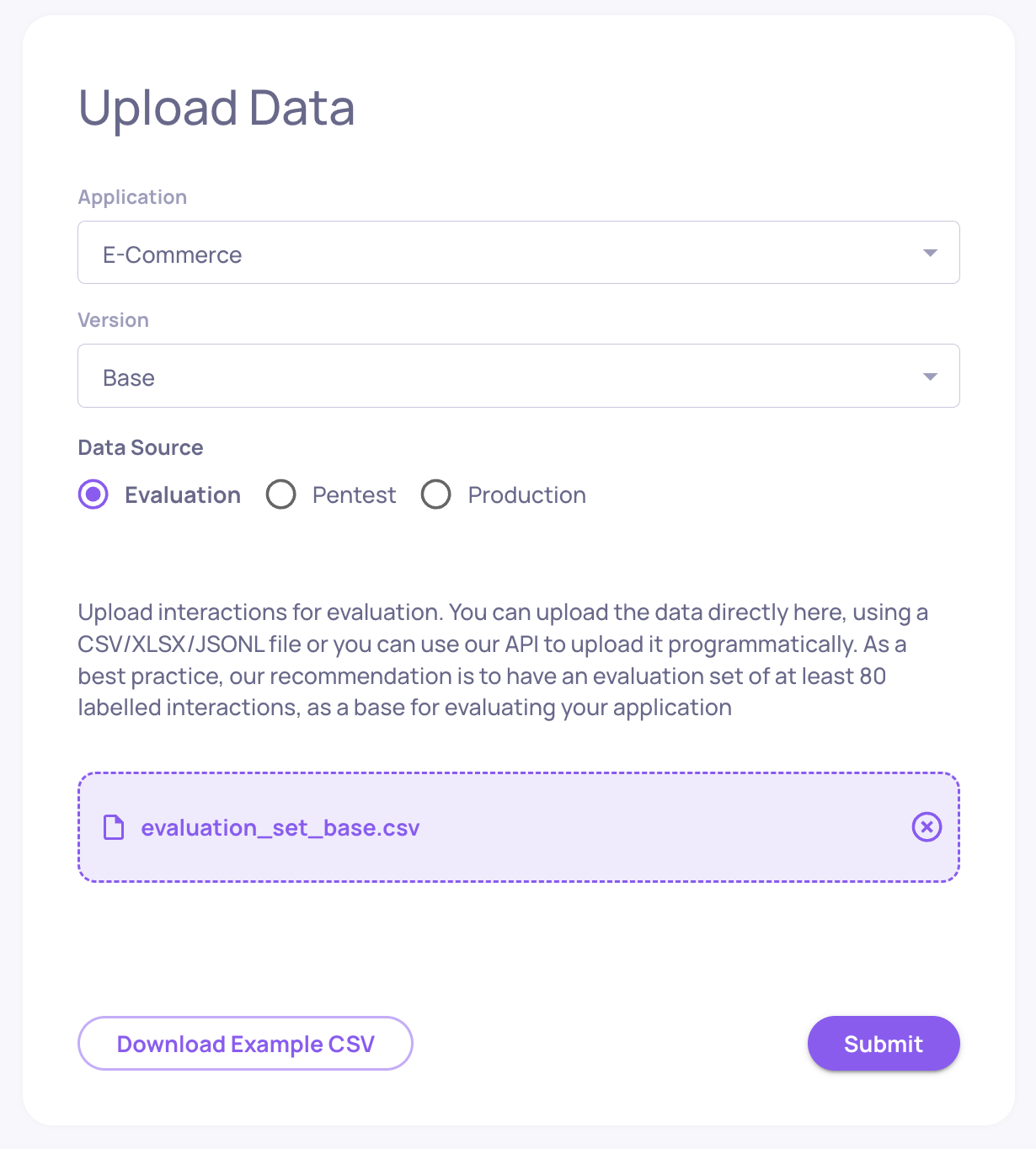

Uploading Evaluation Set Data

Click the "Upload Data" button in the bottom-left corner of the screen.

Create a new version called Base and upload the relevant CSV file.

Repeat the process for versions Attractive and Balanced.

- Uploading Production Data

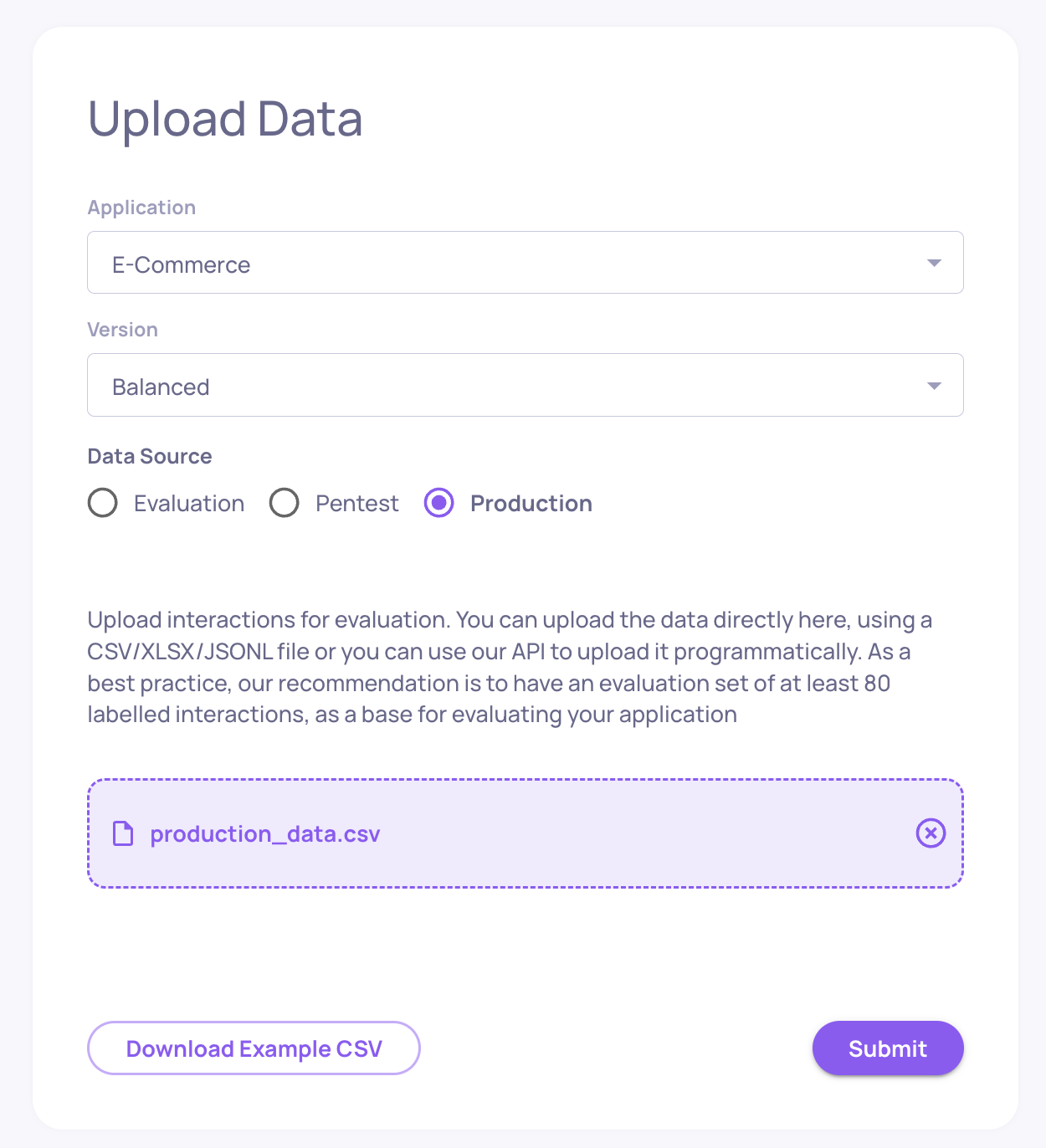

In the data upload screen, select the Balanced version, switch the upload environment from evaluation to production, and attach the CSV named "production_data".

Success! The E-Commerce Application is now in the Deepchecks App

- Some properties take a few minutes to calculate, so some of the data, such as properties and estimated annotations, will be updated over time.

- You'll see a ✅ Completed Processing Status on the Applications page when processing is finished.
