Root Cause Analysis

Imagine this scenario: You've just been alerted by your customer-success team or Deepchecks monitoring that your mission-critical deployed model is behaving erratically. Or perhaps you're planning to thoroughly examine a version candidate before its production release.

In either case, Deepchecks provides a comprehensive suite of tools to gain deep insights into your application's performance:

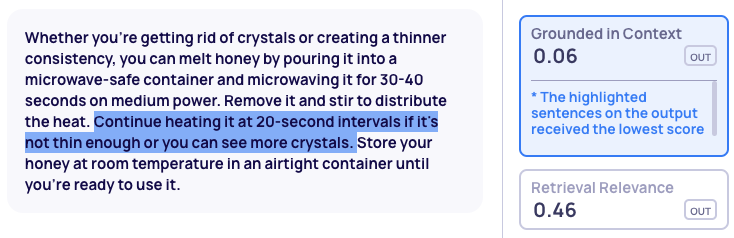

Property Explainability

Found in the single sample view, this tool allows for a granular understanding of your application's mistakes. Depending on the property you click, you'll see either an in-depth explanation of the reasoning behind the score or the chunk of the output where the property score is lowest or highest.

Annotation Breakdown

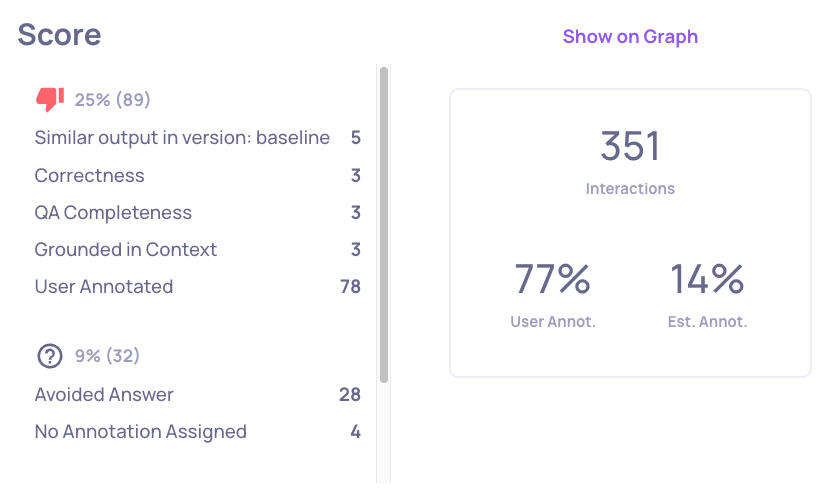

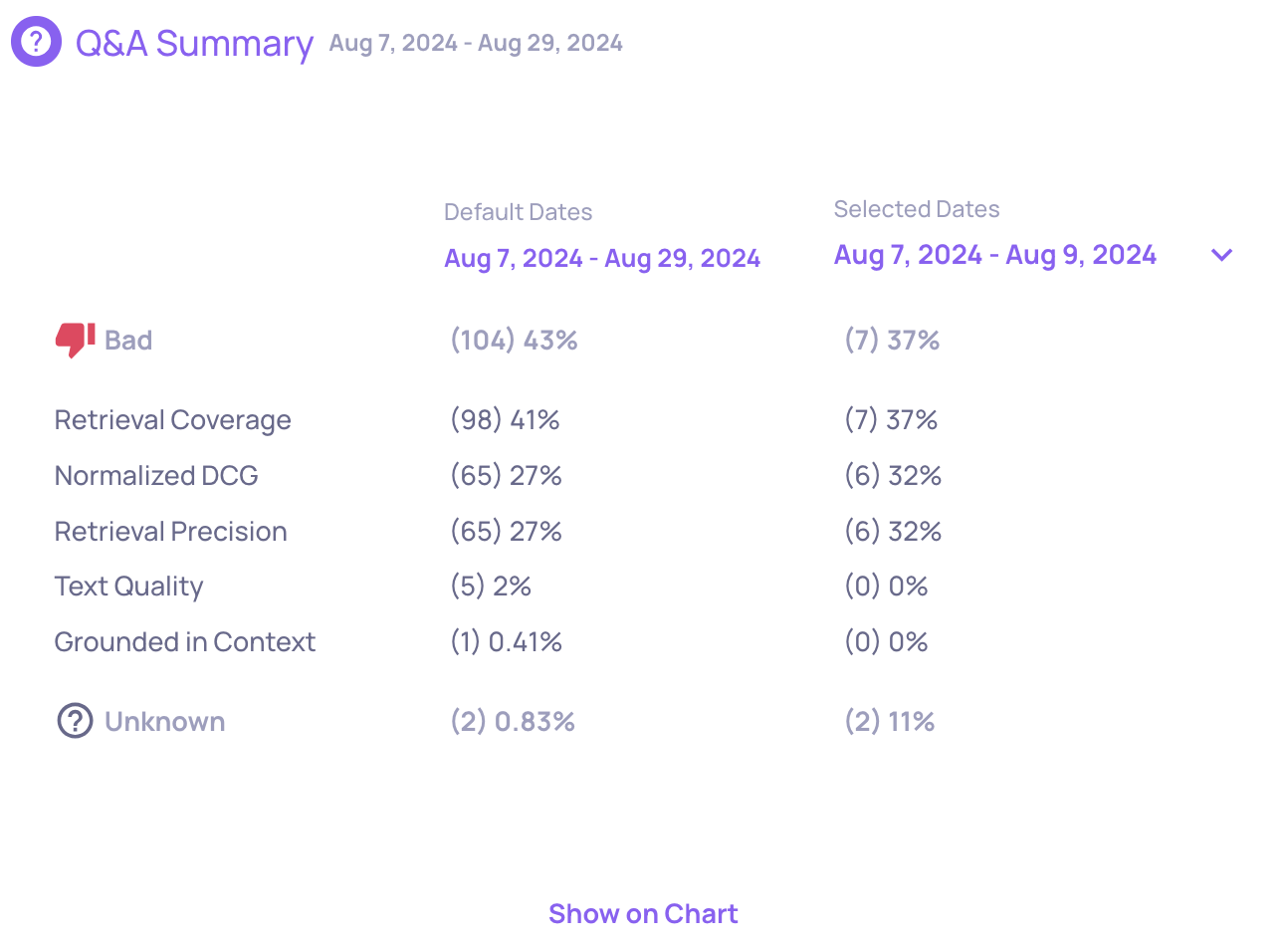

Located in the overview screen, this feature offers a quick snapshot of the distribution of your application's errors and their prevalence.

In the Production environment, you can compare the score breakdown across two different time ranges to identify trends, detect potential drifts, and better understand changes in your model's behavior. This comparison helps highlight which properties may be contributing to performance issues—or, conversely, driving improvements—allowing for faster root-cause analysis and more informed decision-making.
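To make the idea of a time-range comparison concrete, here is a minimal sketch of the underlying arithmetic: the change in mean score per property between two windows. It is not the Deepchecks implementation or API. The function name `score_shift`, the input layout, and the "Relevance" property name are assumptions made purely for illustration.

```python
from statistics import mean

def score_shift(earlier, later):
    """Per-property change in mean score (later minus earlier).

    Both arguments map a property name to a list of scores collected in
    one time range (hypothetical layout). Results are sorted so the
    largest drop comes first.
    """
    shifts = {}
    for prop in earlier.keys() & later.keys():
        shifts[prop] = mean(later[prop]) - mean(earlier[prop])
    return sorted(shifts.items(), key=lambda kv: kv[1])

# Illustrative scores only, not real measurements.
earlier = {"Grounded in Context": [0.9, 0.8, 0.85], "Relevance": [0.7, 0.75, 0.8]}
later = {"Grounded in Context": [0.6, 0.55, 0.65], "Relevance": [0.72, 0.78, 0.81]}

shifts = score_shift(earlier, later)
for prop, delta in shifts:
    print(f"{prop}: {delta:+.2f}")
```

A property whose mean drops sharply between the two ranges is a natural first place to look when investigating a regression.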

Clicking a property or annotation reason takes you to the interactions screen with relevant filters applied, showing only interactions affected by that reason and carrying that annotation.

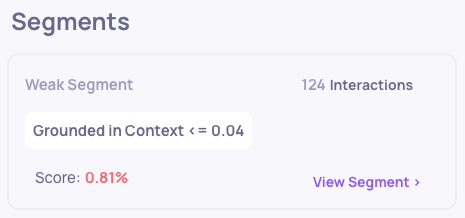

Weak Segments

This automated analysis uses Deepchecks-generated features to identify specific data segments where your application underperforms. These features encompass various aspects of the input, retrieved information, and output, including text length, topics, and more.
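As a rough illustration of what "weak segment" means, the sketch below buckets interactions by one numeric feature and flags buckets whose mean score falls well below the overall mean. This is a simplified stand-in, not the algorithm Deepchecks uses. The function name, the `text_length` and `score` fields, and the `margin` and `min_size` parameters are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

def weak_segments(interactions, feature, bucket_size, margin=0.1, min_size=2):
    """Flag feature buckets whose mean score is below the overall mean by `margin`.

    Returns a list of ((bucket_start, bucket_end), mean_score, count) tuples.
    """
    overall = mean(i["score"] for i in interactions)
    buckets = defaultdict(list)
    for i in interactions:
        start = (i[feature] // bucket_size) * bucket_size
        buckets[start].append(i["score"])
    weak = []
    for start, scores in sorted(buckets.items()):
        # Skip tiny buckets: a single bad interaction is not a segment.
        if len(scores) >= min_size and mean(scores) < overall - margin:
            weak.append(((start, start + bucket_size), mean(scores), len(scores)))
    return weak

# Illustrative data only.
interactions = [
    {"text_length": 40, "score": 0.9},
    {"text_length": 80, "score": 0.85},
    {"text_length": 120, "score": 0.8},
    {"text_length": 160, "score": 0.9},
    {"text_length": 450, "score": 0.3},
    {"text_length": 480, "score": 0.4},
]

weak = weak_segments(interactions, "text_length", bucket_size=100)
print(weak)
```

In this toy data, only the bucket of long inputs is flagged, which mirrors the kind of finding a weak-segment analysis is meant to surface.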

Recommendations

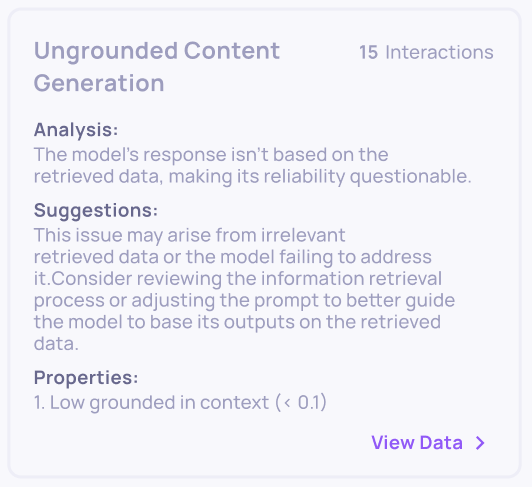

This automated analysis leverages Deepchecks-generated properties to detect common pitfalls in LLM-based applications and suggest solutions based on the property scores. For instance, if both the PII Risk and Grounded in Context properties score high, it may indicate that your retrieved data contains personal information that your model isn't filtering out during output generation. In such cases, we might recommend including specific instructions in your prompt or implementing a post-processing detection mechanism. The screenshot in this section shows another example, for hallucination detection and mitigation.
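The PII example can be read as a simple rule over property scores. The sketch below expresses that one rule in code to show the shape of the logic. It is not how Deepchecks generates recommendations. The `recommend` function, the score dictionary layout, and the 0.7 threshold are assumptions for illustration.

```python
def recommend(scores, threshold=0.7):
    """Return recommendation strings for a dict of property name -> score.

    `threshold` is a hypothetical cut-off for what counts as "high".
    """
    recommendations = []
    pii_high = scores.get("PII Risk", 0.0) >= threshold
    grounded_high = scores.get("Grounded in Context", 0.0) >= threshold
    if pii_high and grounded_high:
        # High PII risk in a well-grounded answer suggests the personal
        # information comes from the retrieved context itself.
        recommendations.append(
            "Retrieved data may contain personal information: add prompt "
            "instructions or a post-processing PII detection step."
        )
    return recommendations

print(recommend({"PII Risk": 0.9, "Grounded in Context": 0.95}))
print(recommend({"PII Risk": 0.2, "Grounded in Context": 0.95}))
```

The second call returns an empty list because only one of the two conditions holds, so the rule does not fire.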

Failure Mode Analysis 🔍

Deepchecks provides an LLM-powered analysis tool that helps you quickly identify and understand the main failure patterns behind low-scoring interactions. The analysis groups related issues into high-level, meaningful categories and presents each category with concrete examples taken from the actual failing cases.

For LLM-based properties (with full reasoning), the analysis also includes an estimated root cause for each category, offering insight into potential model weaknesses. For Deepchecks built-in GPU properties (e.g., when only highlighted text is available), the tool still clusters content patterns and presents representative examples.

You can further refine the analysis by providing custom guidelines to the analysis agent. Guidelines may include assumptions, suspected failure modes, or specific areas of concern you want the summary to focus on. This helps tailor the output to your evaluation needs and domain context.

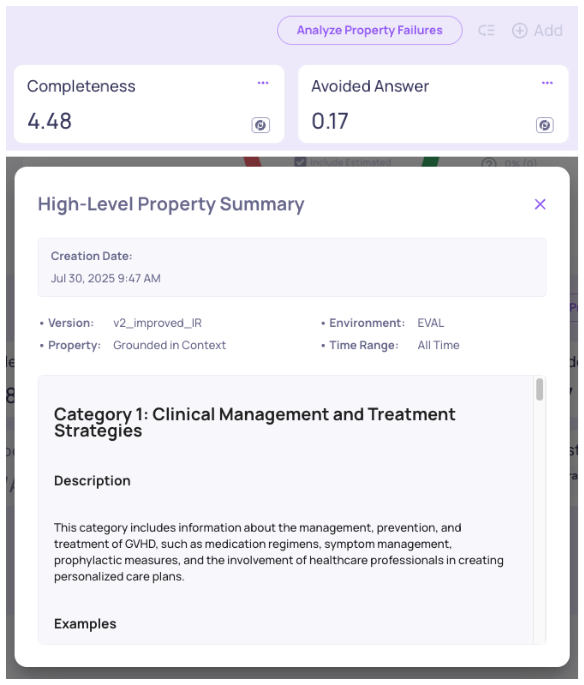

Property-Level Failure Mode Analysis

Property-level analysis focuses on a single evaluation property within a specific version. This allows you to investigate why the model failed for that specific property, surfacing patterns such as recurring phrasing issues, incorrect reasoning steps, or frequent violations of that property’s rules.

This is especially helpful when you want to zoom in on a particular metric, for example, understanding why the model consistently violates an instruction-following property or misclassifies certain examples. Note that if there are fewer than 5 interactions matching the criteria (low-scoring interactions for the property), the system returns a "Not enough available data" message instead of generating the summary.
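The minimum-data rule can be pictured as a guard that runs before any summary is generated. The sketch below is a hypothetical illustration, not Deepchecks code. The function name, the interaction layout, and the `low_score_threshold` value are assumptions. Only the minimum of 5 interactions and the message text come from the description above.

```python
MIN_INTERACTIONS = 5  # minimum number of low-scoring interactions, per the text above

def select_for_analysis(interactions, prop, low_score_threshold=0.5):
    """Return (failing_interactions, message) for one property.

    `low_score_threshold` is a hypothetical definition of "low-scoring".
    """
    failing = [
        i for i in interactions
        if i["scores"].get(prop, 1.0) < low_score_threshold
    ]
    if len(failing) < MIN_INTERACTIONS:
        return None, "Not enough available data"
    return failing, None

# Illustrative data: only three low-scoring interactions for the property.
sample = [{"scores": {"Grounded in Context": s}} for s in (0.1, 0.2, 0.3, 0.9, 0.95)]
failing, message = select_for_analysis(sample, "Grounded in Context")
print(failing, message)
```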

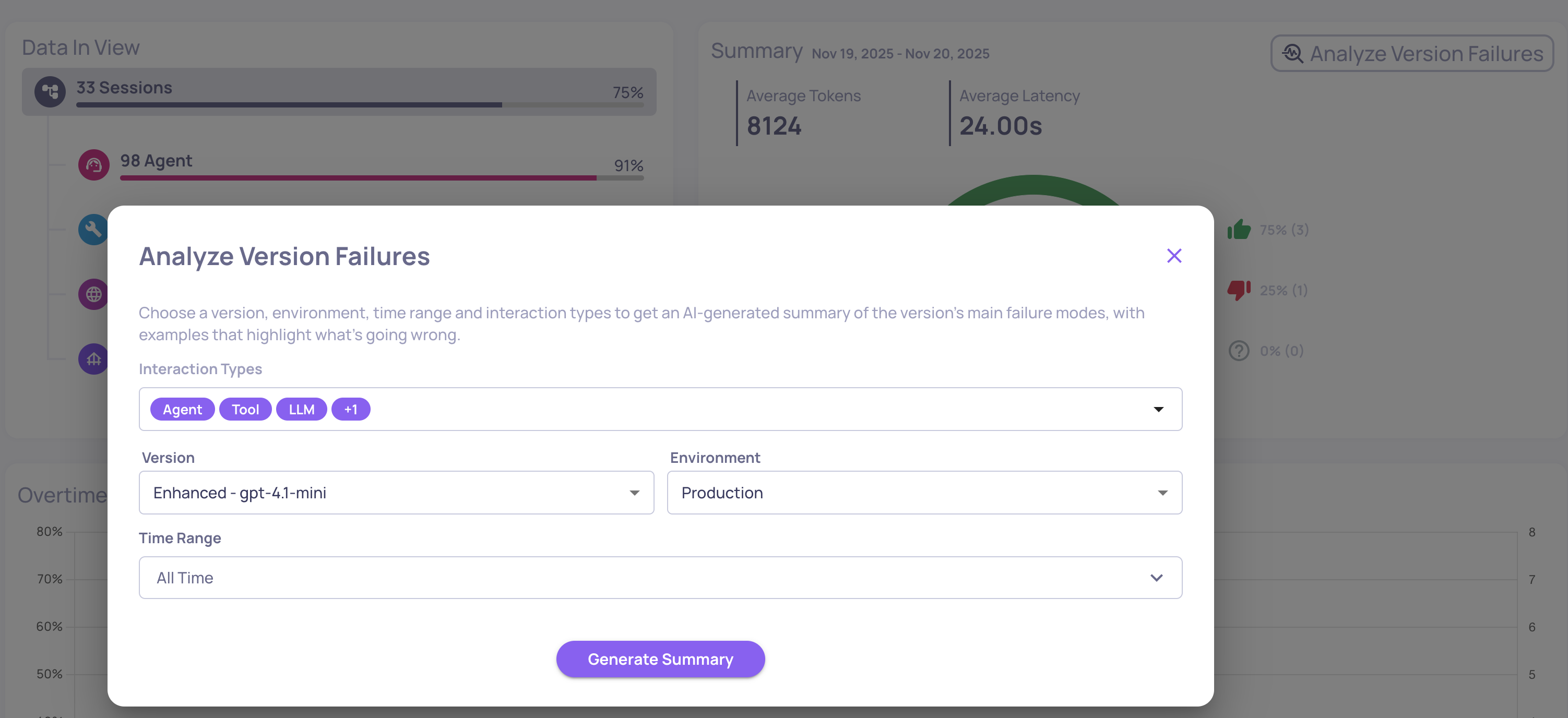

Version-Level Failure Mode Analysis

In addition to property-level insights, Deepchecks now supports generating a comprehensive, **version-level failure mode analysis report.** This report aggregates failures across all interaction types and all selected evaluation properties within the version, creating a unified view of the dominant failure modes in the entire system.

The version-level summary is ideal for identifying cross-cutting issues, prioritizing model improvements, and spotting regressions that may not surface clearly in a single property.

To generate it, open the Version page and select “Analyze version failures” (this option appears on the Summary chart in the Overview screen when filtered on "Sessions"). The tool will compile examples from relevant properties and produce a consolidated, structured summary of your model’s main shortcomings for that version.

**Note: Filtering the Overview screen**

You can filter the Overview screen using several options. At the top of the page, you’ll find the global filters: Application, Version, and Time Range.

In addition, the page itself includes local filters, such as Interaction Type and Span Filters (e.g., filter by span name, run status code, etc.).
