Usage Management and Optimization

A guide to tracking Deepchecks usage and using your DPUs optimally for data upload and LLM features

DPUs (Deepchecks Processing Units) are the core usage units in the Deepchecks platform. Think of them as a usage bank that is refilled every month and drawn on by both data uploading and LLM-based features. Uploading data costs DPUs based on the number of tokens uploaded, while prompt properties and other LLM features consume DPUs based on the tokens they process and the model chosen to run them.

To make the most of your plan, use DPUs efficiently, focusing them on the parts of your pipeline that yield the most valuable evaluations and insights.

Understanding DPUs & Monitoring Usage

Base Usage

Base usage refers to the amount of data you upload to the platform. Uploading 1 million tokens for evaluation costs 250 DPUs, while uploading 1 million tokens for storage only (unevaluated) costs 40 DPUs. Token counts include all data fields submitted with each interaction.
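To estimate base usage before uploading, the two rates above can be turned into a small calculator. This is a minimal sketch that assumes billing is linear in token count; `base_usage_dpus` is a hypothetical helper, not part of any Deepchecks SDK, and the platform's own metering in the Usage tab remains the authoritative figure.

```python
# Base-usage rates stated above, in DPUs per 1 million uploaded tokens.
EVALUATED_DPU_PER_M = 250
STORAGE_ONLY_DPU_PER_M = 40


def base_usage_dpus(evaluated_tokens: int, storage_only_tokens: int = 0) -> float:
    """Estimate base-usage DPUs for an upload (hypothetical helper).

    Assumes linear pricing. Token counts should cover every data field
    submitted with each interaction (input, output, history, and so on).
    """
    evaluated = evaluated_tokens / 1_000_000 * EVALUATED_DPU_PER_M
    stored = storage_only_tokens / 1_000_000 * STORAGE_ONLY_DPU_PER_M
    return evaluated + stored


if __name__ == "__main__":
    # 3M tokens sent for evaluation plus 5M tokens sent for storage only.
    print(base_usage_dpus(3_000_000, 5_000_000))
```

Because storage-only data costs 40 rather than 250 DPUs per million tokens, data you want to keep but not evaluate is far cheaper to upload without evaluation.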

LLM Usage

LLM usage covers all tokens processed by LLMs—both inputs and outputs—across Deepchecks features. This includes built-in LLM properties, user-created prompt properties, and other features like document classification and Analyze Property Failures. Prompt properties run on user-selected models, each with its own token-to-DPU ratio.
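The accounting described above (input and output tokens both count, weighted by the ratio of whichever model runs each feature) can be sketched as follows. The `LLMCall` record, the expression of each ratio as DPUs per million tokens, and the ratio value used in the example are all illustrative assumptions; check your plan for the actual per-model ratios.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LLMCall:
    """One LLM invocation made while processing an interaction (hypothetical record)."""
    feature: str                   # e.g. a built-in LLM property or a prompt property
    input_tokens: int              # prompt tokens, including any data fields sent
    output_tokens: int             # tokens generated by the model
    dpu_per_million_tokens: float  # placeholder ratio for the model this feature runs on


def llm_usage_dpus(calls: List[LLMCall]) -> float:
    """Sum LLM usage: input and output tokens both count, weighted per model."""
    return sum(
        (c.input_tokens + c.output_tokens) / 1_000_000 * c.dpu_per_million_tokens
        for c in calls
    )


if __name__ == "__main__":
    # Placeholder ratio of 100 DPUs per million tokens, for illustration only.
    calls = [
        LLMCall("Clarity of User Input", input_tokens=450, output_tokens=50,
                dpu_per_million_tokens=100.0),
        LLMCall("Document Classification", input_tokens=1800, output_tokens=200,
                dpu_per_million_tokens=100.0),
    ]
    print(llm_usage_dpus(calls))
```

Multiplying the per-interaction figure by your monthly interaction volume gives a rough view of how much of the monthly DPU bank each feature draws on.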

Translation Usage

If translation is enabled for your app, tokens sent through the translation pipeline will consume additional DPUs. The cost depends on the translation method used and is charged separately from base or LLM usage.

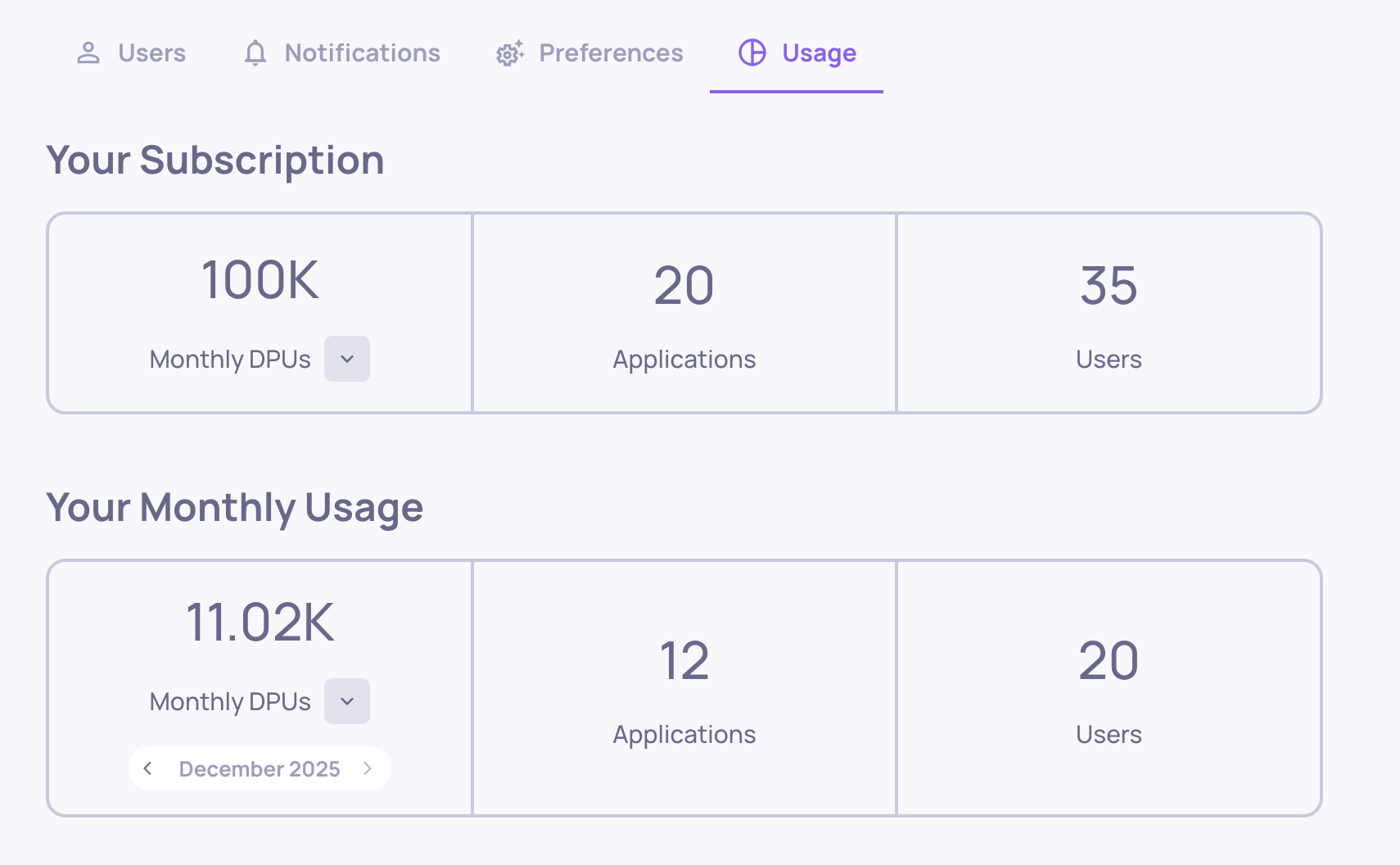

Usage and Plan Display

All of your usage, broken down by type and matched to your current plan, is visible in the Usage tab under Workspace Settings.

Optimizing Base Usage

Sample Your Production Data

Sampling is an essential tool for working efficiently with large-scale production data. Once your application reaches production and begins generating high volumes of user interactions, it is often unnecessary (and costly) to evaluate every single session. Deepchecks lets you configure sampling at the application level, helping you balance cost and insight.

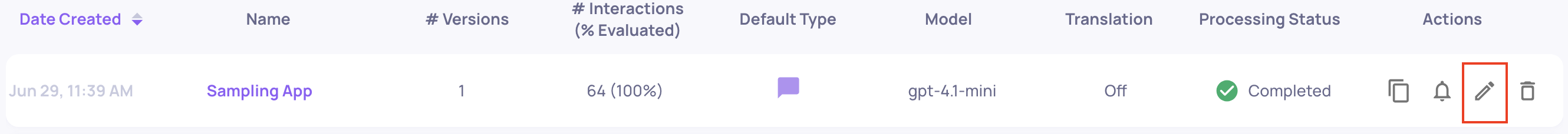

When you start uploading production data to Deepchecks, the first step is to set an appropriate sampling ratio. You can do this in the Edit Application window.

"Edit Application" Button on the "Manage Applications" Screen

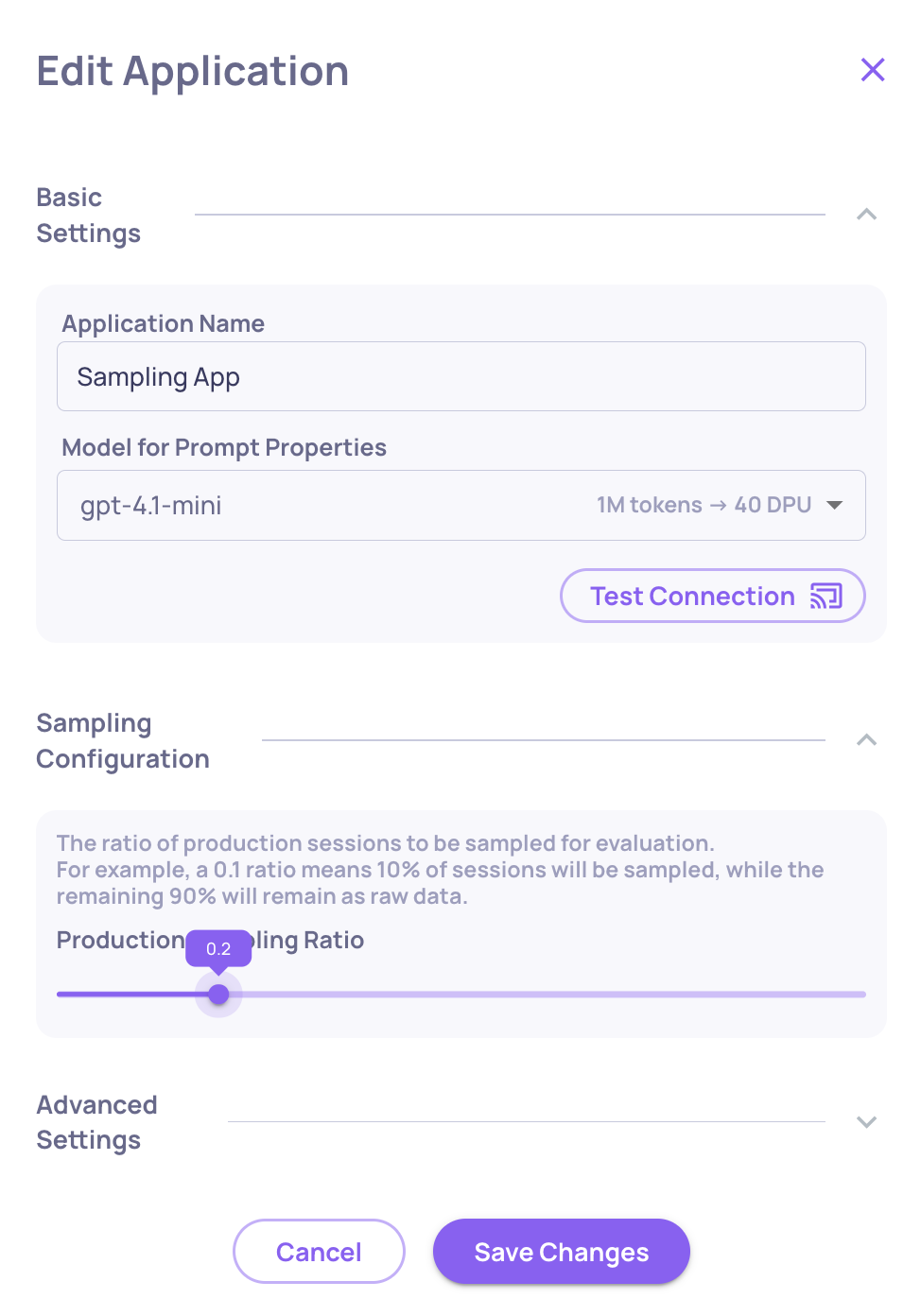

For high-volume apps, a 20% sampling rate for evaluated data (a ratio of 0.2) usually yields statistically reliable results while keeping costs reasonable: about 35% of the cost of evaluating 100% of sessions. Deepchecks selects sessions at random, so the evaluated sessions represent the full data distribution.
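The cost effect of a sampling ratio can be worked out from the base-usage rates. This sketch assumes, for illustration, that sessions not selected for evaluation are still uploaded and billed at the storage-only rate of 40 DPUs per million tokens; `sampled_base_cost_fraction` is a hypothetical helper and covers base usage only, not LLM usage.

```python
# Base-usage rates from the Base Usage section, in DPUs per 1 million tokens.
EVALUATED_DPU_PER_M = 250
STORAGE_ONLY_DPU_PER_M = 40


def sampled_base_cost_fraction(sampling_ratio: float) -> float:
    """Base-usage cost with sampling, relative to evaluating 100% of sessions.

    Assumption: sessions not selected for evaluation are still uploaded
    and billed at the storage-only rate.
    """
    if not 0.0 <= sampling_ratio <= 1.0:
        raise ValueError("sampling_ratio must be between 0 and 1")
    blended_rate = (
        sampling_ratio * EVALUATED_DPU_PER_M
        + (1 - sampling_ratio) * STORAGE_ONLY_DPU_PER_M
    )
    return blended_rate / EVALUATED_DPU_PER_M


if __name__ == "__main__":
    for ratio in (0.1, 0.2, 0.5, 1.0):
        print(f"ratio {ratio}: {sampled_base_cost_fraction(ratio):.1%} of full cost")
```

At a ratio of 0.2 this gives 0.2 × 250 + 0.8 × 40 = 82 DPUs per million tokens, roughly a third of the 250 DPUs needed to evaluate everything, which is in line with the figure quoted above.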

Setting the Sampling Ratio at 0.2; "Save Changes" Must Be Clicked For the Ratio to Be Applied

Optimize the Size of Your Evaluation Dataset

When evaluating your pipeline, more data doesn’t always yield better results. You don’t need to send all of your production data for evaluation—what matters is having a dataset that’s representative of your pipeline’s behavior. Building this dataset should be intentional, with careful attention to size: too small, and it may not reflect real-world usage patterns; too large, and you may incur unnecessary DPU costs without added insight. Focus on curating a diverse and statistically meaningful sample that balances coverage with efficiency.
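One common way to put a number on "statistically meaningful" is the textbook sample-size formula for estimating a proportion, such as the share of interactions that pass a given property threshold: n = z²·p(1−p)/E², optionally with a finite-population correction. This is a general statistical rule of thumb, not a Deepchecks feature, and it says nothing about diversity; a sample of the right size still needs to cover the kinds of inputs your pipeline sees.

```python
import math
from typing import Optional


def sample_size_for_proportion(margin_of_error: float, z: float = 1.96,
                               expected_rate: float = 0.5,
                               population: Optional[int] = None) -> int:
    """Interactions needed to estimate a rate within +/- margin_of_error.

    z=1.96 corresponds to roughly 95% confidence. expected_rate=0.5 is the
    most conservative (largest-sample) choice when the true rate is unknown.
    """
    if not 0 < margin_of_error < 1:
        raise ValueError("margin_of_error must be in (0, 1)")
    n = (z ** 2) * expected_rate * (1 - expected_rate) / margin_of_error ** 2
    if population is not None:
        # Finite population correction: a small pool needs fewer samples.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


if __name__ == "__main__":
    print(sample_size_for_proportion(0.05))                   # large pool
    print(sample_size_for_proportion(0.05, population=1000))  # small pool
```

For a ±5% margin at about 95% confidence this works out to roughly 385 interactions, and sending many more than that buys little additional precision for the DPUs spent.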

Pre-Process and Parse Your Data

Large blobs of raw or verbose text may include information that is not relevant for evaluation (e.g., characters with no actual content). Wherever possible, parse or trim your data fields to keep only the parts needed for quality and performance insights.
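A minimal pre-processing pass might strip markup, collapse runs of filler characters and whitespace, and optionally cap field length before upload. The `clean_field` helper below is a hypothetical sketch; the regex-based tag stripping is a rough heuristic (use a real HTML parser for complex markup), and you should only remove content your evaluations do not rely on.

```python
import html
import re
from typing import Optional

_TAG_RE = re.compile(r"<[^>]+>")            # crude HTML/XML tag matcher
_REPEAT_RE = re.compile(r"([^\w\s])\1{3,}")  # runs of 4+ identical symbol characters
_WS_RE = re.compile(r"\s+")                  # any whitespace run, incl. non-breaking spaces


def clean_field(text: str, max_chars: Optional[int] = None) -> str:
    """Trim a data field to the content worth evaluating (hypothetical helper)."""
    text = _TAG_RE.sub(" ", text)           # drop markup before decoding entities
    text = html.unescape(text)              # e.g. "&nbsp;" -> non-breaking space
    text = _REPEAT_RE.sub(r"\1", text)      # "!!!!!!" -> "!", "=====" -> "="
    text = _WS_RE.sub(" ", text).strip()    # collapse whitespace runs
    if max_chars is not None and len(text) > max_chars:
        text = text[:max_chars].rstrip()    # optional hard cap on field length
    return text


if __name__ == "__main__":
    raw = "<p>Hello&nbsp;&nbsp;world</p>\n\n\n"
    print(repr(clean_field(raw)))
```

Tags are removed before entities are decoded so that escaped text such as `&lt;b&gt;` survives as literal characters rather than being mistaken for markup.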

Optimizing LLM Usage

Choose the Right LLM

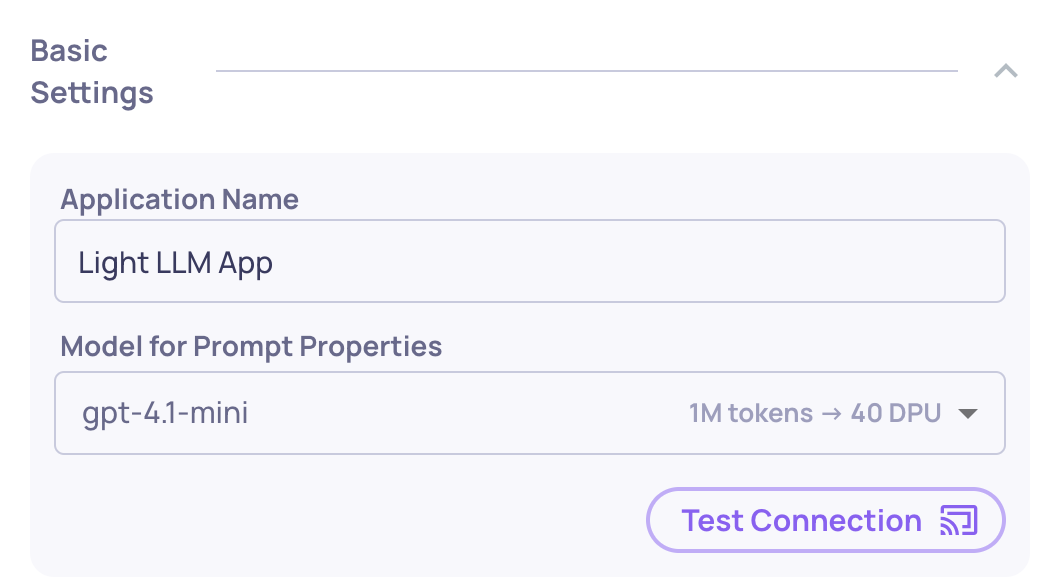

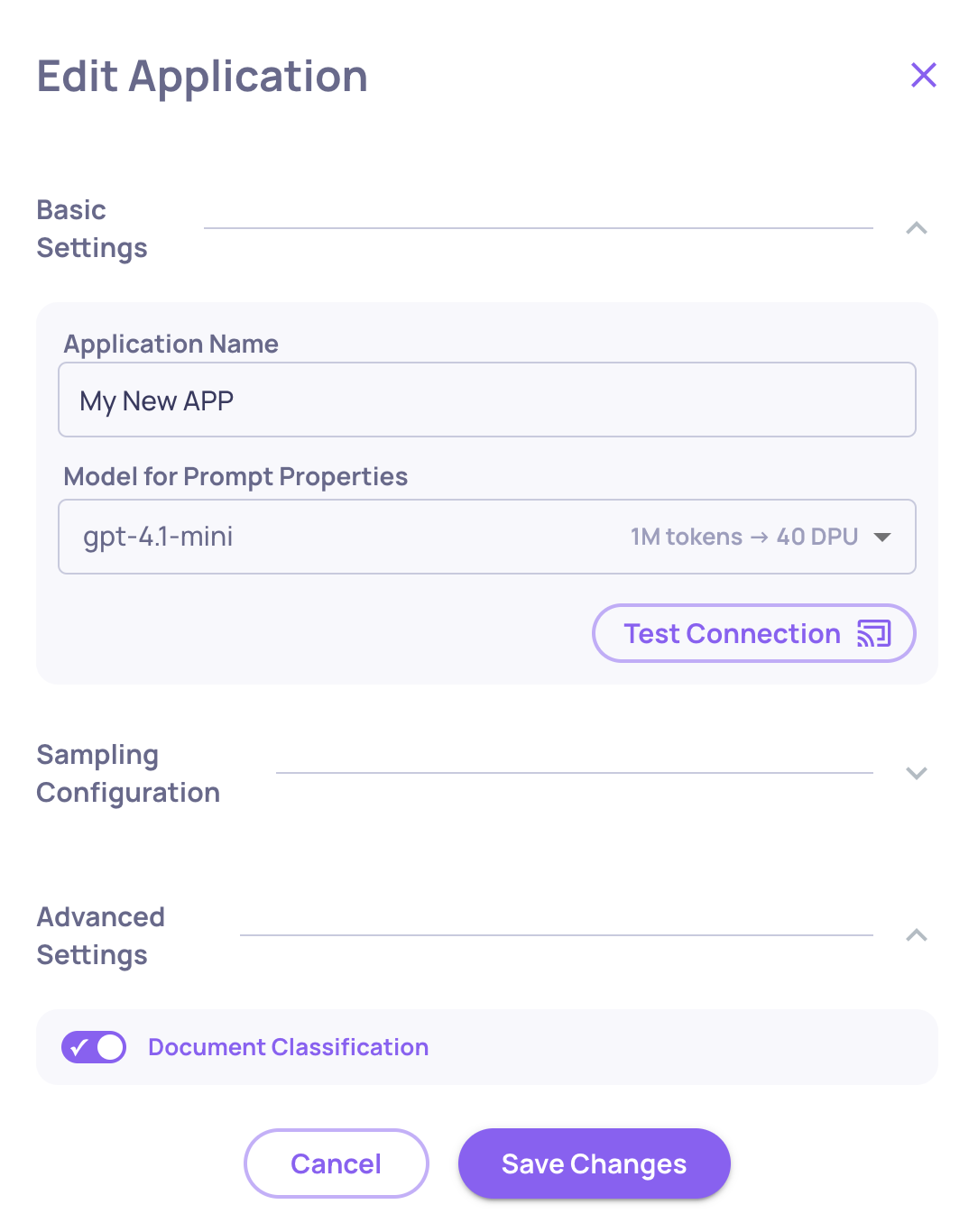

Prompt properties and other LLM features run on a model selected at the application level (in the Edit Application window). Lighter-weight models cost fewer DPUs, so choose the one that gives you the balance you need between accuracy and efficiency.
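One way to make the accuracy/efficiency trade-off concrete is to pick the cheapest model that clears an accuracy bar you set. This sketch assumes you have spot-checked each candidate model on a small set of interactions you annotated yourself and recorded how often the property's result agreed with your judgment; the model names, ratios, and agreement scores below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str                      # hypothetical model label
    dpu_per_million_tokens: float  # placeholder; use your plan's real ratio
    agreement: float               # share of spot-checked cases matching your judgment


def cheapest_adequate_model(options: Iterable[ModelOption],
                            min_agreement: float) -> Optional[ModelOption]:
    """Return the lowest-cost model meeting the accuracy bar, or None."""
    adequate = [o for o in options if o.agreement >= min_agreement]
    return min(adequate, key=lambda o: o.dpu_per_million_tokens, default=None)


if __name__ == "__main__":
    options = [
        ModelOption("model-small", 20.0, 0.82),
        ModelOption("model-medium", 60.0, 0.90),
        ModelOption("model-large", 150.0, 0.93),
    ]
    choice = cheapest_adequate_model(options, min_agreement=0.88)
    print(choice.name if choice else "no model meets the bar")
```

Raising the bar moves the choice toward heavier models; if no candidate clears it, the function returns `None`, which is a signal to revisit the property's prompt rather than simply paying for a larger model.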

Choosing the LLM Running Prompt Properties on the "Edit Application" Window

Keep Only Relevant LLM Properties Active

LLM-based properties—both built-in and prompt properties—consume DPUs each time they run (i.e., when an interaction is uploaded). While these tools can offer deep insights into your pipeline, that doesn’t mean every property should be active by default. Evaluate each property’s contribution to your current analysis: if it no longer adds meaningful evaluation value, consider disabling or deleting it.

Remember, the goal is to focus on properties that genuinely help you improve performance or spot issues. Using unnecessary properties not only adds noise to your analysis—it also consumes DPU resources that could be better spent elsewhere.

Not Pinned ≠ Not Running

Pinning properties to the Overview screen is meant to highlight the most important ones for quick access, but it doesn't affect which properties are actually being calculated. All active properties (pinned or not) continue to run on every uploaded interaction.

To view the full list of properties currently active in your application, go to the Properties screen. There, you can also see which properties rely on LLMs (marked with a special tag). Alternatively, open any specific interaction to see the complete set of properties that were calculated for it.
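After reviewing the full property list, a quick tally shows what the LLM properties you no longer use are costing each month. This is a hypothetical sketch: `PropertyInfo`, the per-interaction DPU estimates, and the property names other than "Clarity of User Input" are placeholders you would fill in from your own review. Since unpinned properties still run, the list should include every active property, not just the pinned ones.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PropertyInfo:
    name: str
    uses_llm: bool                   # LLM-based properties carry a tag on the Properties screen
    est_dpus_per_interaction: float  # your own estimate
    still_useful: bool               # your judgment from the review


def disable_candidates(props: List[PropertyInfo],
                       interactions_per_month: int) -> Tuple[List[str], float]:
    """Return LLM properties flagged as no longer useful and their monthly DPU cost."""
    candidates = [p for p in props if p.uses_llm and not p.still_useful]
    monthly = sum(p.est_dpus_per_interaction for p in candidates) * interactions_per_month
    return [p.name for p in candidates], monthly


if __name__ == "__main__":
    props = [
        PropertyInfo("Clarity of User Input", True, 0.05, False),
        PropertyInfo("Hypothetical Non-LLM Property", False, 0.0, False),
        PropertyInfo("Hypothetical Tone Check", True, 0.02, True),
    ]
    names, dpus = disable_candidates(props, interactions_per_month=10_000)
    print(names, dpus)
```

Non-LLM properties are excluded from the tally because this section concerns the DPU cost of LLM-based properties.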

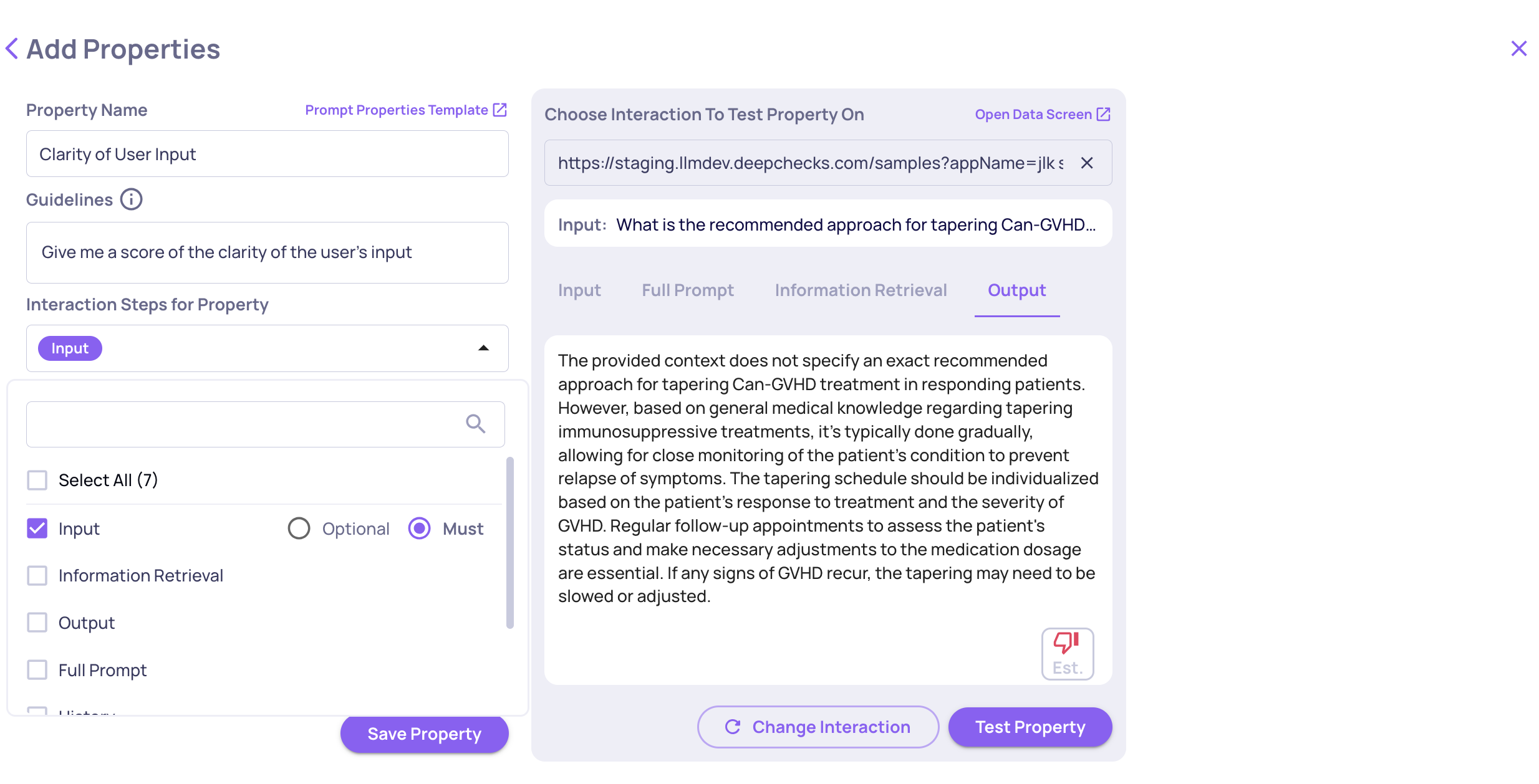

Choose the Right Data Fields for Prompt Property Calculations

When creating a prompt property, you can select which data fields (interaction steps) are included in the prompt sent to the LLM. These fields are submitted every time the property is calculated—so including unnecessary ones can drive up DPU usage without improving accuracy.

To use your DPUs efficiently, include only the fields truly relevant to the property’s logic. For example, if you create a property like “Clarity of User Input” and your prompt guidelines focus on the input itself, there's no need to include a heavy field like history. Excluding unrelated fields both reduces costs and helps the LLM focus on what matters, leading to cleaner, more reliable evaluations.
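The effect of field selection on prompt size can be illustrated by assembling a prompt from a chosen subset of fields and comparing rough token counts. `build_prompt` is a hypothetical illustration, not how Deepchecks actually assembles prompt-property prompts, and the four-characters-per-token estimate is a crude heuristic for English text rather than a real tokenizer.

```python
from typing import Dict, Iterable


def build_prompt(guidelines: str, interaction: Dict[str, str],
                 fields: Iterable[str]) -> str:
    """Join the property guidelines with only the selected data fields (hypothetical)."""
    parts = [guidelines]
    for field in fields:
        parts.append(f"{field}:\n{interaction[field]}")
    return "\n\n".join(parts)


def rough_token_count(text: str) -> int:
    """Crude estimate: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


if __name__ == "__main__":
    guidelines = "Rate how clear the user input is."
    interaction = {
        "input": "How do I reset my password?",
        "output": "Go to Settings, then Security, and choose Reset Password.",
        "history": "Earlier turn. " * 200,  # a long conversation history
    }
    lean = build_prompt(guidelines, interaction, ["input"])
    heavy = build_prompt(guidelines, interaction, ["input", "output", "history"])
    print(rough_token_count(lean), rough_token_count(heavy))
```

Because the selected fields are sent every time the property runs, the difference between the two counts is paid again on every evaluated interaction.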

Choosing Data Fields While Creating a Prompt Property

Optimize Document Classification

Document Classification is a key feature in Deepchecks for evaluating RAG pipelines. It provides valuable insights into which documents contributed to answer generation, tagging them as relevant, irrelevant, distracting, and more. Beyond its standalone value, it also serves as the foundation for all Retrieval Properties. See the Document Classification and Retrieval Properties documentation for more information.

Because classification uses an LLM under the hood, it consumes DPUs. If you’re not actively reviewing document-level classifications or using the related retrieval properties, consider turning it off to avoid unnecessary usage. You can enable or disable Document Classification through the Edit Application flow.

Document Classification Enable/Disable Toggle on the Edit Application Flow
