CrewAI

Deepchecks integrates seamlessly with CrewAI, providing automated tracing, evaluation, and observability for multi-agent workflows and tool-assisted pipelines.

With this integration, you can upload and evaluate your CrewAI workflows: traces from CrewAI runs are captured using OpenTelemetry (OTEL) and OpenInference and sent automatically to Deepchecks for observability, evaluation, and monitoring.

How it works

Data upload and evaluation

Capture traces from your CrewAI runs and send them to Deepchecks for evaluation.

Instrumentation

We use OTEL + OpenInference to automatically instrument CrewAI (and LiteLLM if applicable). This gives you rich traces, including LLM calls, tool invocations, and agent-level spans.
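To make the shape of such a trace concrete, here is a toy sketch of how spans nest inside one another. It is not the OpenTelemetry or OpenInference API: `ToyTracer`, `render`, and the span names are all hypothetical and exist only to illustrate the parent/child structure (crew run, then agent, then LLM calls and tool invocations). The span names actually emitted by the instrumentation may differ.

```python
from contextlib import contextmanager


class ToyTracer:
    """Records nested spans as (depth, name) pairs. Illustration only, not the OTEL API."""

    def __init__(self):
        self.spans = []
        self._depth = 0

    @contextmanager
    def span(self, name):
        # Record the span at the current nesting depth, then go one level deeper
        self.spans.append((self._depth, name))
        self._depth += 1
        try:
            yield
        finally:
            self._depth -= 1


def render(spans):
    """Format the recorded spans as an indented tree."""
    return "\n".join("  " * depth + name for depth, name in spans)


tracer = ToyTracer()
with tracer.span("crew run"):                      # hypothetical span names
    with tracer.span("agent: Research Assistant"):
        with tracer.span("llm call"):
            pass
        with tracer.span("tool: wikipedia_lookup"):
            pass
        with tracer.span("llm call"):
            pass

print(render(tracer.spans))
```

With the real integration you do not write any of this yourself; the instrumentation opens and closes the spans for you as the crew runs.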

Registering with Deepchecks

Traces are uploaded through a single register_dc_exporter call, where you provide the Deepchecks host, your API key, and the application name, version name, and environment type.
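If you would rather not hard-code the API key in source, one option is to collect these values from environment variables. The following is a minimal sketch under stated assumptions: the helper `load_deepchecks_settings` and the `DEEPCHECKS_*` variable names are hypothetical conventions, not part of the Deepchecks client. Only the keyword names (`host`, `api_key`, `app_name`, `version_name`) come from the registration call shown on this page.

```python
import os

# Endpoint used in this page's examples
DEFAULT_HOST = "https://app.llm.deepchecks.com/"


def load_deepchecks_settings(env=None):
    """Build keyword arguments for register_dc_exporter from environment variables.

    The DEEPCHECKS_* variable names are an assumption made for this sketch.
    """
    env = os.environ if env is None else env
    required = {
        "api_key": "DEEPCHECKS_API_KEY",
        "app_name": "DEEPCHECKS_APP_NAME",
        "version_name": "DEEPCHECKS_VERSION_NAME",
    }
    # Fail early with a clear message instead of registering with empty values
    missing = [var for var in required.values() if not env.get(var)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))

    settings = {key: env[var] for key, var in required.items()}
    settings["host"] = env.get("DEEPCHECKS_HOST", DEFAULT_HOST)
    return settings
```

The result can then be unpacked into the call, for example `CrewaiIntegration().register_dc_exporter(env_type=EnvType.EVAL, **load_deepchecks_settings())`.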

Viewing results

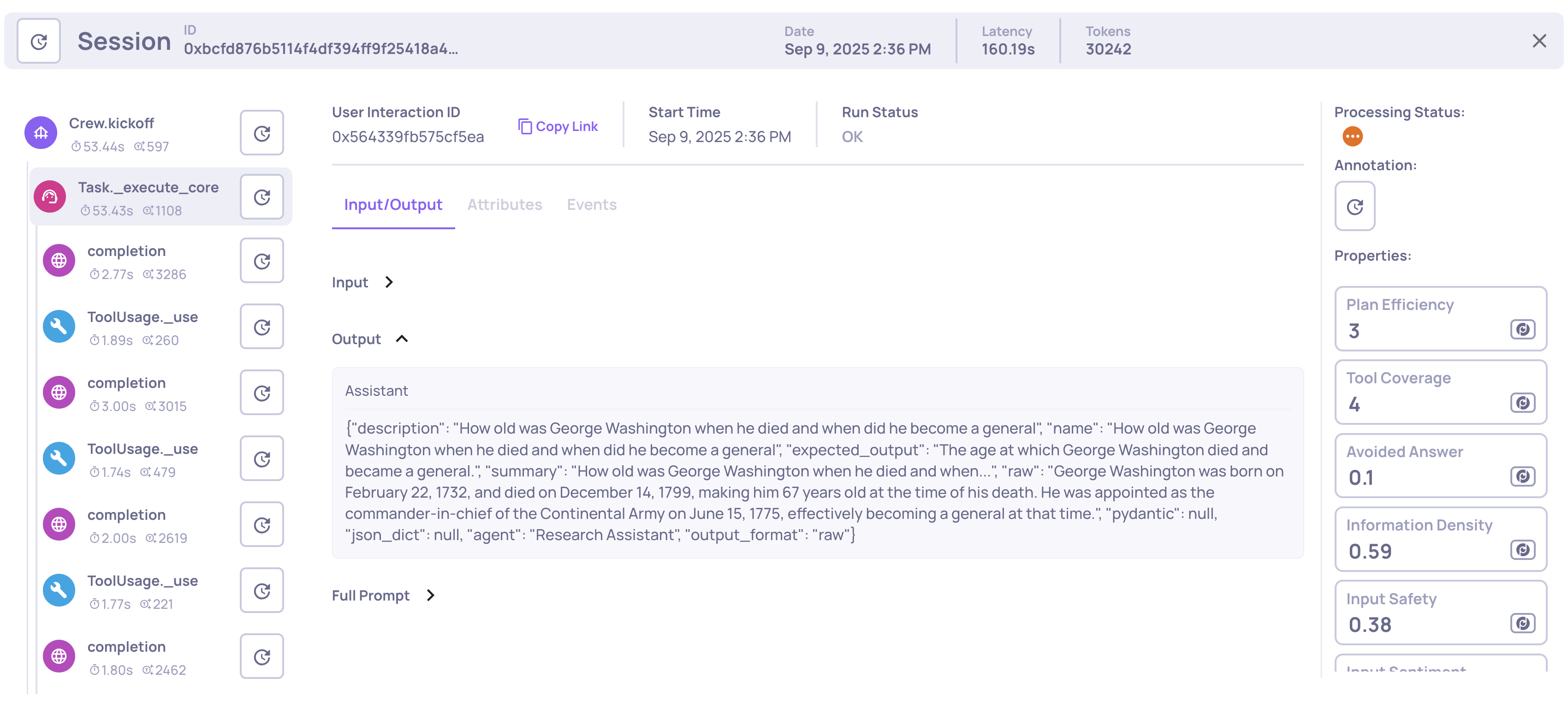

Once uploaded, you’ll see your traces in the Deepchecks UI, complete with spans, properties, and auto-annotations. See here for information about multi-agentic use-case properties.

Package installation

pip install "deepchecks-llm-client[otel]"

Instrumenting CrewAI

from deepchecks_llm_client.data_types import EnvType
from deepchecks_llm_client.otel import CrewaiIntegration

# Register the Deepchecks exporter
CrewaiIntegration().register_dc_exporter(
    host="https://app.llm.deepchecks.com/",  # Deepchecks endpoint
    api_key="Your Deepchecks API Key",  # API key from your Deepchecks workspace
    app_name="Your App Name",  # Application name in Deepchecks
    version_name="Your Version Name",  # Version name for this run
    env_type=EnvType.EVAL,  # Environment: EVAL, PROD, etc.
    log_to_console=True,  # Optional: also log spans to console
)

Example

This is a simple agentic workflow for a research assistant that retrieves accurate information on various topics by searching Wikipedia and verifying facts in academic papers. It runs on the CrewAI pipeline and includes tracing and Deepchecks registration code:

import os

import requests
import wikipedia
from crewai import Agent, Task, Crew
from crewai.tools import tool

from deepchecks_llm_client.data_types import EnvType
from deepchecks_llm_client.otel import CrewaiIntegration

os.environ["OPENAI_API_KEY"] = "Your OpenAI Key"

SEMANTIC_SCHOLAR_API_URL = "https://api.semanticscholar.org/graph/v1/paper/search"

# Register the Deepchecks exporter
CrewaiIntegration().register_dc_exporter(
    host="https://app.llm.deepchecks.com/",  # Deepchecks endpoint
    api_key="Your Deepchecks API Key",  # API key from your Deepchecks workspace
    app_name="Your App Name",  # Application name in Deepchecks
    version_name="Your Version Name",  # Version name for this run
    env_type=EnvType.EVAL,  # Environment: EVAL, PROD, etc.
    log_to_console=True,  # Optional: also log spans to console
)


@tool("academic_papers_lookup")
def search_academic_papers(query: str) -> str:
    """Search for academic papers related to the given query and return the titles and abstracts from Semantic Scholar."""
    params = {
        "query": query,
        "fields": "title,abstract",
        "limit": 3,  # Get top 3 papers
    }
    try:
        # A timeout keeps the tool from hanging if the API is slow to respond
        response = requests.get(SEMANTIC_SCHOLAR_API_URL, params=params, timeout=10)
        response.raise_for_status()
        data = response.json()

        if "data" not in data or not data["data"]:
            return "No relevant academic papers found."

        results = []
        for paper in data["data"]:
            # Fields can be present but null, so fall back with `or` rather than a .get() default
            title = paper.get("title") or "No title available"
            abstract = paper.get("abstract") or "No abstract available"
            results.append(f"Title: {title}\nAbstract: {abstract}")
        return "\n\n".join(results)
    except requests.exceptions.RequestException as e:
        return f"Error fetching papers: {e}"


@tool("wikipedia_lookup")
def wikipedia_lookup(query: str) -> str:
    """Search Wikipedia for the given query and return the summary."""
    try:
        summary = wikipedia.summary(query, sentences=2)
        return summary
    except wikipedia.exceptions.DisambiguationError as e:
        return f"Disambiguation error: {e.options}"
    except wikipedia.exceptions.PageError:
        return "Page not found."
    except Exception as e:
        return f"An error occurred: {e}"


# Define the agent
agent = Agent(
    role='Research Assistant',
    goal='Provide accurate information on various topics by searching Wikipedia and verifying in academic papers.',
    backstory='Proficient in retrieving and summarizing information from Wikipedia and Academic resources.',
    tools=[wikipedia_lookup, search_academic_papers],
    llm='gpt-4.1-mini',
    verbose=True,  # Enable detailed logging
)

# Define a task for the agent
task1 = Task(
    description='How old was George Washington when he died and when did he become a general',
    expected_output='The age at which George Washington died and became a general.',
    agent=agent,
)

# Create a crew with the agent and the task
crew = Crew(
    agents=[agent],
    tasks=[task1],
    verbose=True,  # Enable detailed logging for the crew
)

crew.kickoff()

The following illustrates how a single execution of this example appears within the Deepchecks platform, showcasing the full workflow from input to output, the captured spans, and the registered evaluation metrics for detailed analysis:
