Get the Most Out of Your DPUs in SageMaker

A guide to using your DPUs efficiently for data upload on SageMaker

In this guide, we’ll show you how to maximize the value of your DPUs when using Deepchecks on AWS SageMaker.

Unlike our SaaS offering, when using Deepchecks on SageMaker you run models with your own LLM profiles and API keys. This guide therefore focuses on data upload and base token usage, which directly impact your DPU consumption. It does not cover LLM usage or model-specific optimizations.

By following these recommendations, you’ll ensure that your DPUs are used efficiently, that your data is structured correctly, and that your evaluation workflows run smoothly.

Understanding DPUs & Monitoring Usage

Base Usage

Base usage refers to the amount of data you upload to the platform. Uploading 1 million tokens for evaluation costs 250 DPUs, while sending 1 million tokens for storage only (unevaluated) costs 40 DPUs. The token count includes all data fields and attributes submitted with each interaction.
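To make the arithmetic concrete, here is a minimal sketch of a cost estimate based on the two rates quoted above. The function name `estimate_base_dpus` is hypothetical and not part of any Deepchecks SDK, and the sketch assumes that DPUs scale linearly with token volume, which this guide does not state explicitly.

```python
# DPU rates per 1 million tokens, taken from the figures quoted above.
DPU_PER_MILLION_EVALUATED = 250
DPU_PER_MILLION_STORED = 40


def estimate_base_dpus(evaluated_tokens: int, stored_only_tokens: int = 0) -> float:
    """Estimate base-usage DPUs (hypothetical helper, assumes linear pricing)."""
    if evaluated_tokens < 0 or stored_only_tokens < 0:
        raise ValueError("token counts must be non-negative")
    evaluated_cost = evaluated_tokens / 1_000_000 * DPU_PER_MILLION_EVALUATED
    stored_cost = stored_only_tokens / 1_000_000 * DPU_PER_MILLION_STORED
    return evaluated_cost + stored_cost
```

Under these assumptions, 2 million evaluated tokens plus 500,000 storage-only tokens would come to 520 DPUs. Treat the result as a planning estimate and rely on the Usage tab for actual consumption.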

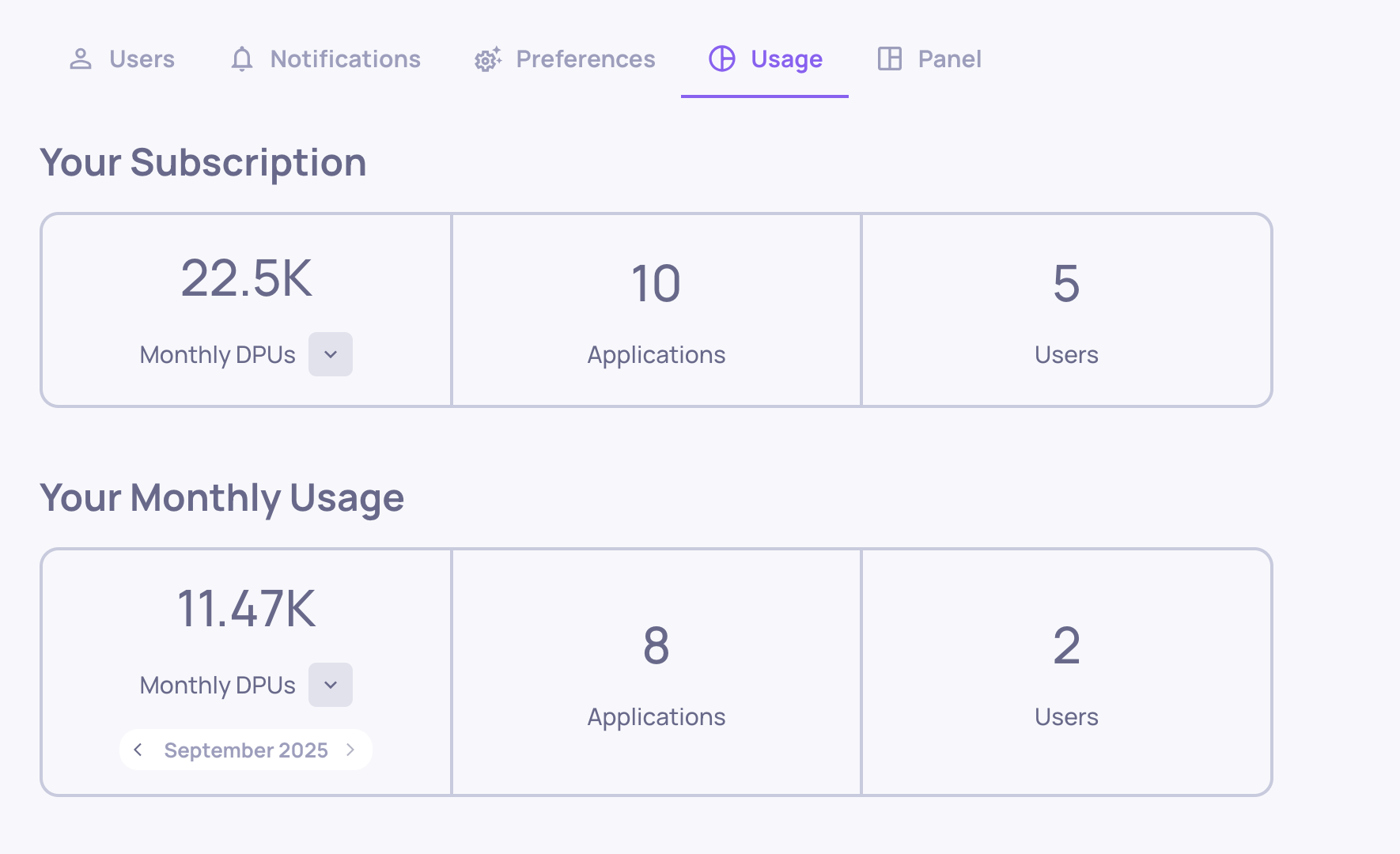

Usage and Plan Display

All of your usage, broken down by type and matched to your current plan, is visible in the Usage tab under Workspace Settings.

Example of the Usage tab

Optimizing Base Usage

To get the most out of your DPUs in SageMaker, it’s important to manage the amount and structure of the data you upload. The following tips focus on optimizing base token usage to ensure efficient, cost-effective evaluations.

Sample Your Production Data

Sampling is essential for working efficiently with large-scale production data. When your workflows generate high volumes of interactions, evaluating every session may be unnecessary (and costly).

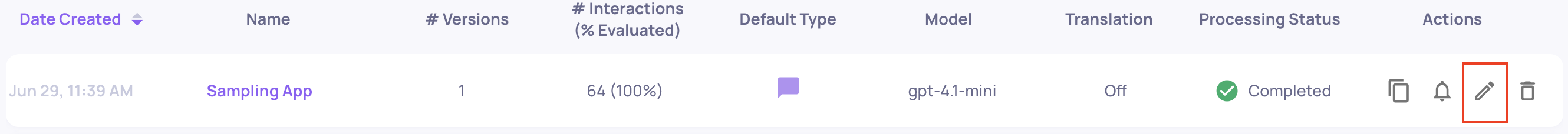

"Edit Application" Button on the "Manage Applications" Screen

Set an appropriate sampling ratio when uploading production data to Deepchecks via SageMaker. For example, a sampling ratio of 0.2 (20% of sessions evaluated) is often enough to produce reliable aggregate results while keeping costs reasonable. Deepchecks selects sessions at random, so the sample tends to reflect your full data distribution.

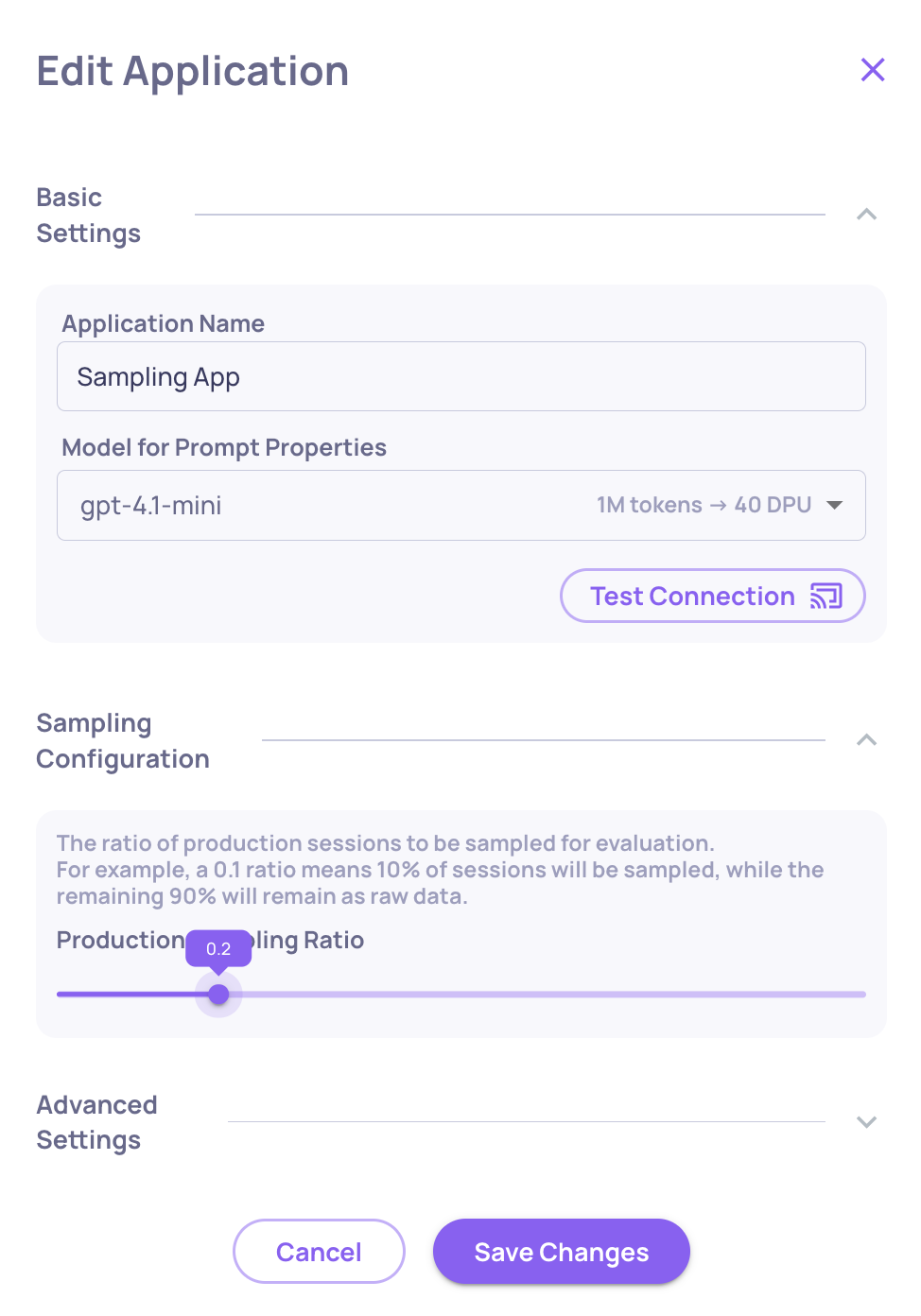

Setting the Sampling Ratio at 0.2; "Save Changes" Must Be Clicked For the Ratio to Be Applied
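The effect of a sampling ratio on DPU consumption can be sketched as follows. This is an illustrative estimate, not a Deepchecks API: the function `estimate_sampled_dpus` is hypothetical, pricing is assumed to be linear, and the `store_unsampled` flag reflects an assumption that sessions not selected for evaluation are still uploaded at the storage-only rate. Check how your application treats unsampled sessions before relying on either figure.

```python
# DPU rates per 1 million tokens, as quoted in the Base Usage section.
DPU_PER_MILLION_EVALUATED = 250
DPU_PER_MILLION_STORED = 40


def estimate_sampled_dpus(
    total_tokens: int,
    sampling_ratio: float,
    store_unsampled: bool = True,  # assumption: unsampled data is billed as storage-only
) -> float:
    """Estimate DPUs when only a fraction of production tokens is evaluated."""
    if not 0.0 <= sampling_ratio <= 1.0:
        raise ValueError("sampling_ratio must be between 0 and 1")
    evaluated_tokens = total_tokens * sampling_ratio
    remaining_tokens = total_tokens - evaluated_tokens
    cost = evaluated_tokens / 1_000_000 * DPU_PER_MILLION_EVALUATED
    if store_unsampled:
        cost += remaining_tokens / 1_000_000 * DPU_PER_MILLION_STORED
    return cost
```

For 10 million production tokens at a ratio of 0.2, this estimate gives 820 DPUs if the remainder is stored and 500 DPUs if it is not, compared with 2,500 DPUs for evaluating everything.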

Optimize the Size of Your Evaluation Dataset

More data doesn’t always yield better results. Focus on creating a dataset that is representative and statistically meaningful. A dataset that is too small may not reflect real-world usage; one that is too large incurs unnecessary DPU costs without adding insight.
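One rough way to pick a starting size is the standard sample-size formula for estimating a proportion (for example, the share of interactions that pass a given check), with a finite population correction. This is a general statistics sketch rather than a Deepchecks recommendation; the function name and the default confidence level and margin of error are assumptions chosen for illustration.

```python
import math
from typing import Optional


def required_sample_size(
    margin_of_error: float = 0.05,   # assumed tolerance of +/- 5 percentage points
    z_score: float = 1.96,           # corresponds to roughly 95% confidence
    expected_rate: float = 0.5,      # 0.5 is the most conservative choice
    population: Optional[int] = None,
) -> int:
    """Number of interactions needed to estimate a proportion within the margin."""
    n = (z_score ** 2) * expected_rate * (1 - expected_rate) / margin_of_error ** 2
    if population is not None:
        # Finite population correction: smaller populations need fewer samples.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)
```

With the defaults, about 385 interactions are enough regardless of how large the production volume is, which illustrates why uploading far more data than that for a single aggregate metric adds cost faster than it adds insight. Segment-level analysis needs a sample of this size per segment.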

Pre-Process and Parse Your Data

Ensure your uploaded data is clean, correctly structured, and parsed according to the Deepchecks schema. Proper preprocessing avoids wasted DPUs on malformed or incomplete interactions and ensures your evaluations reflect accurate usage patterns.
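A minimal pre-upload cleaning pass might look like the sketch below. The field names `input` and `output` are hypothetical placeholders; substitute the required fields from the Deepchecks schema you are actually using. The function is an illustration, not part of the Deepchecks SDK.

```python
from typing import Iterable

# Hypothetical required fields; replace with the fields your schema requires.
REQUIRED_FIELDS = ("input", "output")


def clean_interactions(records: Iterable[object]) -> list[dict]:
    """Drop malformed, incomplete, and duplicate interactions before upload."""
    cleaned = []
    seen = set()
    for record in records:
        if not isinstance(record, dict):
            continue  # skip anything that is not a structured interaction
        # Trim surrounding whitespace so it is not counted as uploaded tokens.
        normalized = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Require every mandatory field to be a non-empty string.
        if any(
            not (isinstance(normalized.get(field), str) and normalized[field])
            for field in REQUIRED_FIELDS
        ):
            continue
        # Skip exact duplicates of an interaction that was already kept.
        fingerprint = tuple(normalized[field] for field in REQUIRED_FIELDS)
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(normalized)
    return cleaned
```

Running a filter like this before upload keeps empty or duplicated interactions from consuming DPUs. Whether duplicates should be dropped depends on your use case, since repeated production requests can be meaningful.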

If you’d like more personalized guidance, the Deepchecks team is always here to help. We can work with you to optimize DPU usage for your specific SageMaker workflows and use cases, ensuring you get the most value from your data.
