Experiment Management

How to track and organize experiment metadata for greater insight into, and control over, your LLM evaluation pipeline.

Deepchecks’ Experiment Management provides a structured way to track and analyze different iterations of your LLM-based application's pipeline. It allows teams to store detailed configuration data about each version and the different LLM calls within it, making it easier to understand changes over time, audit performance, and compare experiments.

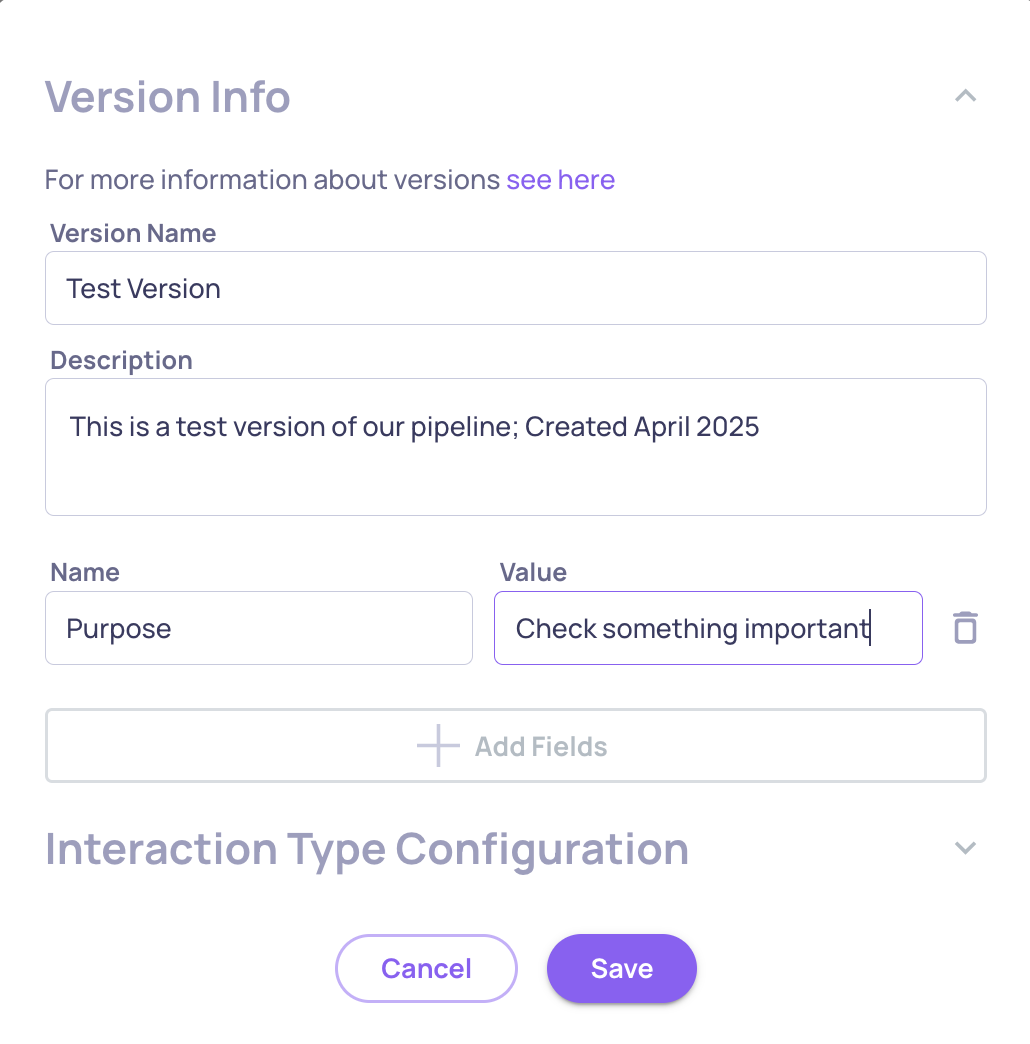

Version-Level Configuration Data

Each version represents a specific experiment or iteration of your application's pipeline. At this level, you can assign:

- Name: A unique identifier for the version.

- Description: A short summary explaining what this version of the pipeline represents.

- Metadata: Custom key-value pairs to store experiment-specific context, such as dataset origin, date, purpose, or deployment target.

This structured information makes it easier to understand the context behind evaluation results and collaborate across teams.
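To make the three version-level fields concrete, here is a minimal sketch that models them as a Python dataclass with a basic validity check. `VersionConfig` and `validate` are hypothetical names for illustration and are not part of the Deepchecks SDK. Treating metadata as string-to-string pairs is an assumption based on the string values in the SDK example on this page, and the sample values are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class VersionConfig:
    """Hypothetical local model of version-level configuration data (not a Deepchecks class)."""

    name: str  # unique identifier for the version
    description: str = ""  # short summary of what this version represents
    metadata: dict[str, str] = field(default_factory=dict)  # custom key-value context

    def validate(self, existing_names: set[str]) -> None:
        """Raise ValueError if the name is empty or taken, or metadata is not str -> str."""
        if not self.name:
            raise ValueError("version name must not be empty")
        if self.name in existing_names:
            raise ValueError(f"version name {self.name!r} is already in use")
        for key, value in self.metadata.items():
            # Assumption: metadata keys and values are stored as strings.
            if not isinstance(key, str) or not isinstance(value, str):
                raise ValueError(f"metadata entry {key!r} must map a string to a string")


v2 = VersionConfig(
    name="v2",
    description="Switched retrieval to hybrid search",
    metadata={"dataset_origin": "support-tickets-2024", "purpose": "retrieval A/B test"},
)
v2.validate(existing_names={"v1"})  # passes: unique name, string-only metadata
```

Keeping the check local lets you catch duplicate version names or non-string metadata before anything is recorded.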

Version Level Configuration Data

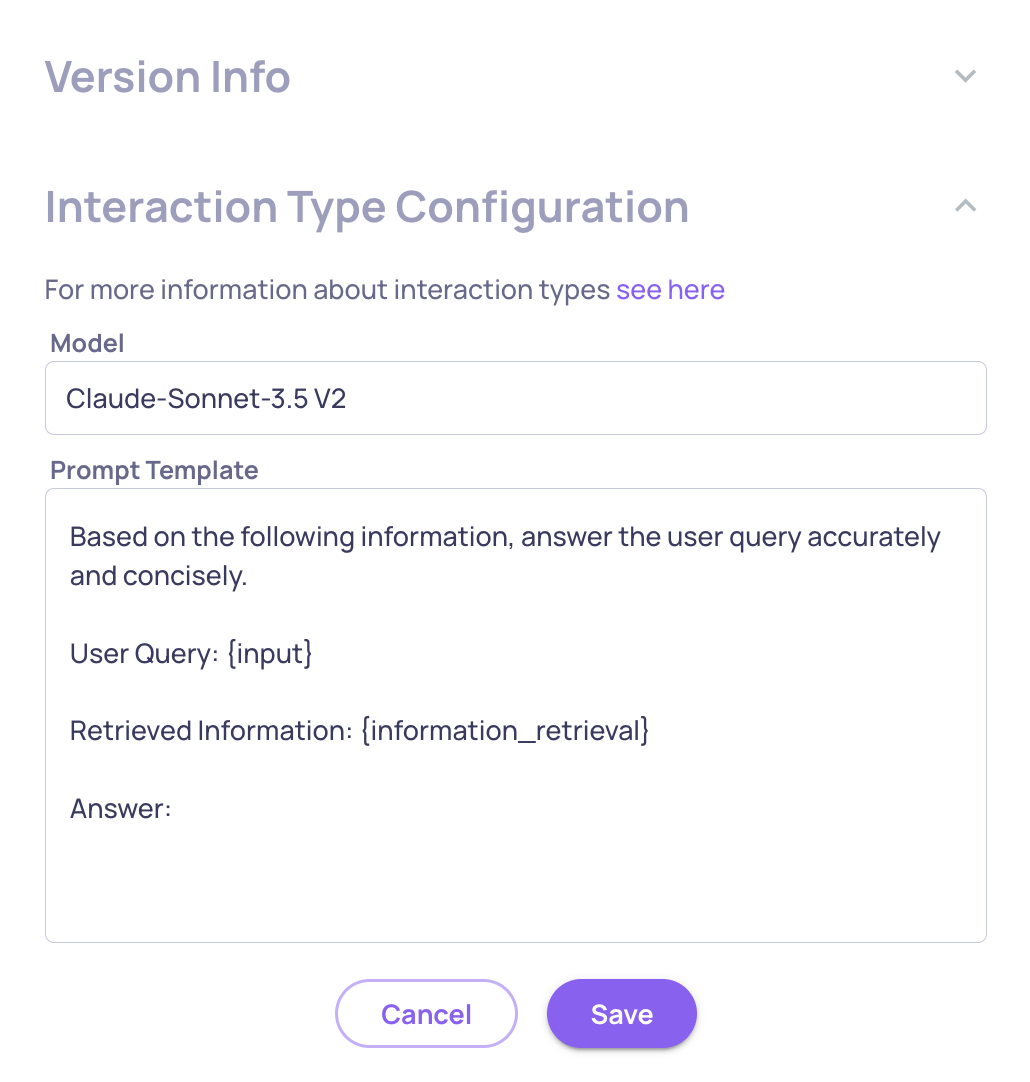

Interaction-Type Level Configuration Data

Within each version, interaction types (such as summarization or Q&A) represent the different kinds of LLM calls in your pipeline, and each can carry its own configuration context. For each interaction type, you can track:

- Model Used: The name of the model used for the interaction type's LLM calls (e.g., "Claude-Sonnet-3.5").

- Prompt Template: The prompt template applied in these LLM calls (including variables like {input}, {information_retrieval}, etc.).

- Metadata: Custom key-value pairs to capture parameters like temperature, few-shot source, or prompt logic variations.

This per-interaction-type configuration makes it possible to capture fine-grained experimentation logic within a single version, enabling deeper insights into what affects performance.
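Because prompt templates carry named placeholders such as `{input}`, it can help to check which variables a template expects before recording it. The sketch below is a hypothetical `InteractionTypeConfig` dataclass (not a Deepchecks class) that uses the standard library's `string.Formatter.parse` to list placeholder names. It assumes templates use Python `str.format`-style braces, which matches the `{input}` notation here but may not match every templating convention. The sample prompt and metadata values are illustrative.

```python
from dataclasses import dataclass, field
from string import Formatter


@dataclass
class InteractionTypeConfig:
    """Hypothetical local model of interaction-type configuration (not a Deepchecks class)."""

    model: str  # model used for this interaction type's LLM calls
    prompt: str  # prompt template with {placeholders}
    metadata: dict[str, str] = field(default_factory=dict)  # e.g. temperature, few-shot source

    def template_variables(self) -> list[str]:
        """Return placeholder names in the prompt template, in order of first appearance."""
        names: list[str] = []
        for _literal, field_name, _spec, _conversion in Formatter().parse(self.prompt):
            # field_name is None for pure literal text and "" for positional "{}".
            if field_name and field_name not in names:
                names.append(field_name)
        return names

    def render(self, **values: str) -> str:
        """Fill the template; raises KeyError if a placeholder has no value."""
        return self.prompt.format(**values)


qa = InteractionTypeConfig(
    model="gpt-4o-mini",
    prompt="Answer using only this context:\n{information_retrieval}\n\nQuestion: {input}",
    metadata={"temperature": "0.2", "few_shot_source": "none"},
)
print(qa.template_variables())  # ['information_retrieval', 'input']
```

Listing the variables up front makes it easy to spot a template that silently dropped or renamed a placeholder between versions.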

Version x Interaction Type Level Configuration Data

Create/Edit the Experimentation Data

You can create and edit experimentation data at both levels either in the UI or through the SDK.

UI: While viewing a version, click "Edit Version" to open the interface for creating or updating experiment metadata, both at the version level and for each interaction type within it.

SDK: The following example creates experimentation data for a particular version and interaction type:

```python
# Assumes `sdk_client` is an already-initialized Deepchecks LLM SDK client.
sdk_client.create_interaction_type_version_data(
    app_name="MyApp",
    version_name="v1",
    interaction_type="SUMMARIZATION",
    model="gpt-4o-mini",
    prompt="Please provide a comprehensive summary of the following text: {input}",
    metadata_params={"temperature": "0.5", "version": "0.12.4"},
)
```
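When a version contains several interaction types, the single call above can be repeated once per type. The sketch below wraps `create_interaction_type_version_data` (with the same keyword arguments as the example) in a hypothetical helper, `register_interaction_types`, and includes a hypothetical `DryRunClient` that records calls instead of sending them, so the configuration can be checked locally. Converting metadata values to strings is an assumption based on the example's string values, and the `"Q&A"` interaction type identifier is assumed for illustration.

```python
from collections.abc import Mapping
from typing import Any


def register_interaction_types(
    sdk_client: Any,
    app_name: str,
    version_name: str,
    configs: Mapping[str, Mapping[str, Any]],
) -> None:
    """Call create_interaction_type_version_data once per interaction type.

    configs maps an interaction type name to a dict with "model", "prompt",
    and optional "metadata" keys (a hypothetical layout for this sketch).
    """
    for interaction_type, cfg in configs.items():
        # Assumption: metadata_params expects string values, as in the SDK example.
        metadata = {key: str(value) for key, value in cfg.get("metadata", {}).items()}
        sdk_client.create_interaction_type_version_data(
            app_name=app_name,
            version_name=version_name,
            interaction_type=interaction_type,
            model=cfg["model"],
            prompt=cfg["prompt"],
            metadata_params=metadata,
        )


class DryRunClient:
    """Hypothetical stand-in that records calls instead of sending them."""

    def __init__(self) -> None:
        self.calls: list[dict[str, Any]] = []

    def create_interaction_type_version_data(self, **kwargs: Any) -> None:
        self.calls.append(kwargs)


dry_run = DryRunClient()
register_interaction_types(
    dry_run,
    app_name="MyApp",
    version_name="v1",
    configs={
        "SUMMARIZATION": {
            "model": "gpt-4o-mini",
            "prompt": "Please provide a comprehensive summary of the following text: {input}",
            "metadata": {"temperature": 0.5, "version": "0.12.4"},
        },
        "Q&A": {
            "model": "gpt-4o-mini",
            "prompt": "Answer using this context:\n{information_retrieval}\n\n{input}",
            "metadata": {"temperature": 0.2},
        },
    },
)
print(len(dry_run.calls))  # 2
```

To send the data for real, pass your initialized `sdk_client` in place of `dry_run`.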

This feature gives teams the structure and clarity needed to manage experimentation at scale, and serves as the foundation for future tools like automated comparisons and experiment recommendations.
