Agent Evaluation

How Deepchecks supports agent evaluation by measuring an agent’s reasoning, decision-making, and task execution across multi-step workflows

Evaluating Agent Performance

Evaluating an agent’s performance typically involves analyzing the series of steps it takes to achieve a user’s goal. At each step, the agent might call an external tool, consult a large language model (LLM) expert, or interact with other agents. Because any of these steps can succeed or fail independently, both the individual steps and the final result need to be evaluated.

Deepchecks provides both modular and end-to-end evaluation for agents. It assesses every action the agent takes as well as the overall session outcome. To do this, each agent session is divided into two main types of interactions, with each type evaluated using its own set of tailored properties:

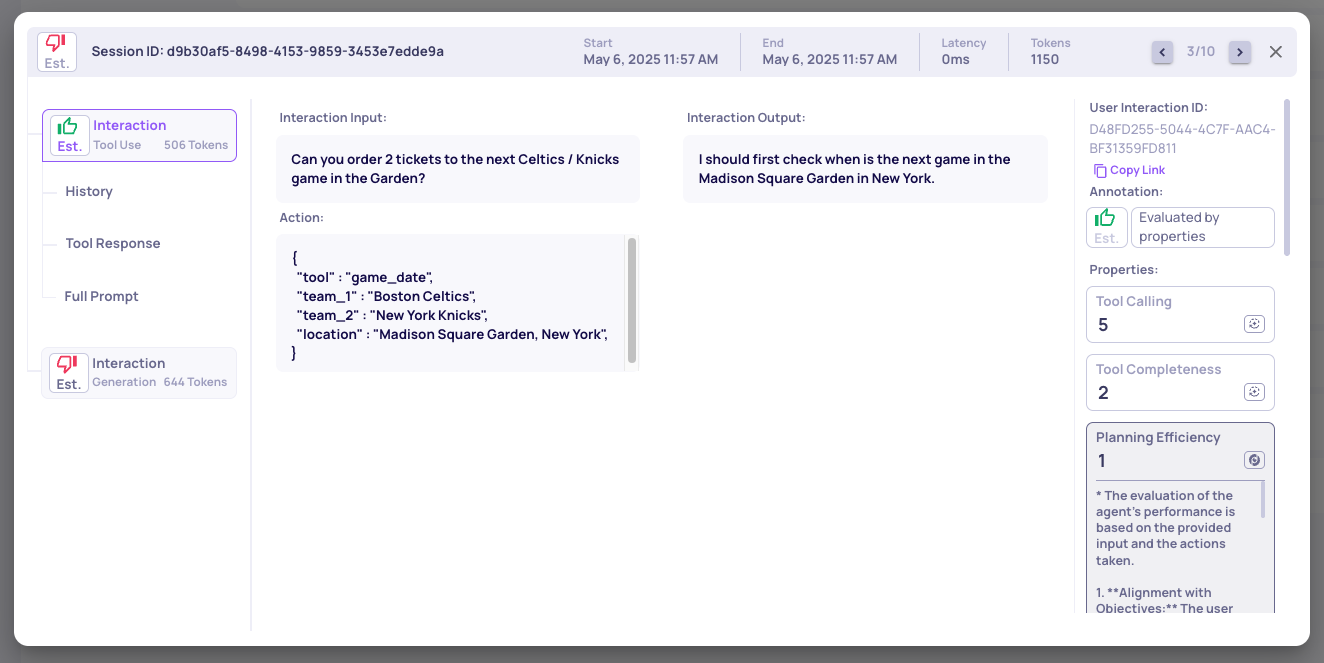

1. Tool Use 🦾

These interactions occur when the agent takes an action other than generation. For Deepchecks to provide maximum value, each Tool Use interaction should include:

- Input (str): The user’s input.

- Full Prompt (str): The list of available tools and any other relevant instructions provided to the agent.

- History (str): A list of past actions taken by the agent, including the thoughts, actions and tool responses.

- Output (str): The agent's thought process or planning.

- Action (str): The action itself, specifically the tool invocation.

- Tool Response (str): Information returned from the tool.

- (Optional) Additional Steps: If your interaction has additional intermediate steps, log them under the steps mechanism. See more details here.
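The fields above can be sketched as a plain Python record. This is only an illustration: the class name `ToolUseInteraction`, the helper `missing_fields`, and the snake_case field names are assumptions made for this example and are not the Deepchecks SDK schema.

```python
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class ToolUseInteraction:
    """Hypothetical container mirroring the Tool Use fields listed above."""
    input: str           # the user's input
    full_prompt: str     # available tools and other instructions
    history: str         # past thoughts, actions, and tool responses
    output: str          # the agent's thought process or planning
    action: str          # the tool invocation itself
    tool_response: str   # information returned from the tool
    steps: List[dict] = field(default_factory=list)  # optional intermediate steps


def missing_fields(interaction: ToolUseInteraction) -> List[str]:
    """Return the names of string fields that are empty or whitespace-only."""
    return [
        name
        for name, value in asdict(interaction).items()
        if name != "steps" and not str(value).strip()
    ]


example = ToolUseInteraction(
    input="What is the weather in Paris tomorrow?",
    full_prompt="You can call: get_forecast(city, date). Answer concisely.",
    history="",  # first step of the session, so nothing has happened yet
    output="I need tomorrow's forecast for Paris, so I should call get_forecast.",
    action='get_forecast(city="Paris", date="tomorrow")',
    tool_response='{"high_c": 21, "low_c": 13, "conditions": "cloudy"}',
)

print(missing_fields(example))  # ['history']
```

A check like this can be run before upload to spot interactions that lack the context the Tool Use properties rely on. An empty history is expected for the first step of a session.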

Tool Use Key Properties:

- Tool Calling - Assesses whether the tool selected by the agent is appropriate given its planning and the user request, and whether the tool call is correctly formatted.

- Planning Efficiency - Assesses whether the agent's planning is efficient, effective, and goal-oriented.

- Tool Completeness - Assesses how relevant the information provided by the tool is for addressing the user request.

2. Generation 🎨

These interactions occur when the agent generates a response for the user. Ideally, each Generation interaction should include:

- Input (str): The user’s input.

- Full Prompt (str): Relevant instructions that guided the response generation.

- Information Retrieval (str): The actions previously taken by the agent, along with the corresponding responses returned by the tools.

- Output (str): The response generated for the user.

- (Optional) Additional Steps: If your interaction has additional intermediate steps, log them under the steps mechanism. See more details here.
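As a rough sketch, a Generation interaction can be assembled by flattening the earlier tool calls into the Information Retrieval string. The names `GenerationInteraction` and `format_information_retrieval`, and the numbered text layout, are hypothetical choices for this example rather than a format required by Deepchecks.

```python
from dataclasses import dataclass
from typing import List, Tuple


def format_information_retrieval(calls: List[Tuple[str, str]]) -> str:
    """Flatten (action, tool response) pairs into one numbered text block."""
    lines = []
    for i, (action, response) in enumerate(calls, start=1):
        lines.append(f"[{i}] action: {action}")
        lines.append(f"[{i}] tool response: {response}")
    return "\n".join(lines)


@dataclass
class GenerationInteraction:
    """Hypothetical container mirroring the Generation fields listed above."""
    input: str                  # the user's input
    full_prompt: str            # instructions that guided the response
    information_retrieval: str  # earlier actions and their tool responses
    output: str                 # the response generated for the user


calls = [
    ('get_forecast(city="Paris", date="tomorrow")',
     '{"high_c": 21, "conditions": "cloudy"}'),
]

generation = GenerationInteraction(
    input="What is the weather in Paris tomorrow?",
    full_prompt="Answer in one sentence using only the tool results.",
    information_retrieval=format_information_retrieval(calls),
    output="Tomorrow in Paris will be cloudy with a high of 21°C.",
)

print(generation.information_retrieval)
```

Keeping each action next to its response makes it easier for an evaluator to check whether the final output is supported by what the tools returned.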

Generation interactions are intentionally designed to be flexible. Since agents can generate anything from technical summaries or code to simple confirmations (e.g., “200 OK”) or even long-form content (e.g., book chapters), this interaction type provides basic metrics such as a general Instruction Following metric. You can also supplement these with custom, use-case-specific metrics like Coverage (for summarization) or Completeness (for Q&A).

Example of an Agent Use-Case Session with Tool-Use Unique Properties

How to Organize Sessions

When uploading data to Deepchecks, group all interactions from one agent run under a single session with a unique session_id. Within each session:

- Interactions where the agent calls a tool should use the Tool Use interaction type.

- All other interactions should use the Generation interaction type.

This structure ensures clear, comprehensive evaluation of both the process and the outcomes of your agent’s behavior.
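The grouping rule can be illustrated with a small, self-contained sketch. It assumes each logged step is a dictionary and that a non-empty `action` key marks a tool call. The function names, dictionary keys, and type labels are illustrative assumptions; the actual upload call depends on the Deepchecks SDK and is not shown.

```python
import uuid
from typing import Dict, List


def interaction_type(step: Dict[str, str]) -> str:
    """Steps that invoke a tool are Tool Use; everything else is Generation."""
    return "Tool Use" if step.get("action") else "Generation"


def build_session(steps: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Tag every step of one agent run with a shared session_id and a type."""
    session_id = str(uuid.uuid4())  # one unique ID for the whole run
    return [
        {**step, "session_id": session_id, "interaction_type": interaction_type(step)}
        for step in steps
    ]


raw_steps = [
    {"input": "Book a table for two tonight", "action": "search_restaurants(party_size=2)"},
    {"input": "Book a table for two tonight", "action": 'reserve(restaurant_id="r42")'},
    {"input": "Book a table for two tonight", "output": "Your table is booked for 8 pm."},
]

session = build_session(raw_steps)
for row in session:
    print(row["session_id"], row["interaction_type"])
```

Here the two tool calls and the final reply share one session_id, so the per-step and session-level evaluations refer to the same run.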
