Langchain

The SDK provides a Langchain Callback Handler that enables you to automatically trace your Langchain applications.

The handler ships with the Deepchecks Python SDK. It traces your Langchain application and logs each chain call to Deepchecks without any manual logging code.

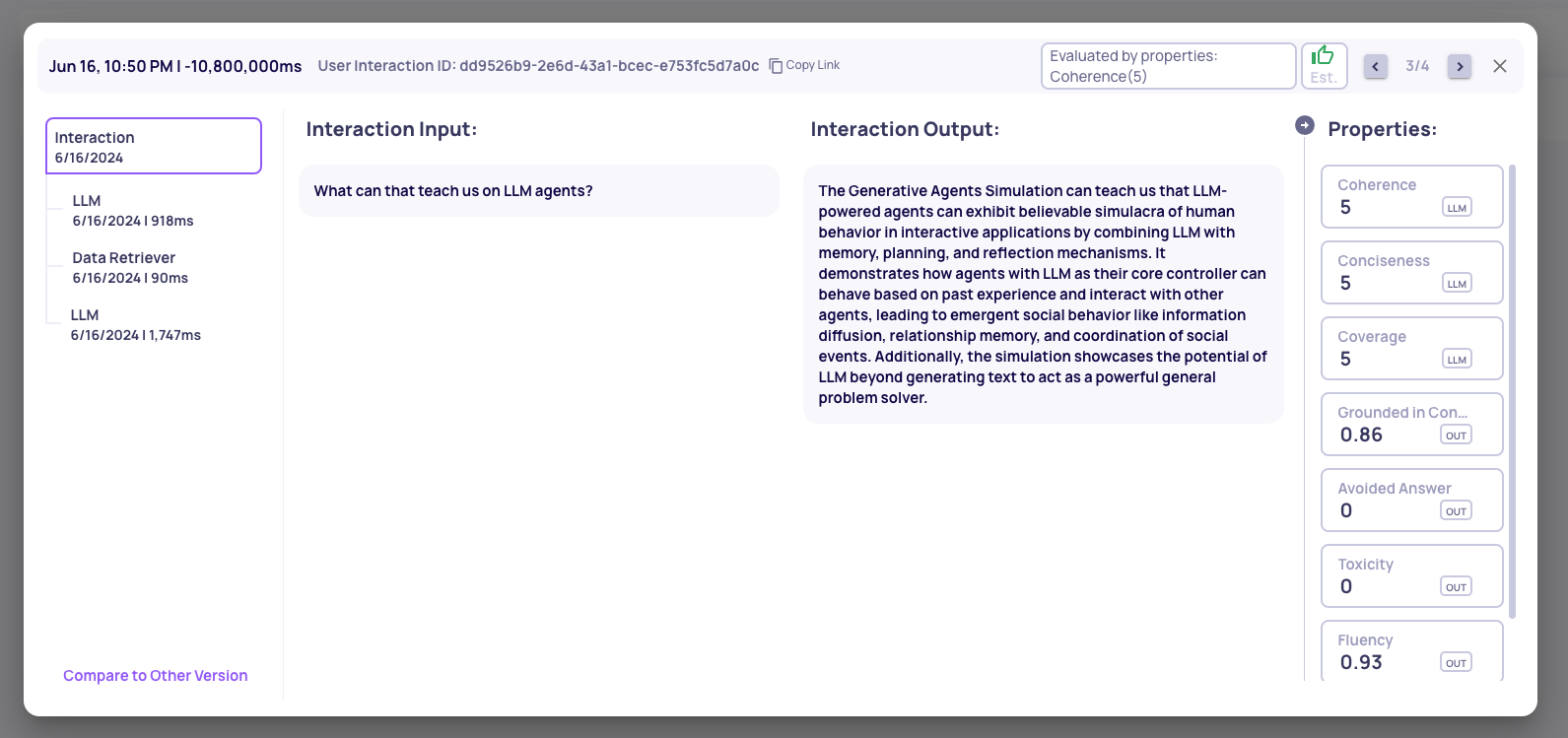

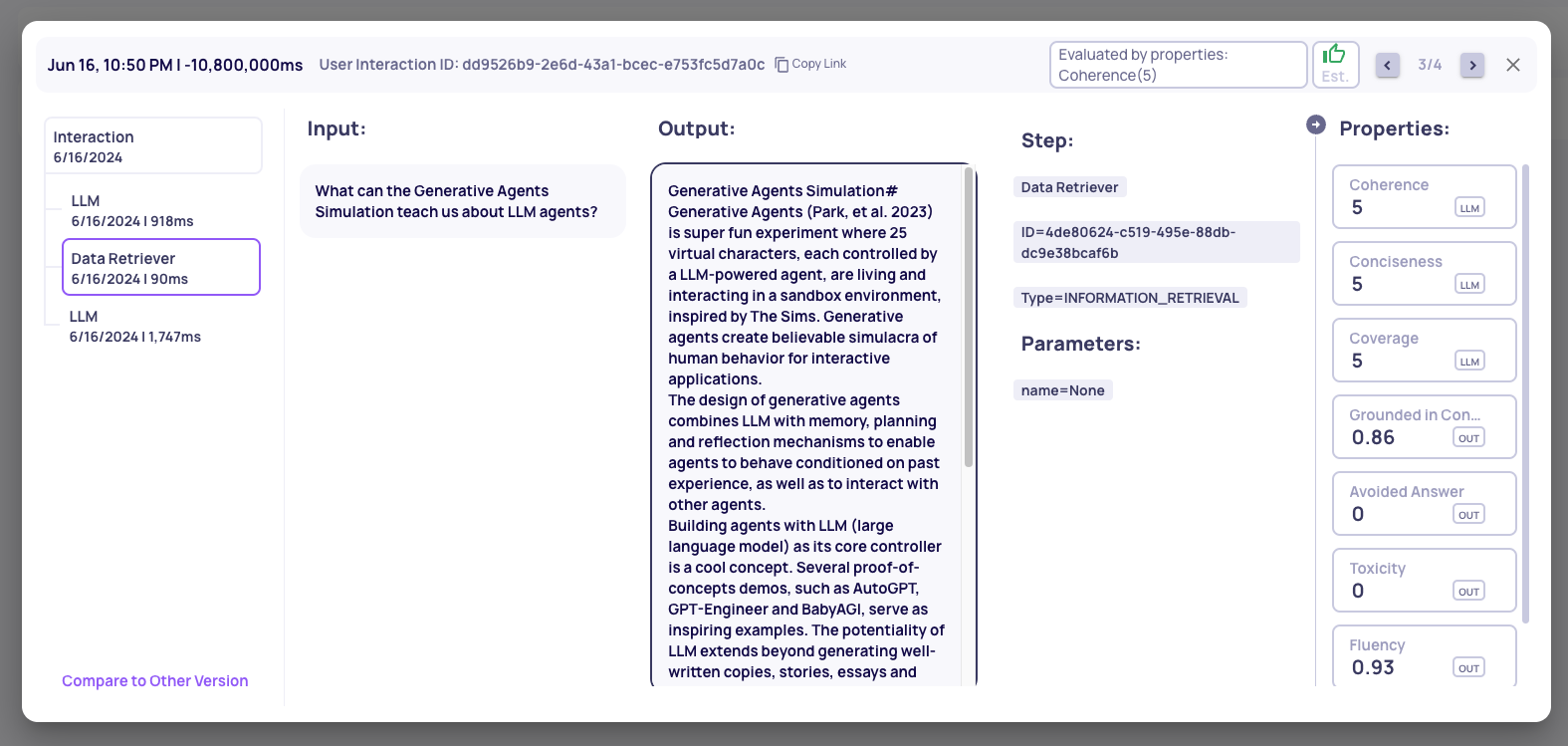

This handler, if passed to a Langchain chain, will automatically log all individual chain components (LLM calls, Information Retrieval and so on) as steps within a single interaction. This will look similar to the trace shown below:

In the screenshot above you can see that a single Langchain chain call produces a single interaction, with the original chain input as the input and the final chain output as the output. Individual components within the chain, such as the Information Retrieval result shown above, will be shown as steps.
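To make the interaction and step relationship concrete, here is a toy model in plain Python. It is only a sketch: the `Step` and `Interaction` classes and the `record_chain_call` helper are hypothetical names invented for illustration and are not the Deepchecks data schema or SDK API.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One component run inside a chain call (hypothetical shape)."""
    name: str    # e.g. "Information Retrieval" or "LLM"
    output: str


@dataclass
class Interaction:
    """One chain call: original input, final output, ordered steps."""
    input: str
    output: str = ""
    steps: list = field(default_factory=list)


def record_chain_call(question, components):
    """Run each (name, fn) component in order and record it as a step."""
    interaction = Interaction(input=question)
    value = question
    for name, fn in components:
        value = fn(value)
        interaction.steps.append(Step(name=name, output=value))
    interaction.output = value  # the last component's output is the chain output
    return interaction


# Stand-in components; a real chain would call a retriever and an LLM here.
trace = record_chain_call(
    "What is an agent?",
    [
        ("Information Retrieval", lambda q: f"docs for: {q}"),
        ("LLM", lambda ctx: f"answer based on [{ctx}]"),
    ],
)
print(len(trace.steps), trace.output)
```

One call yields one `Interaction` whose `input` is the original question, whose `output` is the final answer, and whose `steps` hold each intermediate component result, mirroring the layout described above.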

Code Example

To produce the result above, you can run the following example:

```python
import os
import uuid

from langchain.chains import ConversationalRetrievalChain
from langchain_openai import ChatOpenAI
from langchain.memory import ConversationSummaryMemory
from langchain.document_loaders import WebBaseLoader
from langchain.schema.runnable import RunnableConfig
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.vectorstores import Chroma
from langchain_community.embeddings import HuggingFaceBgeEmbeddings
from deepchecks_llm_client.sdk.langchain.callbacks.deepchecks_callback_handler import DeepchecksCallbackHandler
from deepchecks_llm_client.data_types import EnvType

## Building the Langchain ConversationalRetrievalChain chain
#-------------------------------------------------------------
# This particular chain will use an article about Agents as its source document

def get_llm():
    llm = ChatOpenAI(
        openai_api_key=os.environ["OPENAI_API_KEY"])
    return llm

def get_memory():
    return ConversationSummaryMemory(llm=get_llm(), memory_key="chat_history", return_messages=True)

def build_app():
    loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")
    data = loader.load()

    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
    all_splits = text_splitter.split_documents(data)

    model_name = "BAAI/bge-base-en"
    encode_kwargs = {'normalize_embeddings': True}
    hf = HuggingFaceBgeEmbeddings(
        model_name=model_name,
        encode_kwargs=encode_kwargs
    )
    vectorstore = Chroma.from_documents(documents=all_splits, embedding=hf)

    llm = get_llm()
    retriever = vectorstore.as_retriever()
    qa = ConversationalRetrievalChain.from_llm(llm, retriever=retriever, memory=get_memory())
    return qa

## Creating and logging the application
#-------------------------------------------------------------
# Instantiating the chain and logging each interaction using DeepchecksCallbackHandler

def get_callback_handler():
    # ** Be sure to create the Chat QA Application prior to running this code **
    return DeepchecksCallbackHandler(host='https://app.llm.deepchecks.com',
                                     api_key='YOUR_API_KEY',
                                     app_name='Chat QA', app_version='v1', env_type=EnvType.EVAL,
                                     user_interaction_id=uuid.uuid4()
                                     )

qa_chatbot = build_app()

# Interaction 1
user_input = "So what is the Generative Agents Simulation?"
result = qa_chatbot.invoke(user_input, config=RunnableConfig(callbacks=[get_callback_handler()]))

# Interaction 2
user_input = "What can that teach us on LLM agents?"
result = qa_chatbot.invoke(user_input, config=RunnableConfig(callbacks=[get_callback_handler()]))

# Interaction 3
user_input = "Wait, what was my first question again?"
result = qa_chatbot.invoke(user_input, config=RunnableConfig(callbacks=[get_callback_handler()]))
```

Callback Instances

Make sure to instantiate a new callback, with its own user_interaction_id, for each chain call.
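One way to make the one-handler-per-call rule hard to forget is to wrap the invocation in a small helper that builds the handler itself. The sketch below is illustrative only: `invoke_traced`, `StubHandler` and `StubChain` are hypothetical names, and the stubs stand in for `DeepchecksCallbackHandler` and a real Langchain chain so the snippet runs without any installs. It assumes the chain accepts a plain dictionary with a `callbacks` key as its config, which is how Langchain's `RunnableConfig` is normally passed.

```python
import uuid


class StubHandler:
    """Stand-in for DeepchecksCallbackHandler (hypothetical, for illustration)."""

    def __init__(self, user_interaction_id):
        self.user_interaction_id = user_interaction_id


class StubChain:
    """Stand-in chain that records which handlers it was invoked with."""

    def __init__(self):
        self.seen = []

    def invoke(self, user_input, config=None):
        self.seen.extend(config["callbacks"])
        return f"echo: {user_input}"


def make_handler():
    # A new handler object and a new ID every time this is called.
    return StubHandler(user_interaction_id=str(uuid.uuid4()))


def invoke_traced(chain, user_input, handler_factory):
    """Invoke the chain with a handler built for this call only."""
    handler = handler_factory()
    return chain.invoke(user_input, config={"callbacks": [handler]})


chain = StubChain()
for question in ["first question", "second question"]:
    invoke_traced(chain, question, make_handler)

# Each call was traced by a different handler with a different ID.
ids = [h.user_interaction_id for h in chain.seen]
print(len(set(ids)) == len(ids))
```

With the real example, the same helper could be called as `invoke_traced(qa_chatbot, user_input, get_callback_handler)`, so a handler is never reused across calls.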

Once its dependencies are installed, the full example in the Code Example section produces the interaction steps shown in the images above.

Langchain versions

The code example above was tested with the following Langchain-ecosystem versions:

langchain==0.2.5

langchain-community==0.2.5

langchain-core==0.2.7

langchain-openai==0.1.8

langchain-text-splitters==0.2.1

langchainhub==0.1.20

langcodes==3.4.0

langsmith==0.1.77

language_data==1.2.0

The DeepchecksCallbackHandler object is known to work with versions as early as langchain 0.0.313, but the rest of the Langchain example above is highly dependent on exact versions.
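Because the example is sensitive to exact versions, it can help to compare your environment against the tested pins before running it. The following is a minimal sketch using only the standard library; the helper names `installed_version` and `find_mismatches` are made up for illustration, and the dictionary lists only the Langchain packages from the list above.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions the example was tested with, copied from the list above.
TESTED_VERSIONS = {
    "langchain": "0.2.5",
    "langchain-community": "0.2.5",
    "langchain-core": "0.2.7",
    "langchain-openai": "0.1.8",
    "langchain-text-splitters": "0.2.1",
}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def find_mismatches(tested):
    """Map each package whose installed version differs to (expected, found)."""
    mismatches = {}
    for package, expected in tested.items():
        found = installed_version(package)
        if found != expected:
            mismatches[package] = (expected, found)
    return mismatches


for package, (expected, found) in find_mismatches(TESTED_VERSIONS).items():
    print(f"{package}: tested with {expected}, found {found or 'not installed'}")
```

A mismatch does not necessarily mean the example will fail; it only flags where your environment differs from the tested one.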
