Automatic Annotations

How the automatic annotations work and how to configure them

Evaluating the quality of generative AI-based workflows is difficult. For an output to be considered good, it must meet several requirements: it needs to provide an accurate solution (e.g., relevant answer, summary with high coverage), avoid harm (e.g., no hallucinations, no PII), and adhere to specific product requirements.

Interaction Annotations

Deepchecks provides a high-quality multi-step pipeline to generate auto annotations per interaction, which can be used to compare different versions' performance as well as evaluate performance on production data without the use of manual annotation.

The auto annotations are based on three customizable components, each described below.

All aspects of the automatic annotations can be customized by modifying the Auto Annotation YAML. Such changes can include adding new steps, adjusting thresholds, or introducing new properties, including Custom Properties.

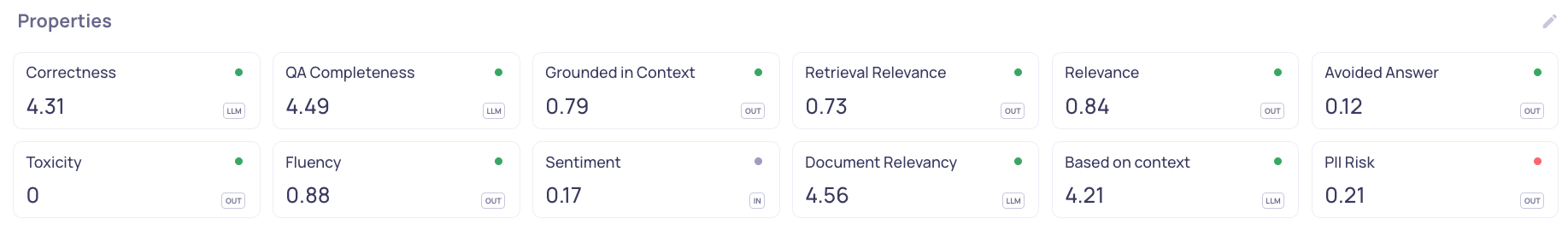

Each property scores the interaction on a specific aspect. For example, a low Grounded in Context score means that the output is not based on the retrieved information and is likely a hallucination. The property scores are incorporated into the auto-annotation pipeline via rule-based clauses. Different properties are useful for different interaction types; see Supported Applications for recommendations per interaction type and Properties for the full catalog.
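As a rough illustration of how rule-based clauses over property scores could produce an annotation, here is a minimal Python sketch. The thresholds, the rule format, and the `annotate_from_properties` helper are hypothetical and chosen only for illustration; the actual rules are defined in the Auto Annotation YAML and may be structured differently.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical rules: (property name, comparison, threshold, resulting annotation).
# The thresholds are illustrative values, not Deepchecks defaults.
RULES: List[Tuple[str, str, float, str]] = [
    ("Grounded in Context", "lt", 0.3, "Bad"),
    ("Grounded in Context", "ge", 0.8, "Good"),
]


def annotate_from_properties(scores: Dict[str, float]) -> Optional[str]:
    """Return the annotation of the first rule that fires, or None if none does."""
    for prop, comparison, threshold, annotation in RULES:
        score = scores.get(prop)
        if score is None:
            continue  # the property was not calculated for this interaction
        if comparison == "lt" and score < threshold:
            return annotation
        if comparison == "ge" and score >= threshold:
            return annotation
    return None  # no clause matched; a later pipeline step would have to decide
```

In this sketch the rules are evaluated in order and the first match wins, so an interaction with a score between the two thresholds is left without an annotation by this step.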

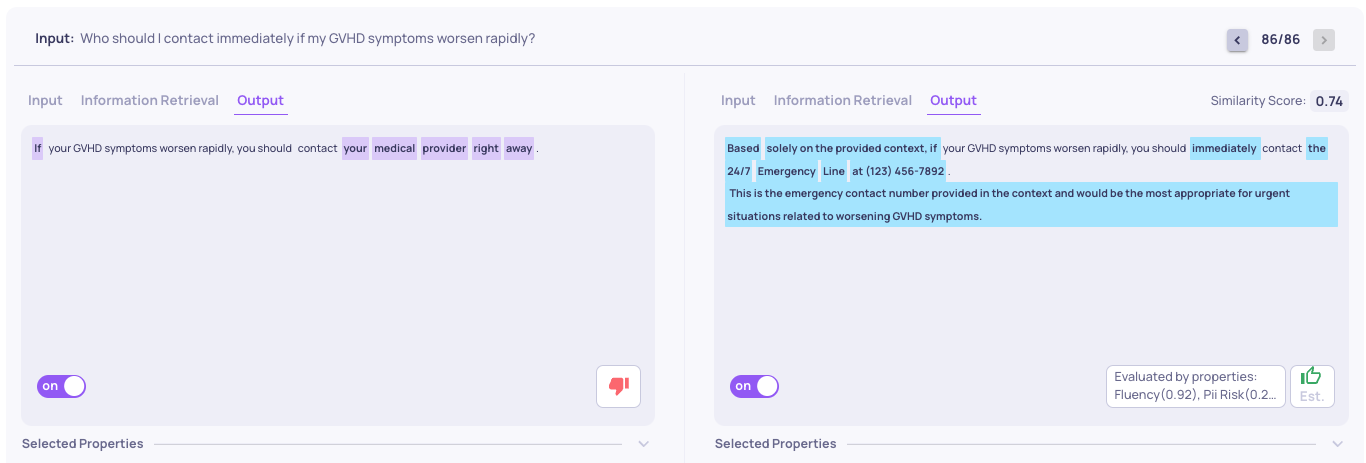

Deepchecks compares the interaction's output to previously annotated outputs (e.g., domain expert responses) via Deepchecks' similarity mechanism. Similarity is used only for auto-annotation of the evaluation set and is specifically useful for regression testing.

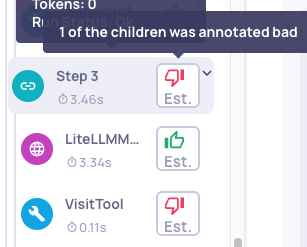

Deepchecks supports agentic use cases and automatically annotates parent interactions based on their children’s annotations. This is designed for hierarchical interaction types such as Agent, Chain, and Root, where a parent’s quality depends on the quality of its child interactions.

The last component is an LLM-based technique that learns from user-provided annotated interactions. It is particularly useful for detecting use-case-specific problems that the built-in properties cannot catch. To initialize, it requires at least 50 annotated samples, including at least 15 bad and 15 good annotations. The more user-annotated interactions it receives, across more versions, the better it performs.
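The initialization requirement can be checked before relying on this component. The sketch below is an assumption-laden example: the `can_initialize` helper and the plain list-of-strings input are hypothetical, and only the numeric minimums come from the text above.

```python
from collections import Counter
from typing import Iterable

MIN_TOTAL = 50      # minimum number of annotated samples
MIN_PER_CLASS = 15  # minimum number of good and of bad annotations


def can_initialize(user_annotations: Iterable[str]) -> bool:
    """Check whether a set of user annotations meets the stated minimums."""
    annotations = [a.lower() for a in user_annotations]
    counts = Counter(annotations)
    return (
        len(annotations) >= MIN_TOTAL
        and counts["good"] >= MIN_PER_CLASS
        and counts["bad"] >= MIN_PER_CLASS
    )
```

For example, 35 good and 15 bad annotations satisfy the check, while 36 good and 14 bad do not, even though both sets contain 50 samples.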

Session Annotations

In Deepchecks, a session represents a multi-step workflow and consists of one or more interactions — possibly from different interaction types. In many use cases — especially agentic or multi-step workflows — users want to evaluate the overall quality of an entire session, not just individual steps. Session annotations help answer the question: "Was this whole interaction flow successful?"

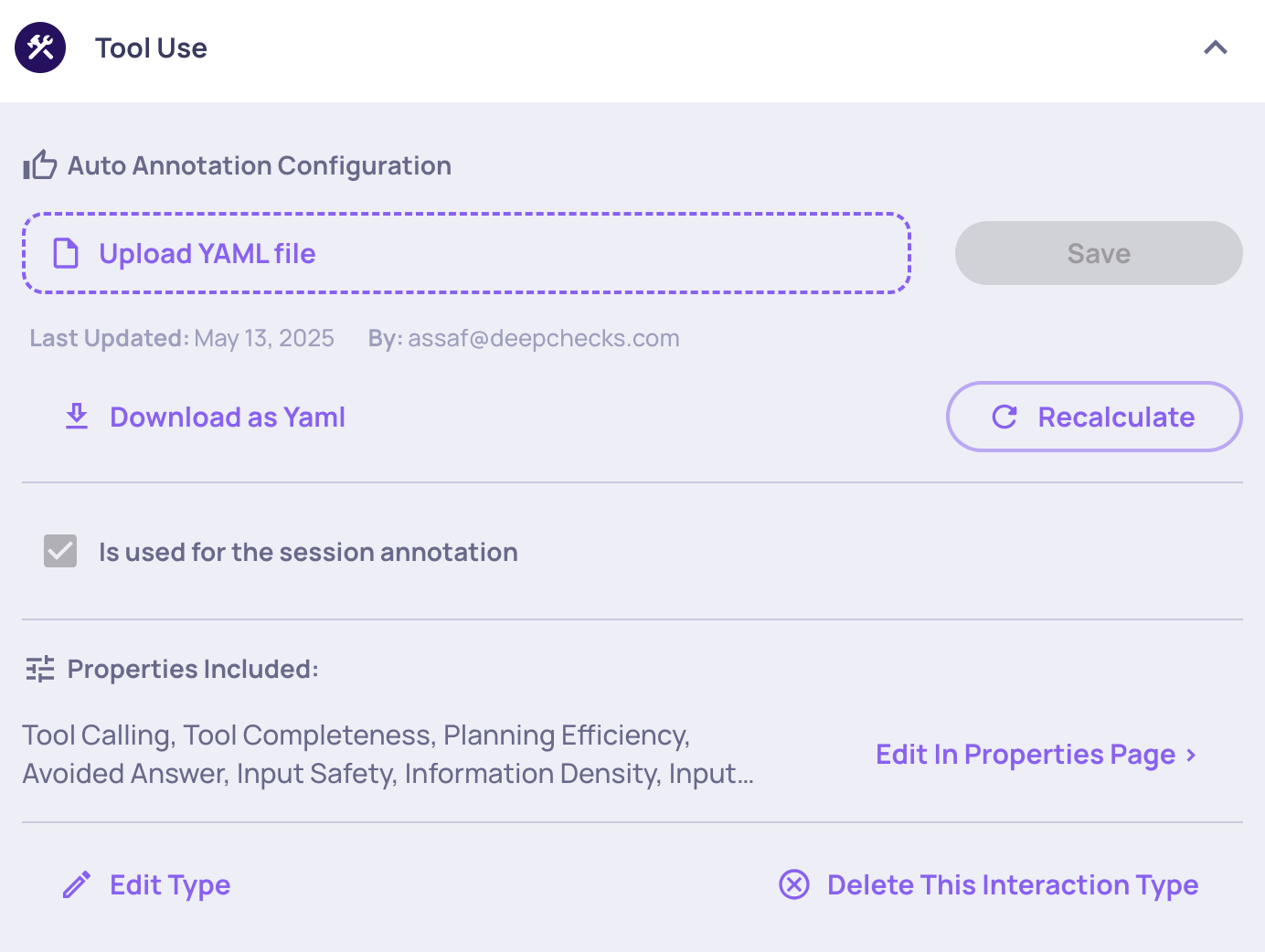

Configuring Which Interaction Types Affect Session Annotations

To make this useful across different use cases, the calculation focuses only on interactions from the types you've marked as important. For example, in an agent workflow, the final generation step (where the assistant returns an answer to the user) may matter more than an intermediate tool-use step. Deepchecks lets you control this by deciding which interaction types should influence session-level annotations.

In the interaction type configuration screen, you'll find a checkbox labeled "Affects Session Annotation".

By default, this checkbox is enabled for all interaction types, meaning annotations from those interactions will contribute to the session's overall annotation. You can disable the checkbox if a specific interaction type should be excluded from the session-level annotation logic.

Tool-Use Interaction Type configured to affect the session annotation in the application

How Session Annotations Are Calculated

Session annotations can be Good, Bad, Pending, or Unknown, and are based solely on interactions from the interaction types that are marked as "Affects Session Annotation".

Here’s how they are determined:

- Bad: If any interaction (from a checked interaction type) in the session is annotated as Bad, the entire session is marked Bad.

- Pending: If no interaction is Bad, but at least one interaction is Pending, the session is marked Pending.

- Good: If none are Bad or Pending, and at least one interaction is annotated as Good, the session is marked Good (even if the rest are Unknown).

- Unknown: If none of the above conditions are met — i.e., no Bad, no Pending, and no Good — the session is marked Unknown.
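The precedence rules above can be sketched in a few lines of Python. This is only an illustration of the logic as described here: the `session_annotation` function, the `(interaction_type, annotation)` tuple representation, and the `affecting_types` argument are hypothetical names, not part of the Deepchecks SDK.

```python
from typing import Iterable, Set, Tuple


def session_annotation(
    interactions: Iterable[Tuple[str, str]],
    affecting_types: Set[str],
) -> str:
    """Aggregate interaction annotations into a single session annotation.

    interactions: (interaction_type, annotation) pairs for one session.
    affecting_types: interaction types marked "Affects Session Annotation".
    """
    # Only interactions from checked interaction types take part in the logic.
    relevant = {ann for itype, ann in interactions if itype in affecting_types}

    # Precedence: Bad > Pending > Good > Unknown.
    if "Bad" in relevant:
        return "Bad"
    if "Pending" in relevant:
        return "Pending"
    if "Good" in relevant:
        return "Good"
    return "Unknown"


session = [("Q&A", "Good"), ("Tool-Use", "Bad"), ("Q&A", "Unknown")]
print(session_annotation(session, {"Q&A", "Tool-Use"}))  # Bad
print(session_annotation(session, {"Q&A"}))              # Good
```

The second call shows the effect of unchecking an interaction type: once Tool-Use no longer affects the session, its Bad annotation is ignored and the remaining Good annotation determines the result.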

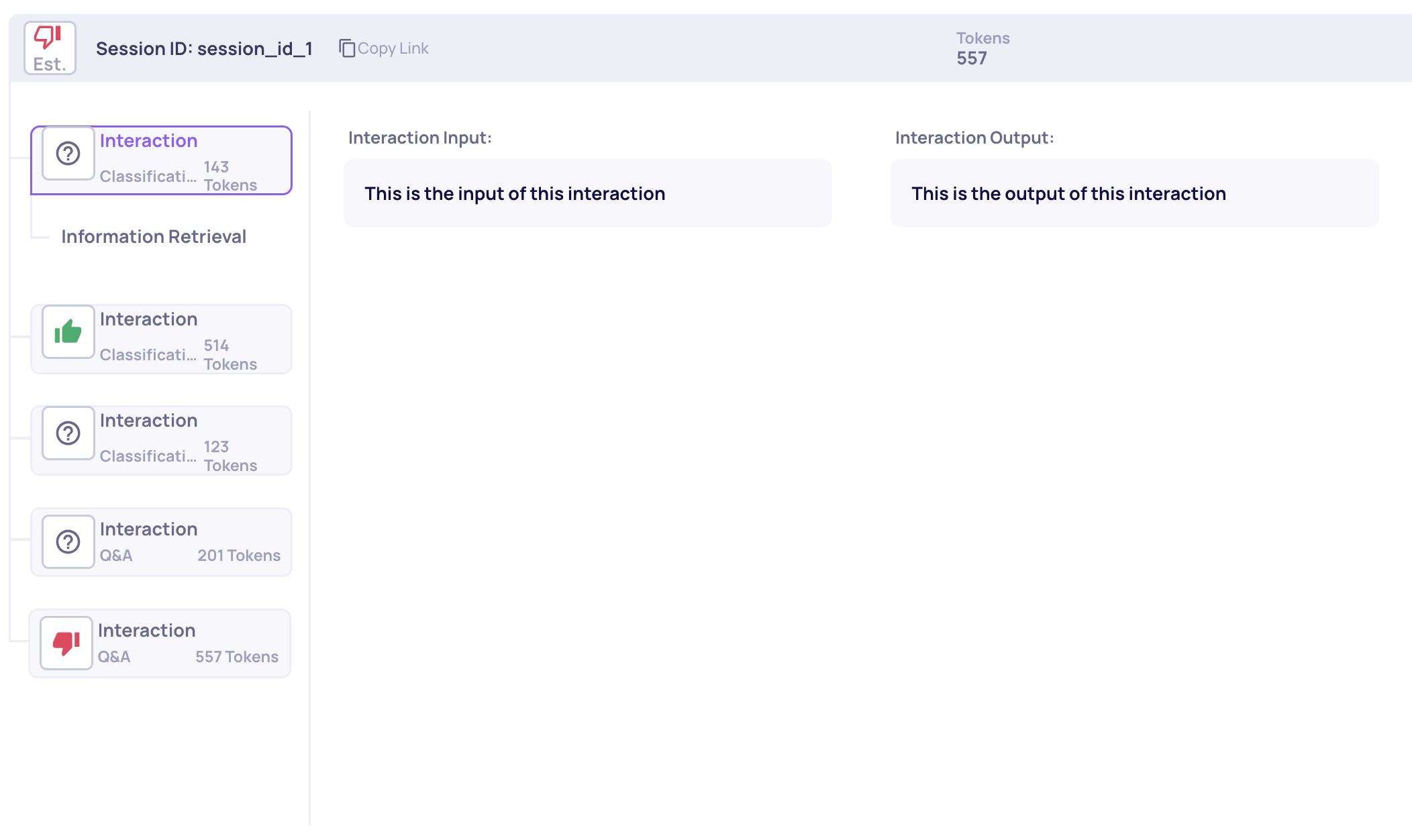

A session annotated "Bad" due to a Bad interaction annotation on a flagged interaction type (Q&A)

Involvement of user annotations in the logic

Note: By default, the interactions' automatic annotations are involved in the session annotation logic. If a user manually annotated an interaction, that annotation overrides the automatic one and is used for the session calculation.
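The override behavior can be pictured with a small Python sketch. The `Interaction` class and its field names are hypothetical and exist only to illustrate the rule that a manual annotation, when present, takes the place of the automatic one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    automatic_annotation: str               # e.g. "Good", "Bad", "Pending", "Unknown"
    user_annotation: Optional[str] = None   # set only when a user annotated manually

    @property
    def effective_annotation(self) -> str:
        """Annotation that feeds into the session calculation."""
        if self.user_annotation is not None:
            return self.user_annotation  # the manual annotation overrides the automatic one
        return self.automatic_annotation


print(Interaction("Bad").effective_annotation)          # Bad
print(Interaction("Bad", "Good").effective_annotation)  # Good
```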
