When and How to Update Your Evaluation Set

When

Your evaluation set isn’t static; it should grow and adapt alongside your product. Keeping it up to date ensures that it keeps reflecting the inputs your application actually receives, so that evaluation scores remain meaningful. The most common scenarios that call for an update are:

- When New Features Launch

Every time your product introduces new capabilities, your model faces new types of inputs and expectations. This could mean documents covering new topics in a RAG (Retrieval-Augmented Generation) setup, or new tools integrated into an agentic workflow (like a calculator, calendar, or code interpreter). These changes introduce new behaviors and edge cases that won’t be captured by your current evaluation set. Without representative examples, you’re not testing how the system performs in its expanded scope. Proactively add data that reflects these new capabilities to ensure your evaluations stay relevant and useful.

- When User Behavior Shifts

User input isn’t fixed—it changes over time. You might notice more queries in a new language, longer inputs, different phrasing, or usage patterns tied to new user segments. If your evaluation set doesn’t keep up with these shifts, your scores may become misleading. Use Deepchecks’ Properties to track changes in production input characteristics and compare them to your current eval set. If gaps emerge, it’s time to expand or rebalance your data.
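As a rough illustration of the comparison described above, the following sketch contrasts a few simple input characteristics between the evaluation set and a sample of production inputs. It is not the Deepchecks Properties API: the function names, the chosen characteristics (word count, share of long inputs, share of inputs with non-ASCII characters), and the 25% tolerance are all assumptions made for this example.

```python
from statistics import mean


def summarize(texts):
    """Compute a few simple input characteristics for a list of strings."""
    lengths = [len(t.split()) for t in texts]
    non_ascii = sum(1 for t in texts if any(ord(c) > 127 for c in t))
    return {
        "mean_words": mean(lengths),
        "share_long": sum(1 for n in lengths if n > 50) / len(texts),
        "share_non_ascii": non_ascii / len(texts),
    }


def find_gaps(eval_inputs, prod_inputs, tolerance=0.25):
    """Return characteristics whose production value deviates from the
    eval-set value by more than `tolerance` (relative difference)."""
    eval_stats = summarize(eval_inputs)
    prod_stats = summarize(prod_inputs)
    gaps = {}
    for name, eval_value in eval_stats.items():
        prod_value = prod_stats[name]
        # Small floor avoids division by zero when the eval value is 0.
        baseline = max(abs(eval_value), 1e-9)
        if abs(prod_value - eval_value) / baseline > tolerance:
            gaps[name] = (eval_value, prod_value)
    return gaps


# Hypothetical data: production now contains non-English queries.
eval_inputs = ["What is the refund policy?", "How do I reset my password?"]
prod_inputs = [
    "¿Cuál es la política de reembolso?",
    "How do I reset my password?",
    "Où est ma commande ?",
]
gaps = find_gaps(eval_inputs, prod_inputs)
print(gaps)
```

In this example only `share_non_ascii` is flagged, which would suggest adding non-English samples to the evaluation set.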

- When Performance Suddenly Drops

Unexpected performance drops should prompt a close look at your evaluation set. Sometimes, these issues stem from real regressions—but often, they expose weaknesses in your eval coverage. The model may be failing on types of inputs that were never properly represented. In such cases, reviewing failed examples and identifying patterns can guide you in adding new, targeted samples.
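One way to look for such patterns is to count failures by some attribute and check how well that attribute is represented in the evaluation set. The sketch below assumes each interaction is a dictionary with a hypothetical `topic` field; the function name and both thresholds are illustrative choices, not part of Deepchecks.

```python
from collections import Counter


def underrepresented_failures(failed, eval_set, key,
                              min_failures=2, max_eval_share=0.05):
    """Return values of `key` that fail repeatedly but are rare
    (or absent) in the evaluation set."""
    failure_counts = Counter(item[key] for item in failed)
    eval_counts = Counter(item[key] for item in eval_set)
    total_eval = max(len(eval_set), 1)
    candidates = []
    for value, count in failure_counts.most_common():
        eval_share = eval_counts[value] / total_eval
        if count >= min_failures and eval_share <= max_eval_share:
            candidates.append((value, count, eval_share))
    return candidates


# Hypothetical data: billing questions fail often but are not in the eval set.
failed = [
    {"topic": "billing"},
    {"topic": "billing"},
    {"topic": "shipping"},
    {"topic": "billing"},
]
eval_set = [{"topic": "shipping"}] * 10 + [{"topic": "returns"}] * 10

result = underrepresented_failures(failed, eval_set, key="topic")
print(result)
```

Categories returned here are candidates for new, targeted samples; whether a failure is a real regression or a coverage gap still needs manual review.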

How

Whichever update method you use, make sure older model versions are also evaluated on the updated set so that Version Comparison stays meaningful. Evaluating every version on the same data ensures that differences in scores reflect real differences between the models, not differences in the data being tested.

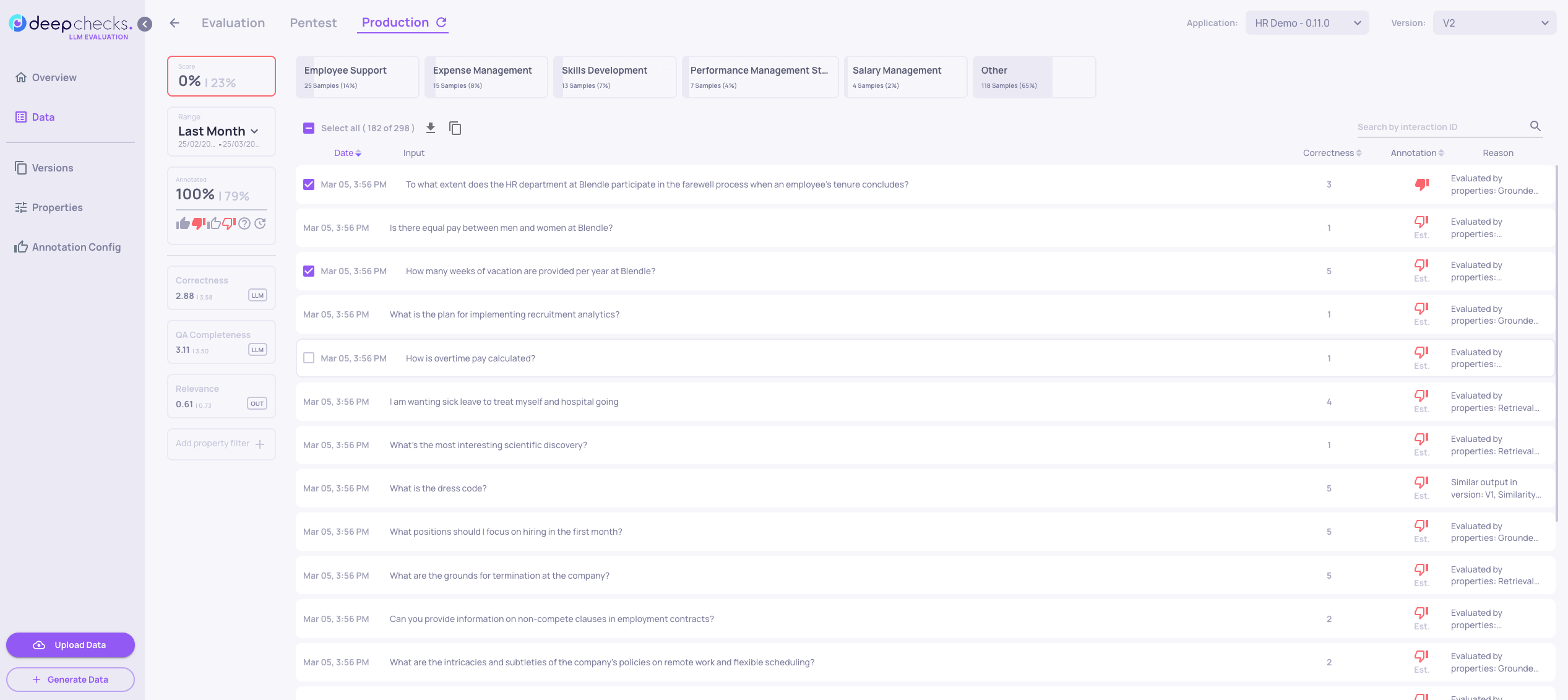
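Before comparing versions, it can help to check which versions have not yet been evaluated on every interaction in the updated set. The following is a minimal sketch under the assumption that you can list, per version, the IDs of the interactions it was evaluated on; the function name and data layout are hypothetical.

```python
def versions_needing_rerun(eval_set_ids, evaluated_ids_by_version):
    """Map each version to the eval-set interaction IDs it has not
    been evaluated on. Fully covered versions are omitted."""
    required = set(eval_set_ids)
    missing = {}
    for version, evaluated in evaluated_ids_by_version.items():
        gap = required - set(evaluated)
        if gap:
            missing[version] = sorted(gap)
    return missing


# Hypothetical data: "q-003" was added to the set after v1 was evaluated.
eval_set_ids = ["q-001", "q-002", "q-003"]
evaluated = {
    "v1": ["q-001", "q-002"],
    "v2": ["q-001", "q-002", "q-003"],
}
missing = versions_needing_rerun(eval_set_ids, evaluated)
print(missing)
```

Any version that appears in the result should be re-run on the missing interactions before its scores are compared with newer versions.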

Cloning Interactions from Production

To copy interactions from production into your evaluation set, go to the Interactions screen, select the relevant interactions, then click the “Copy” icon that appears at the top, next to the “Select All” checkbox.

Note: This will only copy the interactions to the evaluation set of the version currently being edited.

Adding Additional Samples

This process follows the same steps and uses the same functions or API calls as uploading the original evaluation set. Since the version already exists, Deepchecks will recognize the update as an extension—not a replacement—of the existing evaluation set. Just make sure any newly added interactions have unique user_interaction_id values to avoid conflicts.
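A quick pre-upload check can catch ID conflicts early. The sketch below only assumes that each new sample carries a `user_interaction_id` field, as described above; how you obtain the IDs already in the evaluation set, the `input` field, and the function name are assumptions for illustration, and no Deepchecks API is called.

```python
from collections import Counter


def find_id_conflicts(existing_ids, new_samples, id_field="user_interaction_id"):
    """Report IDs that repeat within the new batch or that already
    exist in the evaluation set."""
    new_ids = [sample[id_field] for sample in new_samples]
    counts = Counter(new_ids)
    return {
        "duplicated_in_batch": sorted(i for i, n in counts.items() if n > 1),
        "already_in_eval_set": sorted(set(new_ids) & set(existing_ids)),
    }


# Hypothetical data: one ID collides with the existing set, one repeats.
existing_ids = {"q-001", "q-002"}
new_samples = [
    {"user_interaction_id": "q-002", "input": "How do I cancel?"},
    {"user_interaction_id": "q-003", "input": "Where is my invoice?"},
    {"user_interaction_id": "q-003", "input": "Where is my receipt?"},
]
conflicts = find_id_conflicts(existing_ids, new_samples)
print(conflicts)
```

If either list is non-empty, rename or drop the affected samples before uploading.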

Deleting Interactions

Deleting works like the copy flow: go to the Interactions screen, select the interactions you want to remove from your evaluation set, then click the “Delete” icon that appears at the top, next to the “Select All” checkbox.
