Version Comparison

Use Deepchecks to compare versions of an LLM-based app. The versions may differ in their prompts, models, routing mechanism, or any other aspect of the app.

A common scenario in the lifecycle of an LLM-based app is the need to compare several alternative versions across various parameters (e.g. performance, cost, safety) and choose the best one. This usually arises either during the iterative development cycle of a new application, or when an application already in production needs to be improved or updated (e.g. due to poor performance, or to shifts in the data or in user behavior).

The comparison is typically either between 2-3 versions (e.g. the current production version and the new candidates) or among many alternatives, e.g. when iterating over various prompt options, or when comparing different base models to find the best balance of cost and performance.

Who Should Use This (and When)

Depending on the phase of the app and the company’s workflows, users performing these comparisons are likely to belong to one of the following groups:

- Technical user: Software engineer, LLM engineer, or data scientist

- Domain expert: Data curator, analyst, or product manager

Deepchecks enables:

- Deciding on the desired version by comparing high-level metrics, such as the overall score and selected property scores, possibly alongside additional considerations such as the latency and cost of each option. This is enabled by the built-in, user-value, and prompt properties, together with the auto-annotation pipeline that calculates the overall scores.

- Gaining a granular understanding of the differences between versions, by drilling down into interactions that changed significantly across versions and by verifying that problems from previous versions were resolved. This is enabled by the property values, similarity calculations, and annotations (both automatic and estimated).
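To make the first point concrete, here is a minimal sketch of choosing a version from its high-level metrics under latency and cost limits. The `VersionSummary` fields, the `pick_version` helper, and all numbers are hypothetical and made up for illustration; in practice the scores would come from the Deepchecks versions screen or API, and the latency and cost figures from your own measurements.

```python
from dataclasses import dataclass


# Hypothetical per-version summary. Field names are illustrative assumptions,
# not the Deepchecks schema.
@dataclass
class VersionSummary:
    name: str
    overall_score: float        # e.g. share of interactions annotated "good" (0-1)
    grounded_in_context: float  # an example property score (0-1)
    avg_latency_s: float        # measured separately, per version
    cost_per_1k_usd: float      # cost per 1,000 interactions, measured separately


def pick_version(versions, max_latency_s, max_cost_per_1k_usd):
    """Return the highest-scoring version that satisfies the latency and cost limits.

    Ties on overall score are broken by the property score, then by lower cost.
    Returns None if no version meets the constraints.
    """
    eligible = [
        v for v in versions
        if v.avg_latency_s <= max_latency_s and v.cost_per_1k_usd <= max_cost_per_1k_usd
    ]
    if not eligible:
        return None
    return max(
        eligible,
        key=lambda v: (v.overall_score, v.grounded_in_context, -v.cost_per_1k_usd),
    )


# Made-up numbers for three candidate versions.
versions = [
    VersionSummary("v1-large-model", 0.86, 0.91, 4.2, 12.0),
    VersionSummary("v2-small-model", 0.81, 0.88, 1.1, 1.5),
    VersionSummary("v3-new-prompt", 0.84, 0.93, 1.3, 1.8),
]
best = pick_version(versions, max_latency_s=2.0, max_cost_per_1k_usd=5.0)
print(best.name)  # v3-new-prompt
```

Treating latency and cost as hard limits and the scores as the ranking key is only one possible policy; a weighted trade-off between score and cost would be an equally valid choice depending on the product.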

Version Comparison Within Deepchecks

The following steps show how this looks within the Deepchecks system:

-

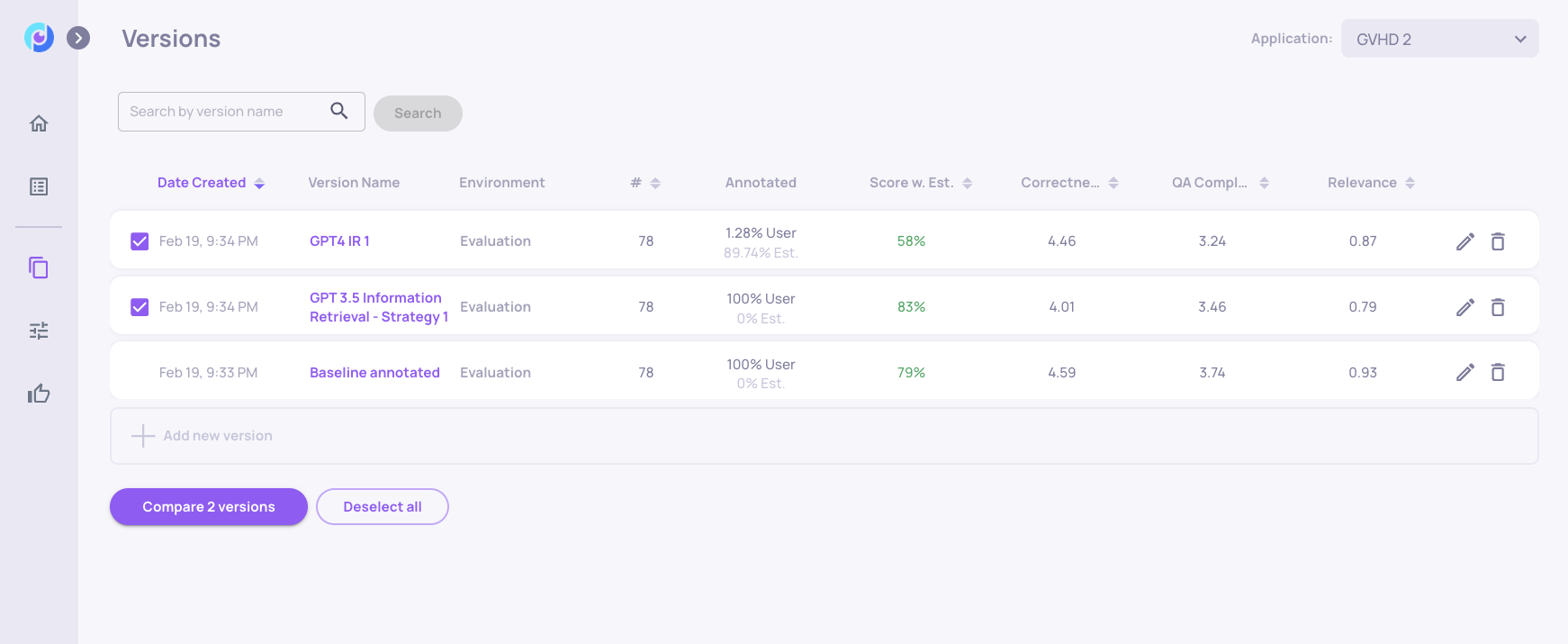

Go to the versions screen. For each version and environment, you will see the overall score and additional property scores. You can sort the versions according to these scores and properties.

- To determine which version is better, define a golden set and run it with every version you want to compare. Deepchecks lets you save this golden set across versions, expand it with interactions from production, and calculate the properties and estimated annotations for all of its interactions.

-

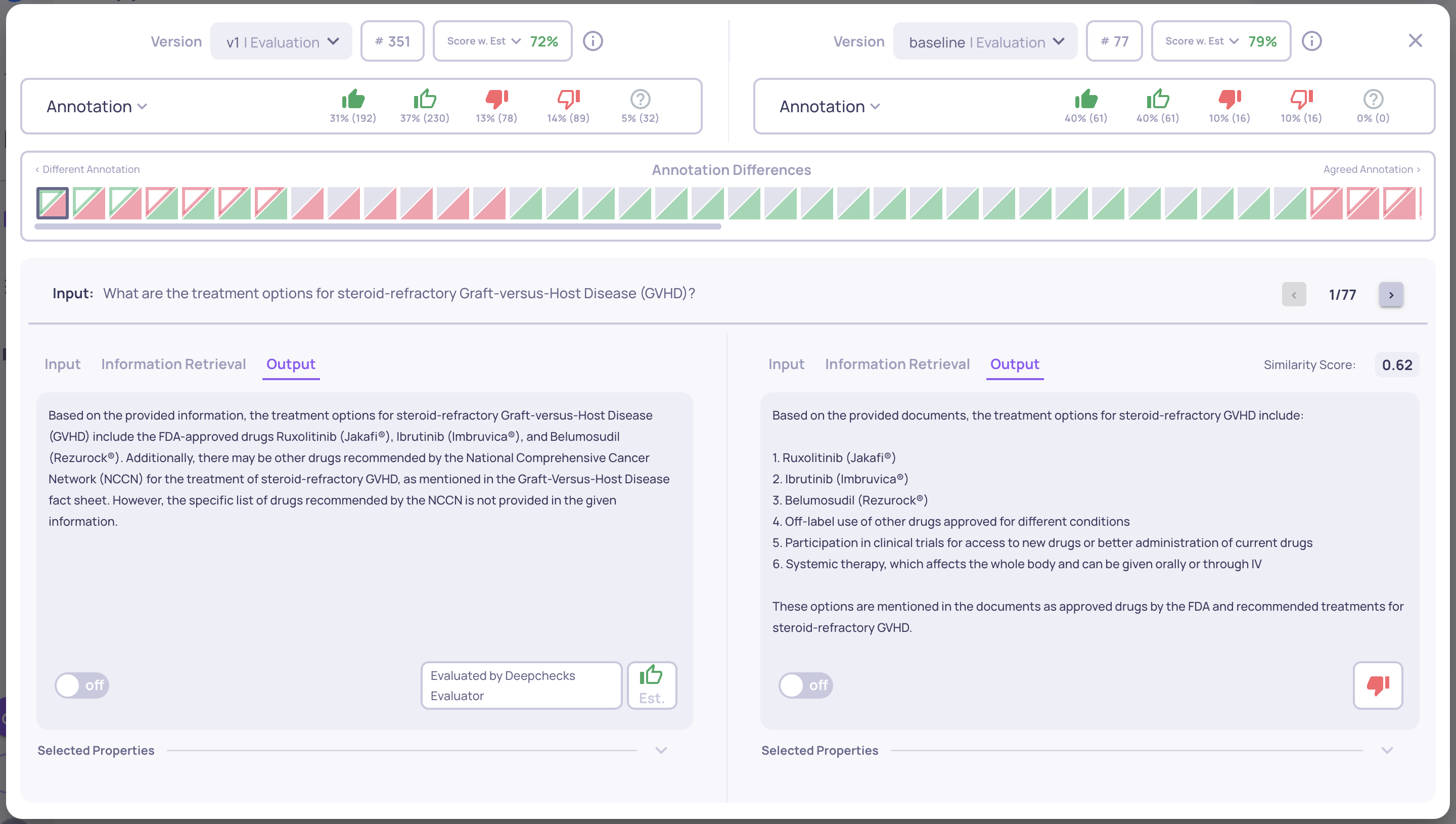

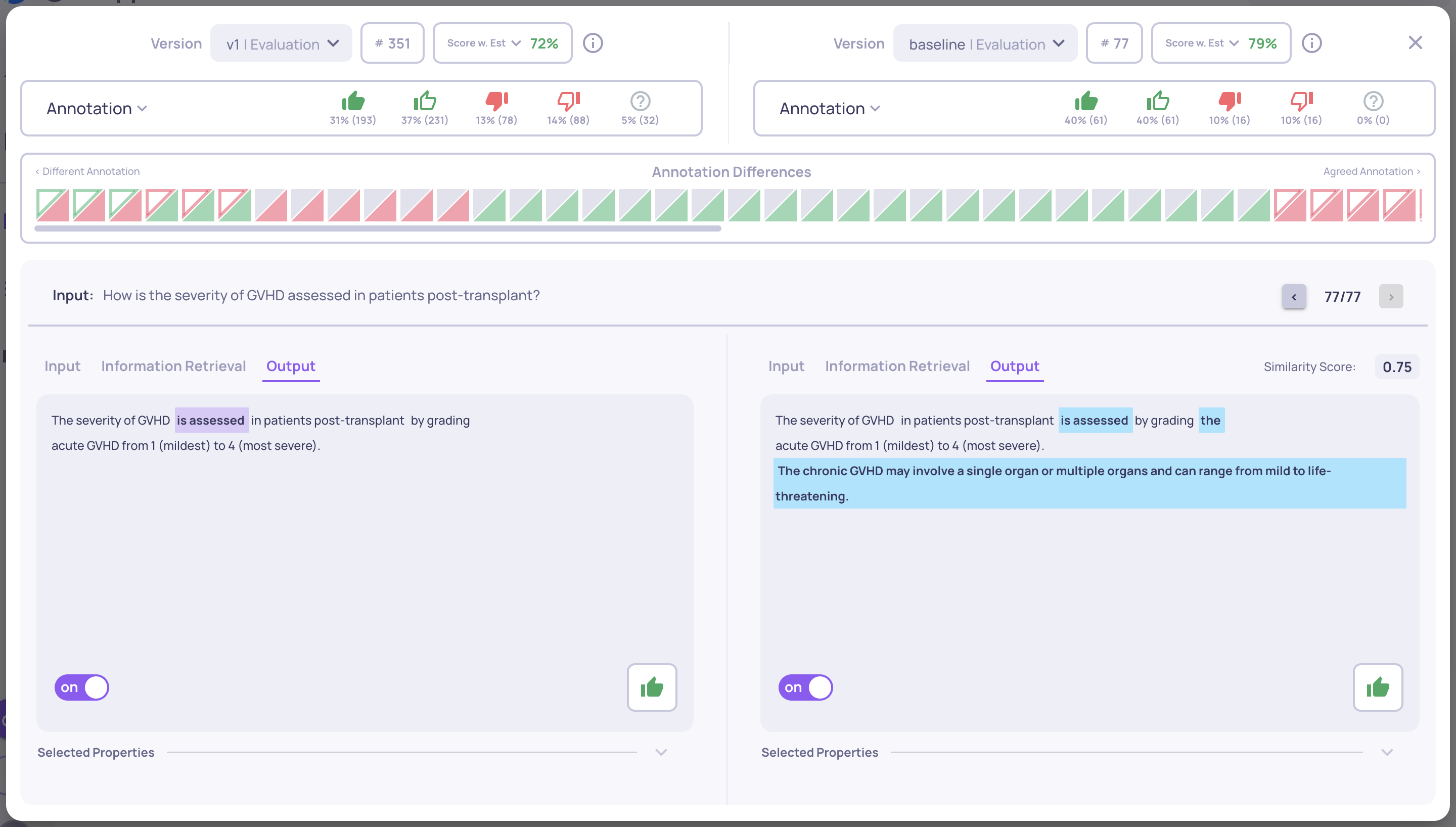

Select a few versions to inspect the interactions that changed the most between them. This change can be according to parameters of your choice: whether it’s the least similar outputs (for the same inputs), or interactions with the most different scores on a specific property (e.g. grounded in context, avoided answer), or interactions whose annotations are different.

- The differences in the interactions across versions can be inspected side by side by opening the interaction comparison view (from the versions screen or the data screen).

- For example, to identify the problems solved between v1 and v2, navigate to the data screen, filter for all “thumbs down” interactions in v1, and compare them to v2 to quickly see which improvements were made and what they consist of. This makes it efficient to pinpoint and characterize the changes between versions.
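The drill-down in the steps above relies on every version running on the same golden set, so interactions can be matched by their input. As a rough local sketch of how the "most changed" interactions could be ranked, the code below compares two hypothetical exports keyed by input text. `difflib.SequenceMatcher` is a simple character-level stand-in and not necessarily the similarity calculation Deepchecks uses; the record structure, the property name, and the helper functions are assumptions for illustration only.

```python
from difflib import SequenceMatcher

# Hypothetical exports: golden-set input -> {"output": str, "properties": {name: score}}.
# The structure and the property name are assumptions, not the Deepchecks schema.
v1 = {
    "What is the refund window?": {
        "output": "Refunds are accepted within 30 days of purchase.",
        "properties": {"Grounded in Context": 0.95},
    },
    "Do you ship to Canada?": {
        "output": "I'm not sure.",
        "properties": {"Grounded in Context": 0.20},
    },
}
v2 = {
    "What is the refund window?": {
        "output": "Refunds are accepted within 30 days of purchase.",
        "properties": {"Grounded in Context": 0.94},
    },
    "Do you ship to Canada?": {
        "output": "Yes, we ship to Canada; delivery takes 5-7 business days.",
        "properties": {"Grounded in Context": 0.90},
    },
}


def text_similarity(a, b):
    """Character-level similarity in [0, 1] (stand-in for the platform's similarity)."""
    return SequenceMatcher(None, a, b).ratio()


def least_similar(base, other, top_k=5):
    """Rank inputs present in both versions by output similarity, least similar first."""
    shared = base.keys() & other.keys()
    scored = [(text_similarity(base[k]["output"], other[k]["output"]), k) for k in shared]
    return sorted(scored)[:top_k]


def largest_property_change(base, other, prop, top_k=5):
    """Rank shared inputs by the absolute change in one property score, largest first."""
    shared = base.keys() & other.keys()
    deltas = [
        (other[k]["properties"][prop] - base[k]["properties"][prop], k)
        for k in shared
        if prop in base[k]["properties"] and prop in other[k]["properties"]
    ]
    return sorted(deltas, key=lambda d: abs(d[0]), reverse=True)[:top_k]


print(least_similar(v1, v2)[0][1])  # Do you ship to Canada?
print(largest_property_change(v1, v2, "Grounded in Context")[0][1])  # Do you ship to Canada?
```

The signed delta is kept in the result so that a large positive change (an improvement) can be told apart from a large negative one (a regression).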

Note: the flows above (getting the version scores, retrieving the data and IDs of the most different interactions, etc.) are also available via the API.
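The thumbs-down check from the last step can also be reproduced outside the UI once the interactions of both versions have been exported. This minimal sketch assumes a hypothetical record format: the `interaction_id` and `annotation` field names, the mapping of "thumbs down" to the label `"bad"`, and the helper function are illustrative assumptions, not the documented API schema.

```python
# Hypothetical exported records, one dict per interaction. Field names and
# label values are assumptions, not the documented Deepchecks schema.
v1_interactions = [
    {"interaction_id": "q-001", "annotation": "bad"},
    {"interaction_id": "q-002", "annotation": "good"},
    {"interaction_id": "q-003", "annotation": "bad"},
]
v2_interactions = [
    {"interaction_id": "q-001", "annotation": "good"},
    {"interaction_id": "q-002", "annotation": "good"},
    {"interaction_id": "q-003", "annotation": "bad"},
]


def resolved_and_remaining(base, candidate, bad_label="bad"):
    """Split the base version's thumbs-down interactions into fixed vs. still failing.

    Interactions are matched across versions by id (i.e. the same golden-set input).
    Base interactions that are missing from the candidate version are skipped.
    """
    candidate_by_id = {r["interaction_id"]: r for r in candidate}
    resolved, remaining = [], []
    for record in base:
        if record["annotation"] != bad_label:
            continue  # only problems from the base version are of interest
        match = candidate_by_id.get(record["interaction_id"])
        if match is None:
            continue  # this input was not run in the candidate version
        if match["annotation"] == bad_label:
            remaining.append(record["interaction_id"])
        else:
            resolved.append(record["interaction_id"])
    return resolved, remaining


resolved, remaining = resolved_and_remaining(v1_interactions, v2_interactions)
print(resolved, remaining)  # ['q-001'] ['q-003']
```

The resolved IDs are the natural candidates to open in the interaction comparison view to characterize what actually improved.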
