Customizing the Auto Annotation Configuration

Configuring your auto-annotation mechanism when using Deepchecks to evaluate your LLM-based app.

The auto-annotation process may differ between interaction types. Deepchecks provides a default auto-annotation process that you can customize to fit your needs and data. This page covers the technical aspects of the auto-annotation configuration: the first part describes the structure of the YAML file, and the second part is a guide to updating the pipeline for your specific data.

This page includes two sections:

- Annotation Configuration: YAML File Structure
- Updating the Configuration YAML

Recalculating Annotations

After uploading a new auto-annotation configuration, you must rerun the pipeline to update the annotations. If additional annotations were added, we recommend also retraining the Deepchecks Evaluator for that interaction type. Neither rerunning the annotation pipeline nor retraining the Deepchecks Evaluator incurs any token usage.

Annotation Configuration: YAML File Structure

The multi-step auto-annotation pipeline is defined in a YAML file in which each block represents one step. The steps run sequentially: each block only attempts to annotate the samples left unannotated by the previous blocks. At each step, the block's annotation condition is applied to those unannotated samples, and the samples that meet it receive the block's label.

You can delete existing steps or add new ones by adding blocks to the file. Any samples that remain unannotated after the final step receive the default_annotation label ("good" by default).
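The sequential behavior described above (each block only sees samples that earlier blocks left unannotated, and leftovers get the default label) can be sketched in a few lines of Python. This is a minimal illustration of the semantics under stated assumptions, not Deepchecks' implementation: each block is modeled as a hypothetical `(label, predicate)` pair, and the sample scores are made up.

```python
from typing import Callable, Dict, List, Optional, Tuple

Sample = Dict[str, float]
# Hypothetical model of a block: the label it assigns plus a predicate that decides a match.
Block = Tuple[str, Callable[[Sample], bool]]


def run_pipeline(samples: List[Sample], blocks: List[Block],
                 default_annotation: str = "good") -> List[str]:
    """Apply blocks in order; a block only considers samples that are still unannotated."""
    labels: List[Optional[str]] = [None] * len(samples)
    for label, matches in blocks:
        for i, sample in enumerate(samples):
            if labels[i] is None and matches(sample):
                labels[i] = label
    # Samples that no block annotated fall back to the default label.
    return [lbl if lbl is not None else default_annotation for lbl in labels]


samples = [
    {"Toxicity": 0.98, "Text Quality": 4},  # matches both blocks; the first block wins
    {"Toxicity": 0.10, "Text Quality": 1},  # matches neither block; gets the default
    {"Toxicity": 0.20, "Text Quality": 5},  # matches only the second block
]
blocks: List[Block] = [
    ("bad", lambda s: s["Toxicity"] >= 0.96),
    ("good", lambda s: s["Text Quality"] >= 4),
]
print(run_pipeline(samples, blocks))  # ['bad', 'good', 'good']
```

Because the first sample is already labeled 'bad' by the first block, the second block never reconsiders it, which is why block order matters when you rearrange the file.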

Below, you will find a general explanation with examples for each type of annotation block. To fully understand the YAML mechanism and customize it for your data, it’s recommended to explore the UI, as described in the second part.

Properties-Based Annotation

Property scores play a crucial role in evaluating interactions. A property-based block has the following key options:

- Annotation: The label assigned to samples that meet the specified conditions.

- Relation Between Conditions: Determines whether all conditions (AND) or any condition (OR) must be satisfied.

- Operator: Defines how each condition is evaluated. For numerical properties, the operators are greater than (GT), greater than or equal to (GE), less than (LT), and less than or equal to (LE). For categorical properties, they are equality (EQ), inequality (NEQ), membership and non-membership in a set (IN, NIN), and overlap between sets (OVERLAP).

- Value: The specific value to which the operator is applied. For example, for the GE operator, the value can be seen as a threshold, while for the IN operator, you would provide a set or list of values.
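To make the options above concrete, here is a minimal Python sketch of how a property block could be evaluated against one sample. The operator semantics are our reading of the descriptions on this page, and two details are assumptions rather than documented Deepchecks behavior: OVERLAP is treated as "the property holds a collection that shares at least one item with the value", and a missing property never satisfies a condition.

```python
from typing import Any, Callable, Dict

# Assumed meaning of each operator: `actual` is the sample's property value and
# `value` is the threshold or list given in the YAML block.
OPERATORS: Dict[str, Callable[[Any, Any], bool]] = {
    "GT": lambda actual, value: actual > value,
    "GE": lambda actual, value: actual >= value,
    "LT": lambda actual, value: actual < value,
    "LE": lambda actual, value: actual <= value,
    "EQ": lambda actual, value: actual == value,
    "NEQ": lambda actual, value: actual != value,
    "IN": lambda actual, value: actual in value,
    "NIN": lambda actual, value: actual not in value,
    # Assumption: OVERLAP means the two collections share at least one item.
    "OVERLAP": lambda actual, value: bool(set(actual) & set(value)),
}


def condition_holds(sample: Dict[str, Any], cond: Dict[str, Any]) -> bool:
    actual = sample.get(cond["property_name"])
    if actual is None:
        return False  # assumption: a missing property never satisfies a condition
    return OPERATORS[cond["operator"]](actual, cond["value"])


def block_matches(sample: Dict[str, Any], block: Dict[str, Any]) -> bool:
    """Combine the block's conditions with AND (all must hold) or OR (any may hold)."""
    results = [condition_holds(sample, cond) for cond in block["conditions"]]
    if block["relation_between_conditions"] == "AND":
        return all(results)
    return any(results)


# Example 1 from this page, written as the dict a YAML loader would produce for that block.
example_1 = {
    "type": "property",
    "annotation": "bad",
    "relation_between_conditions": "OR",
    "conditions": [
        {"property_name": "Grounded in Context", "operator": "LE", "value": 0.1},
        {"property_name": "Toxicity", "operator": "GE", "value": 0.96},
        {"property_name": "PII Risk", "operator": "GT", "value": 0.5},
    ],
}
sample = {"Grounded in Context": 0.8, "Toxicity": 0.97, "PII Risk": 0.0}
print(block_matches(sample, example_1))  # True: the Toxicity condition alone suffices under OR
```

Switching `relation_between_conditions` to `AND` for the same sample returns `False`, since the Grounded in Context and PII Risk conditions are not met.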

Example 1: The block below labels a sample as 'bad' if it meets at least one of the property conditions (OR). For instance, if the output's 'Toxicity' score is greater than or equal to 0.96, the interaction is annotated as 'bad'.

```yaml
- type: property
  annotation: bad
  relation_between_conditions: OR
  conditions:
    - property_name: Grounded in Context
      operator: LE
      value: 0.1
    - property_name: Toxicity
      operator: GE
      value: 0.96
    - property_name: PII Risk
      operator: GT
      value: 0.5
```

Example 2: The block below labels a sample as 'bad' only if it meets both property conditions (AND): the output's 'Text Quality' score is less than or equal to 2, and the value of the column_name property is neither 'country' nor 'city' (NIN).

```yaml
- type: property
  annotation: bad
  relation_between_conditions: AND
  conditions:
    - property_name: Text Quality
      operator: LE
      value: 2
    - property_name: column_name
      operator: NIN
      value: ['country', 'city']
```

Similarity-Based Annotation

The similarity mechanism is useful for auto-annotating an evaluation set during regression testing. The similarity score ranges from 0 to 1 (1 meaning identical outputs) and is calculated between the output of a new sample and the outputs of previously annotated samples that share its user interaction id, if any exist.

Example 1: If a new output closely resembles a previously annotated output with the same user interaction id (a similarity score of 0.9 or higher), the new interaction copies that annotation.

```yaml
- type: similarity
  annotation: copy
  condition:
    operator: GE
    value: 0.9
```

Example 2: Using similarity to identify bad interactions. With the block below, an unannotated interaction whose output has a low similarity score (0.1 or lower) to a 'good' annotated example with the same user interaction id is labeled 'bad'.

```yaml
- type: similarity
  annotation: bad
  condition:
    operator: LE
    value: 0.1
```
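The two similarity examples can be sketched in Python as follows. This is a rough illustration under explicit assumptions, not how Deepchecks computes similarity: the score here is `difflib.SequenceMatcher.ratio()`, a character-level stand-in that also ranges from 0 to 1, and the hypothetical `annotated` store holds one previously annotated output per user interaction id.

```python
from difflib import SequenceMatcher
from typing import Dict, Optional, Tuple

# Hypothetical store: user interaction id -> (previously annotated output, its annotation).
Annotated = Dict[str, Tuple[str, str]]


def similarity(a: str, b: str) -> float:
    # Stand-in score in [0, 1] (1 = identical) using difflib's character-level ratio;
    # Deepchecks' actual similarity measure may differ.
    return SequenceMatcher(None, a, b).ratio()


def copy_block(interaction_id: str, output: str, annotated: Annotated,
               threshold: float = 0.9) -> Optional[str]:
    """Mirrors `annotation: copy` with `operator: GE`: reuse the earlier label if similar enough."""
    if interaction_id not in annotated:
        return None  # no earlier sample with this id, so the block leaves it unannotated
    ref_output, ref_label = annotated[interaction_id]
    return ref_label if similarity(output, ref_output) >= threshold else None


def bad_block(interaction_id: str, output: str, annotated: Annotated,
              threshold: float = 0.1) -> Optional[str]:
    """Mirrors `annotation: bad` with `operator: LE`, compared against a 'good' reference."""
    if interaction_id not in annotated:
        return None
    ref_output, ref_label = annotated[interaction_id]
    if ref_label == "good" and similarity(output, ref_output) <= threshold:
        return "bad"
    return None


annotated = {"q-17": ("Paris is the capital of France.", "good")}
print(copy_block("q-17", "Paris is the capital of France.", annotated))  # good
print(bad_block("q-17", "xyz", annotated))  # bad
print(copy_block("q-99", "anything", annotated))  # None
```

Returning `None` stands for "this block does not annotate the sample", so it would move on to the next block in the pipeline.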

Deepchecks Evaluator-Based Annotation

The last block is the Deepchecks Evaluator, a high-quality annotator that learns from your data.

```yaml
- type: Deepchecks Evaluator
```

Updating the Configuration YAML

-

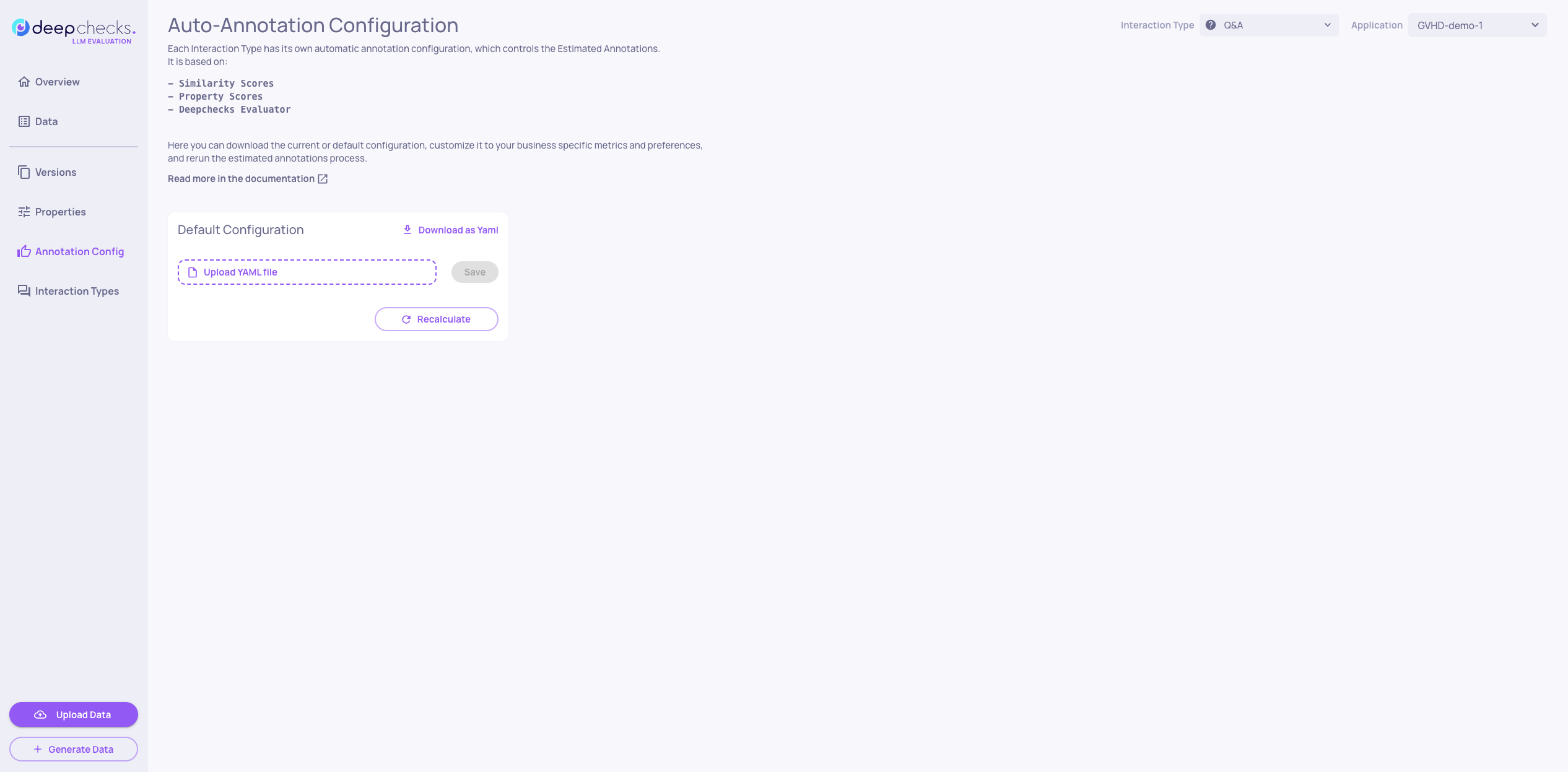

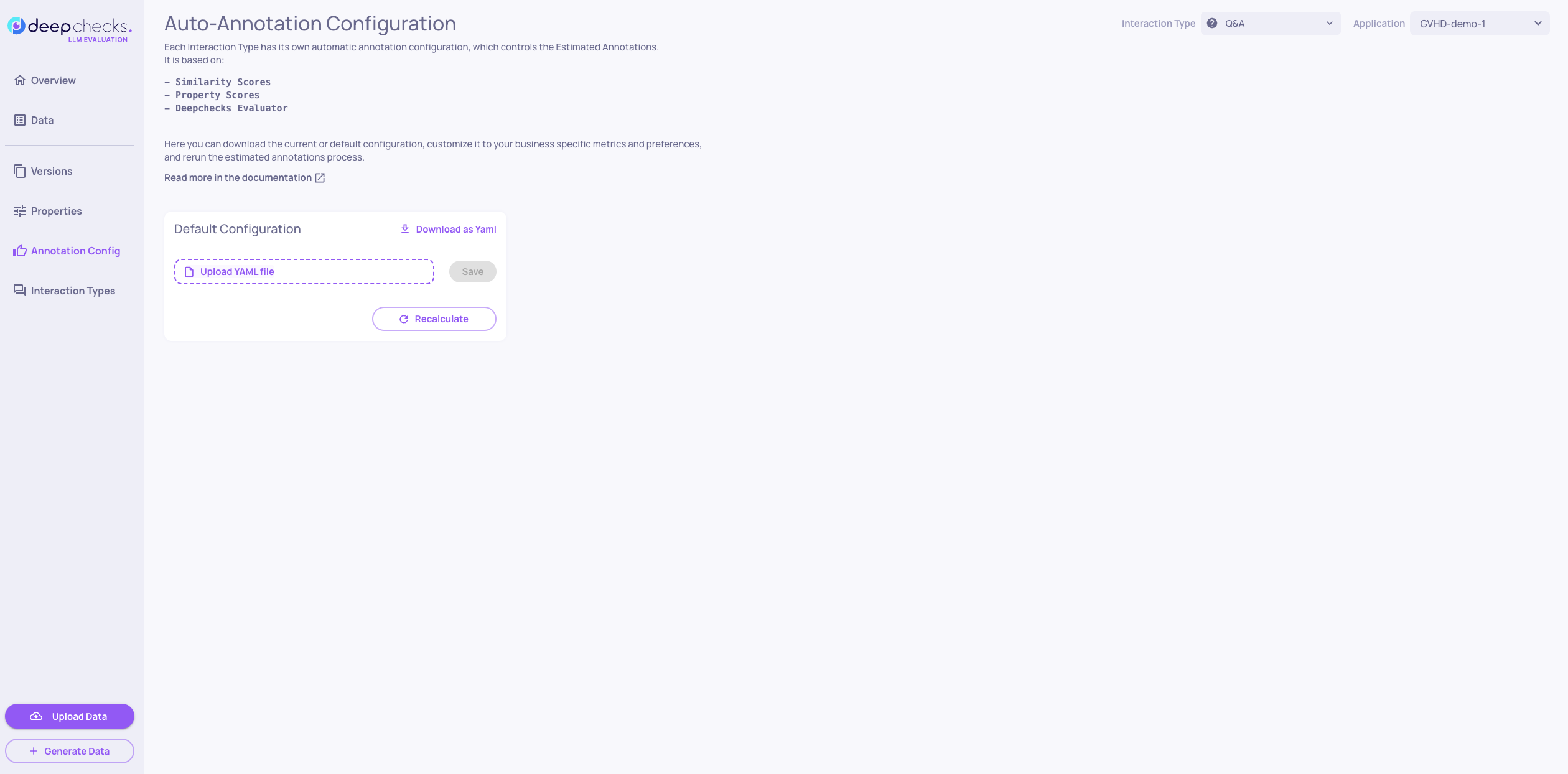

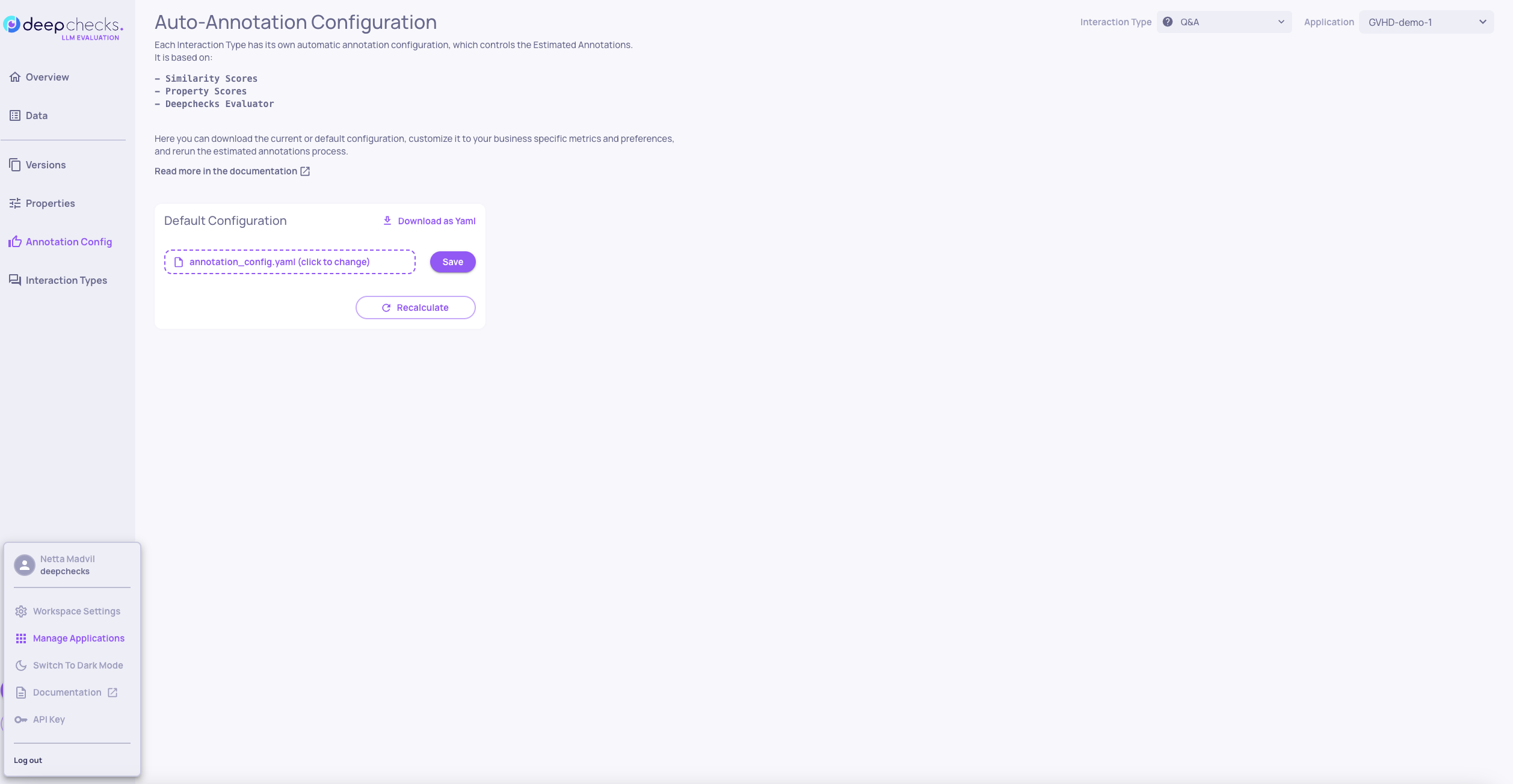

Download the existing YAML file. To do this, go to the 'Annotation Config' tab in the app and click on 'Download Current Configuration.' "Make sure to select the correct interaction type in the top-right corner. In this case, it should be 'Q&A.'"

- Modify the YAML file locally as needed. You can add or remove properties (including user-value properties), adjust thresholds, change the condition logic, reorder blocks, and more, tailoring the configuration to specific interaction types and workflows.

- Once done, upload your new YAML file and click 'Save'.

- Next, choose the version and environment in the app to recalculate the annotations based on the modified YAML, and decide whether to also retrain the Deepchecks Evaluator. This step does not affect user-provided annotations and does not incur any token usage.

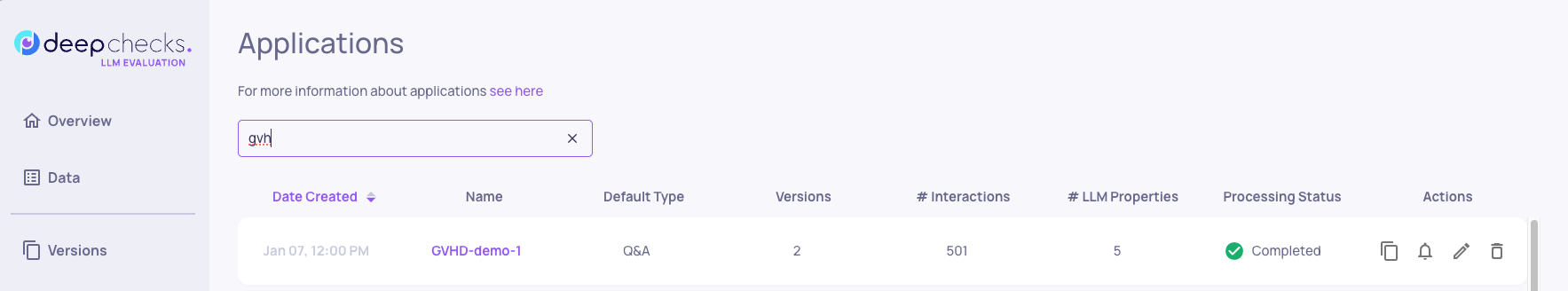

- Finally, wait for the new estimated annotations to be calculated. In the interaction type management view, the processing status changes from 'Pending' to 'Completed' once they are ready.
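Before uploading a locally modified file, a quick sanity check can catch typos such as an unknown operator or a scalar where IN/NIN expects a list. The Python sketch below is hypothetical: it only checks the block types, keys, and operators described on this page (the real configuration may accept more), and it assumes the blocks have already been loaded into a list of dicts, for example with PyYAML's `yaml.safe_load`. The top-level layout of the downloaded file is not shown here, so adapt the loading step to it.

```python
from typing import Any, Dict, List

# Hypothetical checks derived only from the keys and operators described on this page.
NUMERIC_OPS = {"GT", "GE", "LT", "LE"}
SET_OPS = {"IN", "NIN", "OVERLAP"}
ALL_OPS = NUMERIC_OPS | {"EQ", "NEQ"} | SET_OPS
BLOCK_TYPES = {"property", "similarity", "Deepchecks Evaluator"}


def validate_blocks(blocks: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable problems; an empty list means no issues were found."""
    problems: List[str] = []
    for i, block in enumerate(blocks):
        kind = block.get("type")
        if kind not in BLOCK_TYPES:
            problems.append(f"block {i}: unknown type {kind!r}")
            continue
        if kind == "property":
            if block.get("relation_between_conditions") not in {"AND", "OR"}:
                problems.append(f"block {i}: relation_between_conditions must be AND or OR")
            conditions = block.get("conditions") or []
            if not conditions:
                problems.append(f"block {i}: property block needs at least one condition")
            for j, cond in enumerate(conditions):
                op = cond.get("operator")
                if op not in ALL_OPS:
                    problems.append(f"block {i}, condition {j}: unknown operator {op!r}")
                elif op in SET_OPS and not isinstance(cond.get("value"), (list, tuple, set)):
                    problems.append(f"block {i}, condition {j}: {op} expects a list value")
        elif kind == "similarity":
            cond = block.get("condition") or {}
            # Assumption: similarity is a 0-1 score, so only numeric comparisons make sense.
            if cond.get("operator") not in NUMERIC_OPS:
                problems.append(f"block {i}: similarity operator must be GT, GE, LT or LE")
            value = cond.get("value")
            if not isinstance(value, (int, float)) or not 0 <= value <= 1:
                problems.append(f"block {i}: similarity value must be between 0 and 1")
    return problems


blocks = [
    {"type": "property", "annotation": "bad", "relation_between_conditions": "AND",
     "conditions": [{"property_name": "Text Quality", "operator": "LE", "value": 2},
                    {"property_name": "column_name", "operator": "NIN", "value": "city"}]},
    {"type": "similarity", "annotation": "copy", "condition": {"operator": "GE", "value": 0.9}},
    {"type": "Deepchecks Evaluator"},
]
for problem in validate_blocks(blocks):
    print(problem)  # block 0, condition 1: NIN expects a list value
```

A clean result from a check like this does not guarantee that the app will accept the file; it only narrows down common editing mistakes before you upload.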
