Cost Tracking

Automatic LLM cost tracking and analysis across interactions, sessions, and versions based on configured model pricing

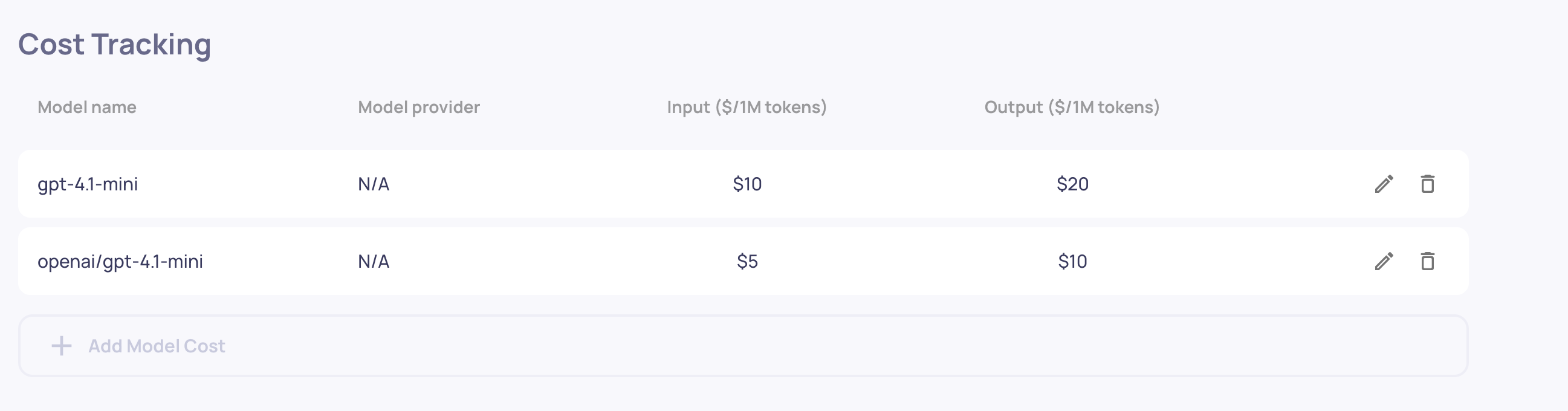

Overview

Cost tracking provides automatic visibility into your LLM spending across all interactions. By configuring model pricing once at the organization level, Deepchecks calculates costs for every interaction based on token usage, giving you insights from individual requests to application-wide trends. This helps you identify expensive patterns, compare cost-efficiency between model versions, and make data-driven decisions about model selection and prompt optimization.

How It Works

Cost tracking runs automatically for all interactions once model pricing is configured. When you log interactions or spans with input_tokens, output_tokens, and model fields, Deepchecks matches the model name against your configured pricing and calculates costs.

All pricing is configured in USD per 1 million tokens, and costs are calculated with 6 decimal places of precision for accuracy.
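As a worked example of that arithmetic, the sketch below prices each side of an interaction as tokens ÷ 1,000,000 × the per-million price and then sums the two sides. The function name, the use of Python's decimal module, and half-up rounding to 6 places are assumptions made for illustration; this page does not specify the exact rounding rule Deepchecks applies.

```python
from decimal import Decimal, ROUND_HALF_UP

# Six decimal places, matching the documented precision.
PRECISION = Decimal("0.000001")
# Prices are quoted in USD per 1 million tokens.
TOKENS_PER_UNIT = Decimal(1_000_000)


def compute_cost(input_tokens, output_tokens, input_price, output_price):
    """Hypothetical helper: return (input_cost, output_cost, total_cost) in USD.

    input_price and output_price are USD per 1M tokens. Rounding each side
    with ROUND_HALF_UP before summing is an assumption.
    """
    # Converting via str() keeps binary float error out of the Decimal values.
    in_price = Decimal(str(input_price))
    out_price = Decimal(str(output_price))
    input_cost = (Decimal(input_tokens) / TOKENS_PER_UNIT * in_price).quantize(
        PRECISION, rounding=ROUND_HALF_UP)
    output_cost = (Decimal(output_tokens) / TOKENS_PER_UNIT * out_price).quantize(
        PRECISION, rounding=ROUND_HALF_UP)
    return input_cost, output_cost, input_cost + output_cost
```

With placeholder prices of $2.50 (input) and $10.00 (output) per million tokens, 1,200 input tokens and 350 output tokens come to $0.003000 + $0.003500 = $0.006500.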

Configuration

Navigate to Workspace Settings → Cost Tracking to configure model pricing. You'll need:

- Model Name (required) - Must exactly match the model name in your logged data

- Model Provider (optional) - e.g., "OpenAI", "Anthropic", "Azure" - provides additional matching accuracy

- Input Token Price (required) - Price in USD per 1 million prompt tokens

- Output Token Price (required) - Price in USD per 1 million completion tokens

Click Add Model Cost to create a new entry. You can edit or delete existing configurations at any time. Configuration changes apply immediately to all new interactions. Note that, under Deepchecks' access control, only organization Admins and Owners can configure model pricing.

Model Name Matching

Cost calculation requires an exact match (case-insensitive) between the model name in your configuration and the model name logged in your interactions or spans. For example:

- ✅ Configuration: "gpt-4" matches logged data model: "gpt-4" or model: "GPT-4"

- ❌ Configuration: "gpt-3.5" does NOT match logged data model: "gpt-3.5-turbo"

Provider Field: If you use the same model name across different providers (e.g., "gpt-4" from both OpenAI and Azure), add the provider to disambiguate. Deepchecks will:

- First try to match both model name and provider

- Fall back to matching just the model name if no provider was specified

- Return no cost if no match is found

This ensures accurate cost calculation even when using multiple providers.
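The matching rules above can be sketched as a lookup over the configured entries. This is one reading of the documented behavior, not Deepchecks' code: PricingEntry, find_pricing, and the sample prices are hypothetical placeholders, "fall back" is interpreted as using an entry configured without a provider, and comparing providers case-insensitively is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PricingEntry:
    """Hypothetical representation of one row in Cost Tracking settings."""
    model_name: str
    provider: Optional[str]  # optional field; None when left blank
    input_price: float       # USD per 1M input tokens
    output_price: float      # USD per 1M output tokens


def _norm(value: Optional[str]) -> Optional[str]:
    # Case-insensitive exact match: lowercase only, no trimming or prefix logic.
    return value.lower() if value is not None else None


def find_pricing(entries: List[PricingEntry], model: str,
                 provider: Optional[str] = None) -> Optional[PricingEntry]:
    """Return the entry used to price a logged model/provider pair, or None."""
    candidates = [e for e in entries if _norm(e.model_name) == _norm(model)]
    # 1. Prefer an entry where both model name and provider match.
    if provider is not None:
        for entry in candidates:
            if entry.provider is not None and _norm(entry.provider) == _norm(provider):
                return entry
    # 2. Fall back to an entry for this model configured without a provider.
    for entry in candidates:
        if entry.provider is None:
            return entry
    # 3. No match: the interaction is left without a cost.
    return None


# Placeholder prices for illustration only, not real rates.
pricing = [
    PricingEntry("gpt-4", "OpenAI", 1.0, 2.0),
    PricingEntry("gpt-4", "Azure", 3.0, 4.0),
    PricingEntry("claude-3-opus", None, 5.0, 6.0),
]
```

Here find_pricing(pricing, "GPT-4", "Azure") returns the Azure entry, while find_pricing(pricing, "gpt-3.5-turbo") returns None because no configured name is an exact match.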

Viewing Costs

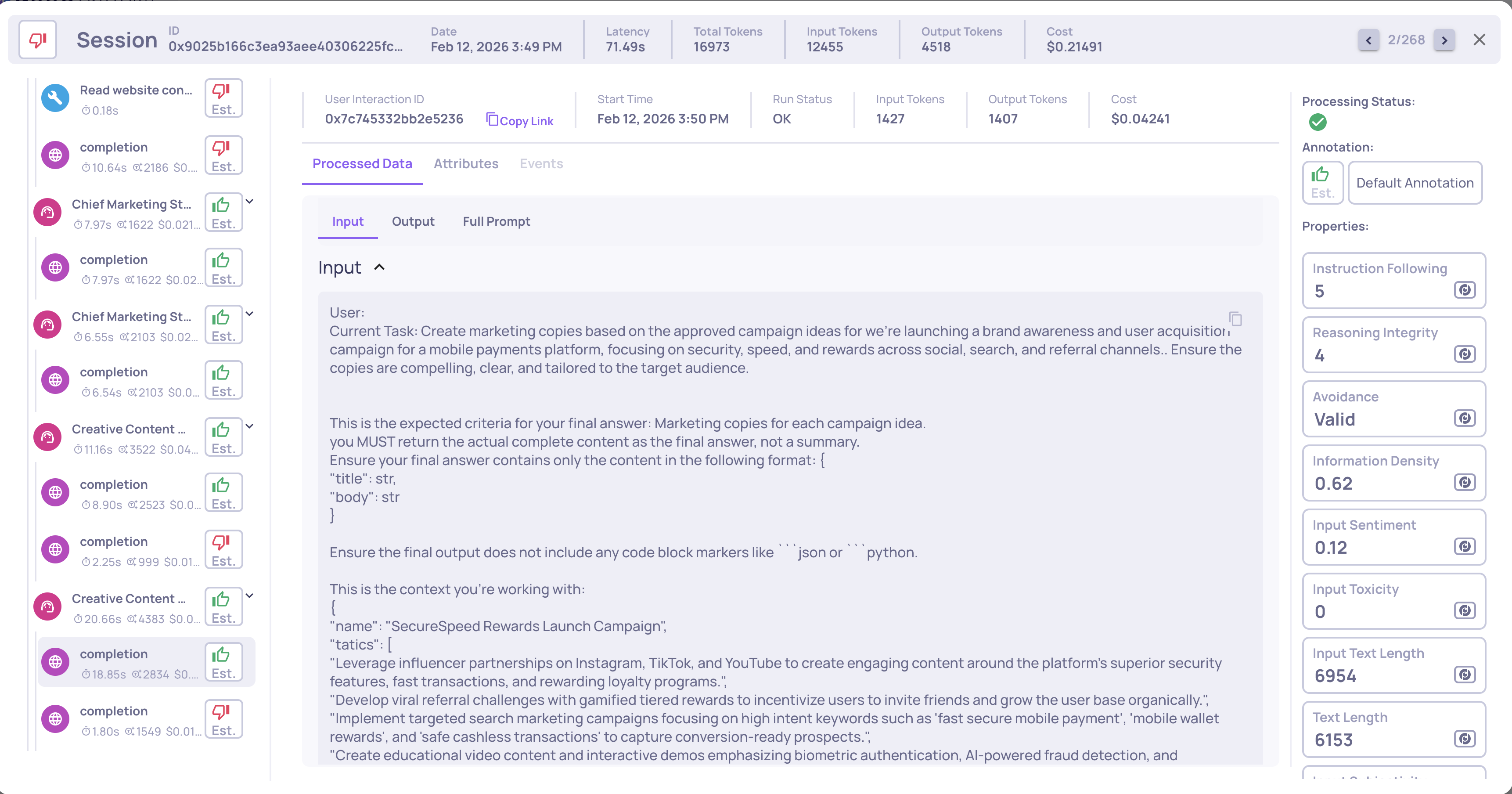

Interaction Level

Costs appear on individual interaction details:

- System Metrics Section - Shows total cost with 6 decimal precision (e.g., $0.000123)

- Input/Output Breakdown - Separate input_cost and output_cost values available in the data

- Filtering - Use the interactions table filters to find interactions by cost range

Interactions without matching model configurations or missing token data will not show cost information.

For multi-step agentic flows using spans, costs are automatically aggregated:

- LLM Spans - Direct token usage costs calculated from input_tokens and output_tokens

- Parent Spans (CHAIN, AGENT, TOOL, RETRIEVAL) - Aggregate costs from all descendant LLM spans

- Root Interactions - Total cost includes all nested span costs

This provides accurate end-to-end cost tracking for complex agent workflows.
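The roll-up described above amounts to a recursive sum over the span tree. In this sketch SpanNode is a hypothetical stand-in, not the SDK's Span class, and each LLM span's cost is assumed to have been computed already from its token counts.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import List, Optional


@dataclass
class SpanNode:
    """Hypothetical in-memory span; not the Deepchecks SDK Span class."""
    span_type: str                  # e.g. "LLM", "CHAIN", "AGENT", "TOOL", "RETRIEVAL"
    cost: Optional[Decimal] = None  # only LLM spans carry a direct cost
    children: List["SpanNode"] = field(default_factory=list)


def aggregated_cost(span: SpanNode) -> Decimal:
    """Cost of a span plus the costs of all its descendant LLM spans."""
    own = span.cost if span.span_type == "LLM" and span.cost is not None else Decimal("0")
    return own + sum((aggregated_cost(child) for child in span.children), Decimal("0"))


# Example trace: an agent that calls an LLM directly and once more inside a tool.
root = SpanNode("AGENT", children=[
    SpanNode("LLM", cost=Decimal("0.002500")),
    SpanNode("TOOL", children=[SpanNode("LLM", cost=Decimal("0.001000"))]),
    SpanNode("RETRIEVAL"),
])
```

For this tree, aggregated_cost(root) is 0.003500: the TOOL span contributes its nested LLM cost, and the RETRIEVAL span, having no LLM descendants, contributes nothing.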

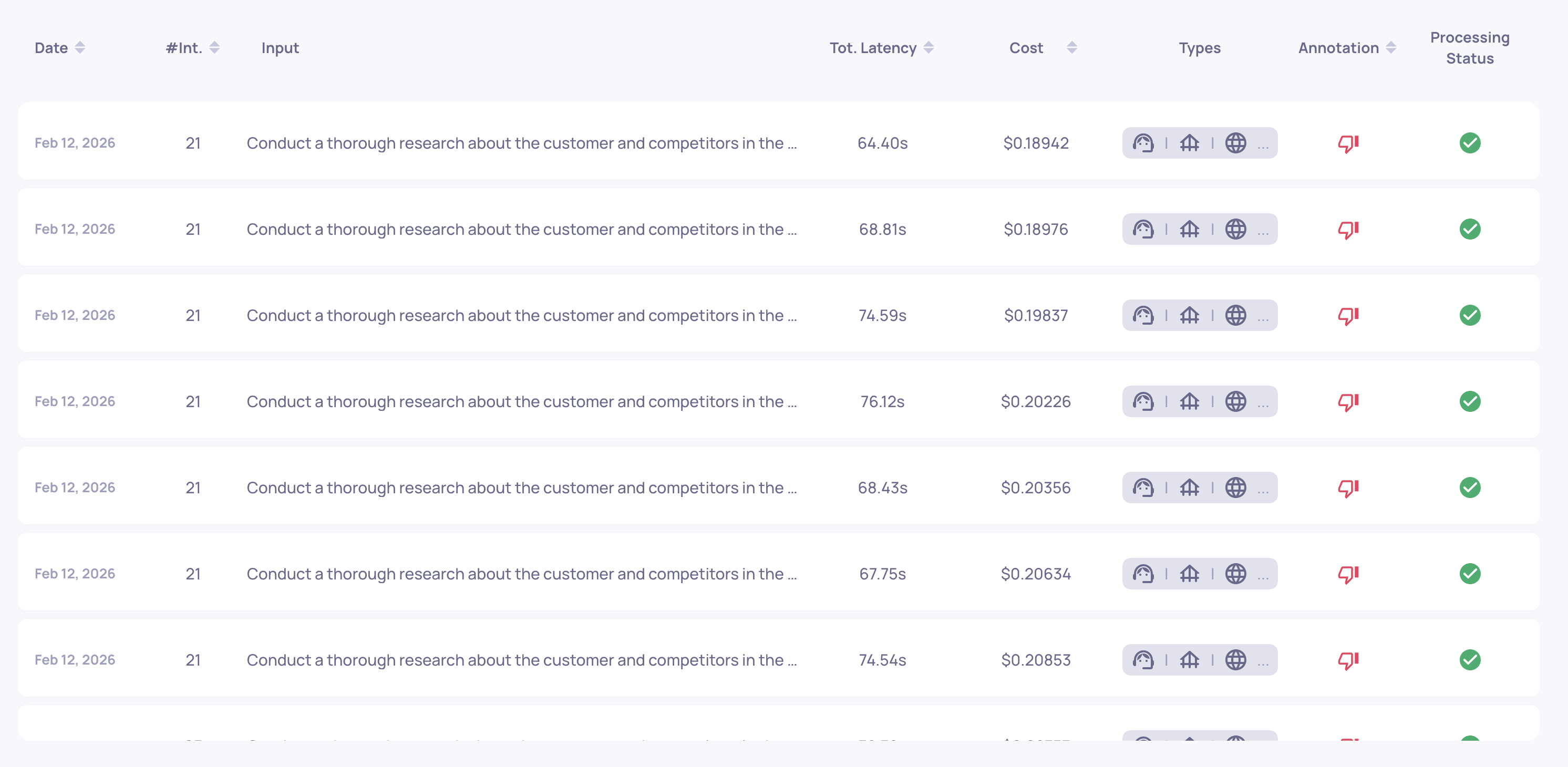

Session Level

The Sessions view displays aggregated costs:

- Session Cost - Sum of all interaction costs within the session

- Token Metrics - Session-level input tokens, output tokens, and total tokens alongside cost

This helps identify expensive conversation flows or user sessions.
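A session's figures are sums over its interactions. The sketch below assumes interactions are plain dicts with hypothetical keys; treating a missing cost as zero and defining total tokens as input plus output are assumptions, not documented behavior.

```python
from collections import defaultdict
from decimal import Decimal


def session_metrics(interactions):
    """Aggregate per-session cost and token counts.

    Each interaction is a dict with hypothetical keys session_id, cost
    (Decimal or None), input_tokens and output_tokens.
    """
    sessions = defaultdict(lambda: {"cost": Decimal("0"), "input_tokens": 0,
                                    "output_tokens": 0, "total_tokens": 0})
    for row in interactions:
        s = sessions[row["session_id"]]
        # Assumption: an interaction with no cost contributes 0 to the session cost.
        s["cost"] += row.get("cost") or Decimal("0")
        s["input_tokens"] += row.get("input_tokens") or 0
        s["output_tokens"] += row.get("output_tokens") or 0
        # Assumption: total tokens = input tokens + output tokens.
        s["total_tokens"] = s["input_tokens"] + s["output_tokens"]
    return dict(sessions)
```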

Version Level

The Configuration → Versions table shows version-level cost metrics:

- Total Cost - Sum of all interaction costs for the version

- Average Cost - Mean cost per interaction

- Cost Comparison - Compare costs across versions to evaluate optimization efforts

Both PROD and EVAL environments display separate cost metrics, allowing you to assess production spending versus evaluation costs.
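Version-level totals and averages follow the same pattern, with PROD and EVAL kept in separate buckets. The dict keys and function name are hypothetical, and the sketch assumes the average is taken only over interactions that actually have a cost.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_HALF_UP

PRECISION = Decimal("0.000001")  # 6 decimal places, as for interaction costs


def version_cost_metrics(interactions):
    """Total and average cost per (version, environment) pair.

    Rows are dicts with hypothetical keys version, env ("PROD" or "EVAL")
    and cost (Decimal or None). Rows without a cost are excluded entirely.
    """
    buckets = defaultdict(list)
    for row in interactions:
        if row.get("cost") is not None:
            buckets[(row["version"], row["env"])].append(row["cost"])
    result = {}
    for key, costs in buckets.items():
        total = sum(costs, Decimal("0"))
        average = (total / len(costs)).quantize(PRECISION, rounding=ROUND_HALF_UP)
        result[key] = {"total_cost": total, "average_cost": average, "count": len(costs)}
    return result
```

Comparing result[("v1", "PROD")] with result[("v2", "PROD")] is the kind of side-by-side view the Versions table provides for judging whether a change reduced spend.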

Requirements

To enable cost tracking, your logged data must include:

- model - The model name (e.g., "gpt-4", "claude-3-opus")

- input_tokens - Number of input/prompt tokens used

- output_tokens - Number of output/completion tokens generated

- model_provider (optional) - Provider name for disambiguation

These fields can be included in both the SDK's log_interaction() method and Span objects for trace-based logging. For CSV uploads, include columns with these exact names.
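Before a CSV upload, a quick local check can flag rows that will end up without cost information. This sketch only inspects the cost-related columns listed above; any other columns an upload needs are out of scope here, the function name is hypothetical, and a row that passes still gets no cost unless its model matches a configured price.

```python
import csv
import io

# Column names from the Requirements list; model_provider is optional.
REQUIRED_COLUMNS = ("model", "input_tokens", "output_tokens")


def split_costable_rows(csv_text):
    """Return (rows with cost data, rows that will show no cost).

    Raises ValueError if a required cost column is missing from the header.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [c for c in REQUIRED_COLUMNS if c not in header]
    if missing:
        raise ValueError(f"missing cost columns: {missing}")
    costable, no_cost = [], []
    for row in reader:
        model = (row.get("model") or "").strip()
        # Assumption: a token count must be a non-negative integer to be usable.
        tokens_ok = all((row.get(c) or "").strip().isdigit()
                        for c in ("input_tokens", "output_tokens"))
        (costable if model and tokens_ok else no_cost).append(row)
    return costable, no_cost
```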
