Deepchecks LLM Evaluation

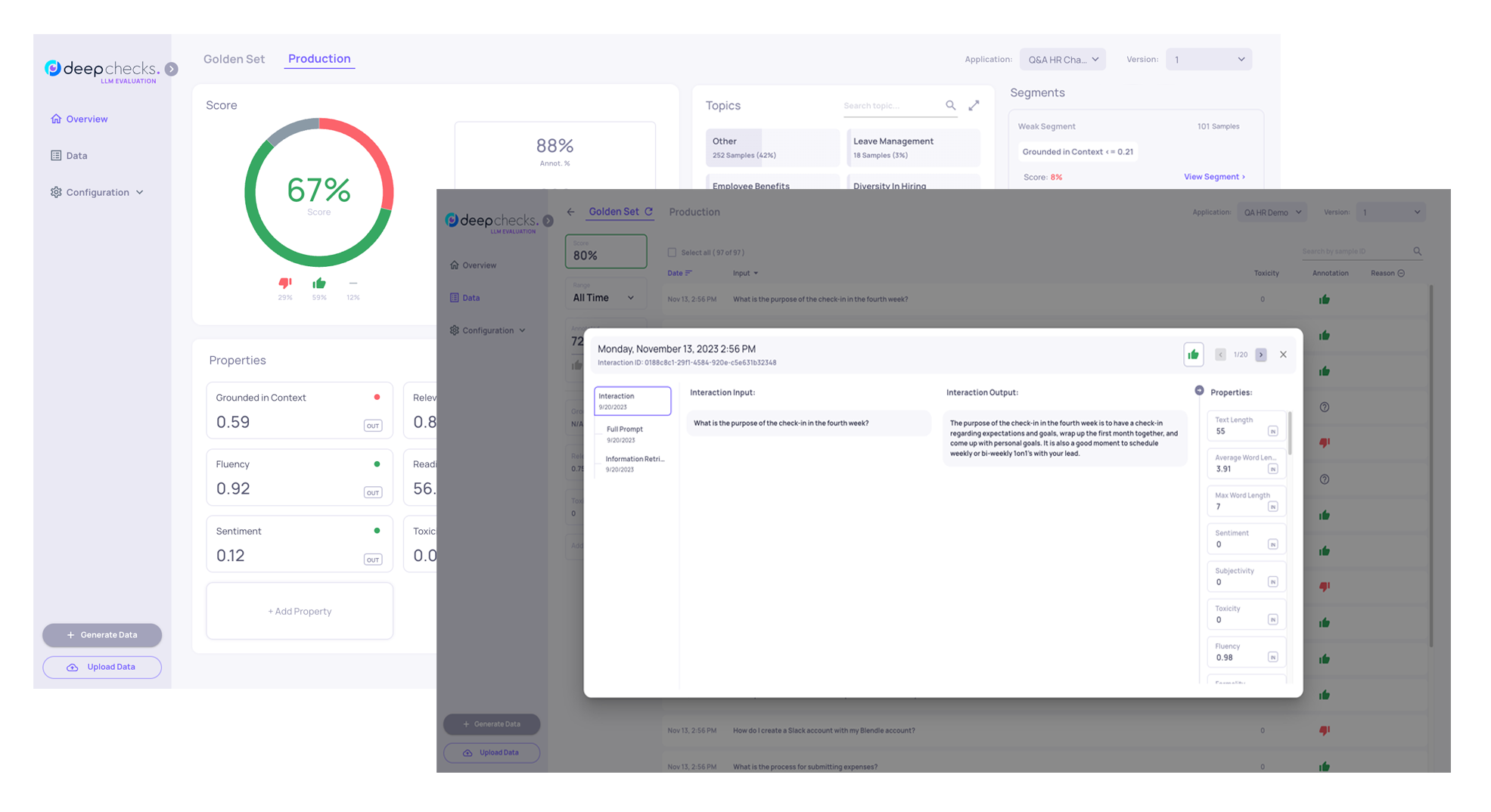

If you need to evaluate your LLM-based apps by understanding their performance, finding where they fail, identifying and mitigating pitfalls, and automatically annotating your data, you are in the right place!

Click here to Get Started With Deepchecks Hands-on (with Demo Data)

What is Deepchecks?

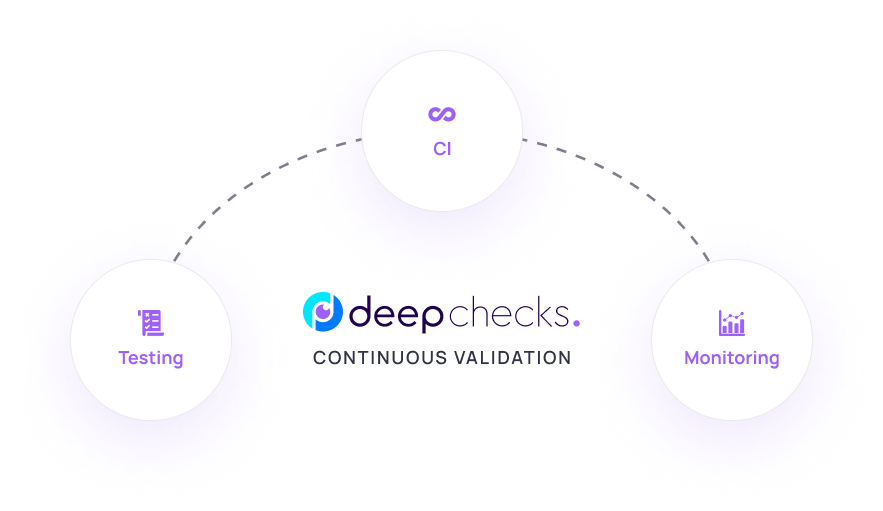

Deepchecks is a comprehensive solution for AI validation. It helps you make sure your models and AI applications work properly from research, through deployment, and during production.

Deepchecks has the following offerings:

- Deepchecks LLM Evaluation (you are here) - for testing, validating and monitoring LLM-based apps

- Deepchecks Testing Package - for tests during research and model development for tabular and unstructured data

- Deepchecks Monitoring - for tests and continuous monitoring during production, for tabular and unstructured data

Deepchecks for LLM Evaluation

With Deepchecks you can continuously validate LLM-based applications, including their characteristics, performance metrics, and potential pitfalls, throughout the entire lifecycle, from pre-deployment and internal experimentation to production.

- If you don't yet have access to the system, click here to sign up

- Get started with Deepchecks and upload your data for evaluation

- Check out our Core Features, explore the concept of Properties at the core of the system, or browse our various cookbooks.
