[Old] Similarity-based Comparison and Annotation

In this section we'll inspect Deepchecks' similarity-based capabilities.

For every version uploaded to the system, Deepchecks calculates a similarity score between 0 and 1 for each pair of corresponding interactions.

Similarity Score

The similarity score is calculated between the outputs of every two interactions that share the same user_interaction_id across both versions. A shared user_interaction_id indicates that the two interactions have the same inputs.
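The pairing rule above can be sketched in a few lines of Python. This is only an illustration: the text does not specify how Deepchecks computes the score, so the example uses `difflib.SequenceMatcher` from the standard library as a stand-in that also yields a value between 0 and 1. The function names and the dictionary layout of an interaction are assumptions made for this sketch, not part of the Deepchecks SDK.

```python
from difflib import SequenceMatcher


def similarity_score(output_a: str, output_b: str) -> float:
    """Stand-in similarity in [0, 1]. NOT Deepchecks' actual algorithm."""
    return SequenceMatcher(None, output_a, output_b).ratio()


def pair_and_score(version_a: list, version_b: list) -> dict:
    """Score only interactions that share a user_interaction_id in both versions.

    Each interaction is a hypothetical dict with the keys
    "user_interaction_id" and "output".
    """
    outputs_b = {row["user_interaction_id"]: row["output"] for row in version_b}
    scores = {}
    for row in version_a:
        uid = row["user_interaction_id"]
        if uid in outputs_b:  # same id means same inputs in both versions
            scores[uid] = similarity_score(row["output"], outputs_b[uid])
    return scores


v1 = [
    {"user_interaction_id": "q-1", "output": "Paris is the capital of France."},
    {"user_interaction_id": "q-2", "output": "The invoice is due in 30 days."},
]
v2 = [
    {"user_interaction_id": "q-1", "output": "Paris is the capital of France."},
    {"user_interaction_id": "q-3", "output": "No matching interaction in v1."},
]

scores = pair_and_score(v1, v2)
print(scores)
```

Only "q-1" appears in both versions, so it is the only interaction that receives a score. Interactions without a counterpart are skipped rather than scored against unrelated outputs.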

We will demonstrate here:

- How you can explore Deepchecks' similarity-based scoring, and how it functions within the Deepchecks auto-annotation pipeline.

- How to manually update the annotations based on the similarity-based recommendation.

Similarity-Based Scoring

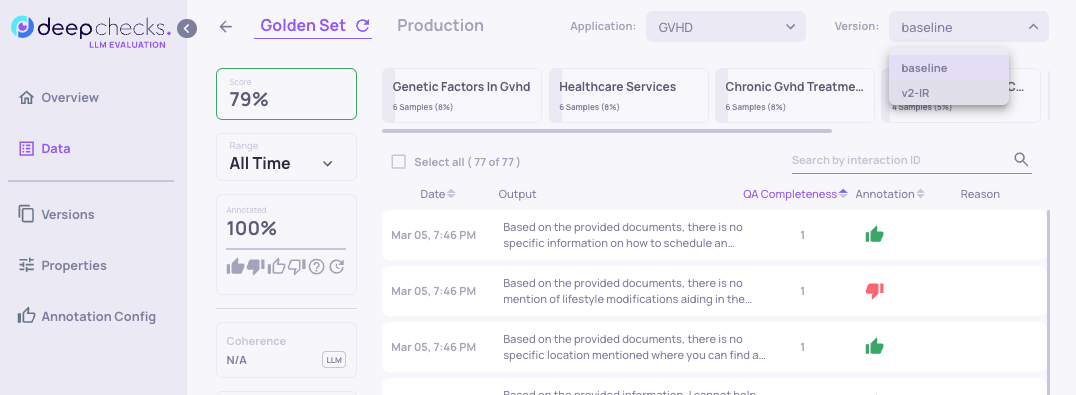

- Navigate to the "Data" page.

- Select the "v2-IR" version.

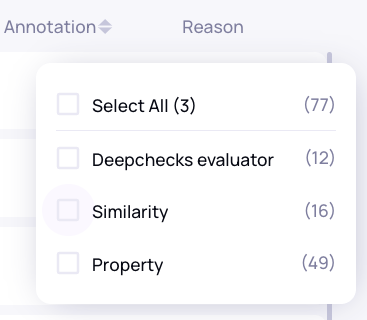

- Filter on the scoring "Reason", and choose "Similarity".

We can now see all interactions whose annotations were copied because of a high similarity score, and compare their outputs using the "View Similar Response" link.

The threshold for what is considered "similar" for the auto-annotation pipeline is customizable, and can be changed in the auto-annotation configuration.
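As a rough mental model of how such a threshold could drive annotation copying, consider the sketch below. It is an assumption-laden illustration, not the Deepchecks implementation: the threshold value, the inclusive comparison, the function name, and the shape of the returned record are all hypothetical.

```python
from typing import Optional

# Hypothetical value; the real threshold is set in the auto-annotation configuration.
SIMILARITY_THRESHOLD = 0.9


def propagate_annotation(
    previous_annotation: Optional[str],
    score: float,
    threshold: float = SIMILARITY_THRESHOLD,
) -> Optional[dict]:
    """Copy an annotation from the matching interaction when outputs are similar enough.

    Returns None when there is nothing to copy or the score is below the threshold,
    leaving the interaction for other annotation steps.
    """
    if previous_annotation is None or score < threshold:
        return None
    return {
        "annotation": previous_annotation,
        "reason": "Similarity",  # the value filtered on in the "Reason" column
        "estimated": True,       # copied annotations remain estimated, not user-made
    }


print(propagate_annotation("good", 0.95))
print(propagate_annotation("good", 0.50))
```

Raising the threshold makes the pipeline copy fewer annotations but with higher confidence that the two outputs really match. Lowering it increases coverage at the cost of more copied annotations that may need manual review.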

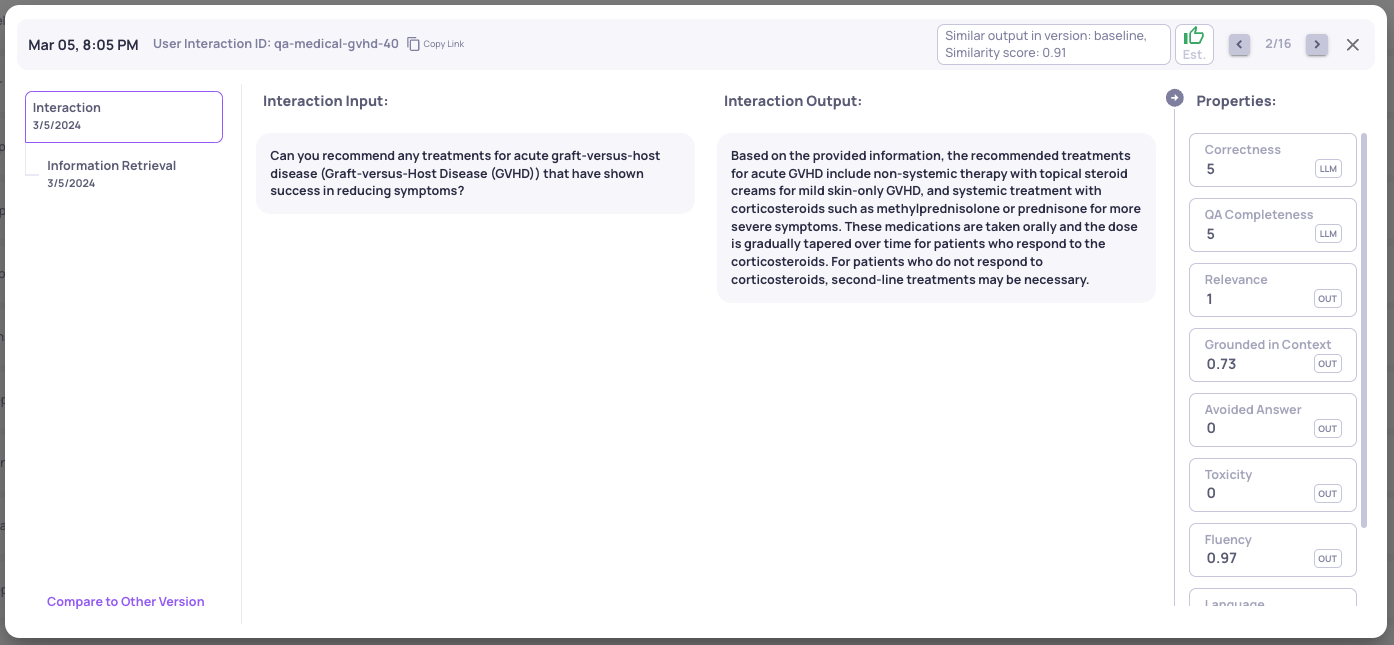

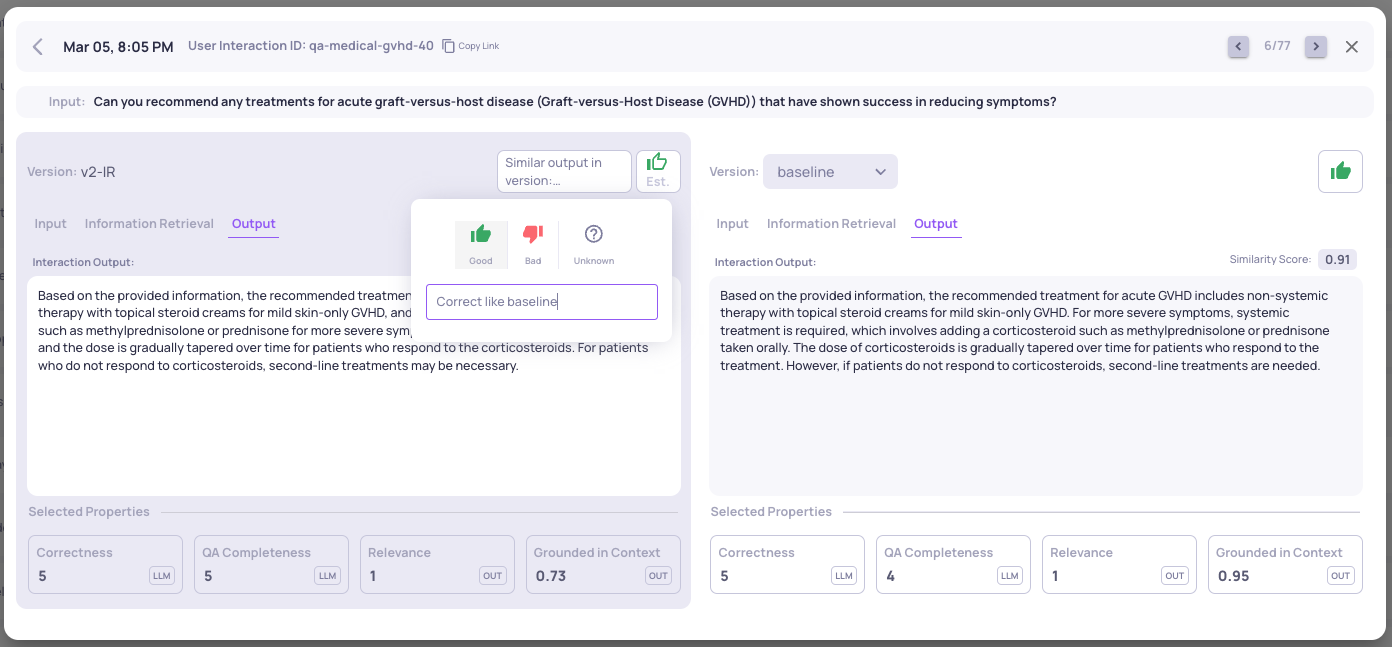

Interaction Comparison View

This view enables browsing (using the arrows on the top right) through all of the filtered interactions in the "Data" page, and comparing the interactions' different steps.

You can also reach the interaction comparison dialog by clicking on the "Compare to Other Version" button on the bottom left of the Interaction view, for any chosen interaction.

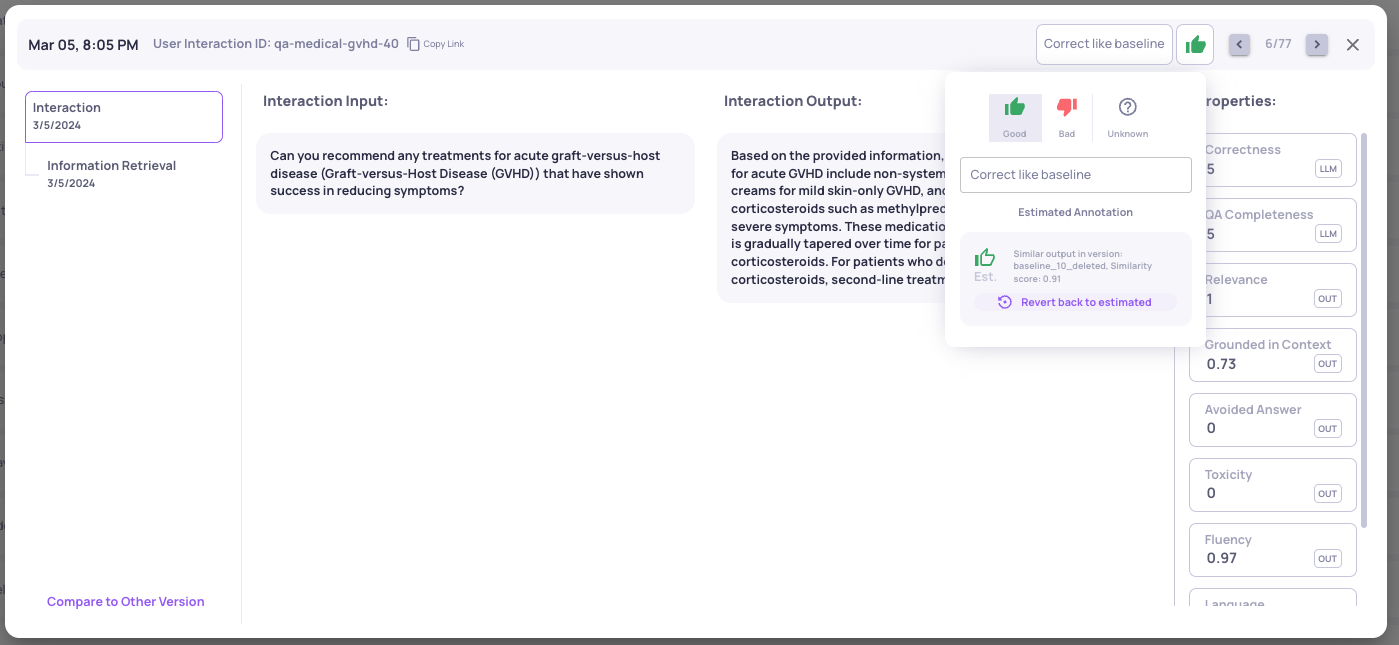

### Updating Estimated Annotations to User Annotations

Now, we can click the "👍 Est." icon, and choose the "Good" annotation, with an optional "Reason", to immediately convert the "estimated annotation" to a user annotation.


The original estimated annotation is still viewable by clicking the annotation again.

You can annotate interactions and inspect their estimated annotations from several places: directly from the "Data" page, from the single interaction view, or from the interaction comparison view.
