[Old] Identify Problems Using the Properties and Estimated Annotations

In this section, we'll identify problematic LLM behaviors using the "Grounded in Context", "Retrieval Relevance", and "Relevance" properties.

We'll also look at the "Avoided Answer" property. Avoided answers are sometimes the reason behind these findings, so filtering them out can help pinpoint the actual issues.

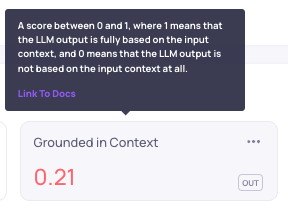

To see more information about a property, hover over its name in the UI.

If a property isn't visible, add it using "Add Property" on the Overview page or "Add property filter" on the Data page.

Identifying and Annotating Problematic Samples based on Properties


Go to the Data page and filter for interactions annotated as "bad" or "estimated bad" by selecting the thumbs-down filters on the side of the page.
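If you also export interactions for offline analysis, the same filter takes only a few lines. The following is a minimal sketch that assumes each interaction is a plain dict with a hypothetical `annotation` field holding values such as `"bad"` or `"estimated_bad"`. These field names and values are illustrative and are not the Deepchecks export schema.

```python
from typing import Any

# Hypothetical annotation labels; the real export format may differ.
BAD_LABELS = {"bad", "estimated_bad"}


def filter_bad(interactions: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep only interactions annotated (manually or automatically) as bad."""
    return [i for i in interactions if i.get("annotation") in BAD_LABELS]


interactions = [
    {"id": "a1", "annotation": "good"},
    {"id": "a2", "annotation": "bad"},
    {"id": "a3", "annotation": "estimated_bad"},
    {"id": "a4", "annotation": "estimated_good"},
]
print([i["id"] for i in filter_bad(interactions)])  # ['a2', 'a3']
```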


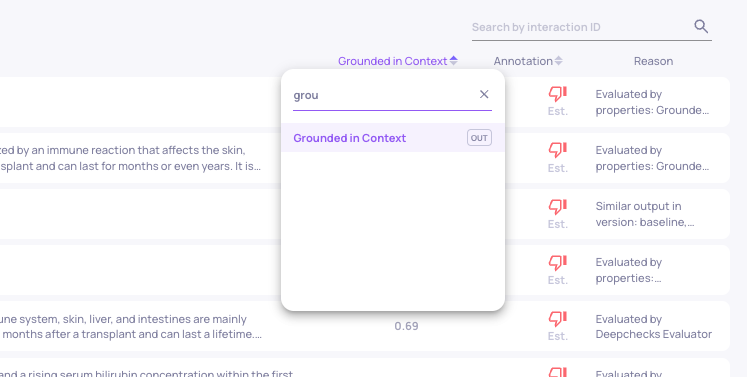

Choose the "Grounded in Context" property and sort the interactions from low to high.

A few interactions have a very low "Grounded in Context" score.
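The low-to-high sort can be reproduced offline as well. This sketch assumes a hypothetical export format in which each interaction carries a `properties` dict keyed by the property names shown in the UI. Interactions with no score for the chosen property are placed last.

```python
from typing import Any


def sort_by_property(interactions: list[dict[str, Any]], prop: str) -> list[dict[str, Any]]:
    """Order interactions from the lowest to the highest score for `prop`.

    Interactions that have no value for `prop` are placed at the end.
    """
    def key(interaction: dict[str, Any]) -> tuple[bool, float]:
        score = interaction["properties"].get(prop)
        # False sorts before True, so missing scores end up last.
        return (score is None, score if score is not None else 0.0)

    return sorted(interactions, key=key)


interactions = [
    {"id": "a1", "properties": {"Grounded in Context": 0.92}},
    {"id": "a2", "properties": {"Grounded in Context": 0.08}},
    {"id": "a3", "properties": {}},
    {"id": "a4", "properties": {"Grounded in Context": 0.35}},
]
ranked = sort_by_property(interactions, "Grounded in Context")
print([i["id"] for i in ranked])  # ['a2', 'a4', 'a1', 'a3']
```

Passing `"Retrieval Relevance"` or `"Relevance"` as `prop` ranks the interactions by those properties instead.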

Select one of these interactions. The annotation reason shows that it was annotated as bad because of its low "Grounded in Context" score. This auto-annotation rule is configurable in the Auto-annotation configuration YAML.
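The actual rules are defined in the Auto-annotation configuration YAML. The Python sketch below only mimics the general idea of a threshold rule that assigns an estimated annotation together with a reason. The `0.5` threshold, the `"estimated_bad"` label, and the reason text are hypothetical placeholders, not Deepchecks defaults or its YAML schema.

```python
from typing import Any, Optional

# Hypothetical rule parameters; not Deepchecks defaults.
PROPERTY = "Grounded in Context"
THRESHOLD = 0.5


def estimate_annotation(interaction: dict[str, Any]) -> tuple[Optional[str], Optional[str]]:
    """Return (estimated label, reason), or (None, None) if the rule does not fire."""
    score = interaction["properties"].get(PROPERTY)
    if score is not None and score < THRESHOLD:
        return "estimated_bad", f"{PROPERTY} score {score:.2f} is below {THRESHOLD}"
    return None, None


print(estimate_annotation({"properties": {"Grounded in Context": 0.12}}))
# ('estimated_bad', 'Grounded in Context score 0.12 is below 0.5')
```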

Inspect the data to check whether the "Interaction Output" is actually based on the "Information Retrieval" data, which is the context provided to the LLM.

Browse through additional interactions with low scores.

Note that in the following gif, we first add the "Grounded in Context" property to the side view so that its values are visible on the right side of the interaction view without scrolling.


Repeat the same steps with the "Retrieval Relevance" and "Relevance" properties to evaluate how relevant the retrieved information is to the input, and how relevant the output is to the input.


You may find that some interactions receive low scores because the LLM avoided answering.

To filter out this behavior, apply a filter on the "Avoided Answer" property in the Data page so that only interactions with low "Avoided Answer" scores are shown. This helps separate avoided answers from other sources of the problem.
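Offline, the same two steps (exclude avoided answers, then rank by "Grounded in Context") can be sketched as follows. This again assumes a hypothetical export format with a `properties` dict per interaction. The `0.5` cutoff is an illustrative assumption, not a Deepchecks default; interactions without an "Avoided Answer" score are kept.

```python
from typing import Any

# Hypothetical cutoff: a score below it is treated as "the LLM did not avoid answering".
AVOIDED_ANSWER_MAX = 0.5


def exclude_avoided(interactions: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep interactions whose 'Avoided Answer' score is below the cutoff.

    Interactions without an 'Avoided Answer' score are kept.
    """
    return [
        i for i in interactions
        if i["properties"].get("Avoided Answer", 0.0) < AVOIDED_ANSWER_MAX
    ]


interactions = [
    {"id": "a1", "properties": {"Grounded in Context": 0.05, "Avoided Answer": 0.95}},
    {"id": "a2", "properties": {"Grounded in Context": 0.10, "Avoided Answer": 0.02}},
    {"id": "a3", "properties": {"Grounded in Context": 0.80, "Avoided Answer": 0.01}},
    {"id": "a4", "properties": {"Grounded in Context": 0.30, "Avoided Answer": 0.70}},
]

# Remaining interactions, lowest "Grounded in Context" first.
remaining = sorted(
    exclude_avoided(interactions),
    key=lambda i: i["properties"]["Grounded in Context"],
)
print([i["id"] for i in remaining])  # ['a2', 'a3']
```

In this toy data, `a1` has the lowest groundedness score but is excluded because its low score is explained by the avoided answer; `a2` remains as a low-groundedness case worth inspecting.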
