0.28.0 Release Notes

10 months ago by Yaron Friedman

This version includes **support for agent use-cases, experiment management components, and session-level annotations**, along with more features and stability and performance improvements, all part of our 0.28.0 release.

Deepchecks LLM Evaluation 0.28.0 Release:

- 🕵️‍♂️ Support of Agent Use-Cases

- 🥼 Experiment Management

- ⏱️ Introducing Tracing Metrics (Latency and Tokens)

- 👍 Session Annotations

- 🗃️ Additional Retrieval Use-Case Properties

What’s New and Improved?

Support of Agent Use-Cases

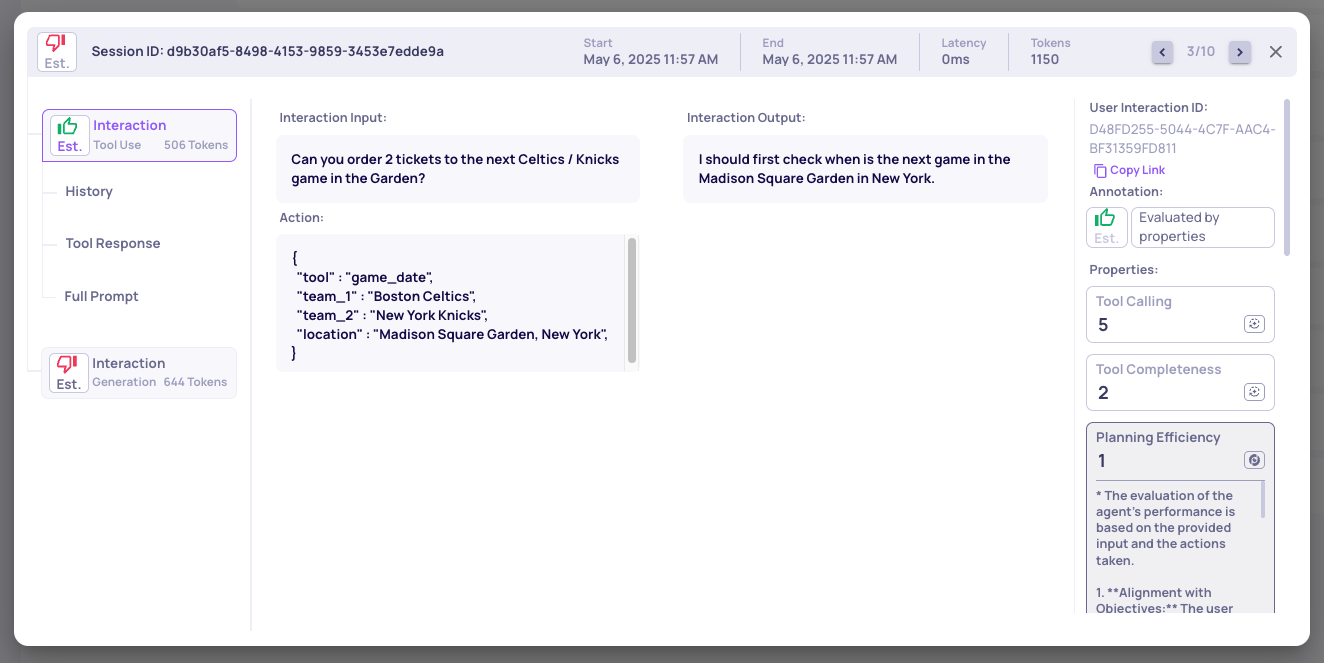

- We've introduced a new interaction type: Tool Use, designed to evaluate agentic workflows where LLMs invoke external tools (e.g., calculators, web search, APIs) during multi-step reasoning. This structure captures each step's observation, action, and response, enabling detailed analysis of the agent's decision-making process.

- To support this, we've added specialized properties such as Tool Appropriateness, Tool Efficiency, and Action Relevance, allowing for nuanced evaluation of tool-based interactions. These properties help assess whether the chosen tools are suitable, efficiently used, and relevant to the task at hand. For more details, click here.

Example of an Agent Use-Case Session with Tool-Use Unique Properties
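To make the observation, action, and response structure of a Tool Use interaction concrete, here is a minimal sketch in plain Python. It does not use the Deepchecks SDK. The class names `ToolUseStep` and `ToolUseInteraction`, the field names, the tool names, and the `tool_name(arguments)` action format are all assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToolUseStep:
    """One step of an agentic workflow (hypothetical layout)."""
    observation: str  # what the agent knew before acting
    action: str       # the tool call it chose, written as "tool_name(arguments)"
    response: str     # what the tool returned


@dataclass
class ToolUseInteraction:
    """A multi-step Tool Use interaction (hypothetical layout)."""
    user_input: str
    steps: List[ToolUseStep] = field(default_factory=list)

    def tools_used(self) -> List[str]:
        # Assumes every action follows the "tool_name(arguments)" convention.
        return [step.action.split("(", 1)[0] for step in self.steps]


interaction = ToolUseInteraction(
    user_input="What is the combined total of orders 1 and 2?",
    steps=[
        ToolUseStep("Need the total of order 1", "order_lookup(order_id=1)", "total: 40"),
        ToolUseStep("Need the total of order 2", "order_lookup(order_id=2)", "total: 60"),
        ToolUseStep("Have both totals, need the sum", "calculator(40 + 60)", "100"),
    ],
)

# The ordered list of tools is the kind of raw material that properties such as
# Tool Appropriateness or Tool Efficiency would reason about.
tools = interaction.tools_used()
```

Keeping each step as its own record is what allows a per-step review of the agent's decisions, rather than judging only the final answer.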

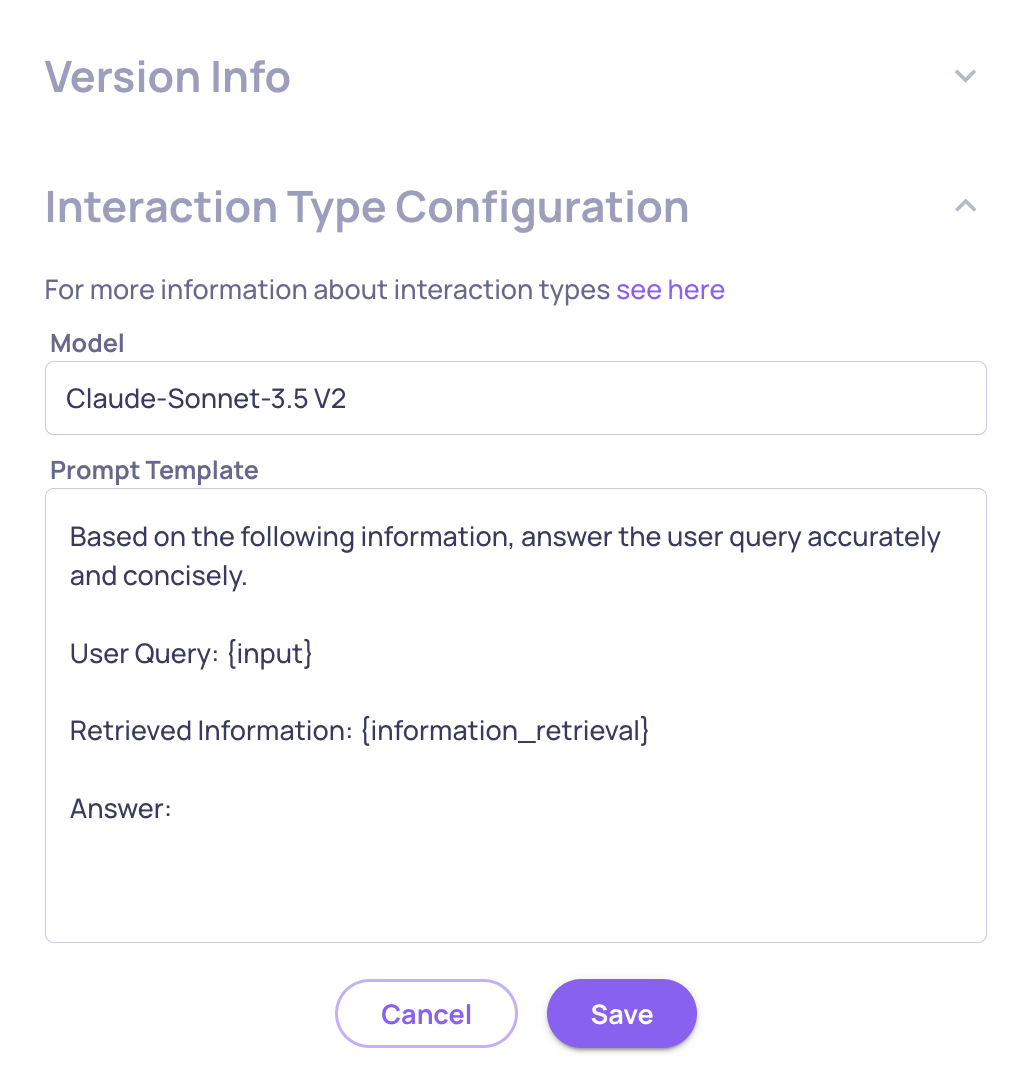

Experiment Management

- We've enhanced our experiment management by introducing interaction-type-level configuration. Beyond version-level metadata, you can now define experiment-specific details—such as model identifiers, prompt templates, and custom tags—directly within each interaction type. This granularity enables more precise comparisons across experiments and a clearer understanding of how specific configurations impact performance. For more details, click here.

Experiment Configuration Data on the Interaction Type Level
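As a rough illustration of why interaction-type-level configuration helps with comparisons, the sketch below stores experiment details per interaction type and reports what changed between two experiments. The dictionary layout, the keys (`model`, `prompt_template`, `tags`), the values, and the `diff_configs` helper are hypothetical and are not the Deepchecks configuration format.

```python
from typing import Any, Dict, Tuple

# Hypothetical layout: interaction type -> experiment configuration.
ExperimentConfig = Dict[str, Dict[str, Any]]

baseline: ExperimentConfig = {
    "Q&A": {"model": "model-a", "prompt_template": "qa_v1", "tags": ["baseline"]},
    "Tool Use": {"model": "model-a", "prompt_template": "agent_v1", "tags": ["baseline"]},
}
candidate: ExperimentConfig = {
    "Q&A": {"model": "model-a", "prompt_template": "qa_v2", "tags": ["candidate"]},
    "Tool Use": {"model": "model-b", "prompt_template": "agent_v1", "tags": ["candidate"]},
}


def diff_configs(
    old: ExperimentConfig, new: ExperimentConfig
) -> Dict[str, Dict[str, Tuple[Any, Any]]]:
    """Return, per interaction type, the keys whose values differ as (old, new) pairs."""
    changes: Dict[str, Dict[str, Tuple[Any, Any]]] = {}
    for interaction_type in sorted(set(old) | set(new)):
        before = old.get(interaction_type, {})
        after = new.get(interaction_type, {})
        changed = {
            key: (before.get(key), after.get(key))
            for key in sorted(set(before) | set(after))
            if before.get(key) != after.get(key)
        }
        if changed:
            changes[interaction_type] = changed
    return changes


changes = diff_configs(baseline, candidate)
```

With this layout, a performance change in the Tool Use interactions can be traced to the model swap, while a change in Q&A can be traced to the new prompt template.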

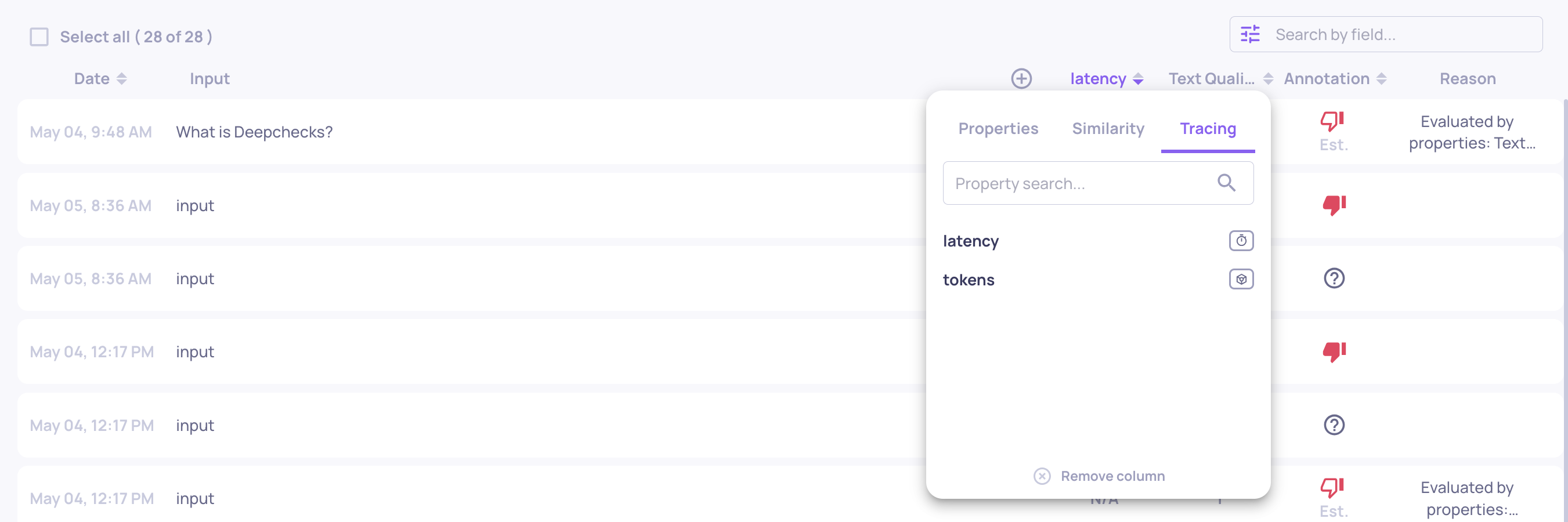

Introducing Tracing Metrics

- We've added support for tracing metrics, enabling you to analyze interaction latency and token usage across your LLM workflows. These metrics are aggregated at the session level, providing a comprehensive view of performance over multi-step interactions. This enhancement facilitates deeper analysis of version behavior and more effective comparisons between different configurations. For more details, click here.

Sorting and Filtering by Tracing Data on the Data Screen
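The session-level aggregation of tracing metrics can be pictured with the short sketch below. It assumes that latency and token counts are summed over the interactions in a session. The release notes do not state the exact aggregation, so treat the summing, the field names, and the sample values as assumptions.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical per-interaction tracing records.
interactions: List[dict] = [
    {"session_id": "s1", "latency_s": 1.5, "input_tokens": 100, "output_tokens": 40},
    {"session_id": "s1", "latency_s": 0.5, "input_tokens": 60, "output_tokens": 20},
    {"session_id": "s2", "latency_s": 0.75, "input_tokens": 30, "output_tokens": 10},
]


def aggregate_by_session(rows: List[dict]) -> Dict[str, dict]:
    """Sum latency and tokens per session (assumed aggregation: plain sums)."""
    totals: Dict[str, dict] = defaultdict(
        lambda: {"latency_s": 0.0, "tokens": 0, "interactions": 0}
    )
    for row in rows:
        session = totals[row["session_id"]]
        session["latency_s"] += row["latency_s"]
        session["tokens"] += row["input_tokens"] + row["output_tokens"]
        session["interactions"] += 1
    return dict(totals)


sessions = aggregate_by_session(interactions)

# Sort sessions from slowest to fastest, similar in spirit to sorting by
# tracing data on the Data Screen.
slowest_first = sorted(sessions, key=lambda sid: sessions[sid]["latency_s"], reverse=True)
```

Aggregating per session rather than per interaction surfaces slow or token-heavy multi-step workflows even when no single step stands out.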

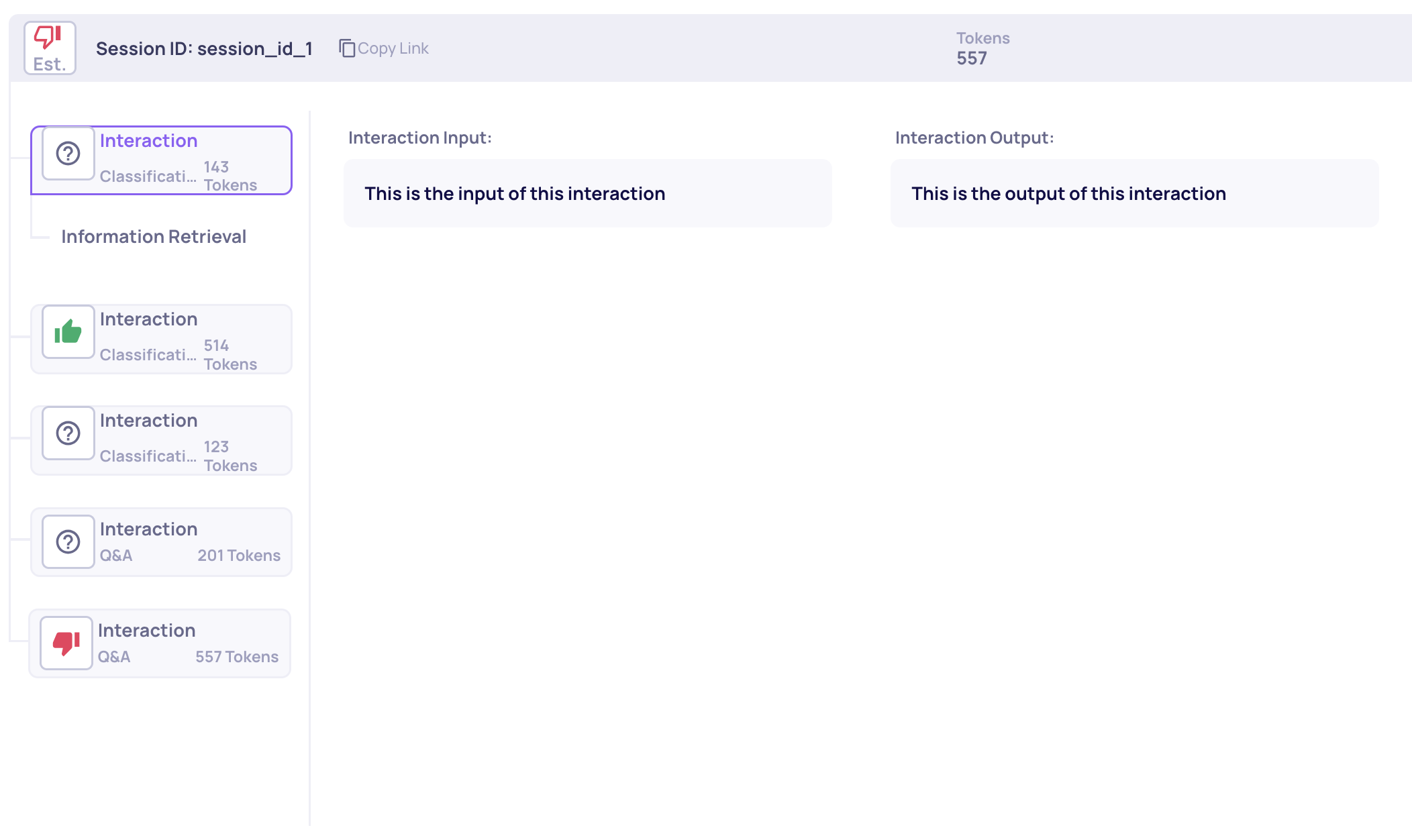

Session Annotations

- We've expanded our annotation capabilities by introducing session-level annotations. Previously, annotations were available only at the interaction level. Now, Deepchecks aggregates the interaction-level annotations within a session into a single session annotation using configurable logic. This enhancement is particularly beneficial for evaluating multi-step workflows, such as agentic or conversational sessions, where understanding the overall session quality is crucial. For more details, click here.

A session that was annotated 'bad' due to a bad interaction annotation on a flagged interaction type (Q&A)
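One possible aggregation rule, matching the example in the caption above, is sketched below: a session is marked 'bad' if any interaction of a flagged interaction type is annotated 'bad'. The function name, the `(interaction_type, annotation)` tuple layout, and the rule itself are assumptions for illustration. The actual logic in Deepchecks is configurable and may differ.

```python
from typing import Iterable, Optional, Set, Tuple

# Each interaction is (interaction_type, annotation); annotation may be None
# when the interaction has not been annotated. This layout is hypothetical.
Interaction = Tuple[str, Optional[str]]


def aggregate_session_annotation(
    interactions: Iterable[Interaction], flagged_types: Set[str]
) -> Optional[str]:
    """Return 'bad' if any flagged-type interaction is 'bad', 'good' if all
    annotated flagged-type interactions are 'good', else None."""
    relevant = [
        annotation
        for interaction_type, annotation in interactions
        if interaction_type in flagged_types and annotation is not None
    ]
    if not relevant:
        return None  # nothing to aggregate, so the session stays unannotated
    return "bad" if "bad" in relevant else "good"


session = [("Tool Use", "good"), ("Q&A", "bad"), ("Tool Use", None)]

flagged_qa = aggregate_session_annotation(session, flagged_types={"Q&A"})
flagged_tool_use = aggregate_session_annotation(session, flagged_types={"Tool Use"})
```

Under this rule the same session is 'bad' when Q&A is the flagged type and 'good' when only Tool Use is flagged, which shows how the choice of flagged interaction types changes the session-level result.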