Welcome to Deepchecks LLM Evaluation

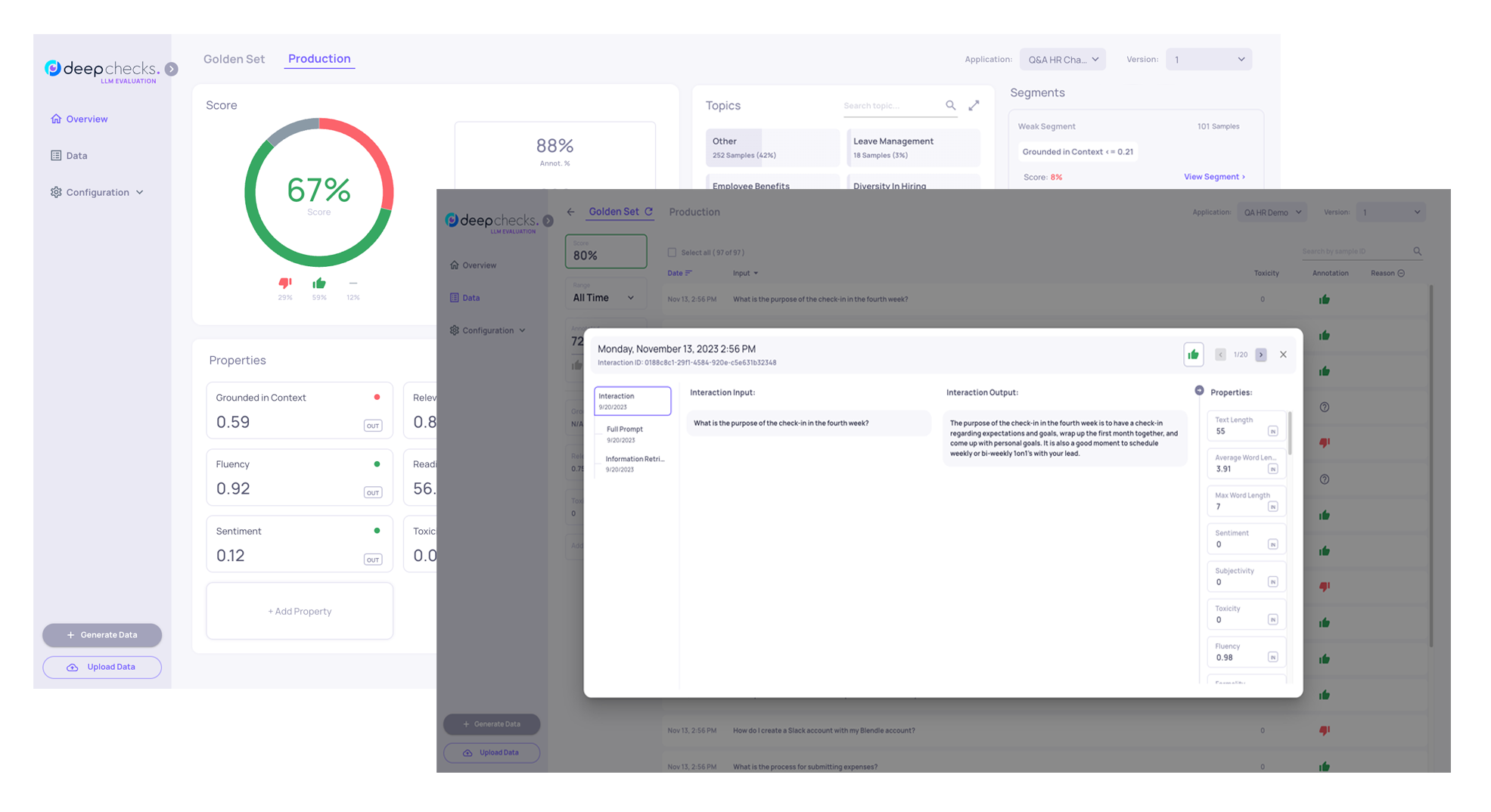

If you need to evaluate your LLM-based apps, you are in the right place! Deepchecks LLM Evaluation helps you understand the performance of your LLM-based pipeline, find where it fails, identify and mitigate pitfalls, and make your annotation cycle more efficient, automatic, and methodical.

What is Deepchecks?

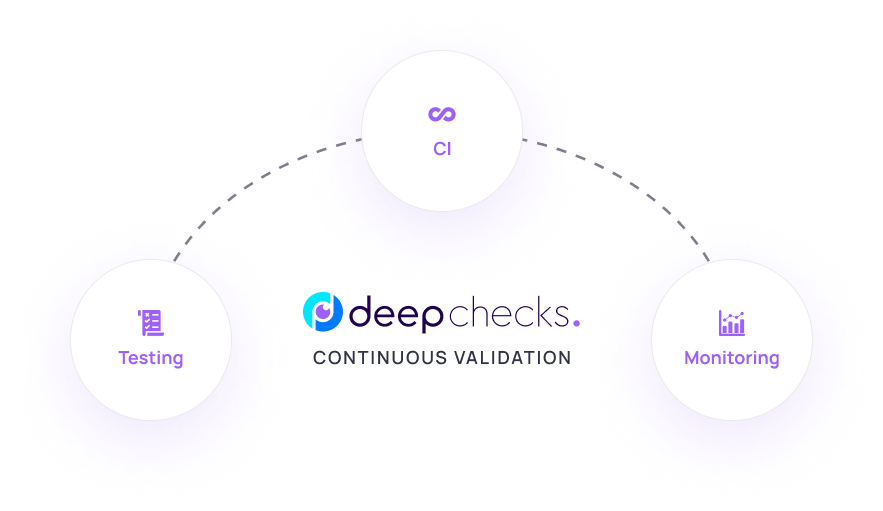

Deepchecks is a comprehensive solution for AI validation, helping you make sure your models and AI applications work properly from research, through deployment, and during production.

Deepchecks has the following offerings:

- Deepchecks LLM Evaluation (Here) - for testing, validating and monitoring LLM-based apps

- Deepchecks Testing Package - for tests during research and model development for tabular and unstructured data

- Deepchecks Monitoring - for tests and continuous monitoring during production, for tabular and unstructured data

Get Started with Deepchecks for LLM Evaluation

With Deepchecks, you can continuously validate LLM-based applications, including their characteristics, performance metrics, and potential pitfalls, throughout the entire lifecycle, from pre-deployment and internal experimentation to production. Get started by checking out our Main Features, exploring the Concepts at the core of the system, and hopping on board with our Quickstarts (sign up at https://deepchecks.com/solutions/llm-evaluation/ to apply for access to the system).
