0.18.0 Release Notes

by Shir Chorev

This version improves understanding of your version’s performance through root cause analysis and adds visibility into the system’s usage. It also brings more features, stability improvements, and performance improvements, all part of our 0.18.0 release.

Deepchecks LLM Evaluation 0.18.0 Release

- 💡 Version Insights Enhancements

- 🔎 Score Reasoning Breakdown

- 📶 Usage Plan Visibility

- 🦸‍♀️ Improvement to PII Property

- ⚖️⚖️ Versions Page Updates

- 📈 Production Overtime View Improvements

What’s New and Improved?


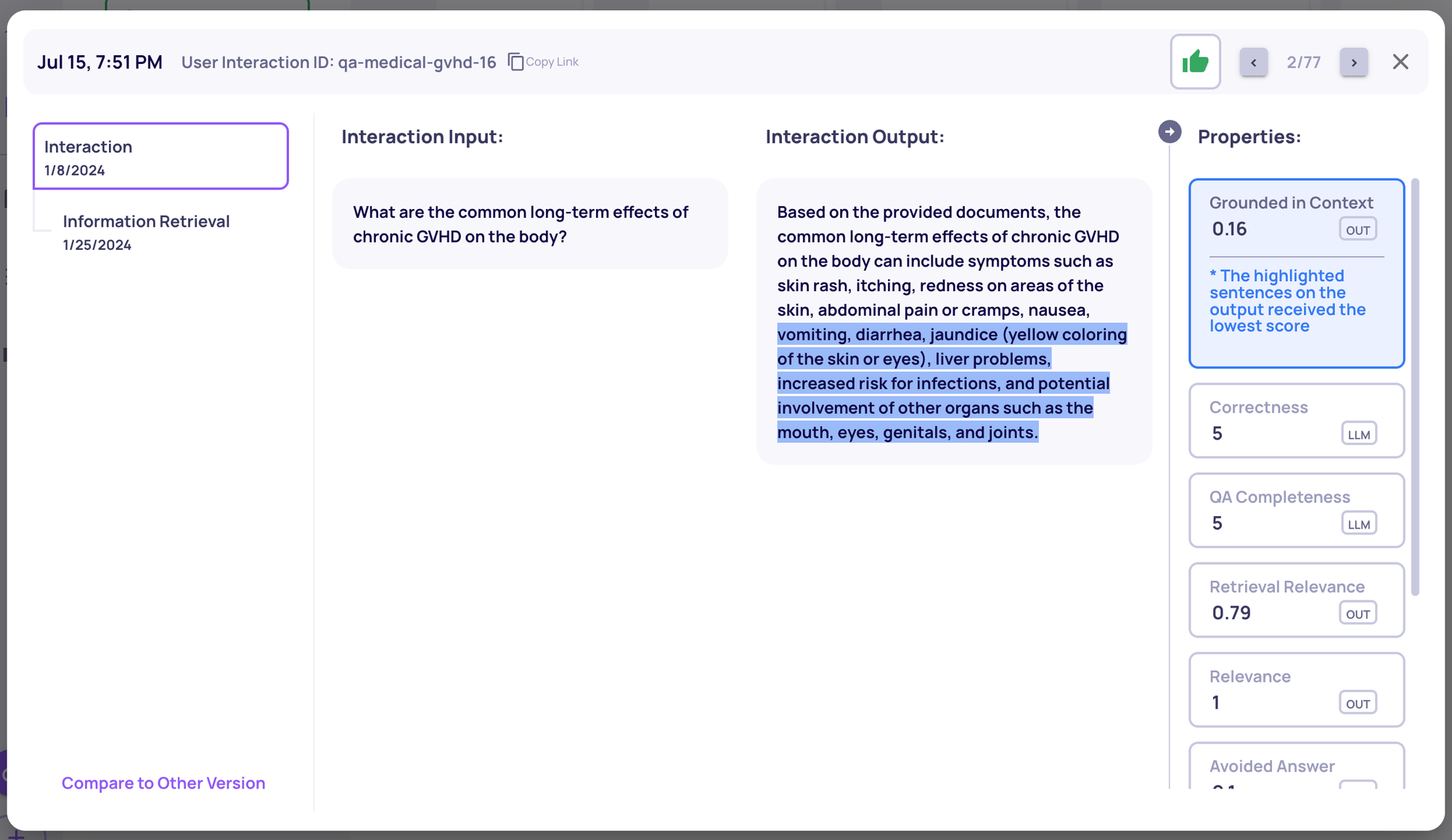

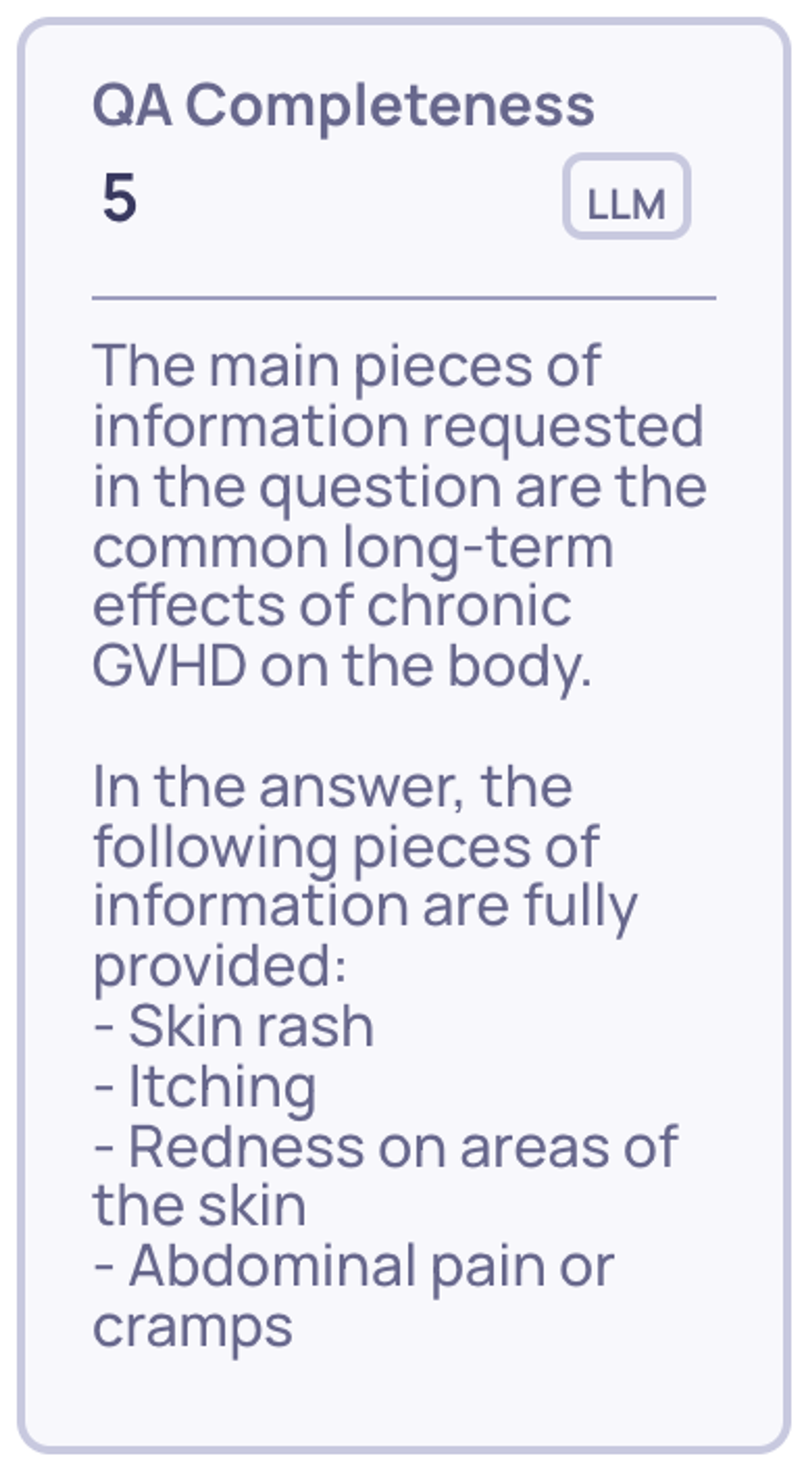

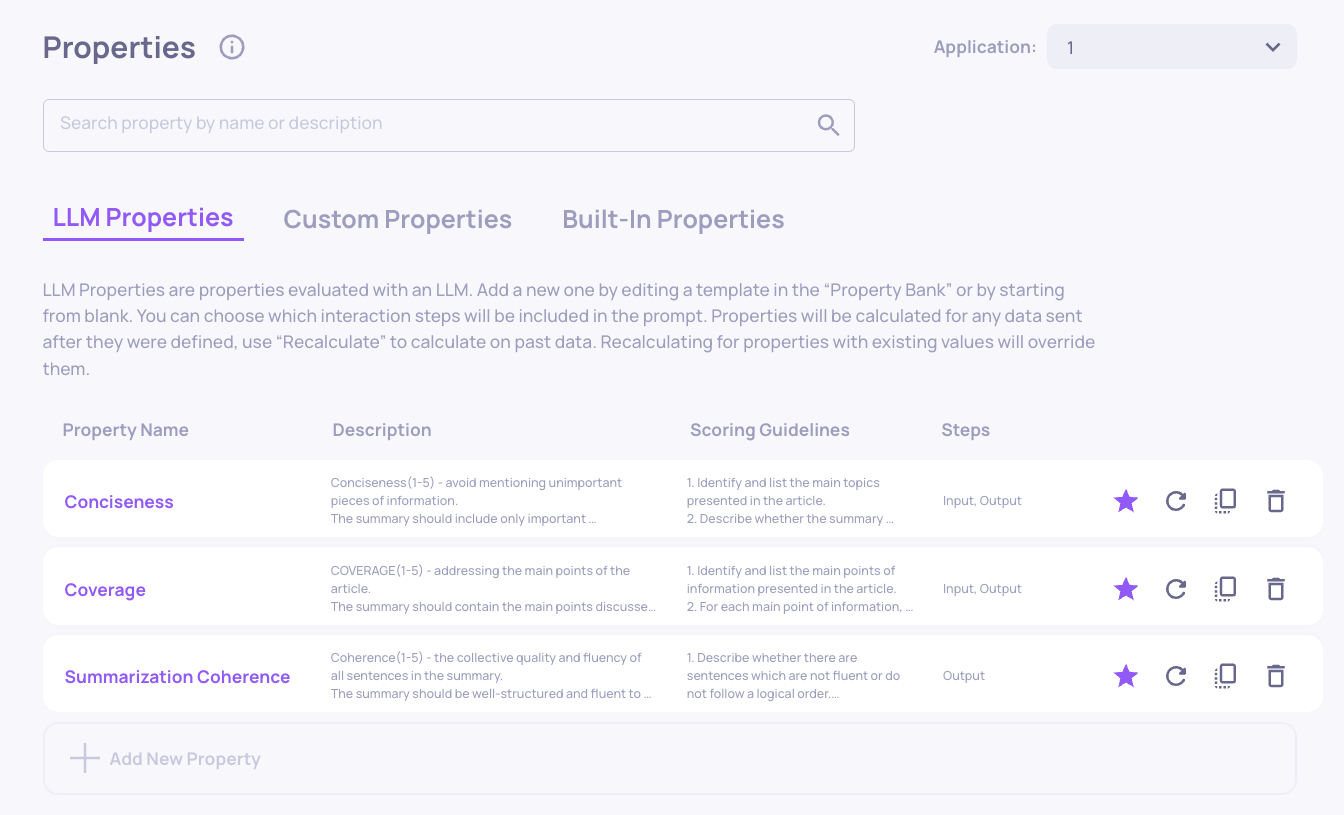

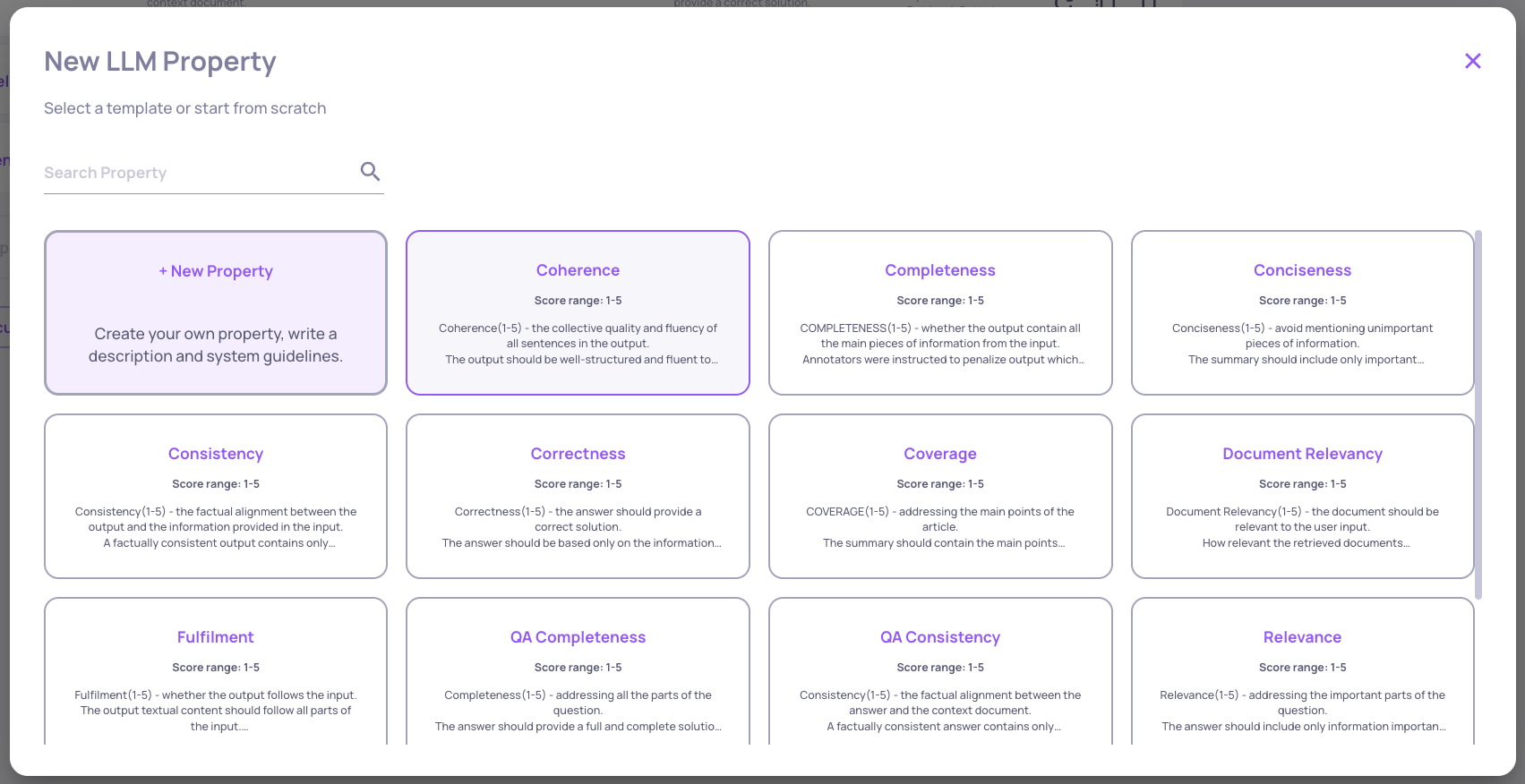

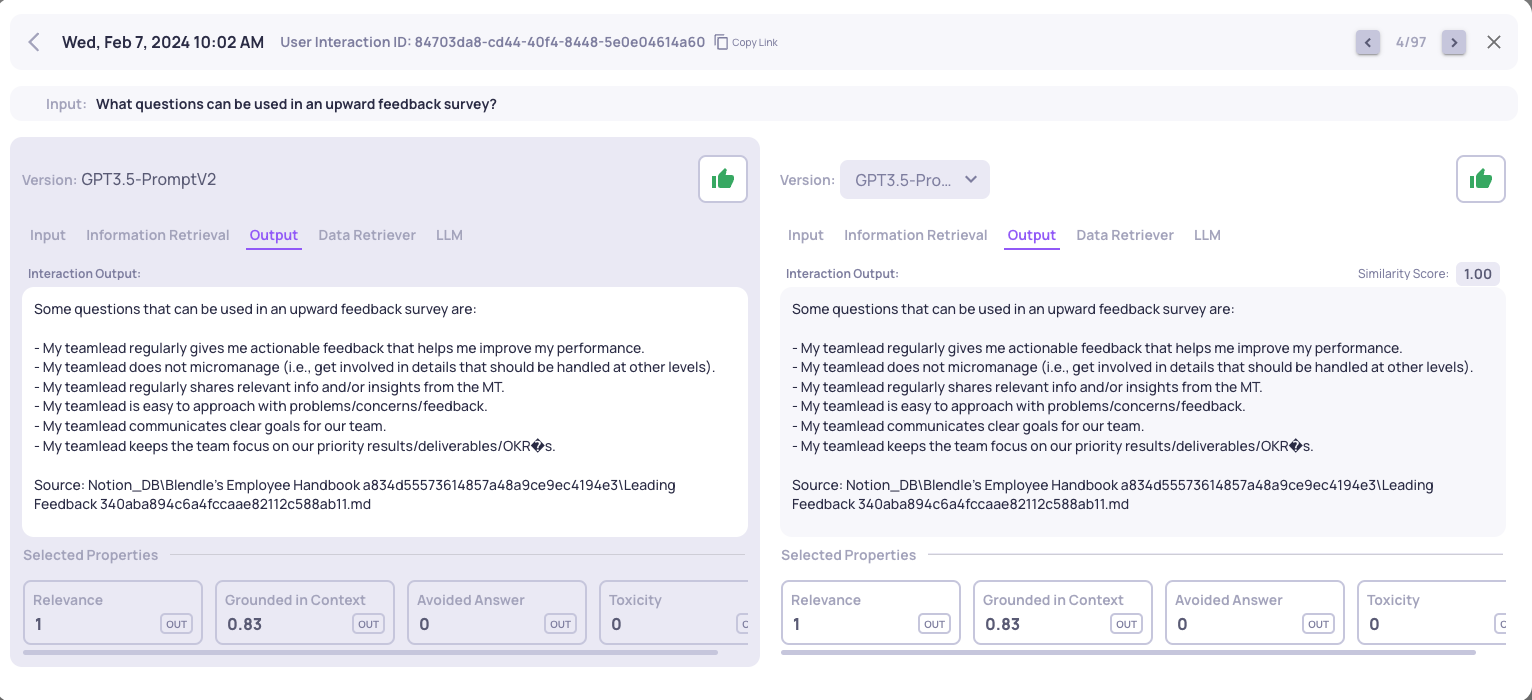

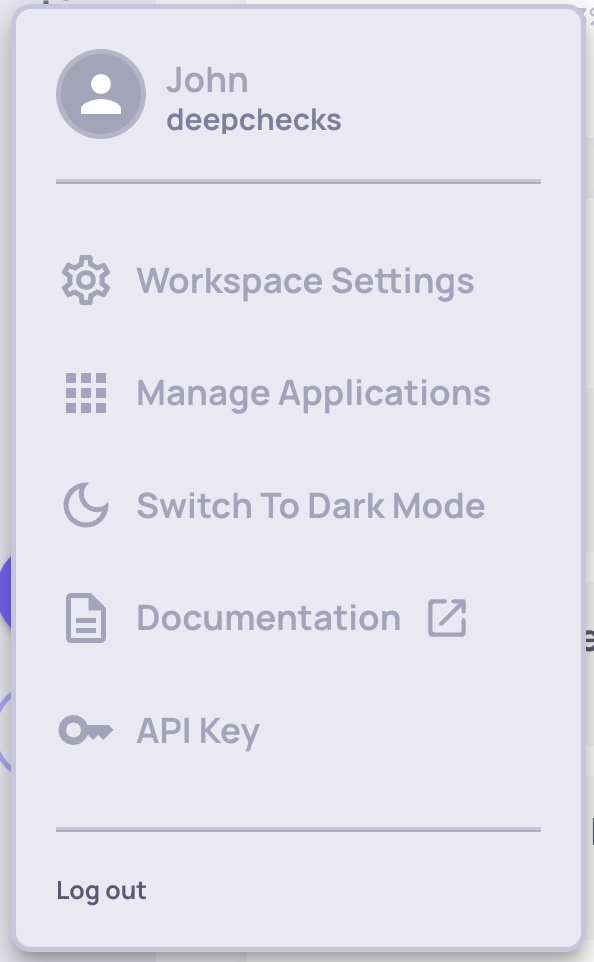

Version Insights Enhancements

- Explainable insights with analysis and actionable suggestions. Insights are based on property values. They appear per version on the "Overview" screen, and application-wide on the "Versions" screen, with a link to the relevant version.

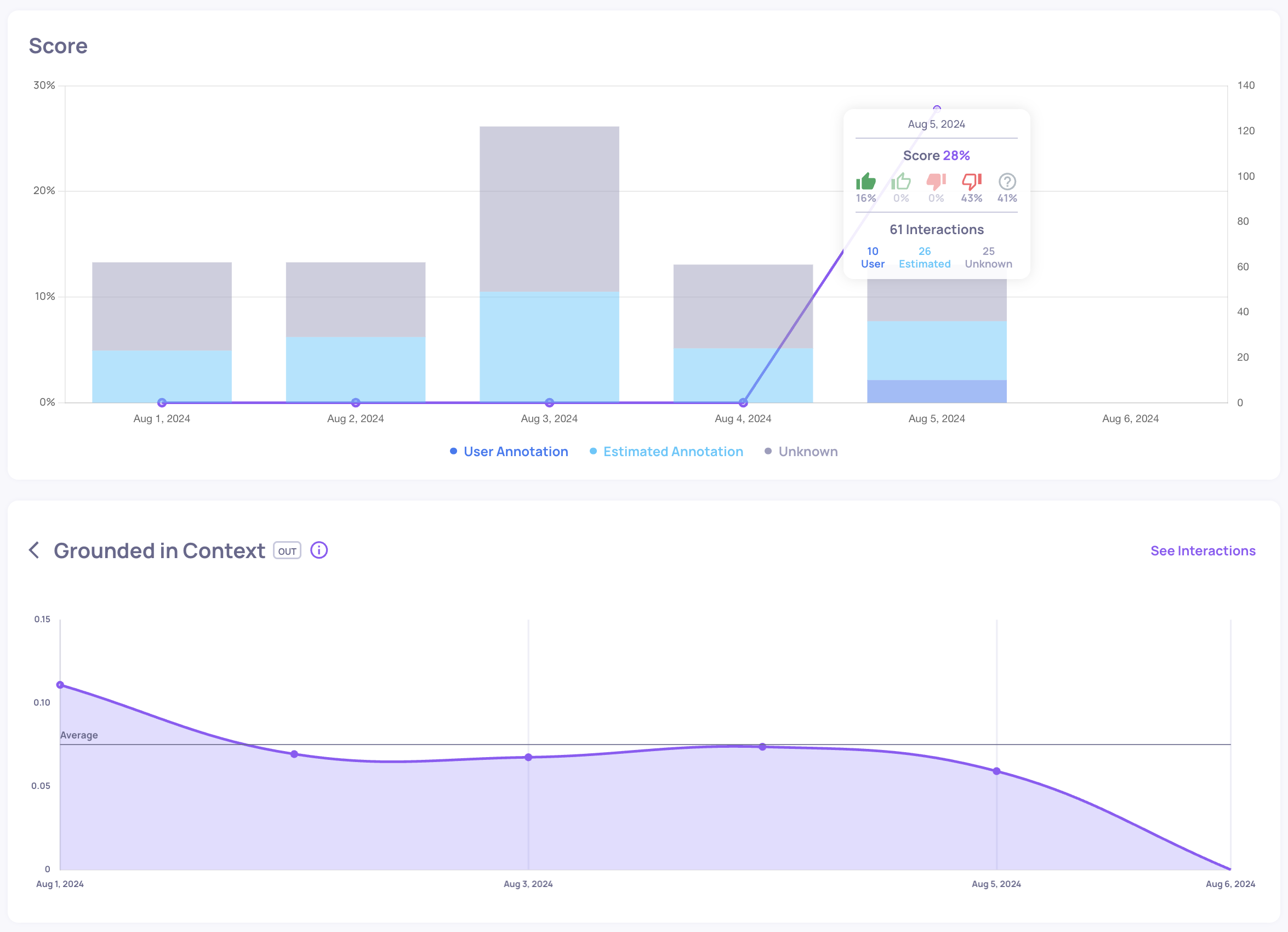

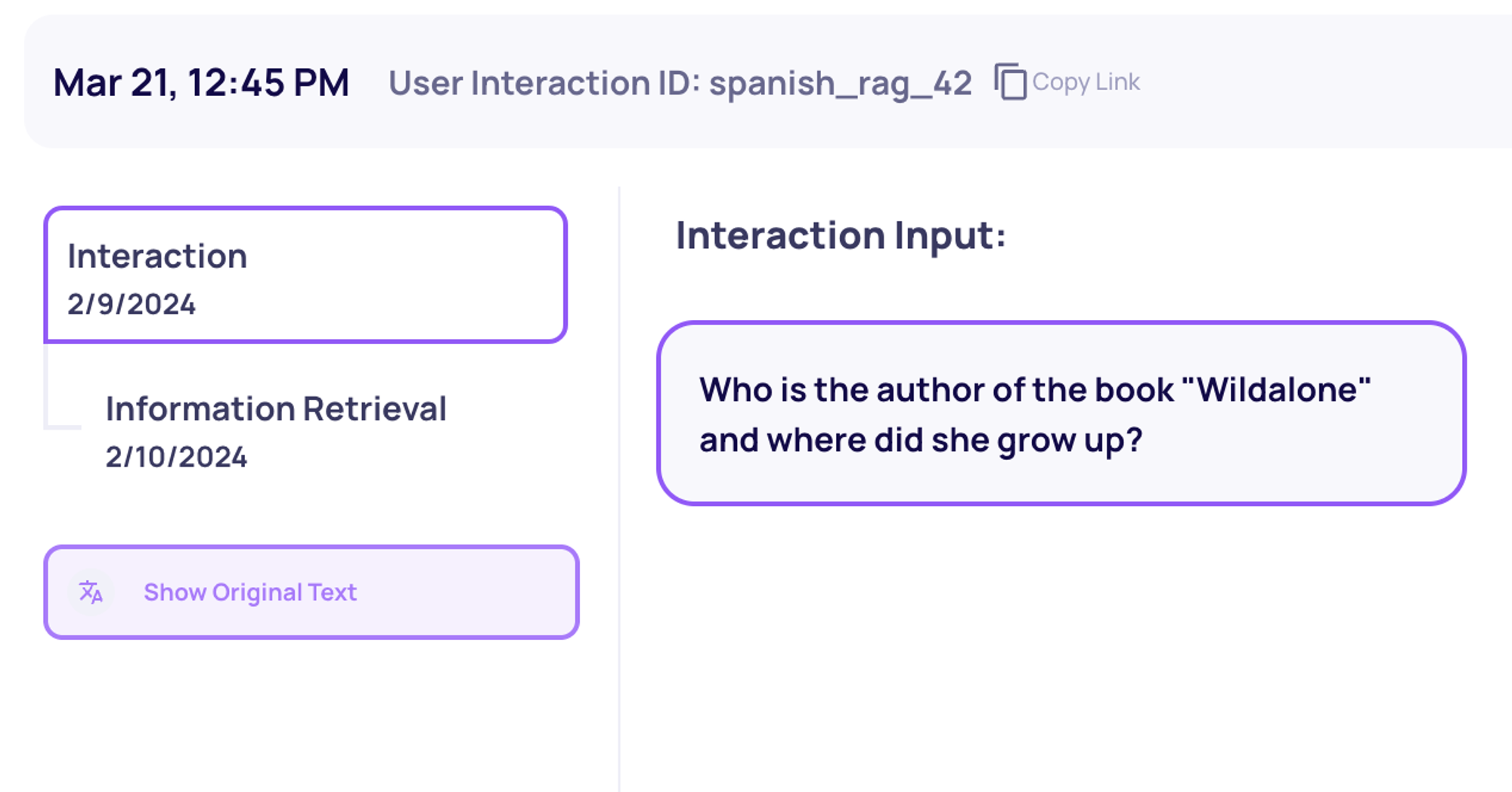

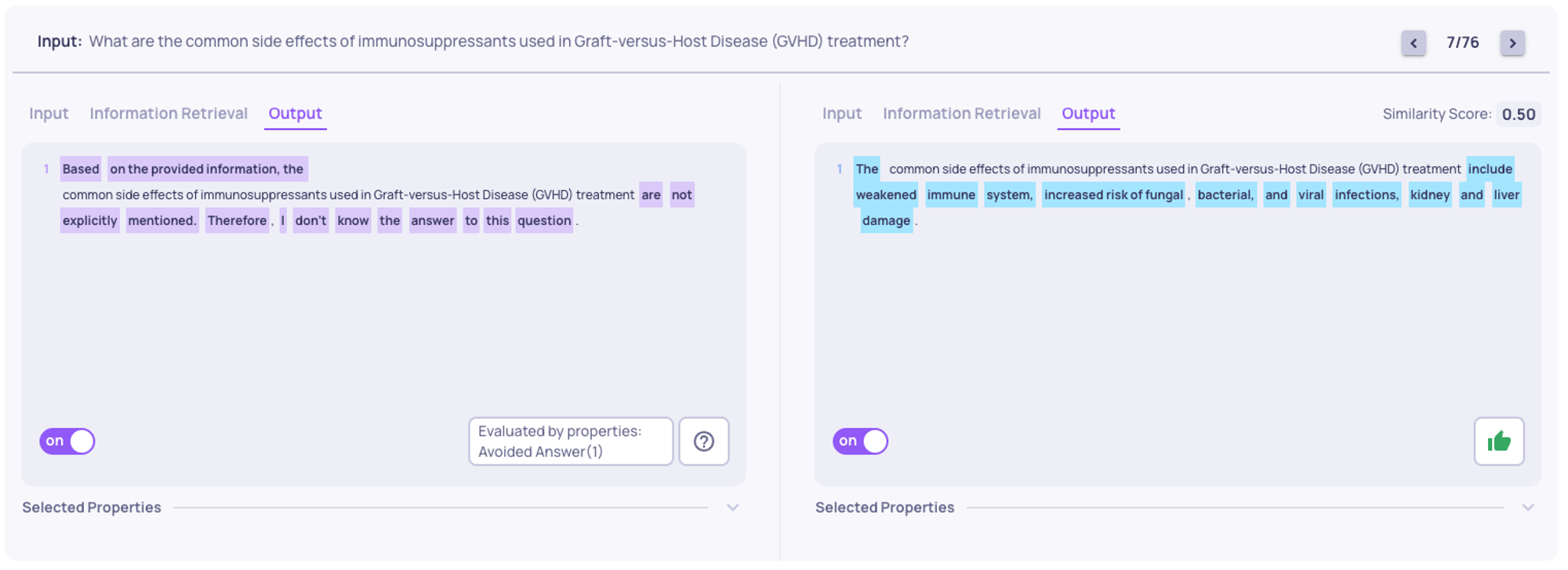

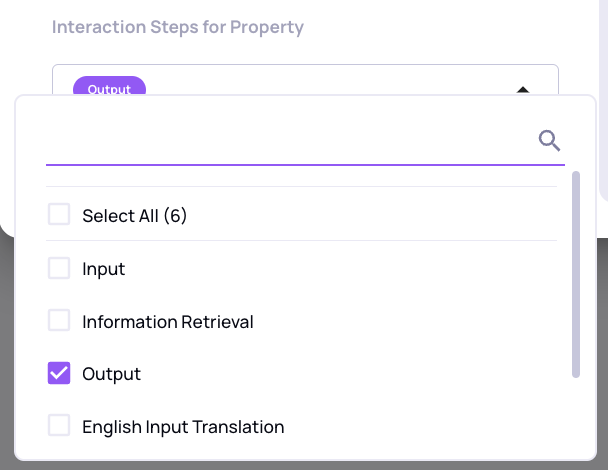

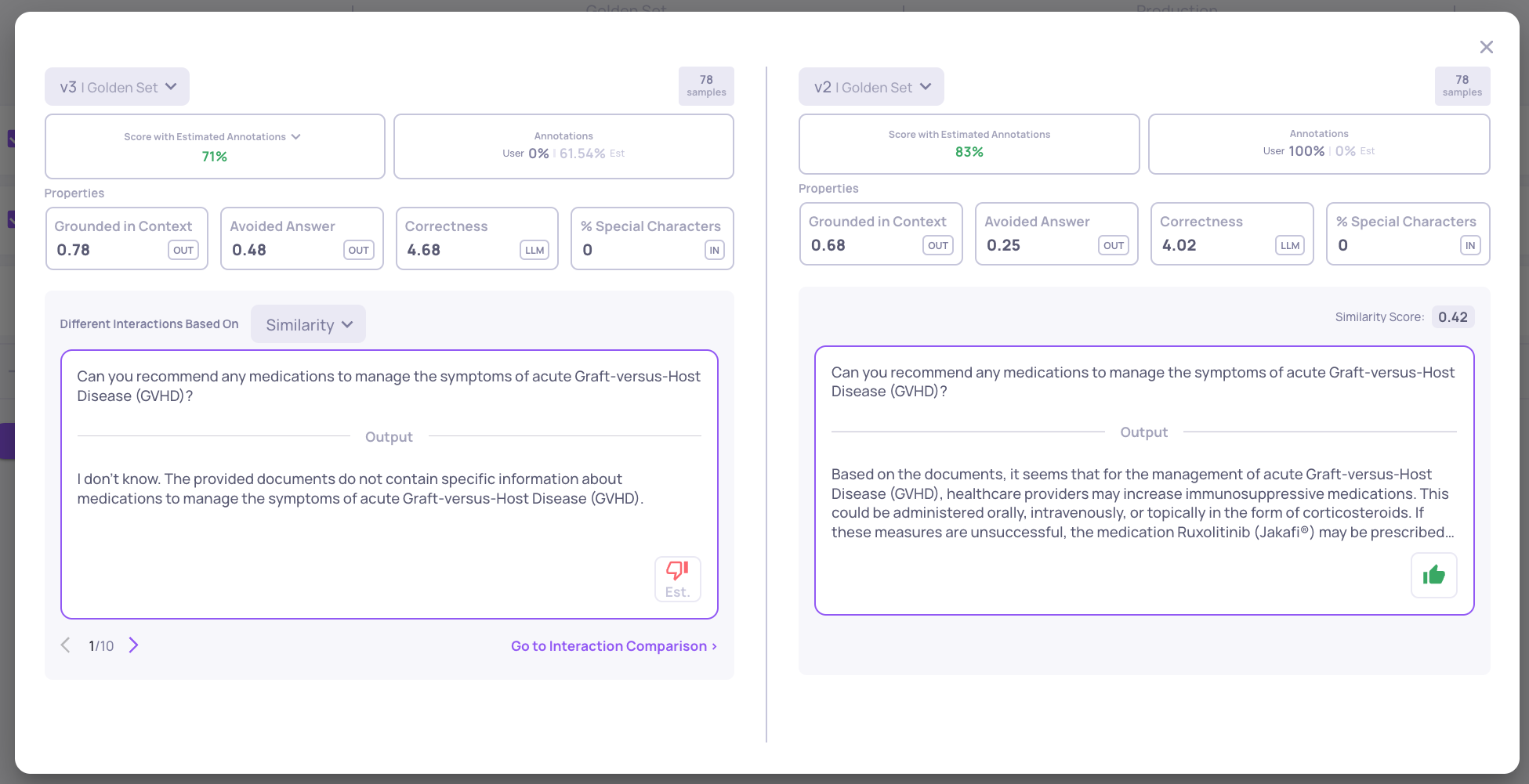

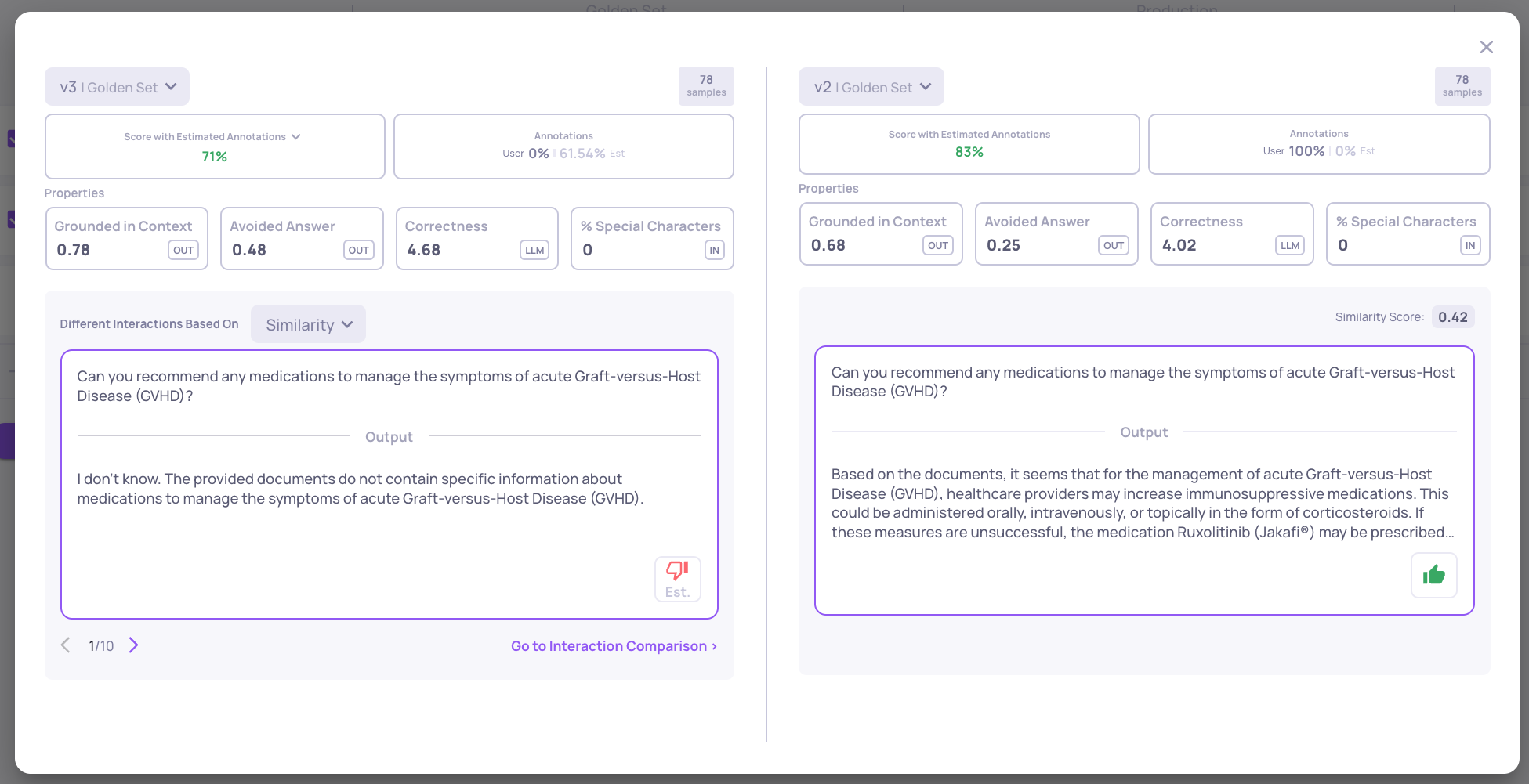

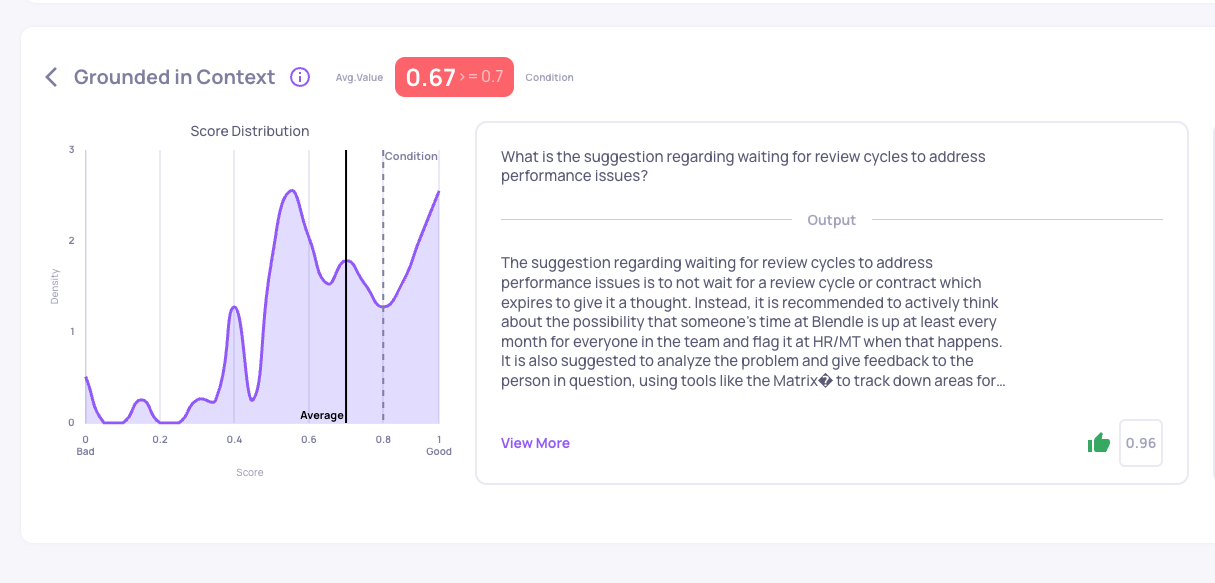

Score Reasoning Breakdown

- The score is now broken down according to annotation reason. Click “Show breakdown” next to the Score on the Dashboard to view it.

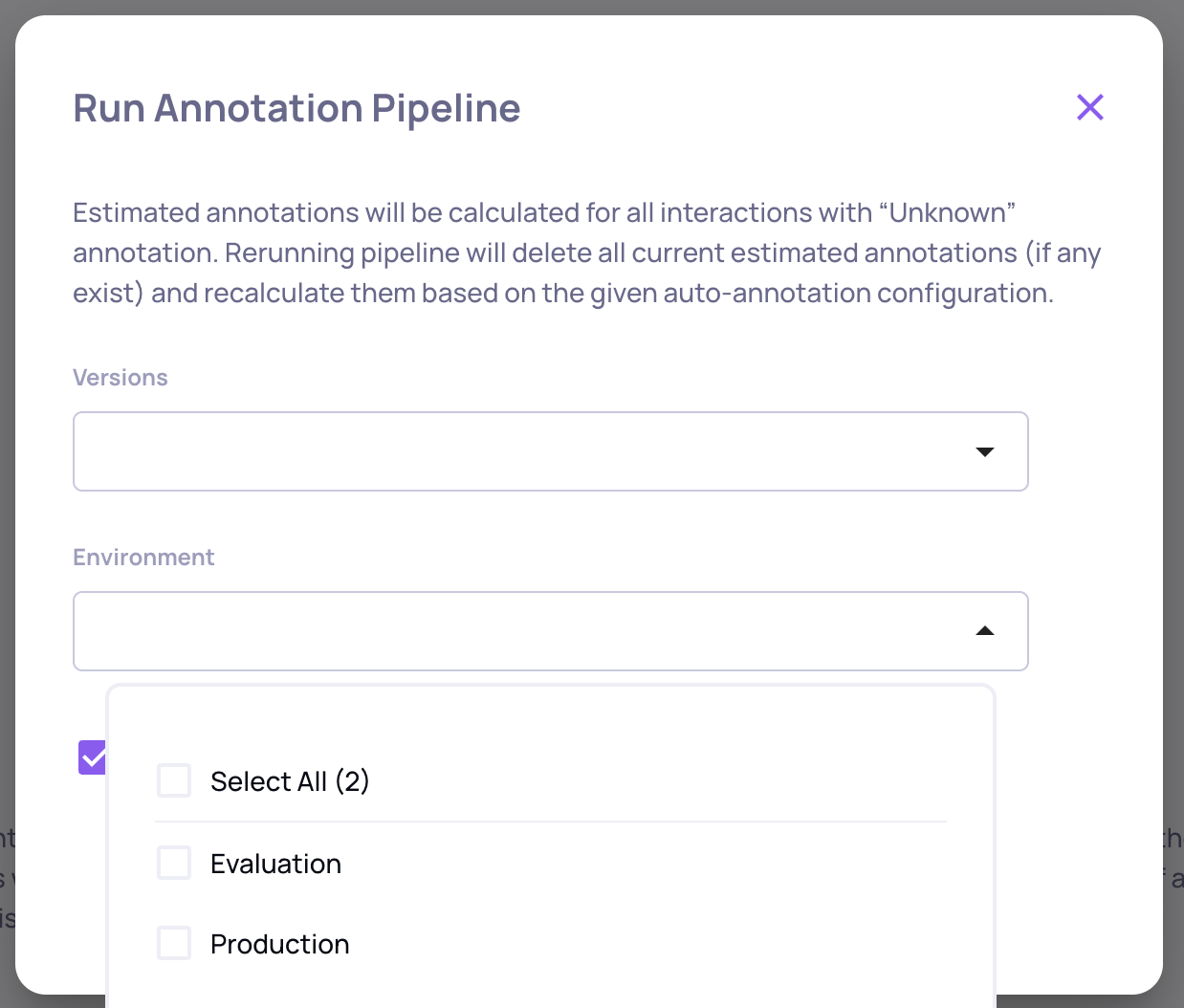

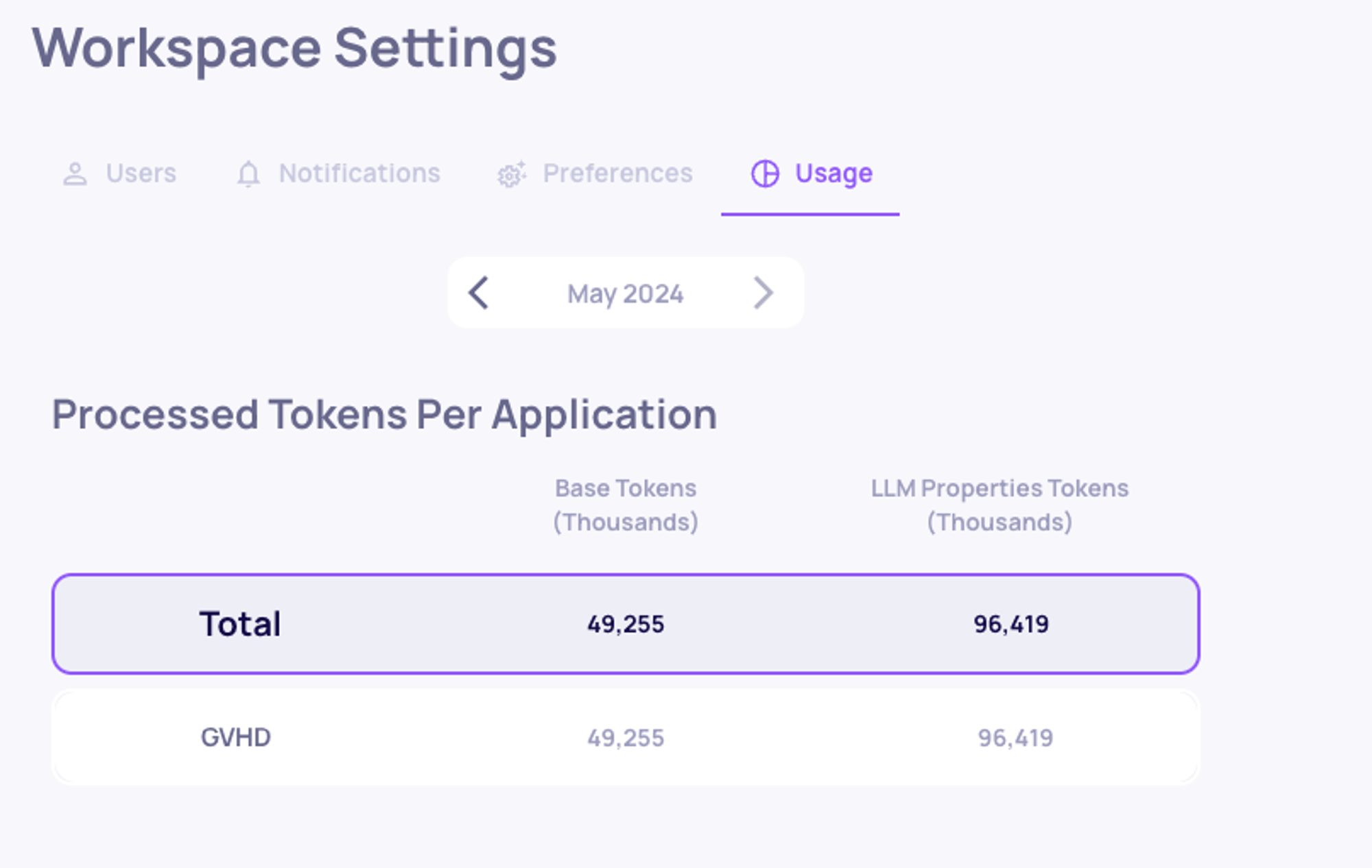

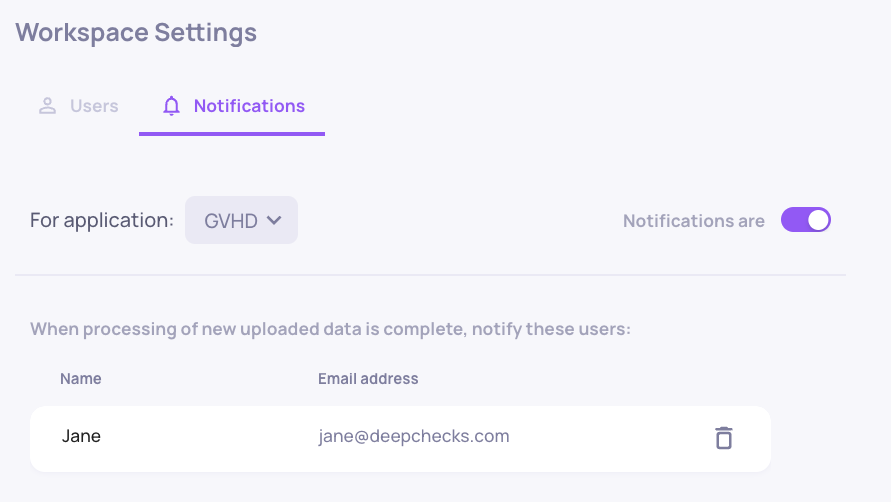

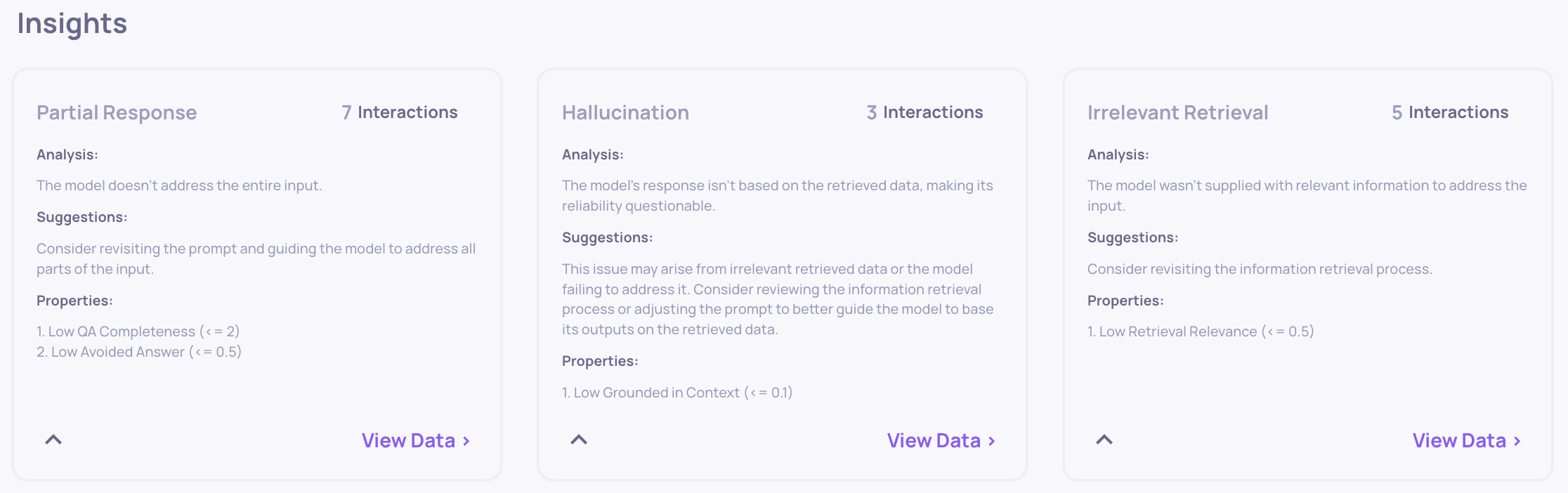

Usage Plan Visibility

- Your stats and limits (Applications, Users, Processed data tokens) are now displayed in the "Usage" tab of the workspace settings.

Improvement to PII Property

- Combined several detection mechanisms to widen coverage and improve recall and precision across a wide set of benchmarks.

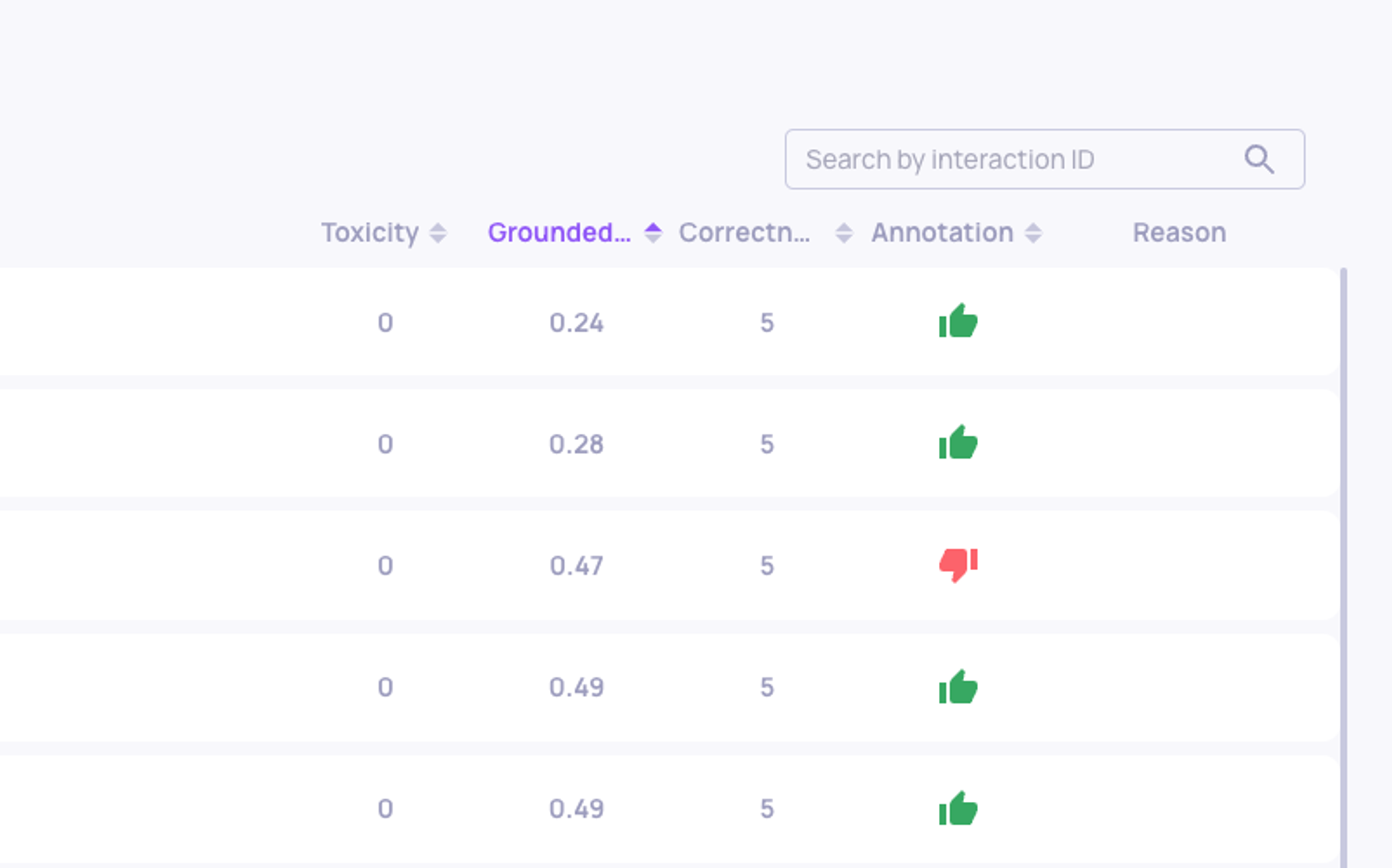

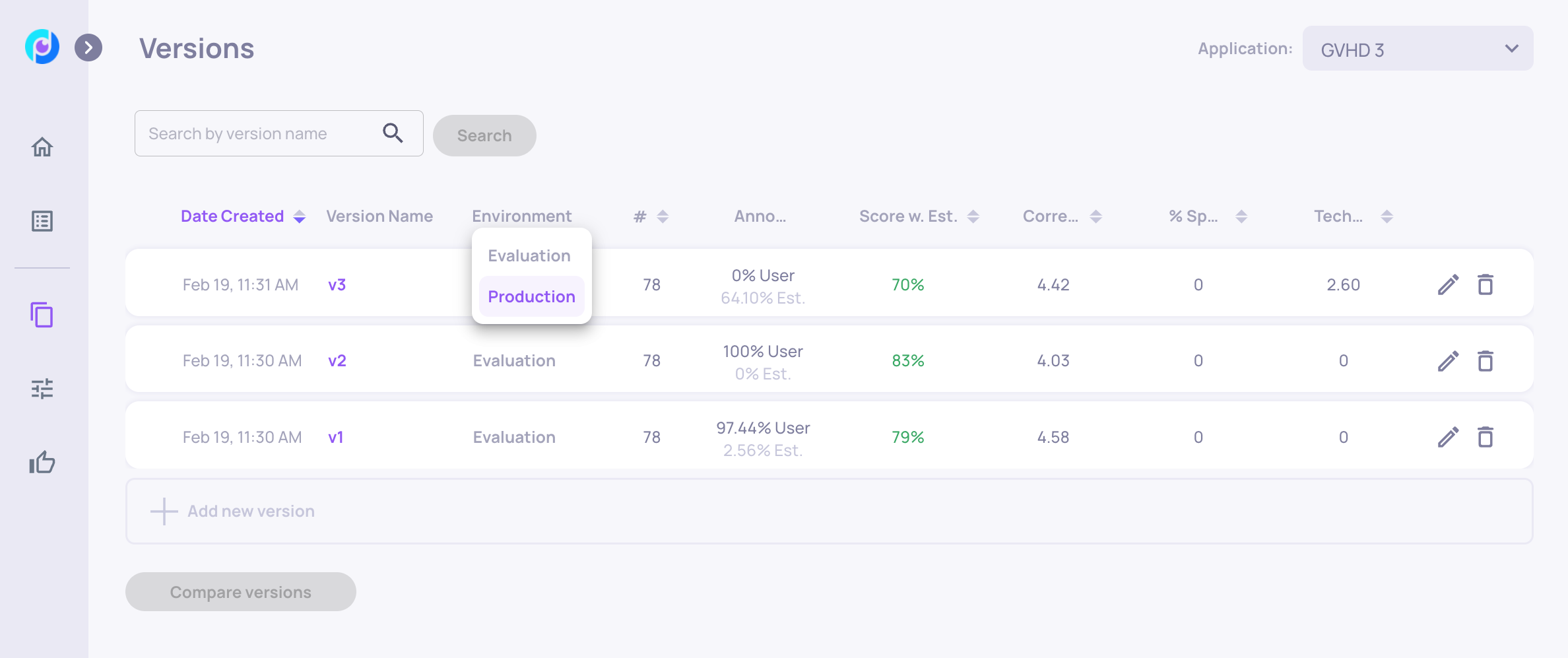

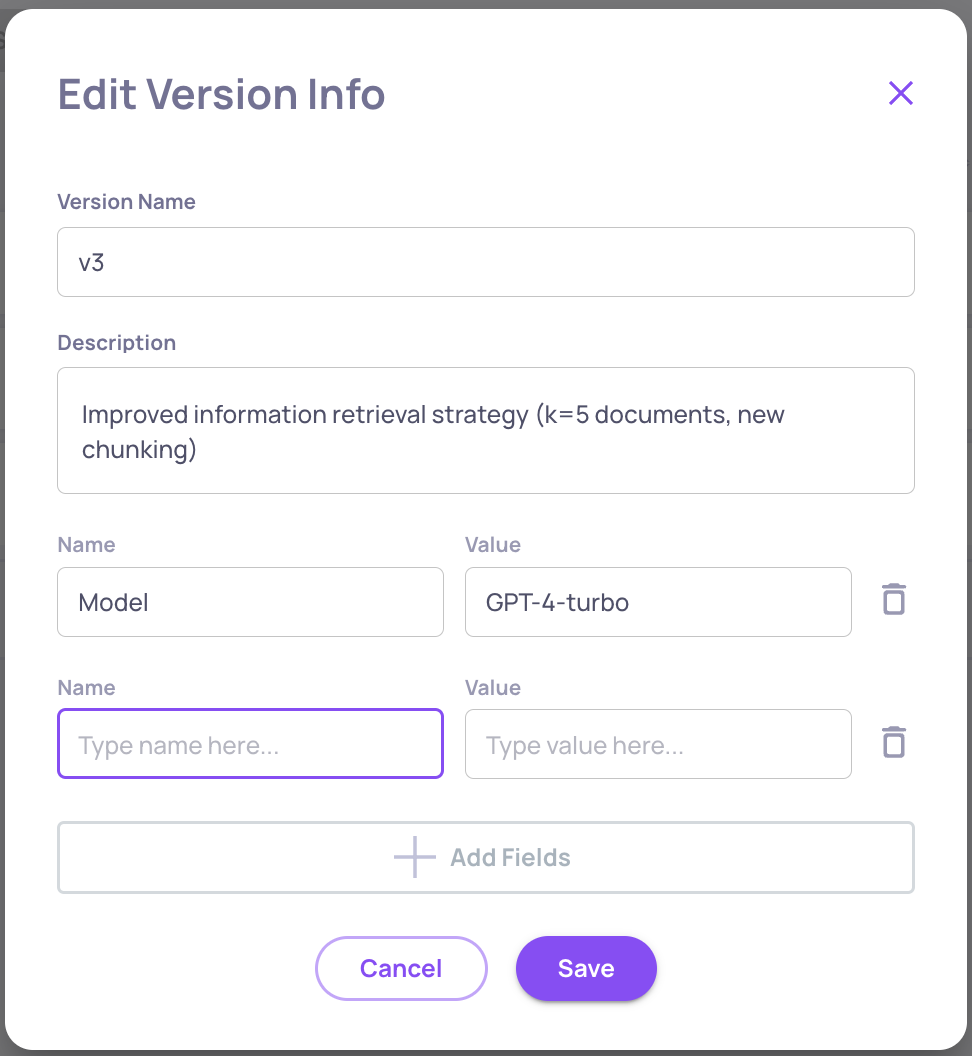

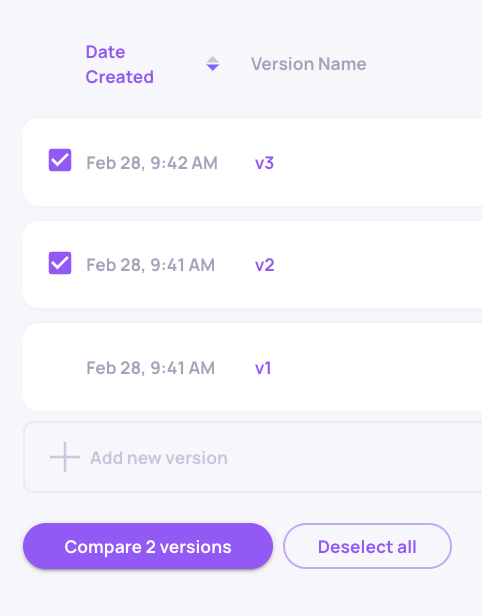

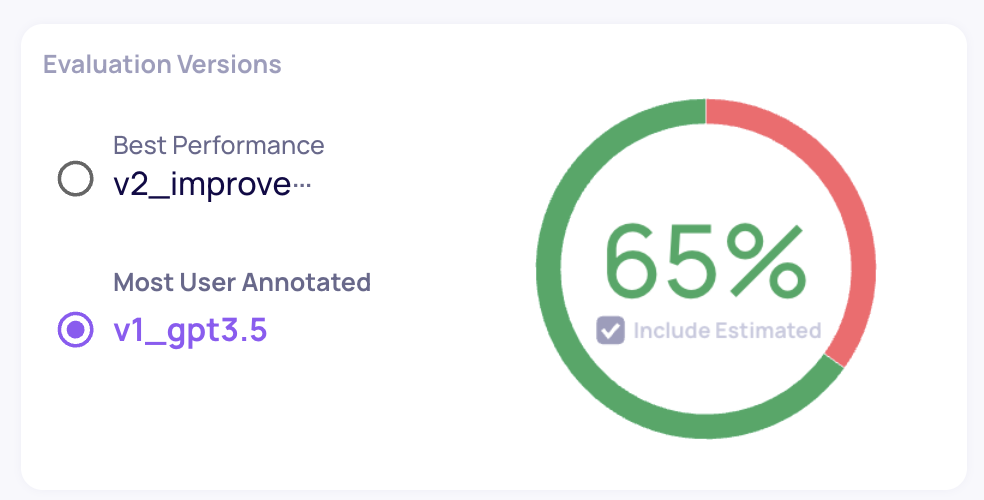

Versions Page Updates

- Added high-level data on versions: insights on specific versions, and a widget showing the most recent version, the best-performing one, and more.

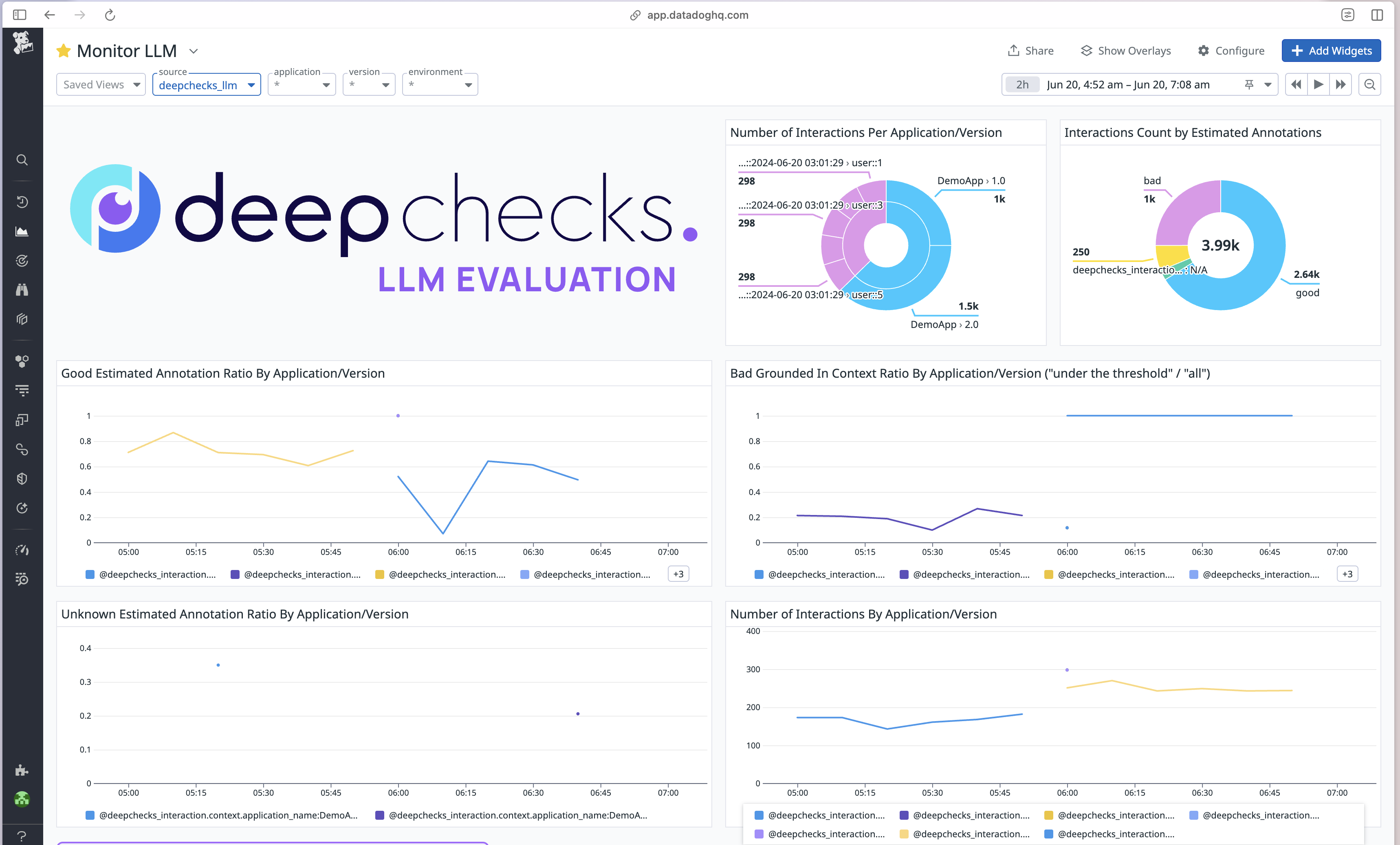

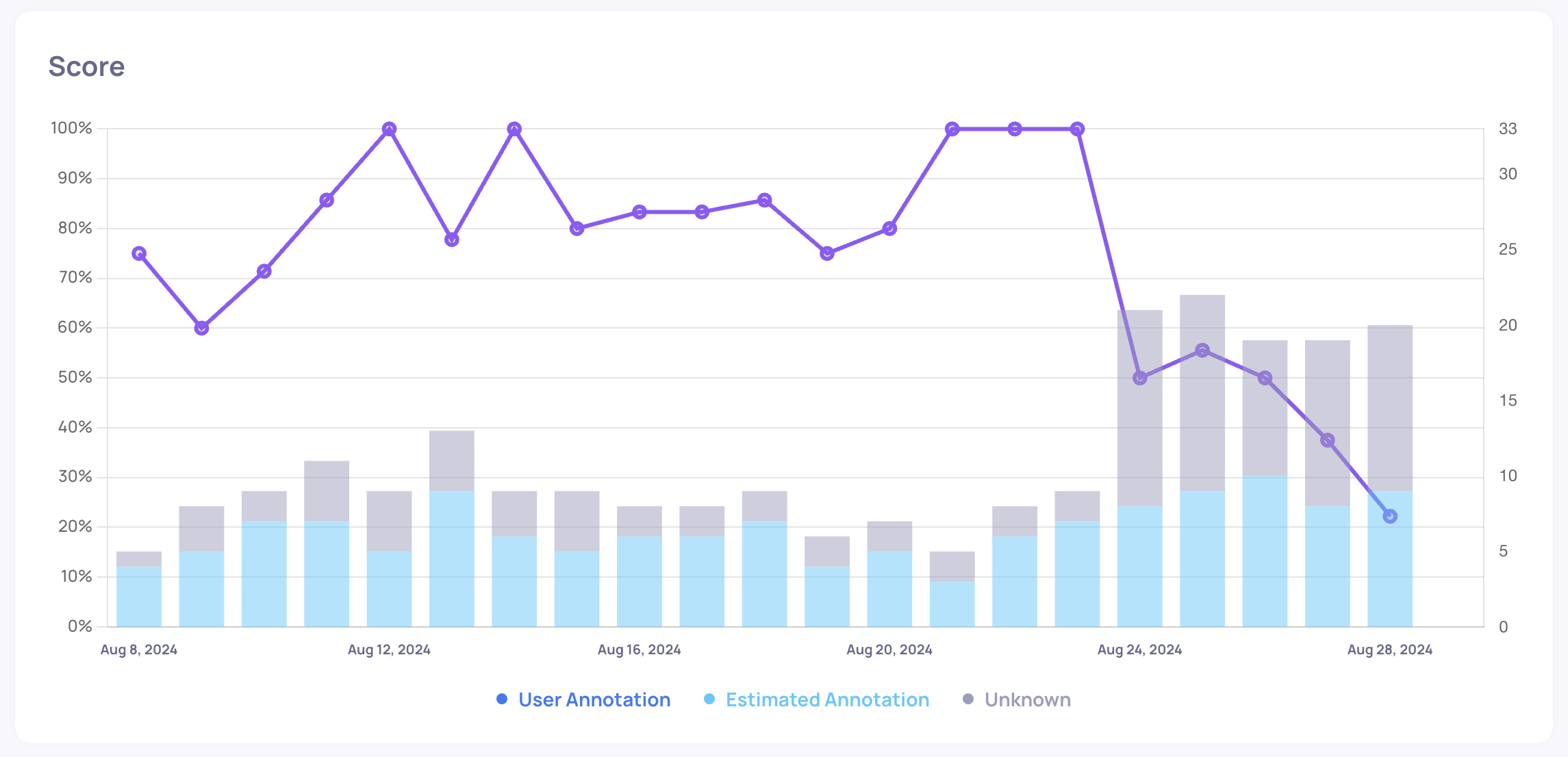

Production Overtime View Improvements

- The default view now loads a time range covering the most recent weeks of data.