0.38.0 Release Notes

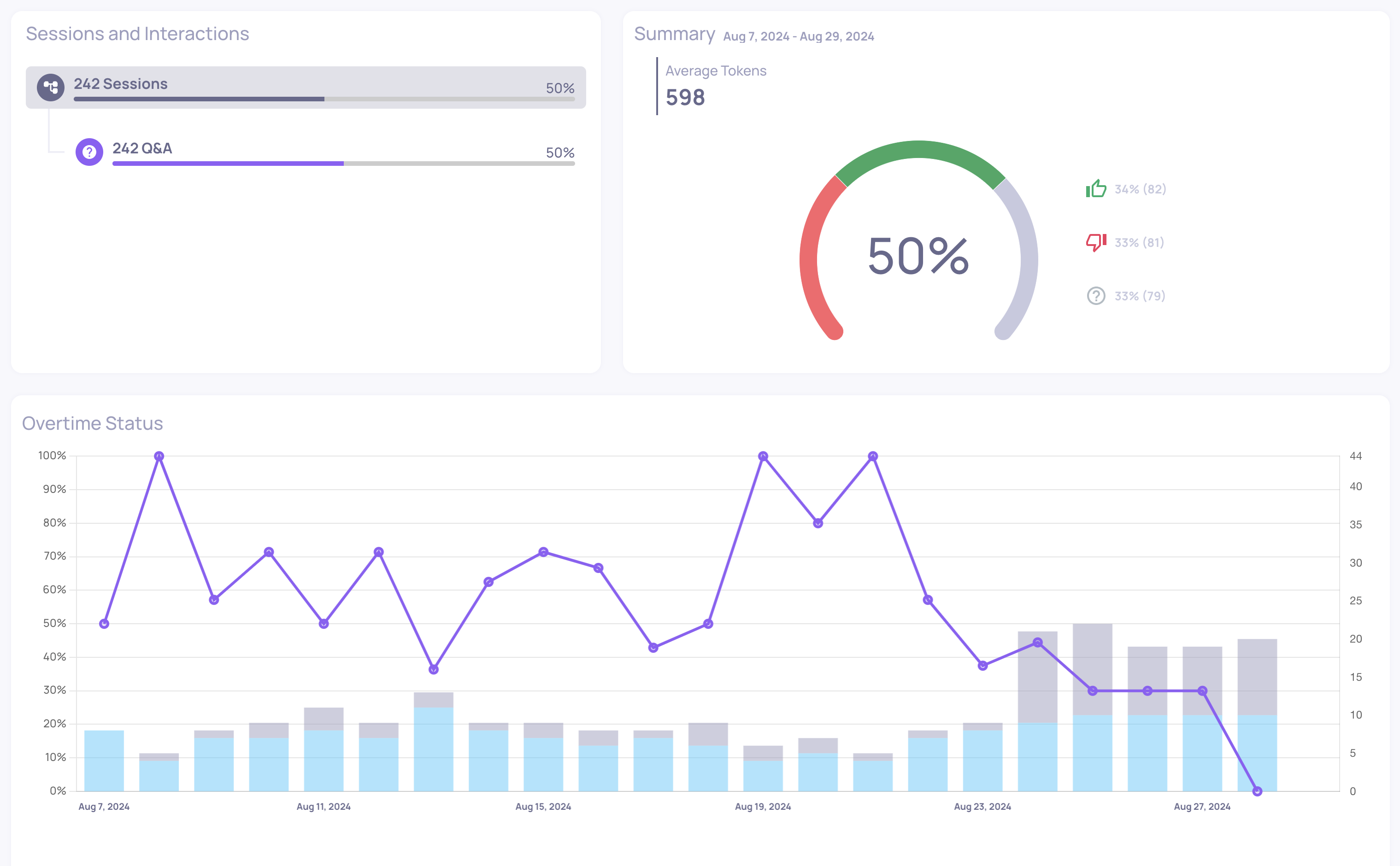

by Yaron Friedman

We’re excited to announce version 0.38 of Deepchecks LLM Evaluation - introducing framework-agnostic data ingestion for agentic workflows, a more expressive Avoidance evaluation property, and a new Metric Viewer role. This release expands who can use Deepchecks, improves failure signal quality, and strengthens access control and platform clarity.

Deepchecks LLM Evaluation 0.38.0 Release:

- 🧩 Framework-Agnostic Agentic Data Ingestion

- 🚫 Avoided Answer → Avoidance (Enhanced Property)

- 🔐 New RBAC Role: Metric Viewer

- 🤖 New Models Available for LLM-Based Features

- ⚠️ SDK Deprecation Notice: send_spans()

What's New and Improved?

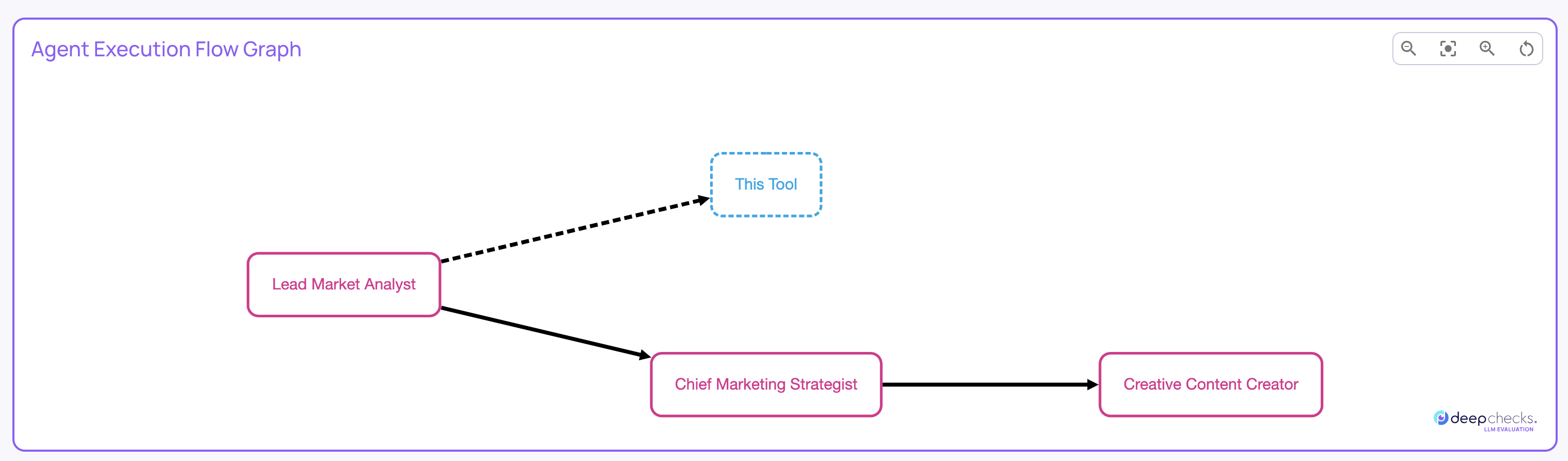

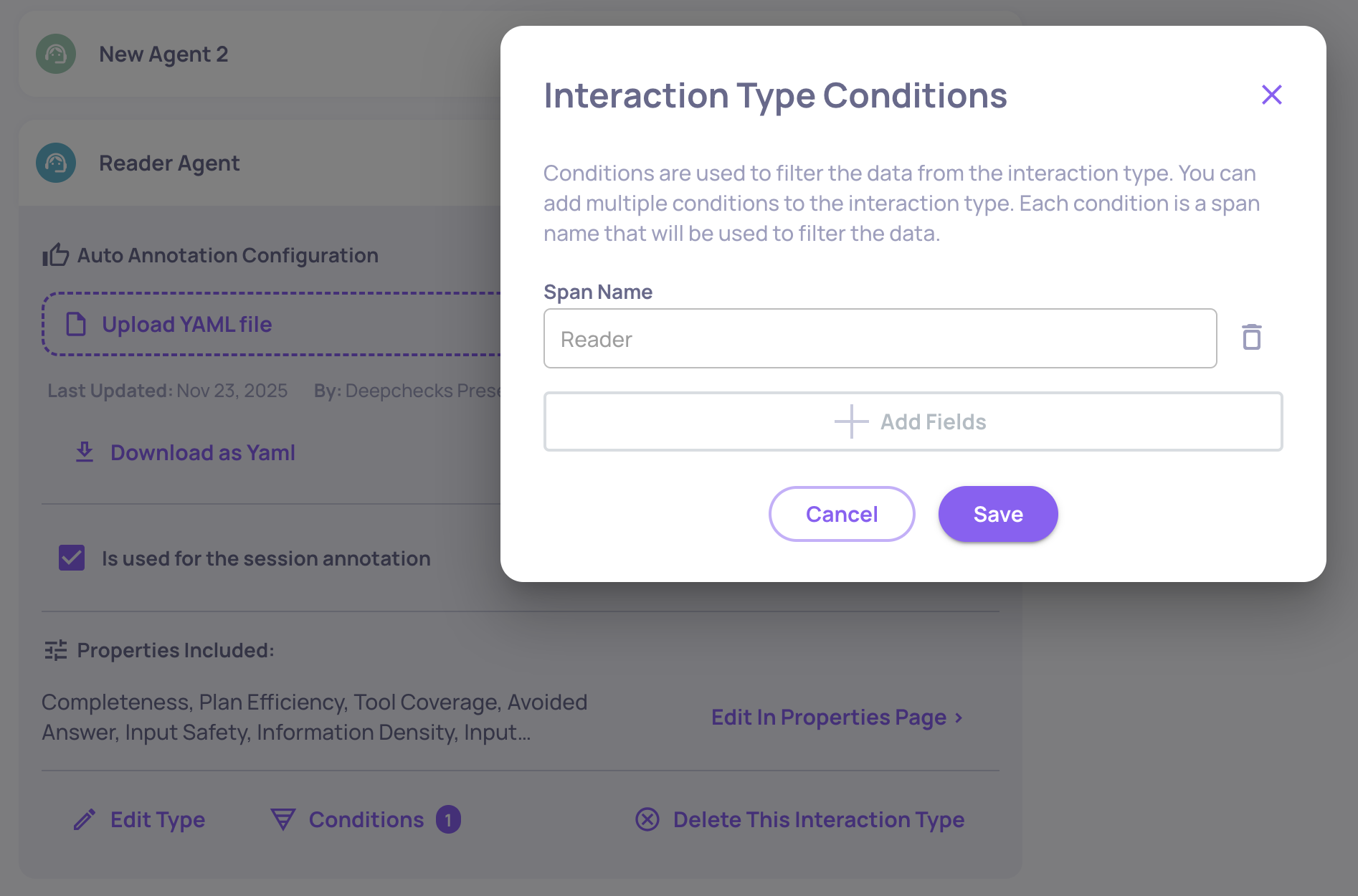

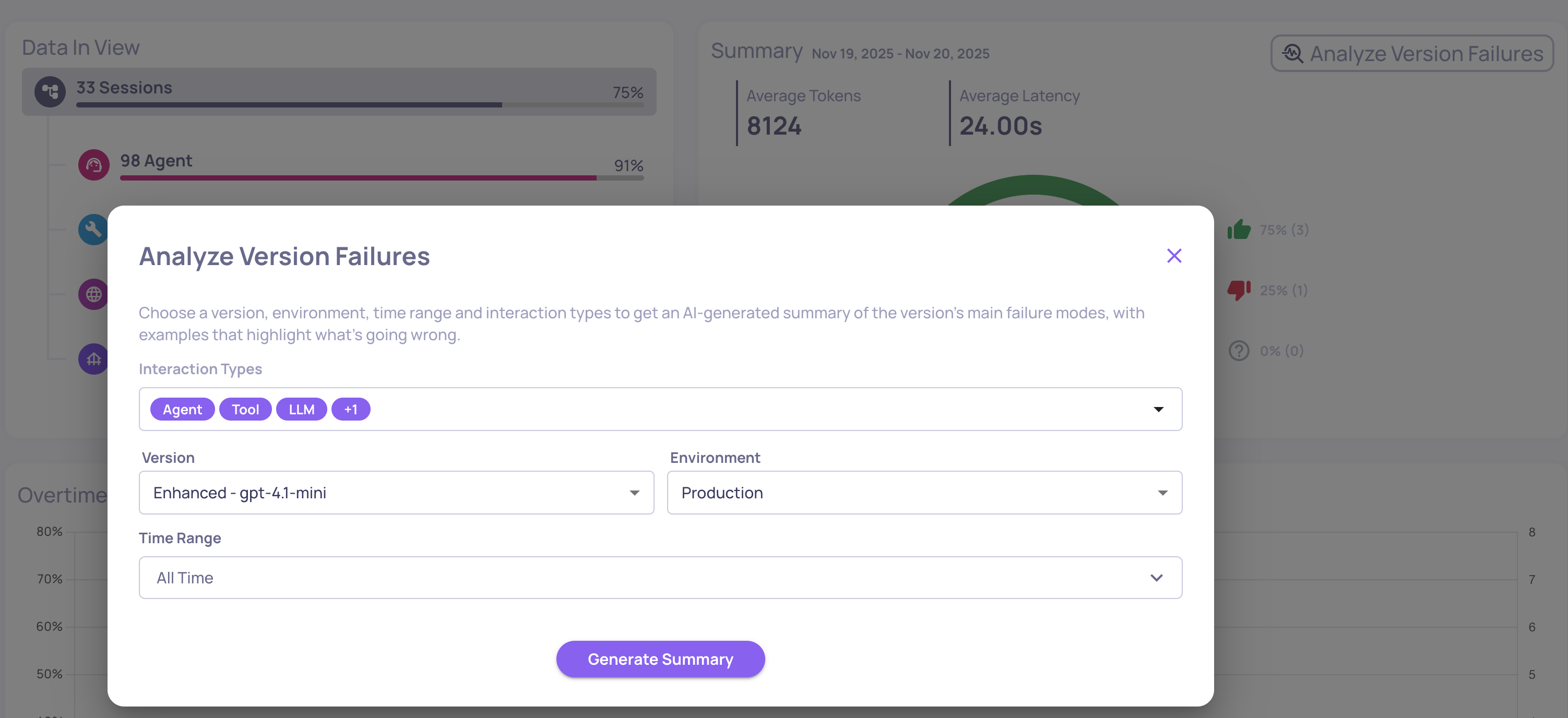

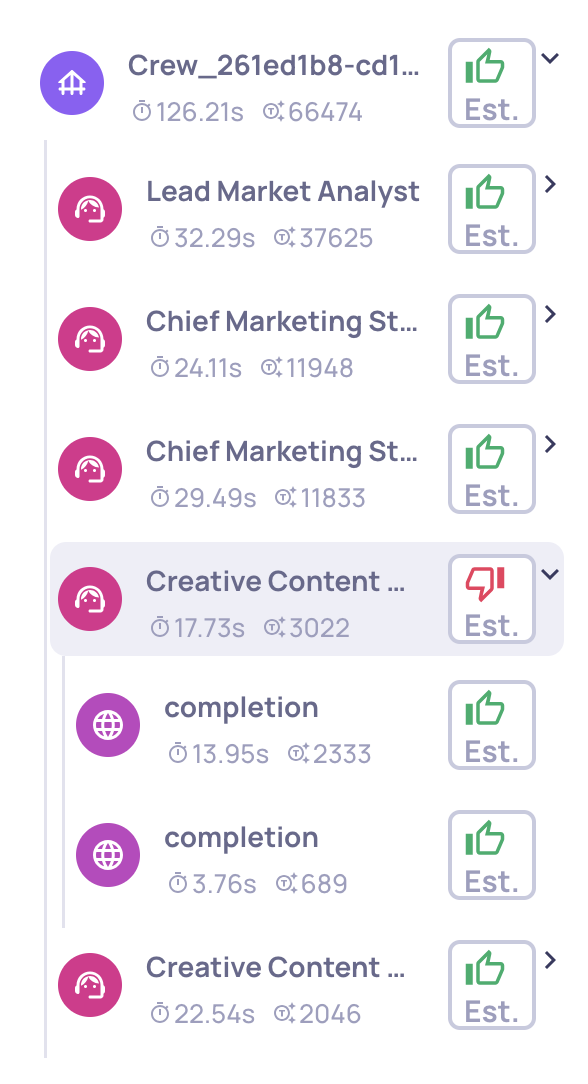

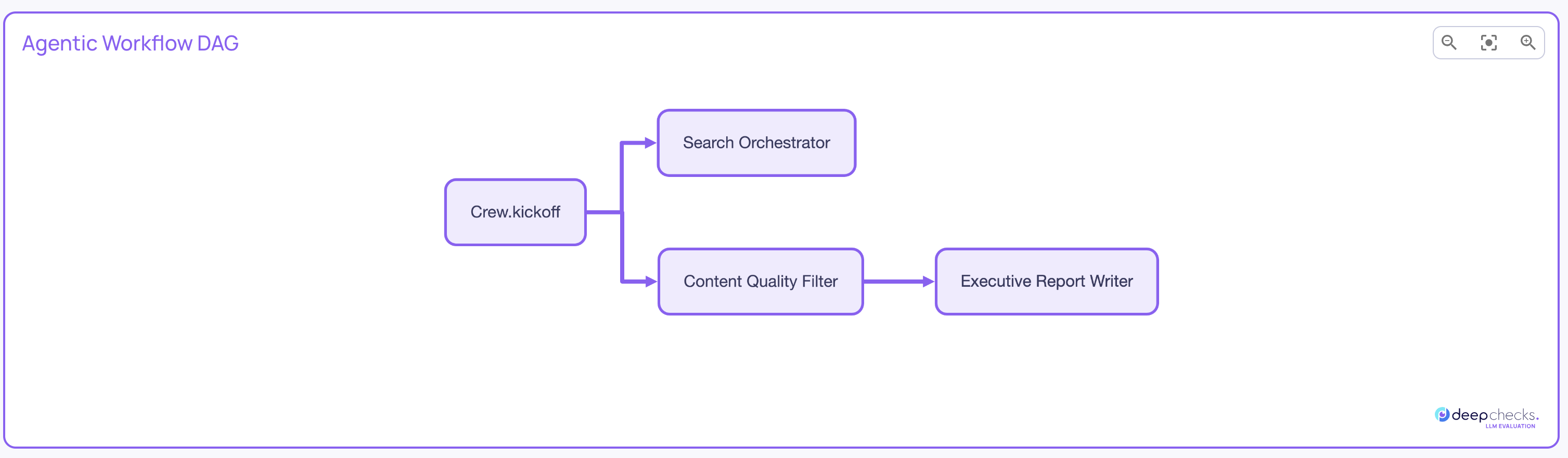

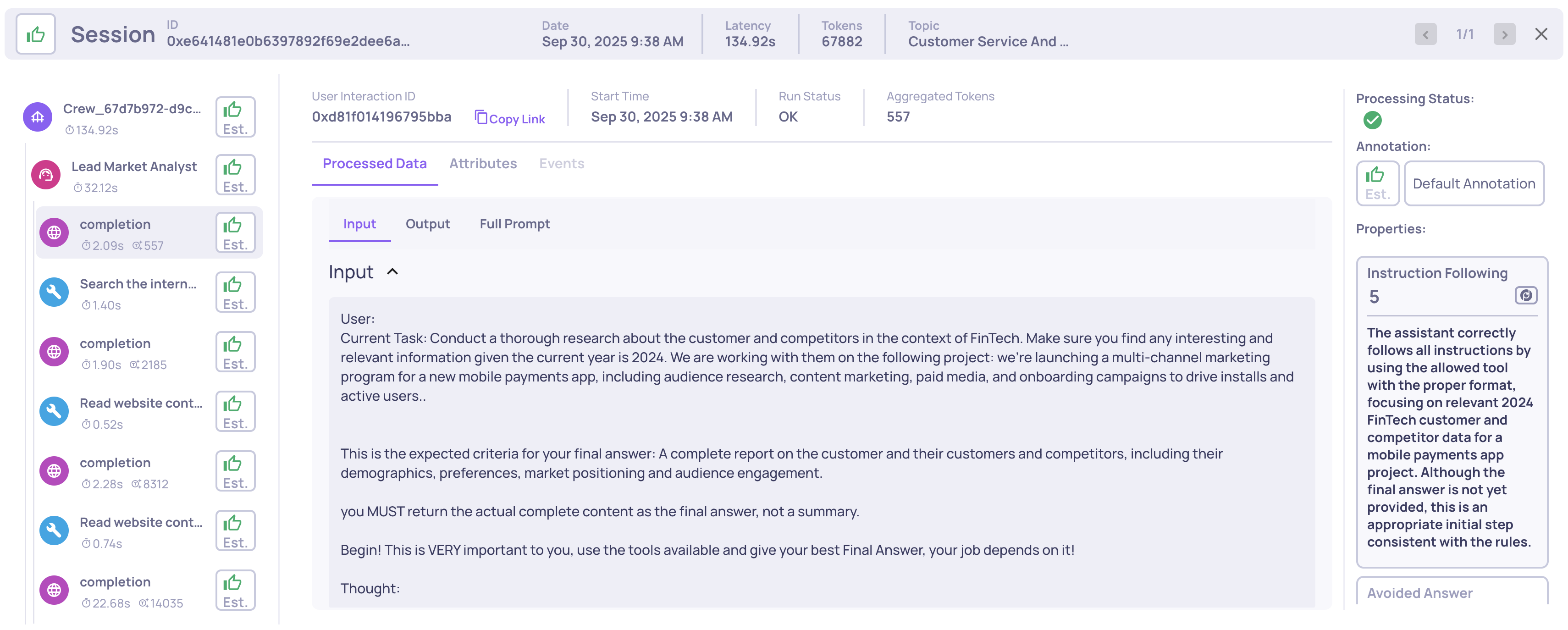

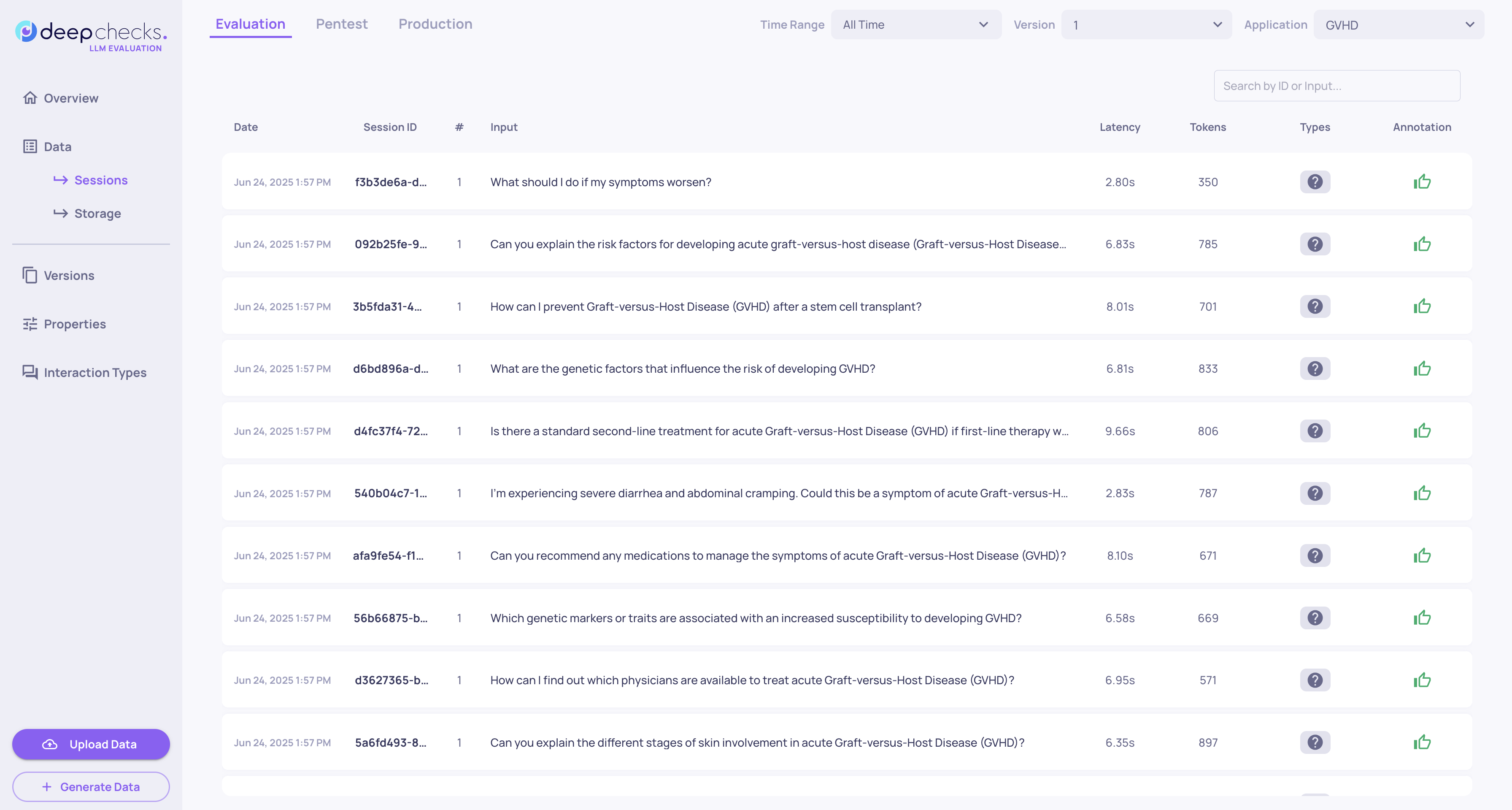

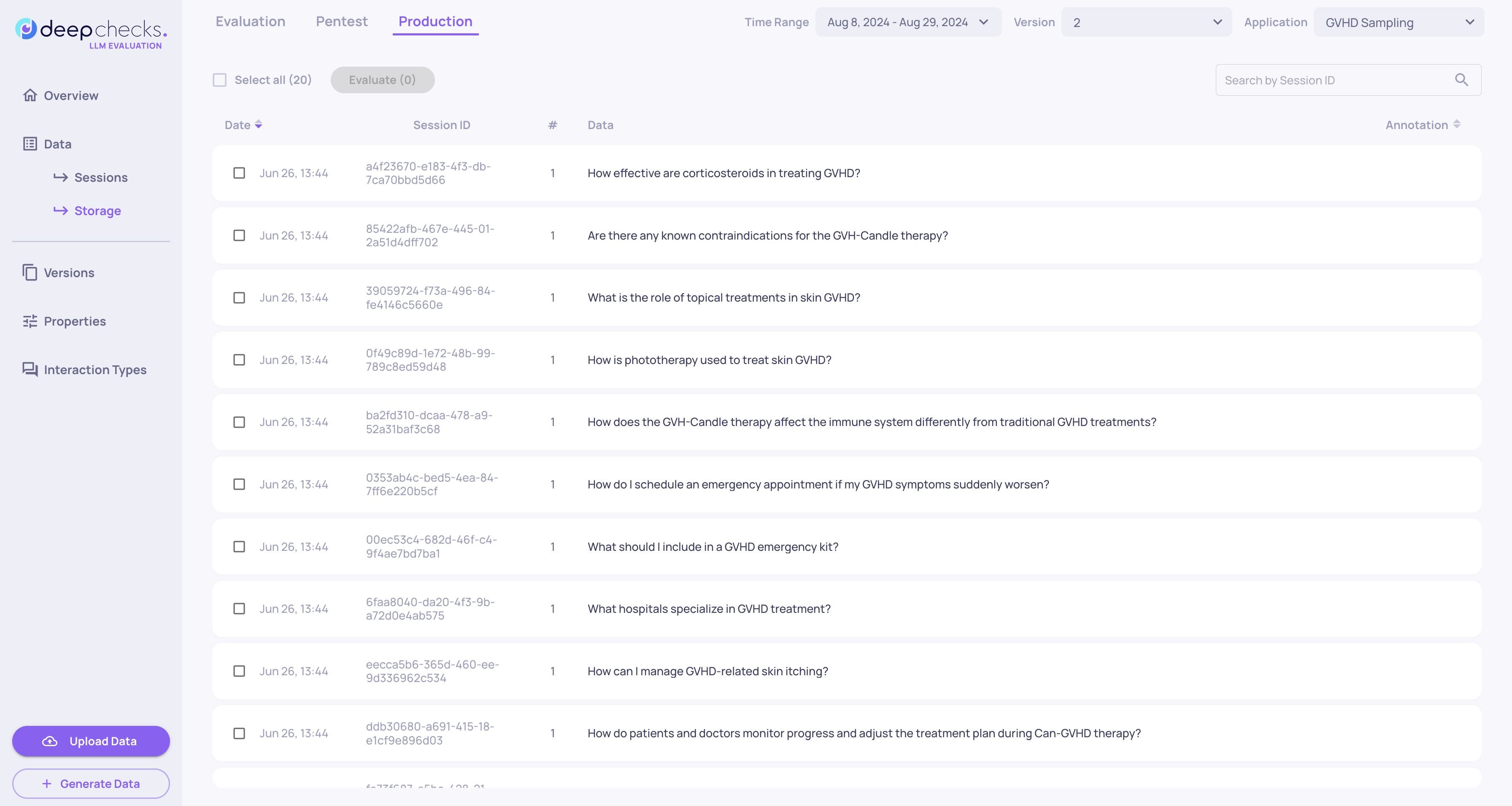

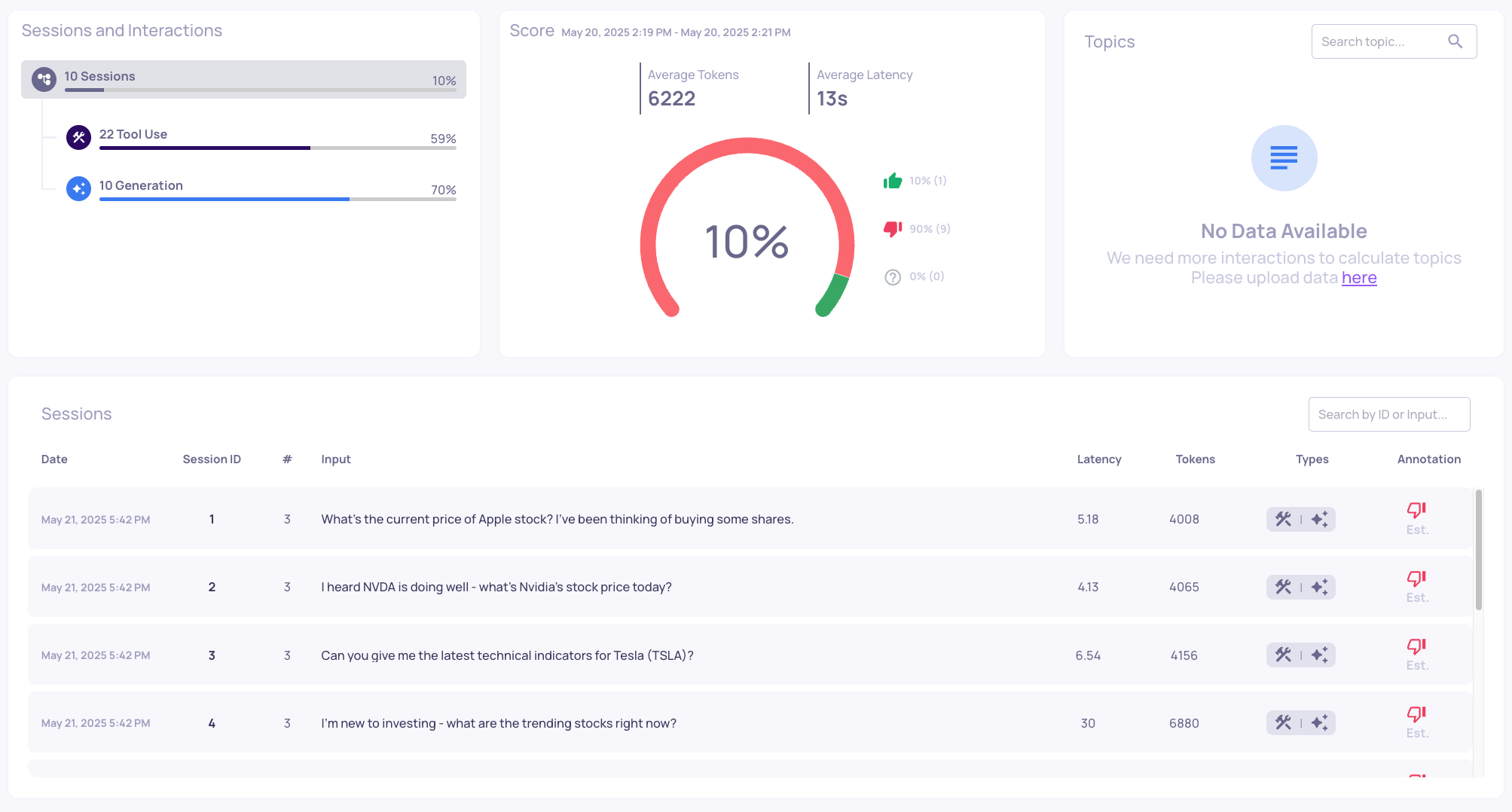

Framework-Agnostic Agentic Data Ingestion

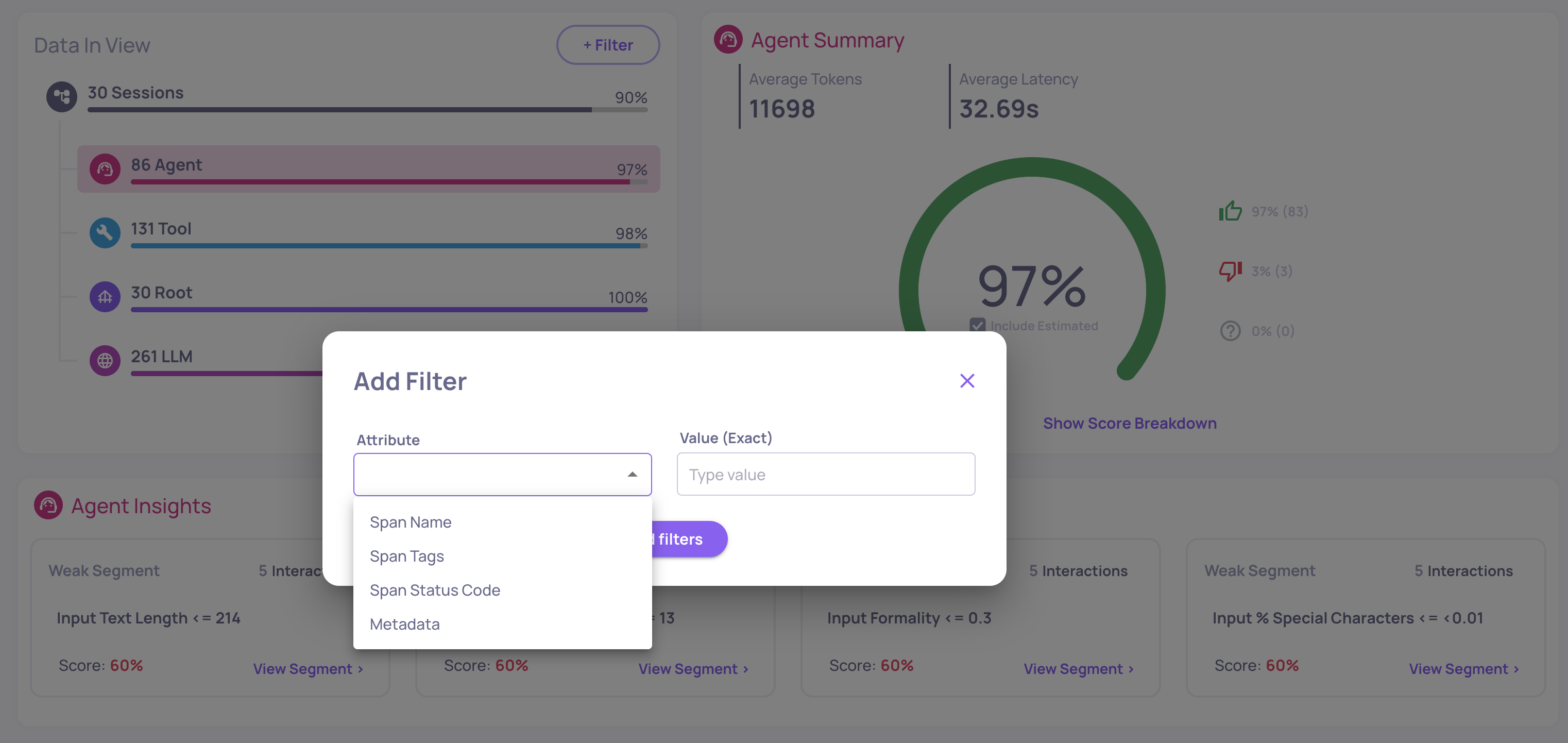

Deepchecks now supports uploading agentic and complex workflow data via the SDK, without relying on automatic tracing from a supported framework. This enables full observability and evaluation for teams using custom frameworks, in-house orchestration layers, or unsupported agent runtimes.

You can manually structure and send sessions, traces and spans to Deepchecks while still benefiting from the full evaluation, observability, and root-cause analysis capabilities.
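As a rough illustration of the session, trace, and span hierarchy described above, the sketch below builds the nesting by hand and serializes it to JSON. It deliberately does not call the Deepchecks SDK: the class names, field names, and the `kind` labels are all hypothetical and chosen only for this example, so consult the step-by-step guide for the actual schema and upload calls.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Span:
    """One unit of work (hypothetical fields, not the Deepchecks schema)."""
    name: str
    kind: str  # illustrative labels such as "agent" or "tool"
    input: str
    output: str
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    parent_id: Optional[str] = None  # None marks a root span


@dataclass
class Trace:
    """All spans produced while handling a single request."""
    trace_id: str
    spans: List[Span] = field(default_factory=list)

    def add_span(self, name: str, kind: str, input: str, output: str,
                 parent: Optional[Span] = None) -> Span:
        # Link the new span to its parent so the call tree can be rebuilt later.
        span = Span(name, kind, input, output,
                    parent_id=parent.span_id if parent else None)
        self.spans.append(span)
        return span


@dataclass
class Session:
    """A group of related traces, e.g. one user conversation."""
    session_id: str
    traces: List[Trace] = field(default_factory=list)


# Build one session with one trace: a root agent span and a child tool span.
session = Session(session_id="session-1")
trace = Trace(trace_id="trace-1")
session.traces.append(trace)

root = trace.add_span("agent_run", "agent",
                      input="Summarize the ticket",
                      output="Summary ready")
trace.add_span("fetch_ticket", "tool",
               input="ticket_id=42",
               output="ticket body",
               parent=root)

# Serialize the nested structure; a real integration would hand the
# equivalent data to the SDK instead of printing it.
payload = json.dumps(asdict(session), indent=2)
print(payload)
```

Keeping an explicit `parent_id` on each span is one simple way to preserve the call tree when the orchestration layer does not emit traces on its own.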

For a step-by-step guide, click here.

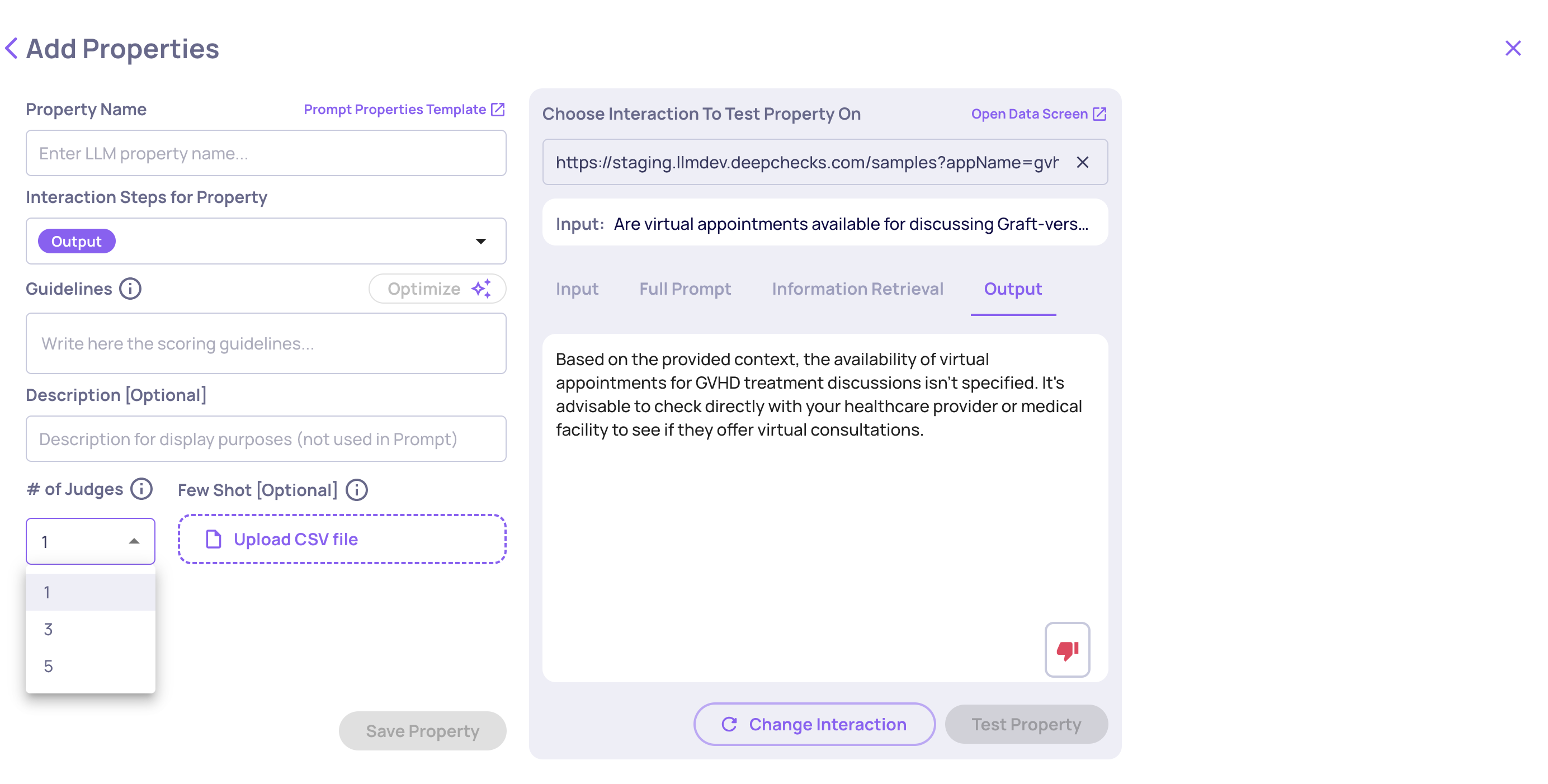

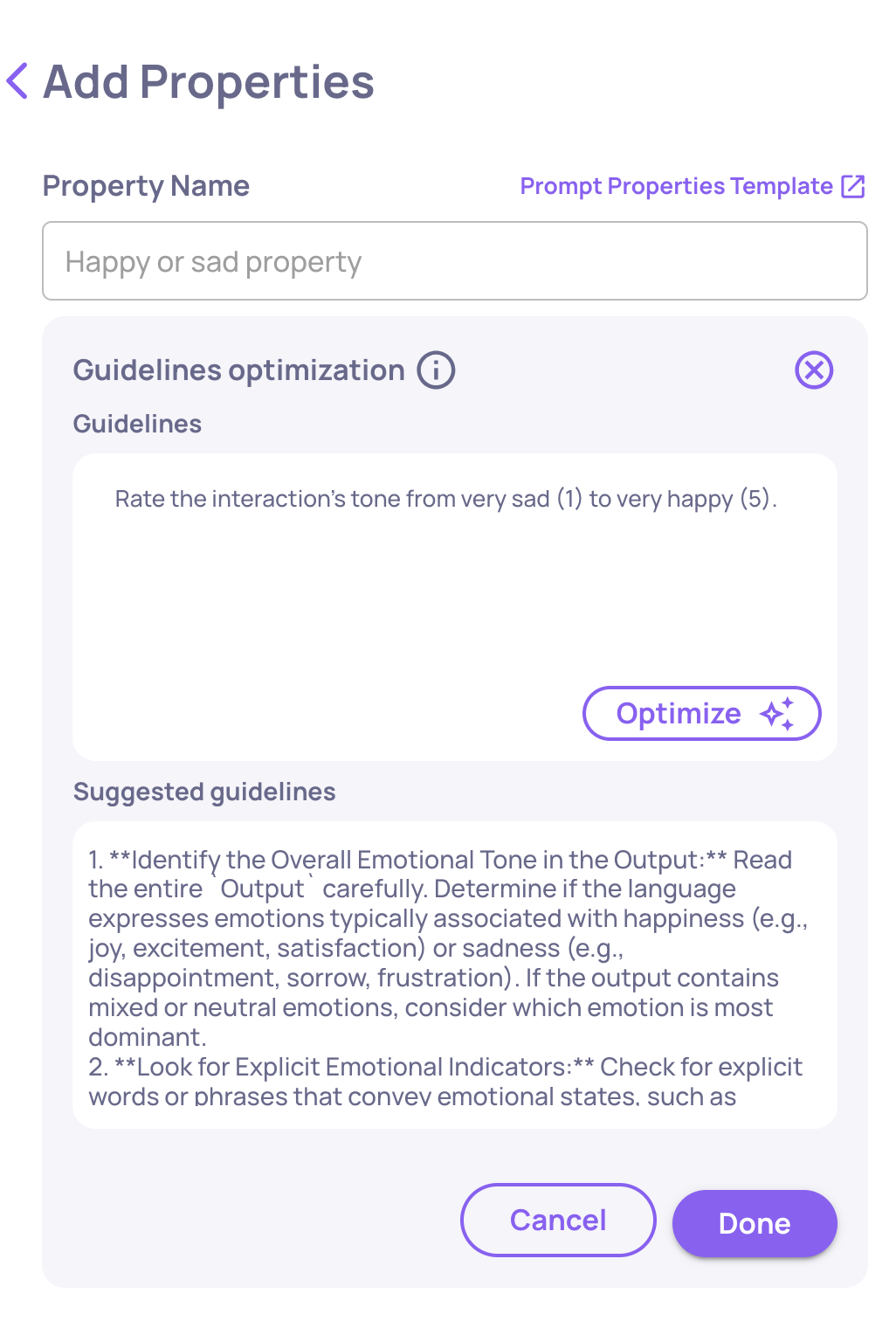

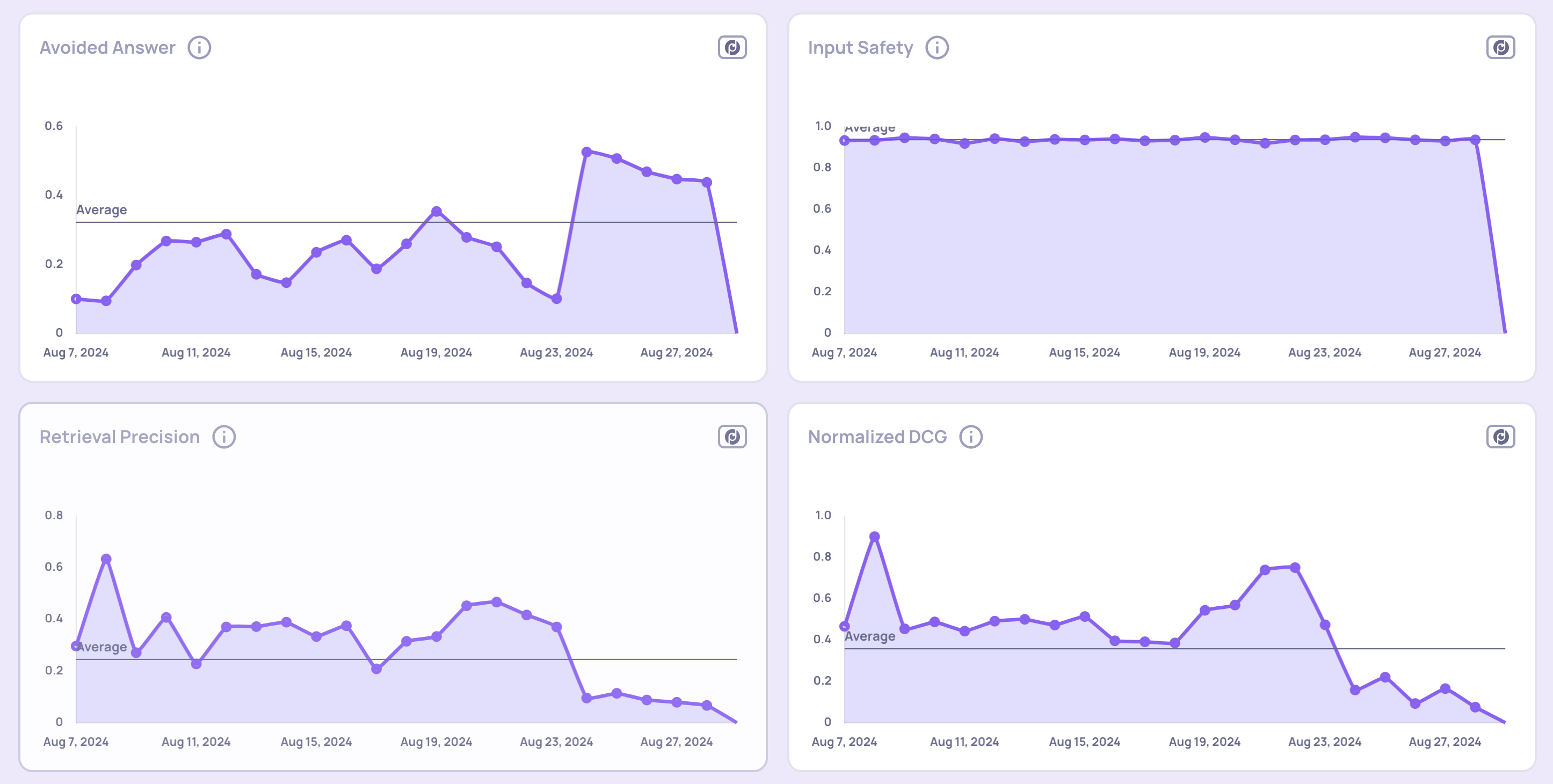

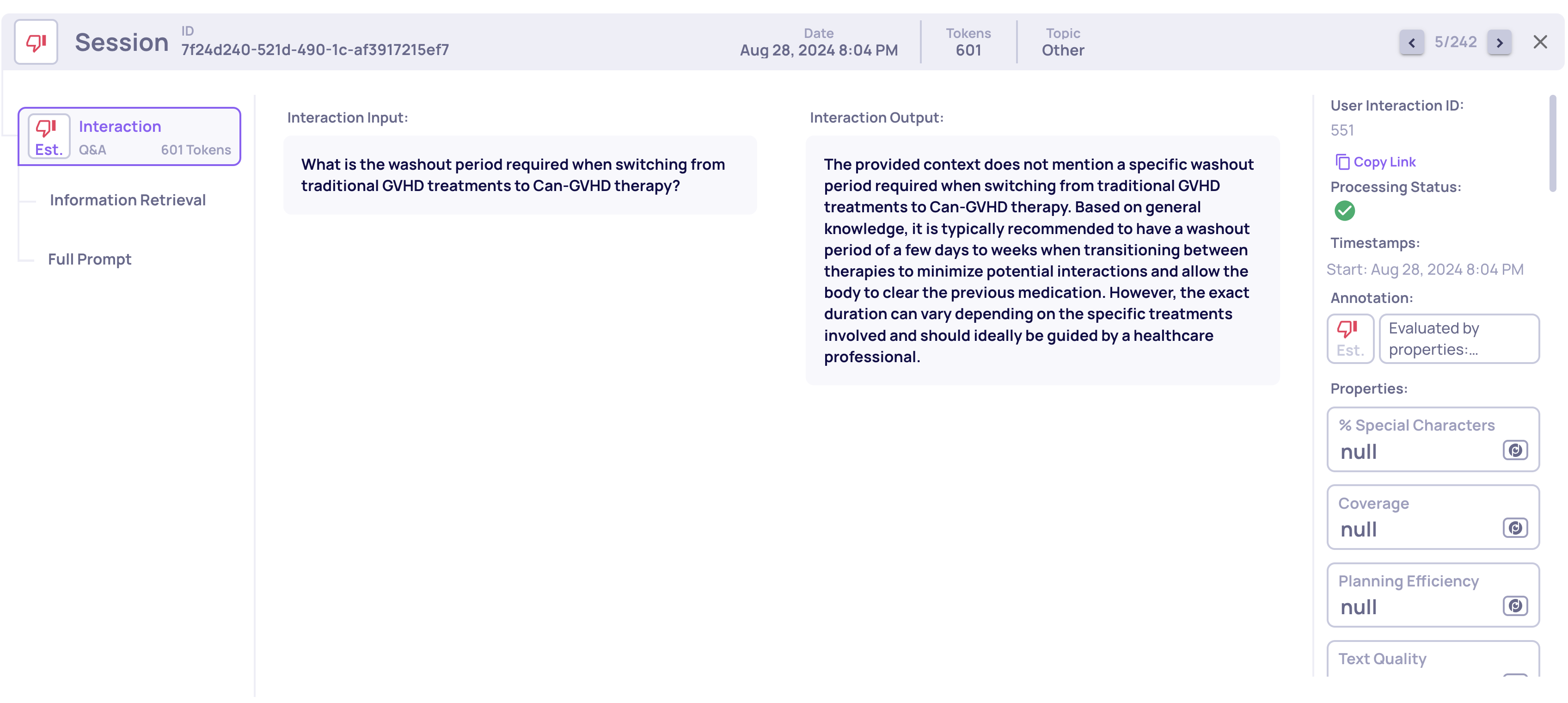

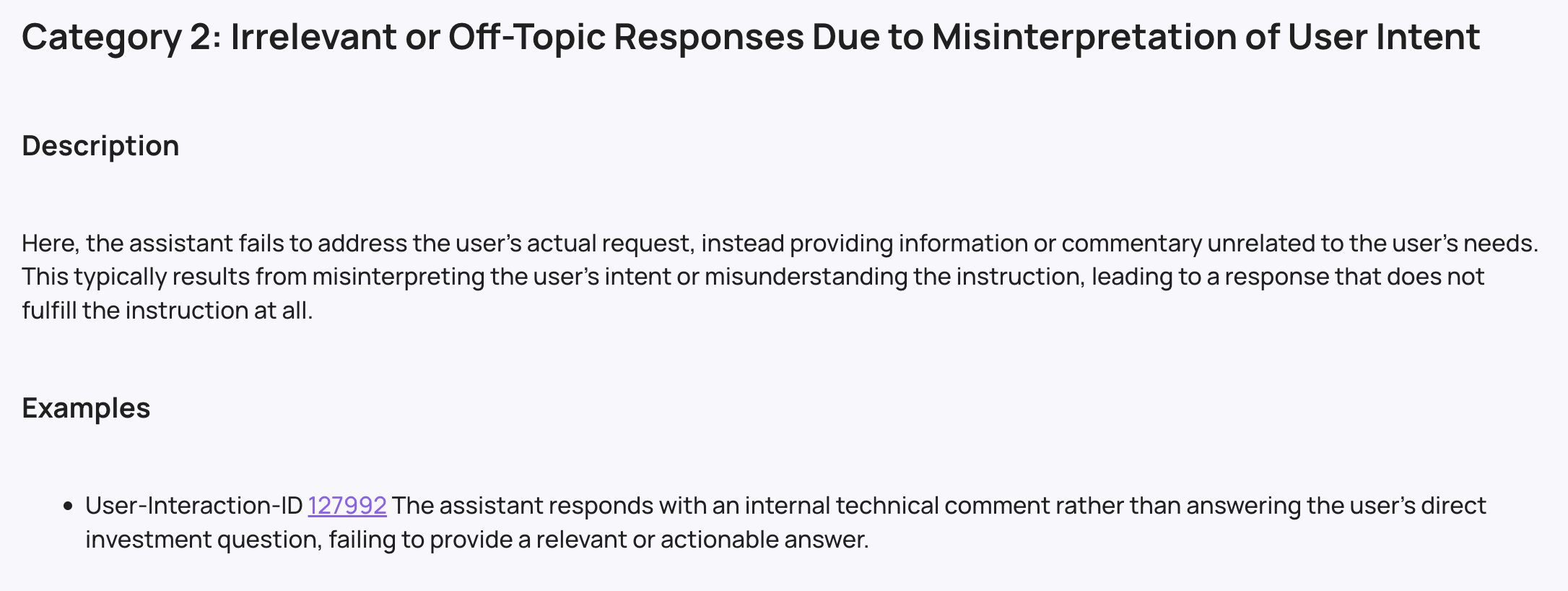

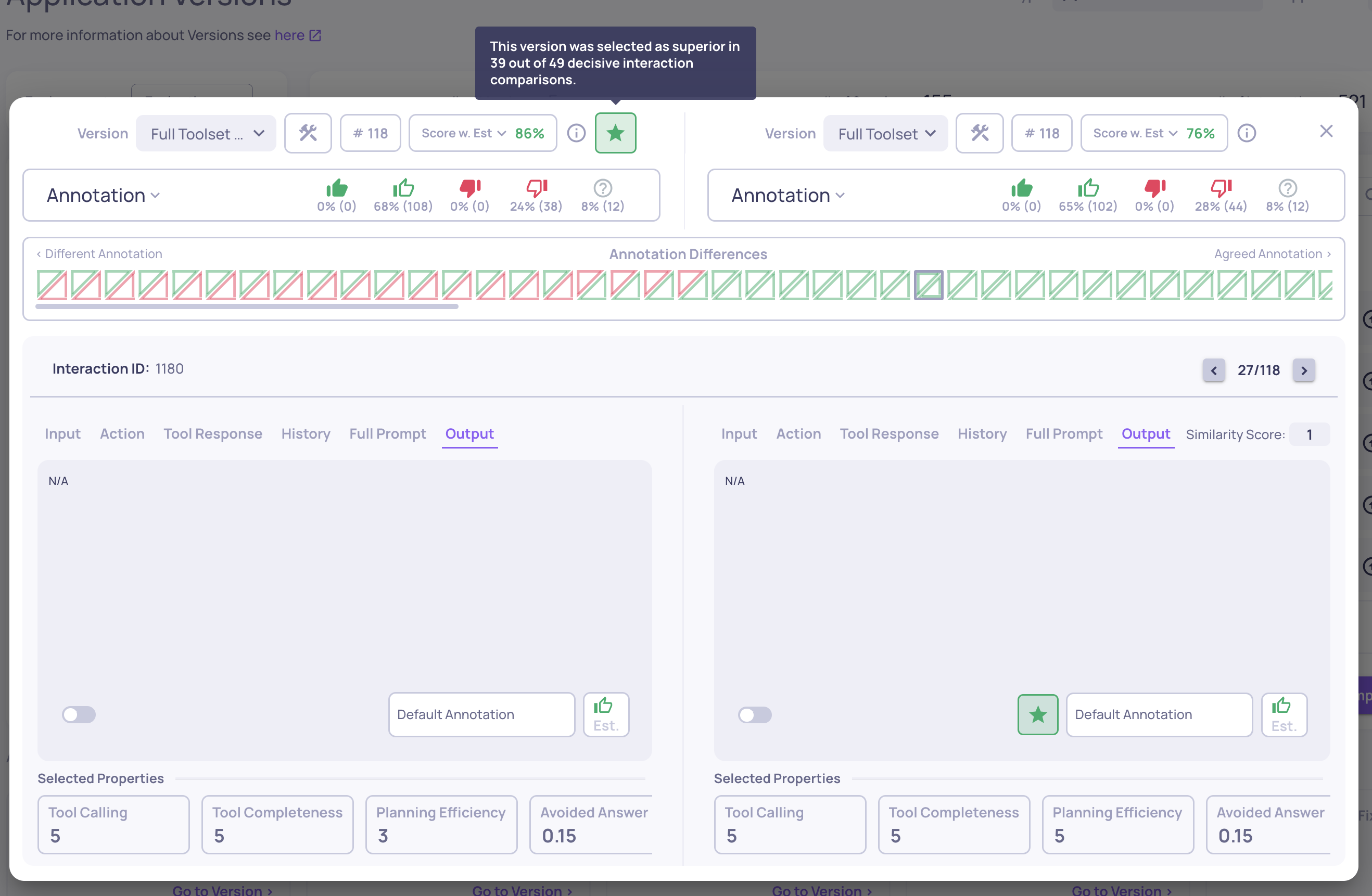

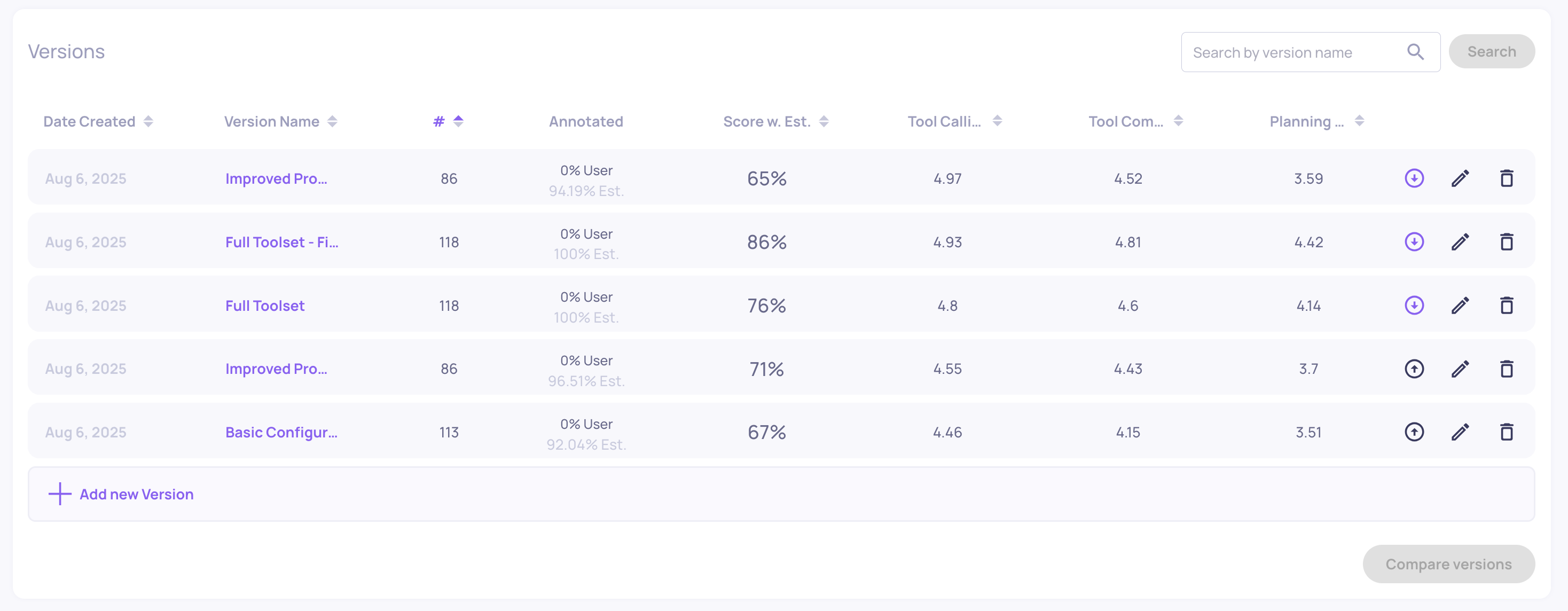

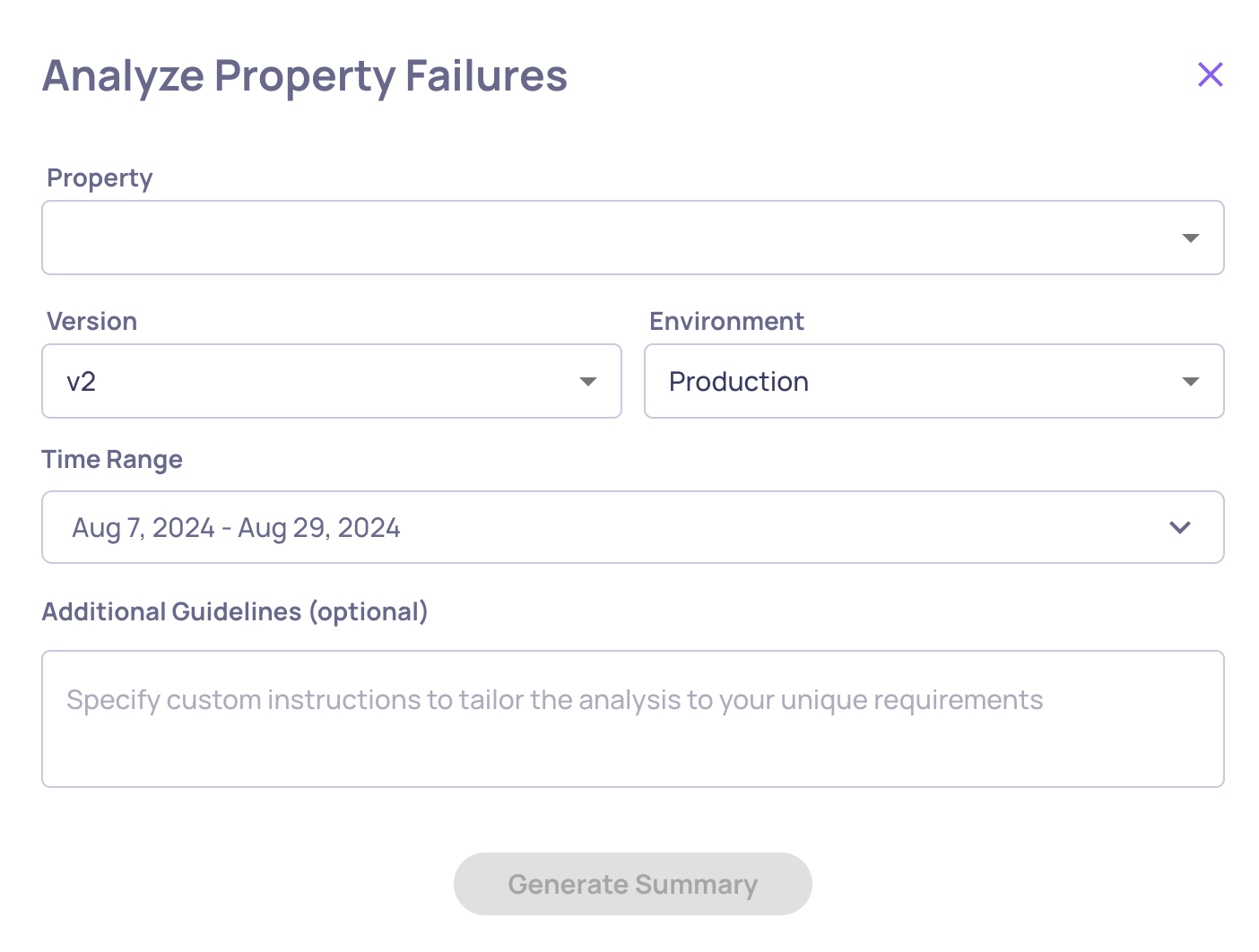

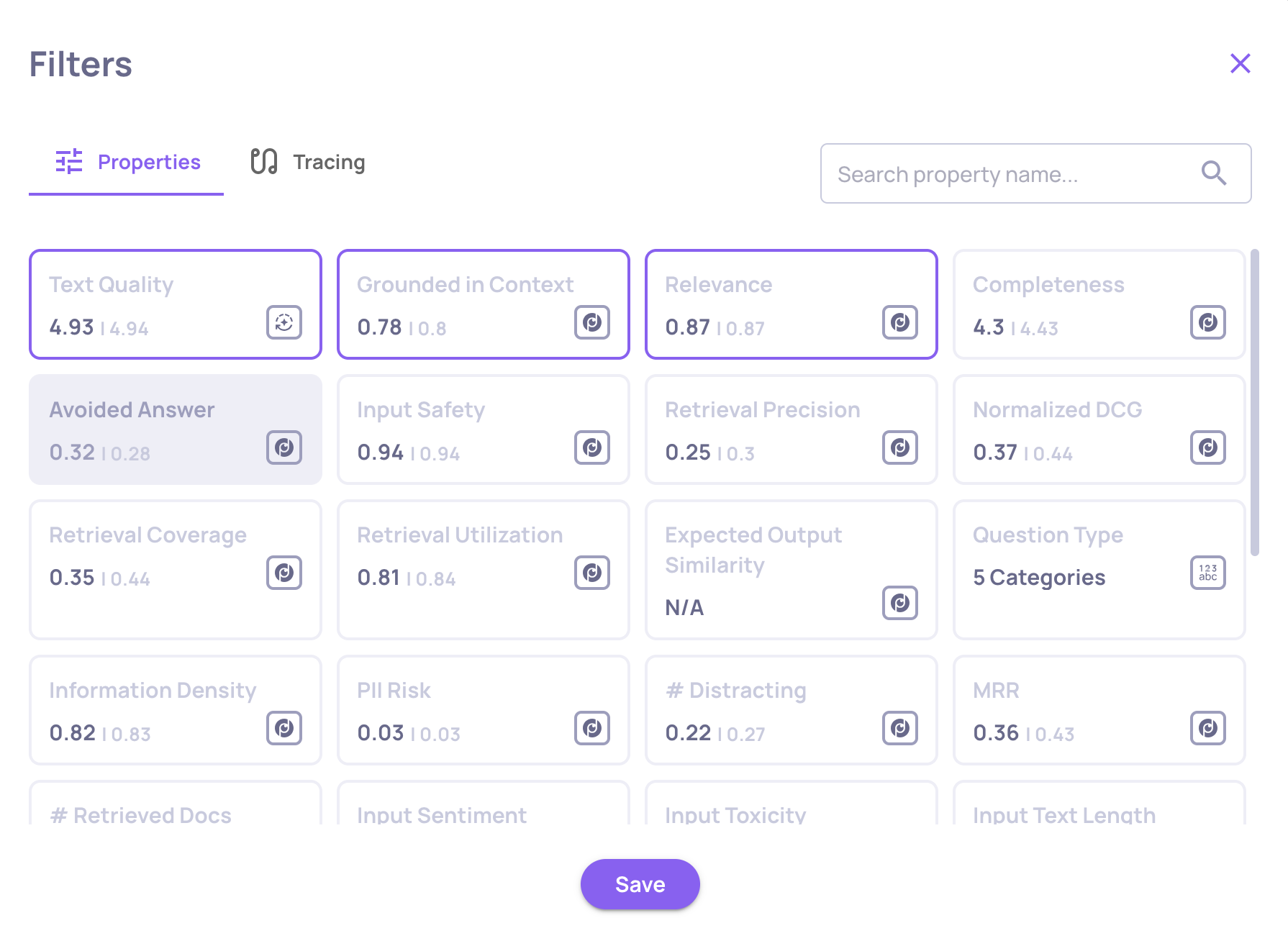

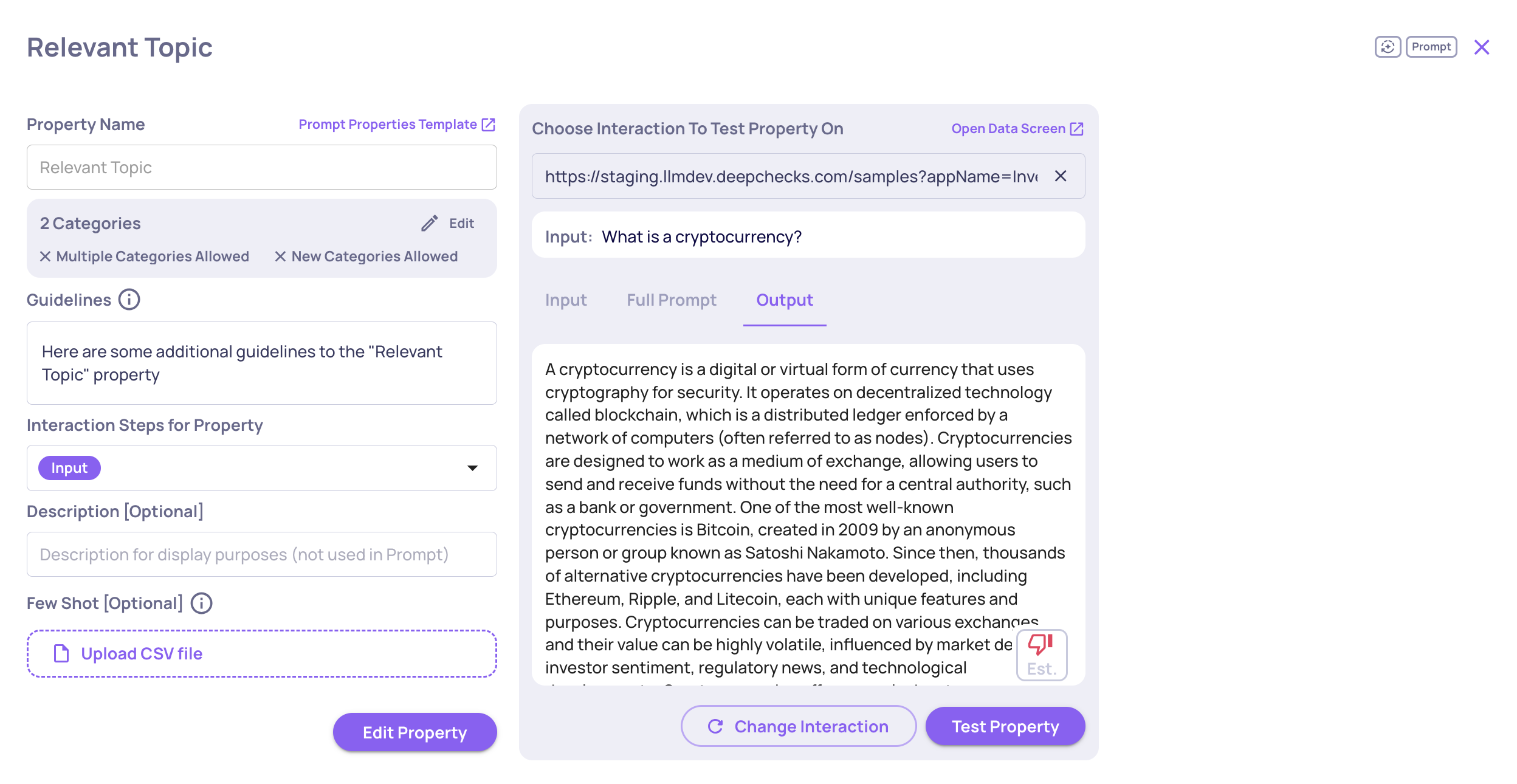

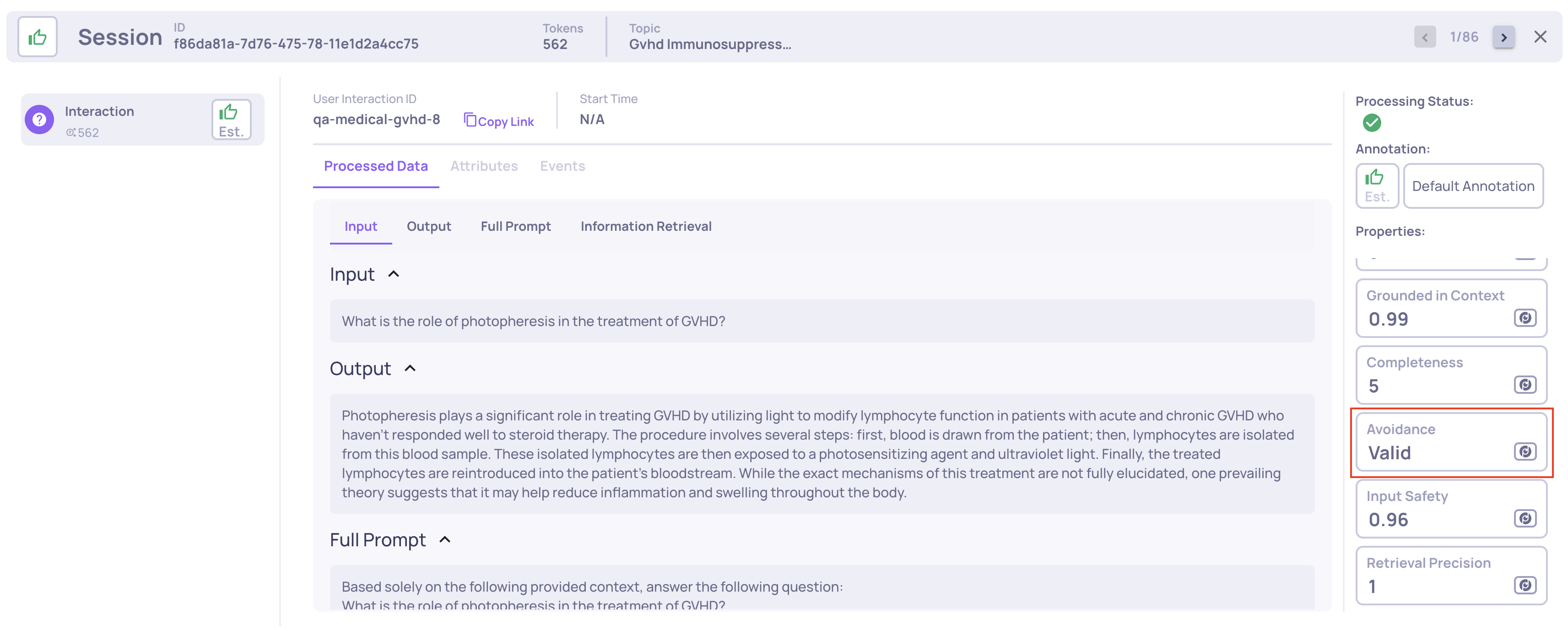

Avoided Answer → Avoidance (Enhanced Property)

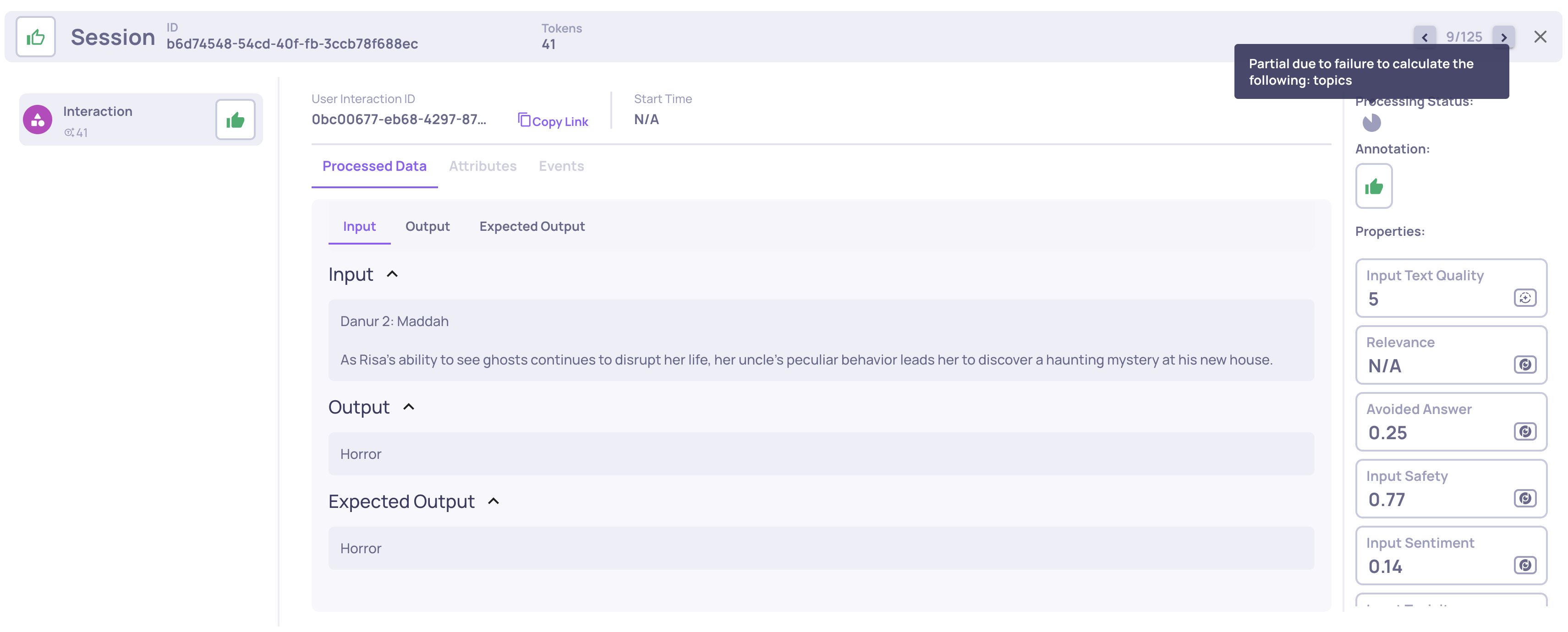

The existing Avoided Answer property has been upgraded to Avoidance, providing richer and more actionable signals.

What changed:

- Previously: a binary (0/1) score indicating whether an answer was avoided.

- Now: a categorical property that distinguishes between:

  - valid: the input was not avoided

  - Specific avoidance modes (e.g. policy-based, lack of knowledge, safety constraints, and more)

This enables clearer diagnosis of why an answer was avoided and supports more meaningful aggregation and analysis across versions.
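To make the difference concrete, here is a small sketch comparing two versions whose binary avoided rate is identical but whose categorical breakdown differs. Only the `valid` label comes from these release notes; the other label strings, the sample data, and the assumption that the legacy score used 1 for "avoided" are illustrative and not taken from the product.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical per-interaction Avoidance labels for two application versions.
# "valid" is the documented non-avoided value; the other strings are made up.
labels_by_version: Dict[str, List[str]] = {
    "v1": ["valid", "valid", "valid", "policy", "policy"],
    "v2": ["valid", "valid", "valid", "safety", "lack_of_knowledge"],
}


def legacy_avoided_score(label: str) -> int:
    """Collapse a categorical label into a binary score (assumed: 1 = avoided)."""
    return 0 if label == "valid" else 1


def summarize(labels: List[str]) -> Tuple[float, Counter]:
    """Return the binary avoided rate and the per-category counts."""
    avoided_rate = sum(legacy_avoided_score(label) for label in labels) / len(labels)
    return avoided_rate, Counter(labels)


for version, labels in labels_by_version.items():
    rate, counts = summarize(labels)
    print(version, f"avoided_rate={rate:.2f}", dict(counts))
```

Both versions show the same binary rate, so a 0/1 score alone would suggest nothing changed, while the category counts reveal that the reasons for avoidance shifted between versions.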

📌 Deprecation note: The legacy Avoided Answer property is deprecated but will continue to function for existing applications.

For full property definitions and migration details, click here.

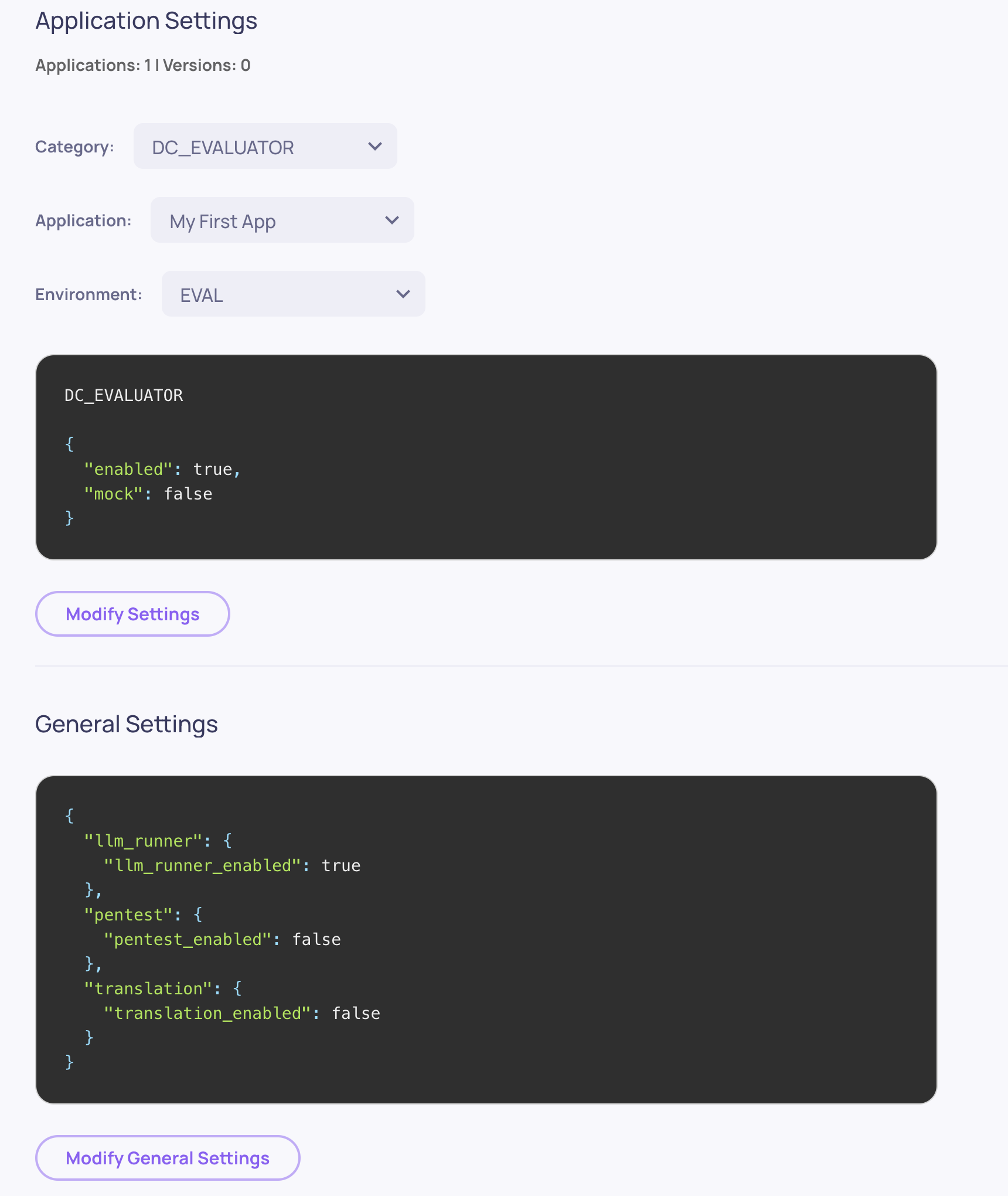

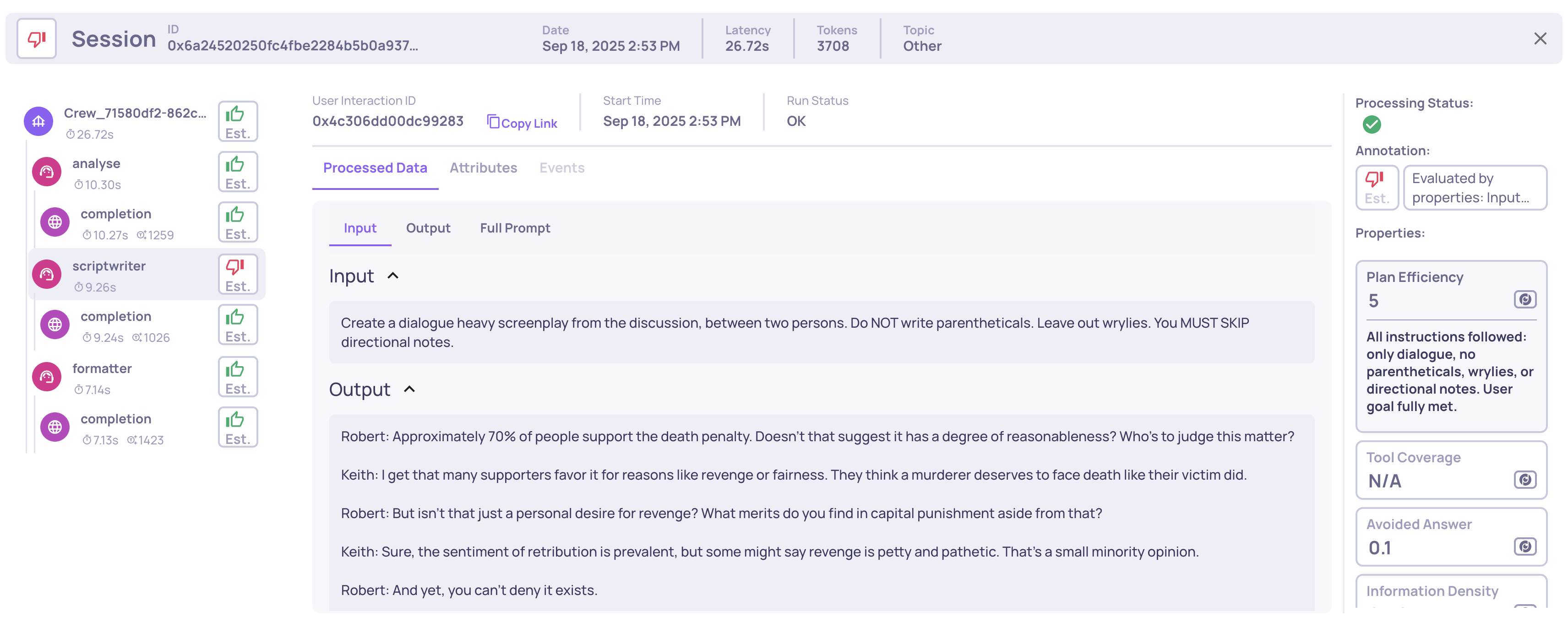

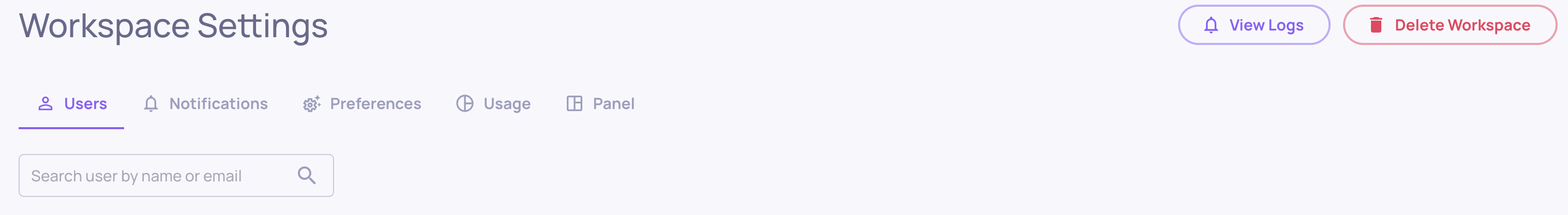

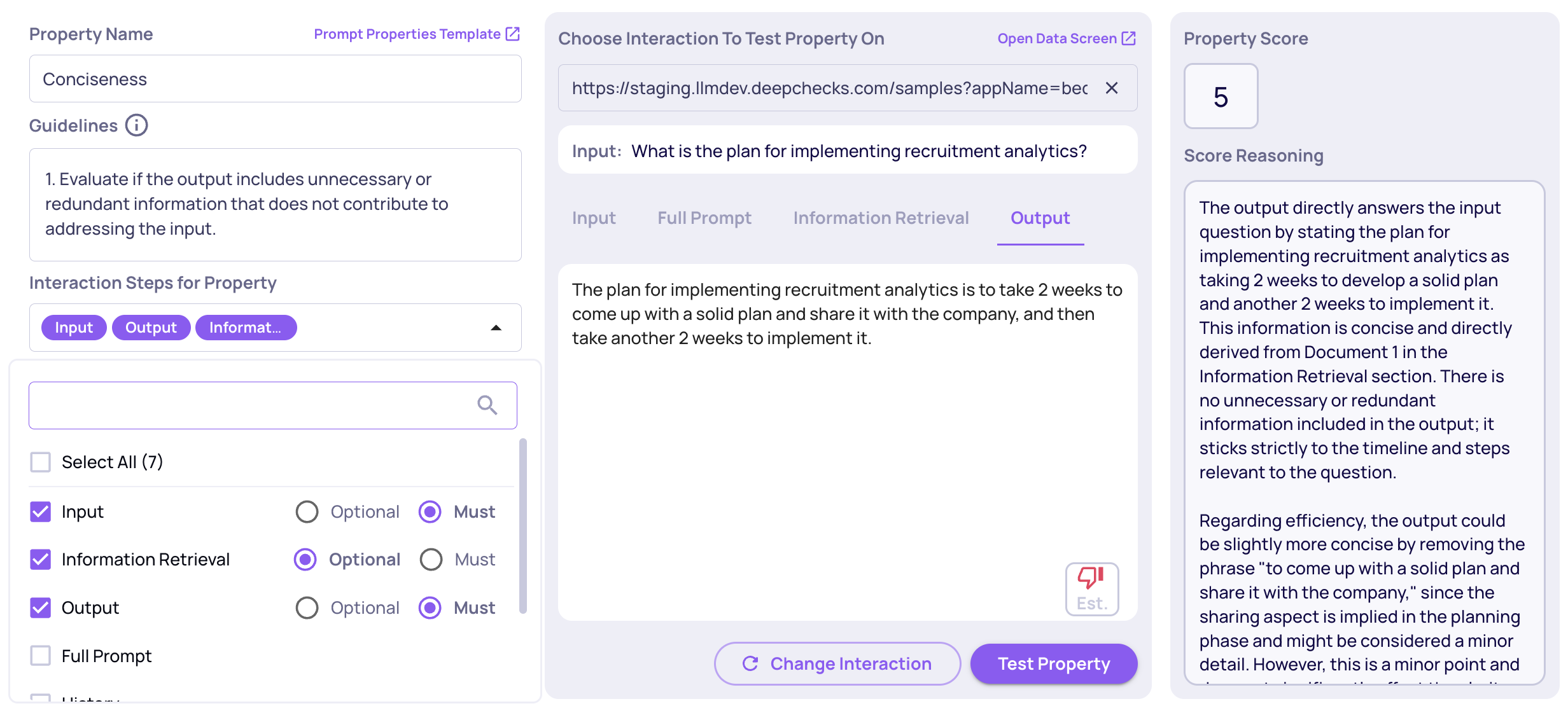

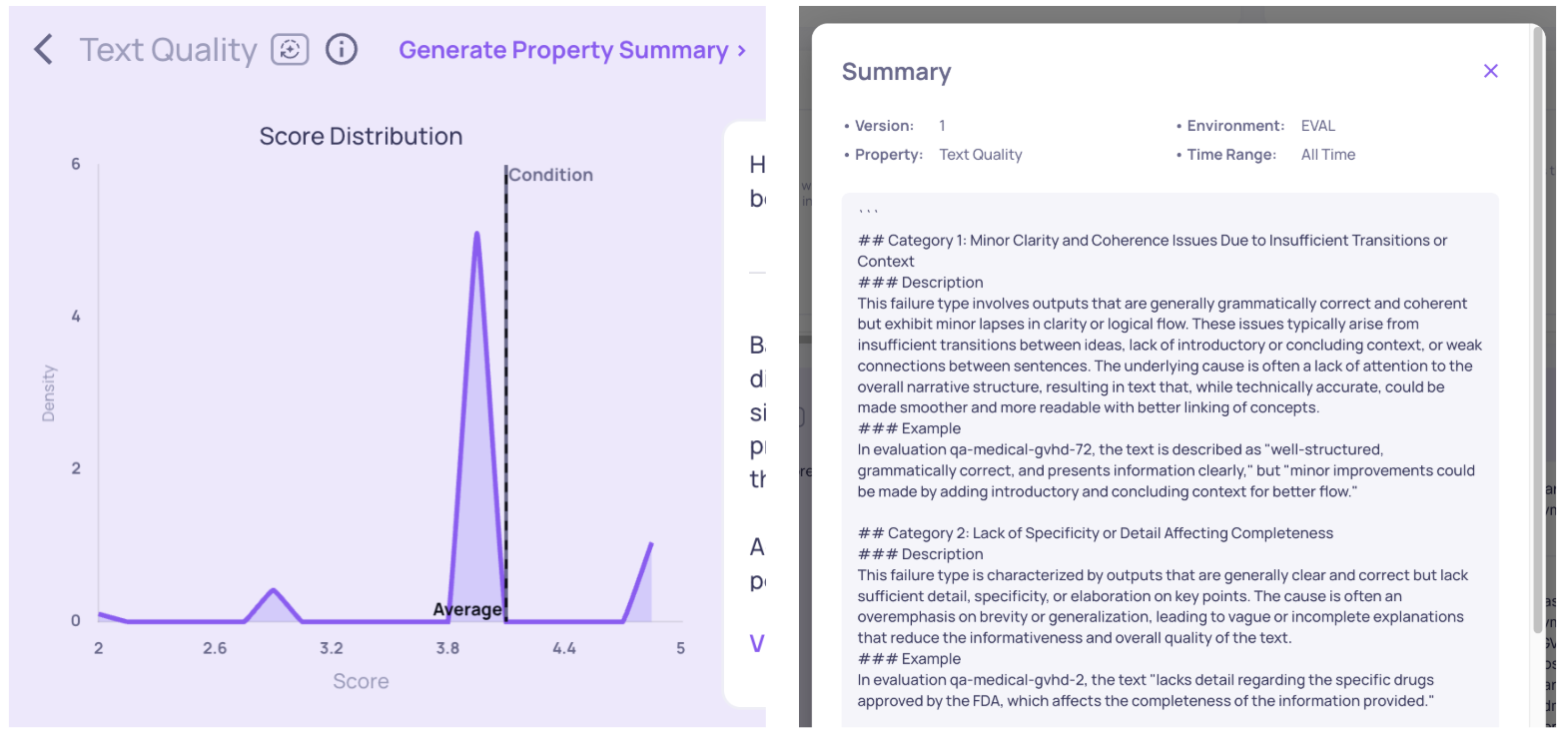

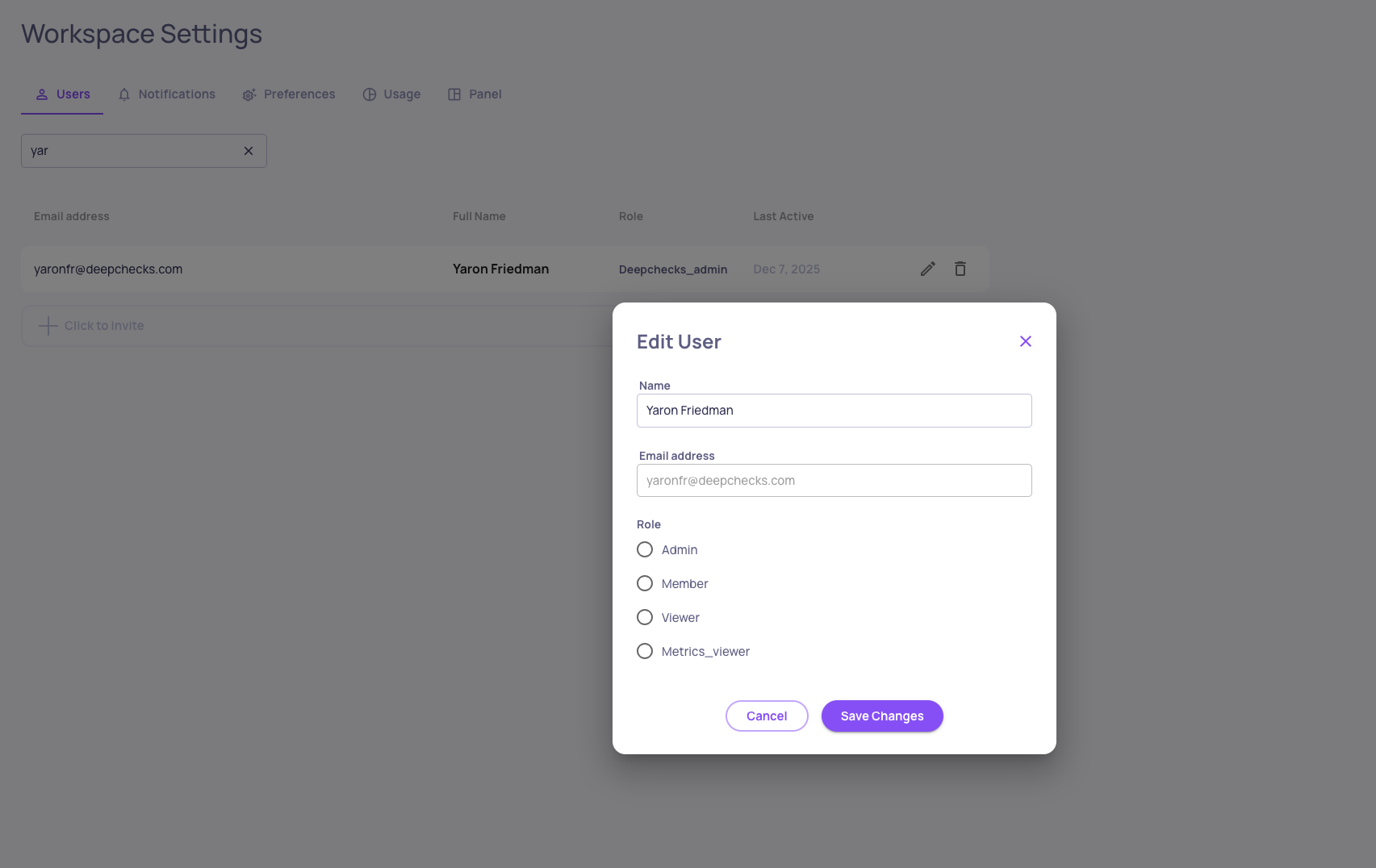

New RBAC Role: Metric Viewer

We’ve added a new role to Deepchecks Role-Based Access Control: Metric Viewer.

This role is designed for stakeholders who need high-level insights without access to raw data.

Metric Viewer capabilities:

- Read-only access to aggregated metrics and evaluation results

- Access limited to the version level

- ❌ No access (via UI or SDK) to raw spans and traces

- ❌ No write permissions

This complements the existing Viewer role by enabling stricter data-access boundaries for security-sensitive environments.

To learn more about Deepchecks RBAC roles, click here.

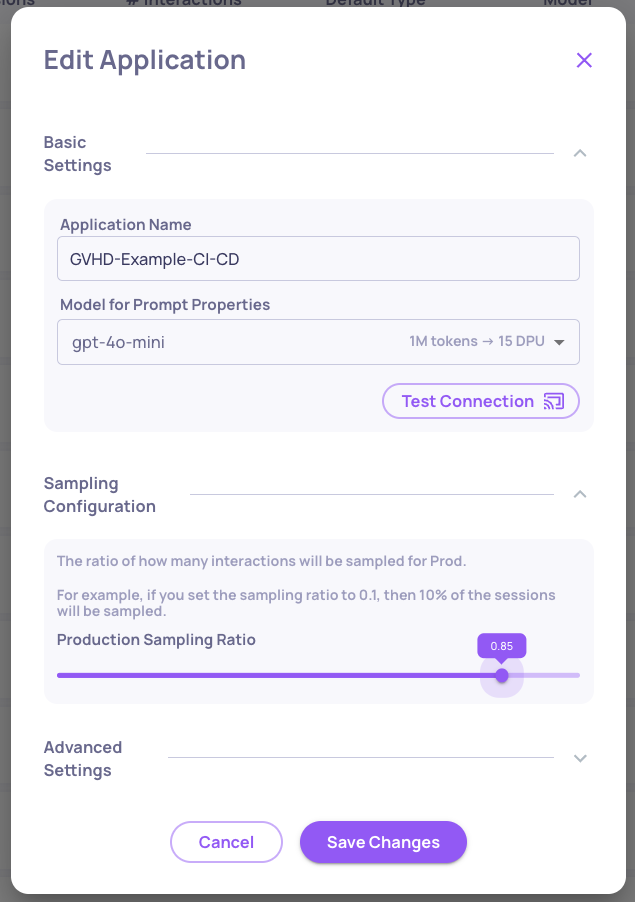

New Models Available for LLM-Based Features

The following models are now supported:

- GPT-5.1

- Amazon Nova 2 Lite

- Amazon Nova Pro

These models can be selected for evaluation, analysis, and automation features across the platform.

SDK Deprecation Notice: send_spans()

The SDK function send_spans() has been renamed to log_spans_file() to better reflect its behavior and usage.

📌 Deprecation notice:

send_spans() is now deprecated and will remain supported for the next few releases. We recommend migrating to log_spans_file() to ensure forward compatibility.

Updated SDK documentation and examples reflect the new function name.
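When planning the migration, it can help to first locate every remaining call to the deprecated name. The sketch below is a generic, standard-library-only helper that scans Python sources for `send_spans(` calls; it does not import or call the Deepchecks SDK, and the helper names `find_deprecated_calls` and `scan_tree` are hypothetical. It matches text line by line, so mentions inside comments or strings are reported as well.

```python
import re
from pathlib import Path
from typing import Dict, List

# Matches calls such as client.send_spans(...) but not resend_spans(...)
# or my_send_spans(...), because \b needs a non-word character before "send".
DEPRECATED_CALL = re.compile(r"\bsend_spans\s*\(")


def find_deprecated_calls(source: str) -> List[int]:
    """Return 1-based line numbers that contain a send_spans( call."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if DEPRECATED_CALL.search(line)
    ]


def scan_tree(root: str) -> Dict[str, List[int]]:
    """Map each .py file under root to the lines that still use send_spans()."""
    hits: Dict[str, List[int]] = {}
    for path in Path(root).rglob("*.py"):
        text = path.read_text(encoding="utf-8", errors="ignore")
        lines = find_deprecated_calls(text)
        if lines:
            hits[str(path)] = lines
    return hits


# Small in-memory example; the call arguments are placeholders only.
sample = (
    "client.send_spans(path)\n"
    "client.log_spans_file(path)\n"
    "resend_spans(path)\n"
)
print(find_deprecated_calls(sample))
```

Running `scan_tree(".")` from a project root lists the files and lines to update to log_spans_file().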