0.29.0 Release Notes

9 months ago by Shir Chorev

This version includes enhanced session visibility, improved annotation defaults, and streamlined property feedback, along with additional features and stability and performance improvements, all part of our 0.29.0 release.

Deepchecks LLM Evaluation 0.29.0 Release:

- 🔍 Sessions View in Overview Screen

- 📝 Improved Prompt Property Reasoning

- 🎯 Introduced Support for Sampling

- ⚙️ Updated Default Annotation Logic

What's New and Improved?

Sessions View

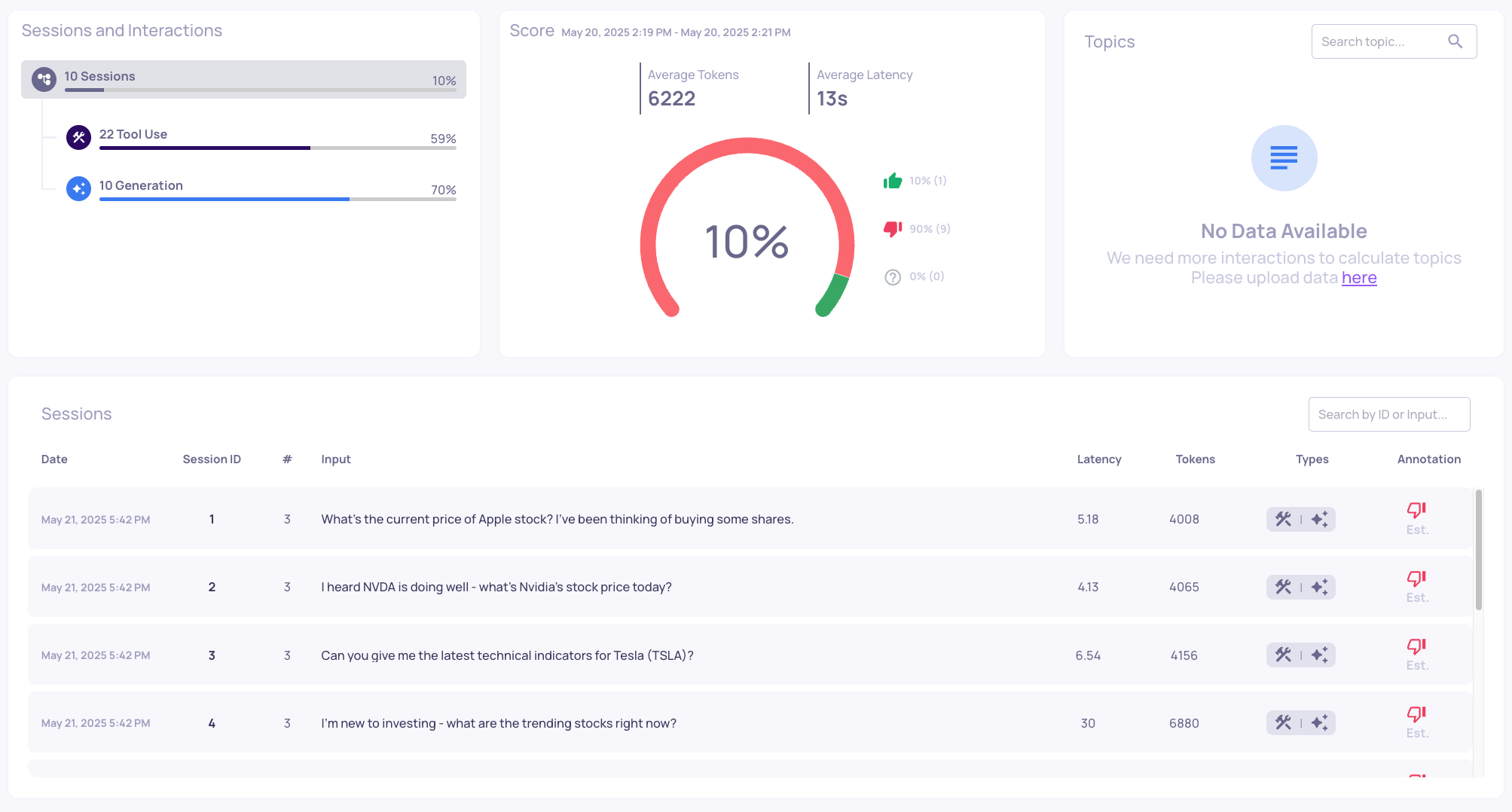

We've enhanced the Overview screen with a new Session View toggle, allowing you to switch between session-level and interaction type-level perspectives. This gives you greater flexibility in analyzing your data: you can examine both the broader session context and the granular details of individual interaction types.

Improved Prompt Property Reasoning

- We've streamlined the reasoning output for Prompt Properties, making feedback more concise and actionable. The shortened explanations focus on key insights while maintaining clarity, so you can understand evaluation results and take appropriate action without wading through verbose explanations.

Introduced Support for Sampling

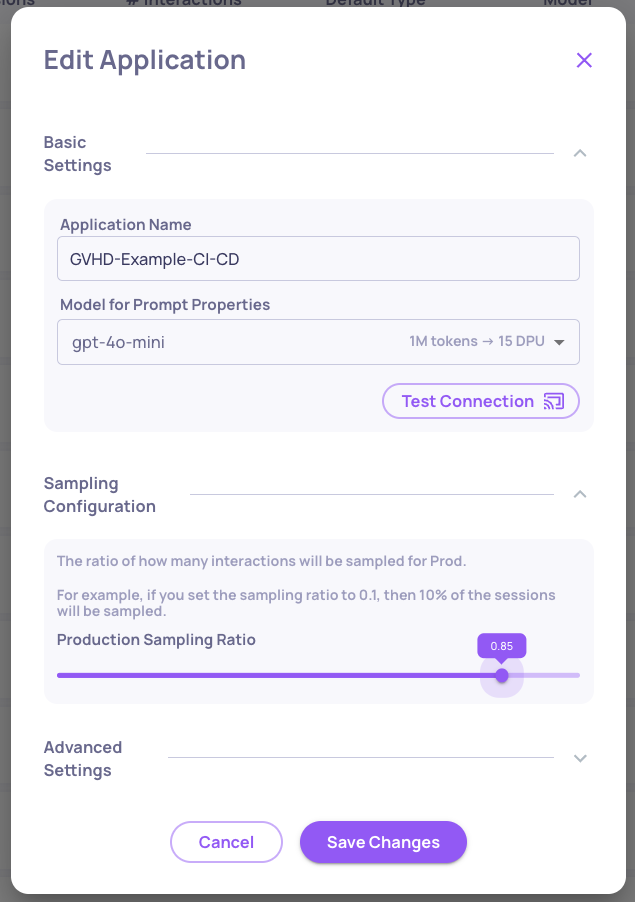

- We've added robust backend infrastructure for sampling, which allows production data to be sampled for evaluation. This feature will be accessible via the UI in the next release. For now, the sampling ratio can be selected in the application settings, and the data is accessible via the SDK.

- 3 Month Retention Period for Sampled Data: After 3 months, all data that wasn't sampled for evaluation will be deleted.
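To make the idea of a sampling ratio concrete, here is a minimal Python sketch of ratio-based sampling. It is an illustration only and not the Deepchecks implementation: the function name `is_sampled`, the `interaction_id` argument, and the hash-based selection are all assumptions made for this example.

```python
import hashlib


def is_sampled(interaction_id: str, sampling_ratio: float) -> bool:
    """Decide deterministically whether an interaction falls into the sample.

    Hypothetical helper for illustration; not part of any SDK.
    """
    if not 0.0 <= sampling_ratio <= 1.0:
        raise ValueError("sampling_ratio must be between 0 and 1")
    digest = hashlib.sha256(interaction_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the hash as an integer in [0, 2**64).
    bucket = int.from_bytes(digest[:8], "big")
    # Keep the interaction if its bucket lies in the lowest `sampling_ratio`
    # fraction of the range.
    return bucket < sampling_ratio * 2**64


# With a ratio of 0.2, roughly one in five interactions is selected.
ids = [f"interaction-{i}" for i in range(10_000)]
selected = [i for i in ids if is_sampled(i, 0.2)]
print(f"{len(selected)} of {len(ids)} interactions selected")
```

Hashing the identifier, rather than drawing a random number, means the same interaction always gets the same decision, which is one common way to keep a sample stable across repeated runs.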

Updated Default Annotation Logic

- We've improved the default annotation behavior for new applications. Previously, interactions without explicit annotations defaulted to an "unknown" status. Now they default to "good," providing a cleaner way to evaluate failure modes in quality assessment workflows and to assess the application's performance.
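
The effect of the new default can be sketched in a few lines of Python. This is a simplified illustration under assumed names (`effective_annotation`, `DEFAULT_ANNOTATION`), not the product's actual logic:

```python
from typing import Optional

# New default for interactions without an explicit annotation.
# Before this release the default was "unknown".
DEFAULT_ANNOTATION = "good"


def effective_annotation(
    explicit: Optional[str], default: str = DEFAULT_ANNOTATION
) -> str:
    """Return the explicit annotation if one exists, otherwise the default."""
    return explicit if explicit is not None else default


print(effective_annotation(None))   # no explicit annotation: falls back to the default
print(effective_annotation("bad"))  # an explicit annotation always wins
```

Under this reading, only interactions explicitly flagged otherwise stand out from the "good" baseline, which is what makes failure modes easier to isolate.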