0.26.0 Release Notes

This release brings improved properties flows, an updated usage tracking method, and flexible model choice, along with additional features and stability and performance improvements.

Deepchecks LLM Evaluation 0.26.0 Release

- 📄 New Properties Screen

- 📕 Note: Properties Naming Update

- 🦸‍♀️ LLM Model Choice for Prompt Properties

- 🪙 Usage Tracking Updated to Deepchecks Processing Units (DPUs)

- 🧮 New Property Recalculation Options

- 💽 Download All Interactions in a Session

What’s New and Improved?

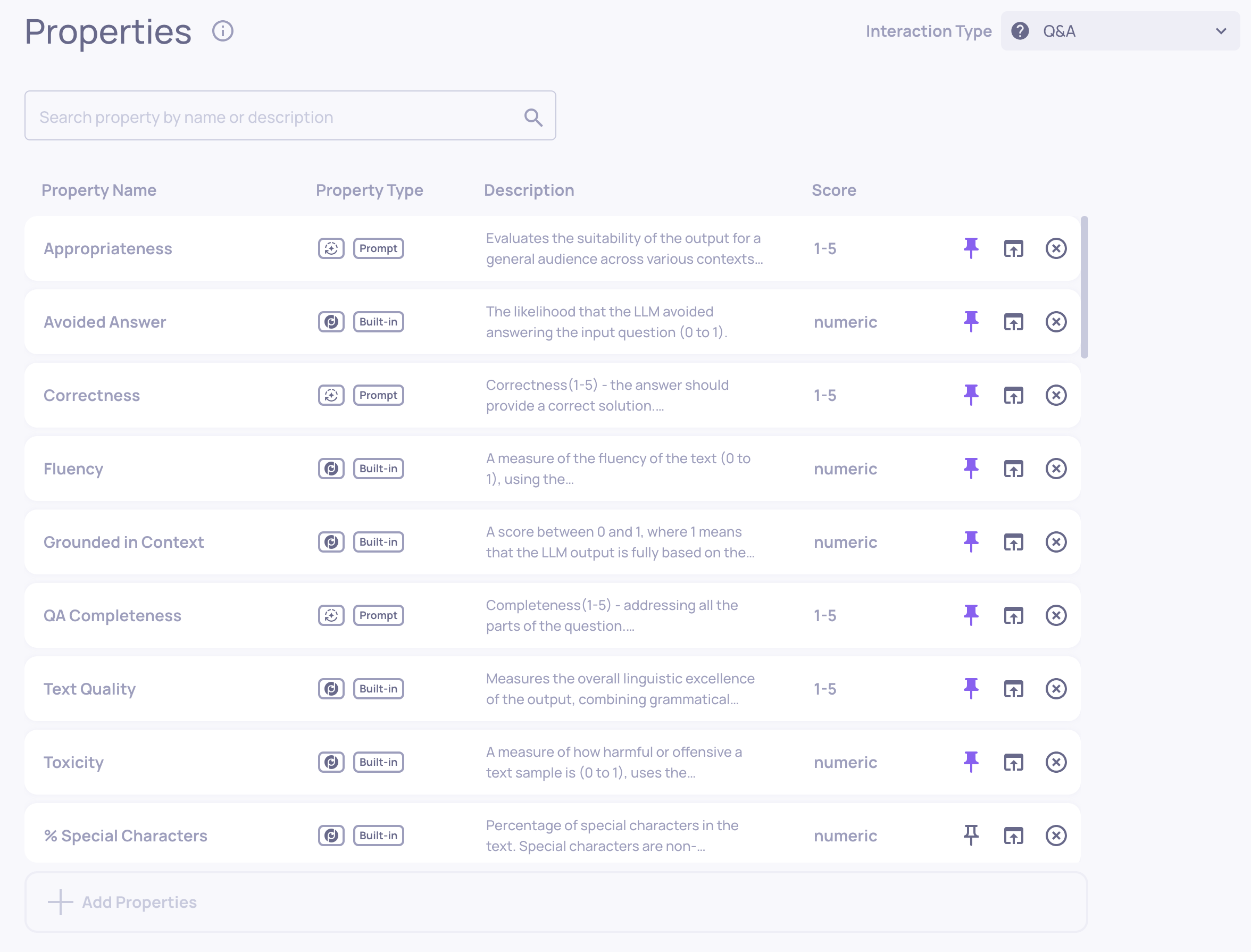

New Properties Screen

- In the main properties screen, LLM, built-in and custom properties have been consolidated into one unified list, with icon differentiation for each property type.

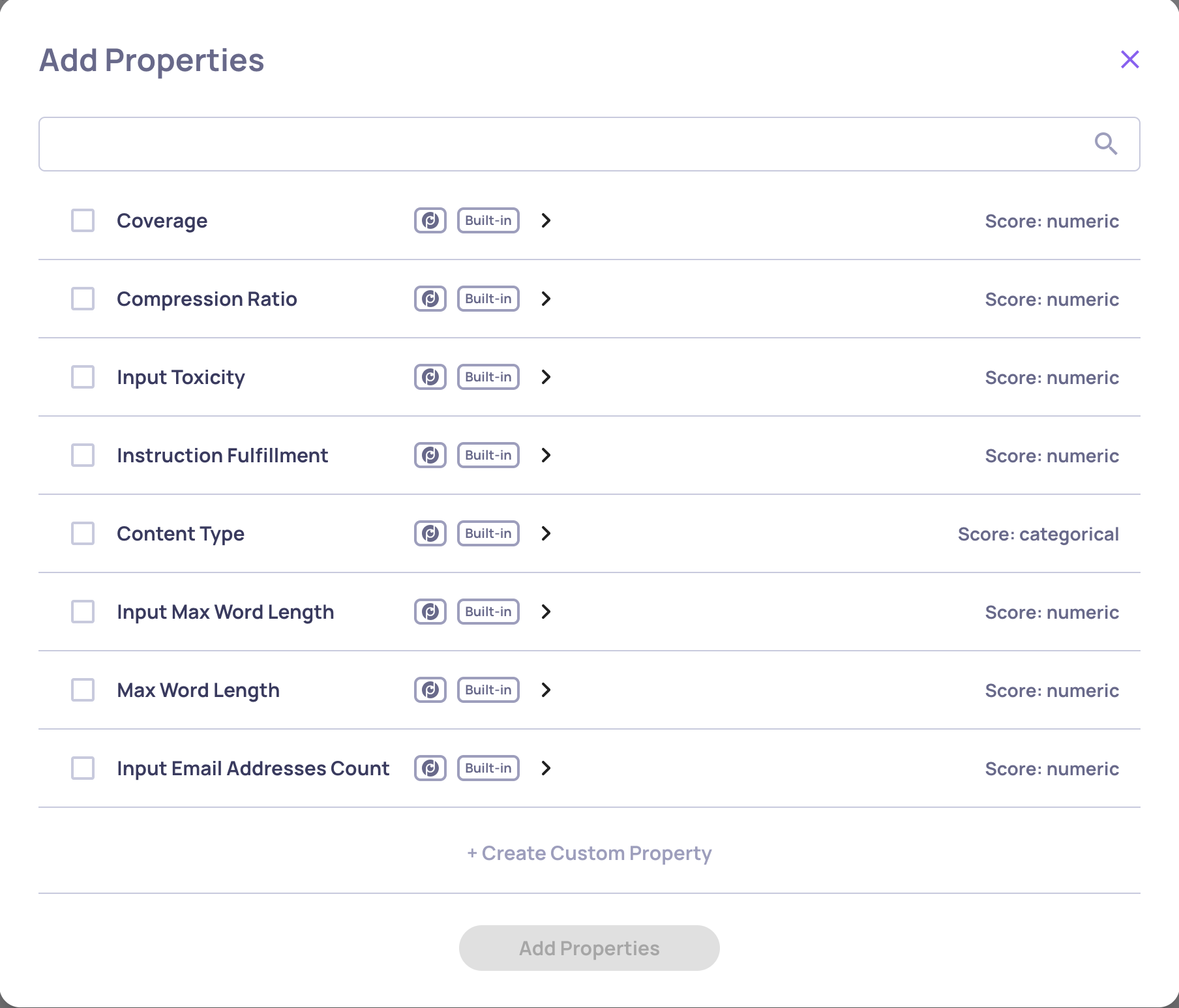

- Properties on the main screen will be automatically calculated for all interactions within the relevant interaction type. Additional properties can be incorporated by selecting them from the “property bank.”

- A centralized “hub” is now available for adding and customizing new properties.

- For more information on the new properties structure and flows, see the Deepchecks documentation.

Property Naming Updates

- To simplify property naming, Deepchecks no longer requires the types "out", "in", "llm", and "custom". Instead, the names of active properties must be unique. Accordingly, the "type" field is now redundant in YAML, and some properties were renamed for clarity and uniqueness.

- Renamed properties:

  - All properties that had an "_INPUT" suffix now have an "INPUT_" prefix instead (e.g. FLUENCY_INPUT is now INPUT_FLUENCY, and TOXICITY_INPUT is now INPUT_TOXICITY).

  - All properties that had an "_OUTPUT" or an "_LLM" suffix have dropped that suffix (e.g. LEXICAL_DENSITY_OUTPUT is now LEXICAL_DENSITY, and COMPLETENESS_LLM is now COMPLETENESS).
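If you keep old property names in scripts or YAML files, the two suffix rules above can be sketched as a small helper. This is an illustrative snippet only: `migrate_property_name` is a hypothetical function, not part of the Deepchecks SDK, and it covers just the suffix rules listed here. Any property that was renamed in another way would need to be handled separately.

```python
def migrate_property_name(old_name: str) -> str:
    """Map a pre-0.26.0 property name to its new name using the suffix rules."""
    if old_name.endswith("_INPUT"):
        # The "_INPUT" suffix becomes an "INPUT_" prefix.
        return "INPUT_" + old_name[: -len("_INPUT")]
    for suffix in ("_OUTPUT", "_LLM"):
        if old_name.endswith(suffix):
            # The "_OUTPUT" and "_LLM" suffixes are simply dropped.
            return old_name[: -len(suffix)]
    # Names without any of these suffixes are returned unchanged.
    return old_name


old_names = [
    "FLUENCY_INPUT",
    "TOXICITY_INPUT",
    "LEXICAL_DENSITY_OUTPUT",
    "COMPLETENESS_LLM",
]
renamed = {name: migrate_property_name(name) for name in old_names}
```

Because active property names must now be unique, it may be worth checking that the renamed values do not collide with each other or with any custom property names you already use.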

LLM Model Choice for Prompt Properties

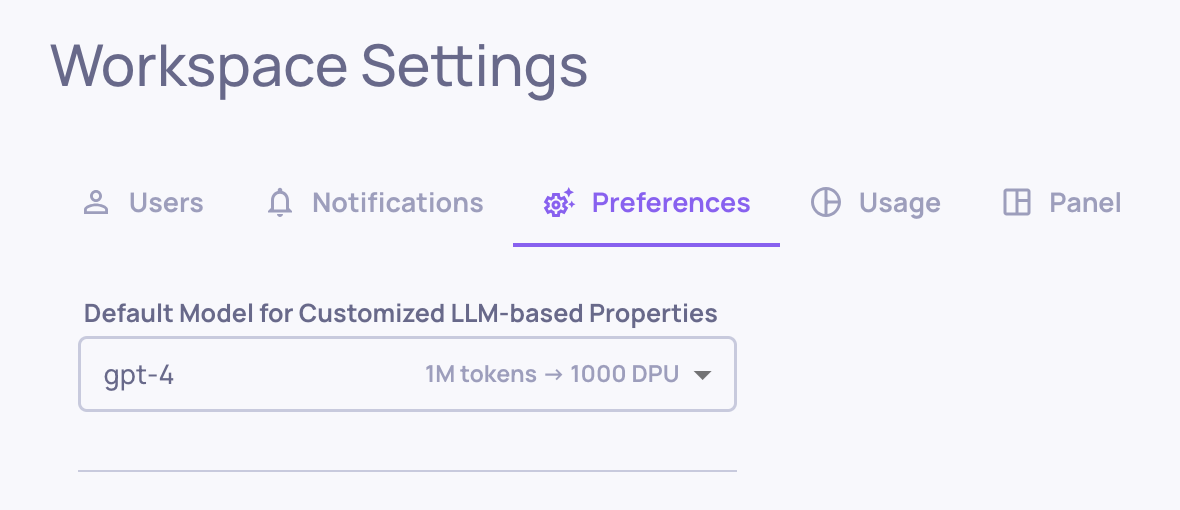

You can now select which models process your Prompt properties in the Deepchecks app, providing greater flexibility. Usage is calculated based on the selected LLM model.

This configuration can be managed on two levels:

- Organization-wide default settings (accessible via "Workspace Settings").

- Application-specific settings (override the default for specific applications in the "Application" screen).
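The two-level configuration behaves like a default with per-application overrides. The sketch below illustrates that lookup order only. The function, the application names, and the model names ("model-a", "model-b") are all hypothetical placeholders, and the snippet does not call the Deepchecks API.

```python
from typing import Dict


def resolve_model(app_name: str, org_default: str, app_overrides: Dict[str, str]) -> str:
    """Return the model used for an application's Prompt properties.

    An application-specific setting wins; otherwise the organization-wide
    default applies.
    """
    return app_overrides.get(app_name, org_default)


# Hypothetical settings: one organization default, one application override.
org_default_model = "model-a"
overrides = {"support-bot": "model-b"}

support_model = resolve_model("support-bot", org_default_model, overrides)
search_model = resolve_model("search-assistant", org_default_model, overrides)
```

Here `support_model` resolves to the override, while `search_model` falls back to the organization default.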

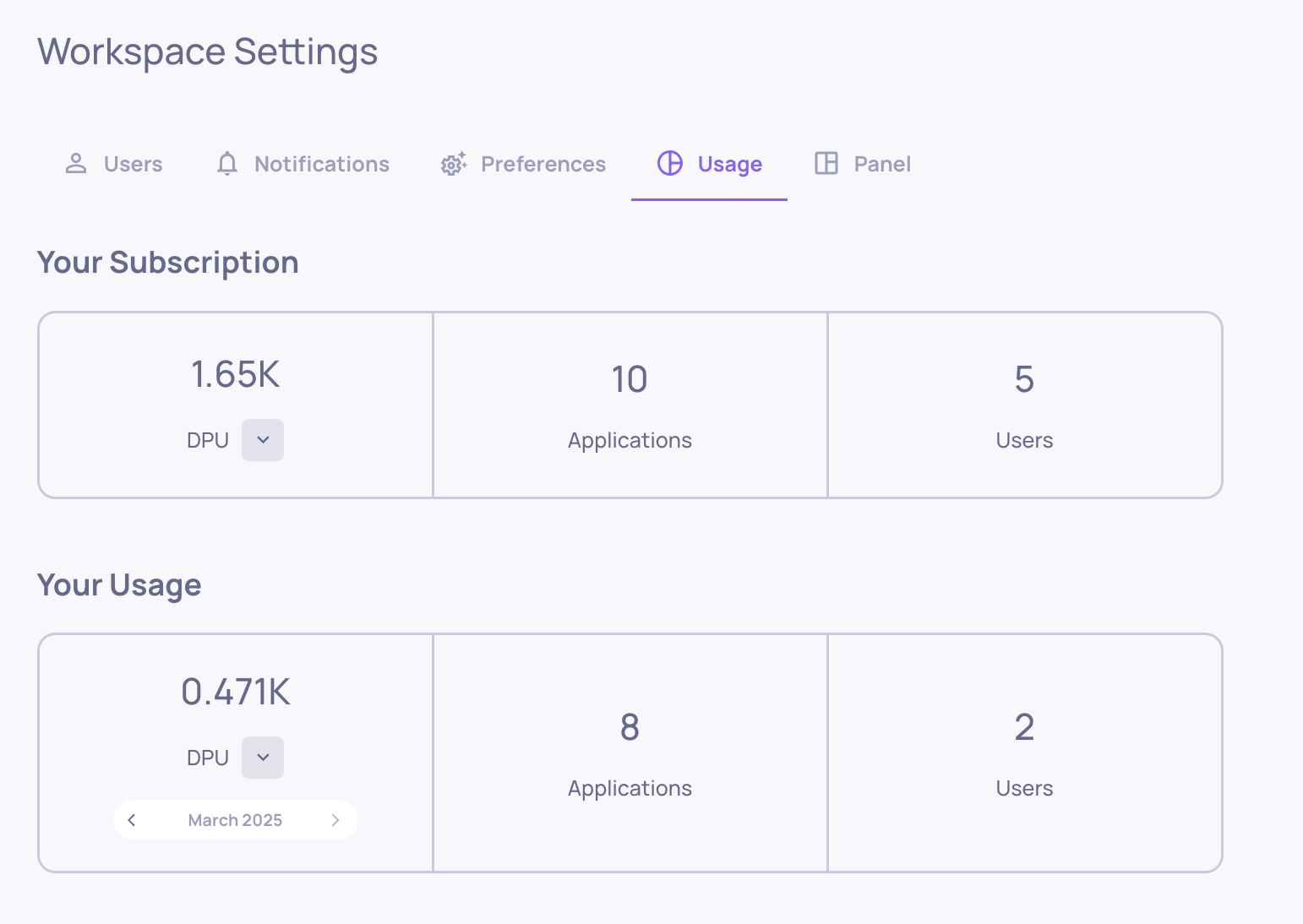

Usage Tracking Method Shift — from Tokens to DPUs

- We've updated our usage tracking method from tokens to DPUs (Deepchecks Processing Units) to accommodate our new flexible model choices. In addition to being a more accurate and transparent usage tracking method, this change provides you with a unified pool of processing units which you can allocate as needed.

- The usage screen displays your plan in DPUs and shows your monthly usage. Click the small arrow to see a detailed breakdown of your monthly DPU usage.

- Where applicable, you'll see how 1M LLM token usage converts to DPUs for different models.
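To make the unified pool idea concrete, here is a minimal sketch of adding up usage across models in DPUs. It assumes a simple linear conversion expressed as DPUs per 1M tokens. The rates and token counts below are made-up placeholders, not Deepchecks pricing, and the real values are the ones shown on your usage screen.

```python
def tokens_to_dpus(tokens: int, dpus_per_million_tokens: float) -> float:
    """Convert a token count to DPUs, assuming a linear per-million-token rate."""
    return tokens / 1_000_000 * dpus_per_million_tokens


# Placeholder rates (DPUs per 1M tokens); NOT real Deepchecks figures.
placeholder_rates = {"model-a": 1.0, "model-b": 4.0}

# Placeholder monthly token usage per model.
monthly_tokens = {"model-a": 2_500_000, "model-b": 500_000}

# All models draw from the same pool, so usage is summed in DPUs.
total_dpus = sum(
    tokens_to_dpus(tokens, placeholder_rates[model])
    for model, tokens in monthly_tokens.items()
)
```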

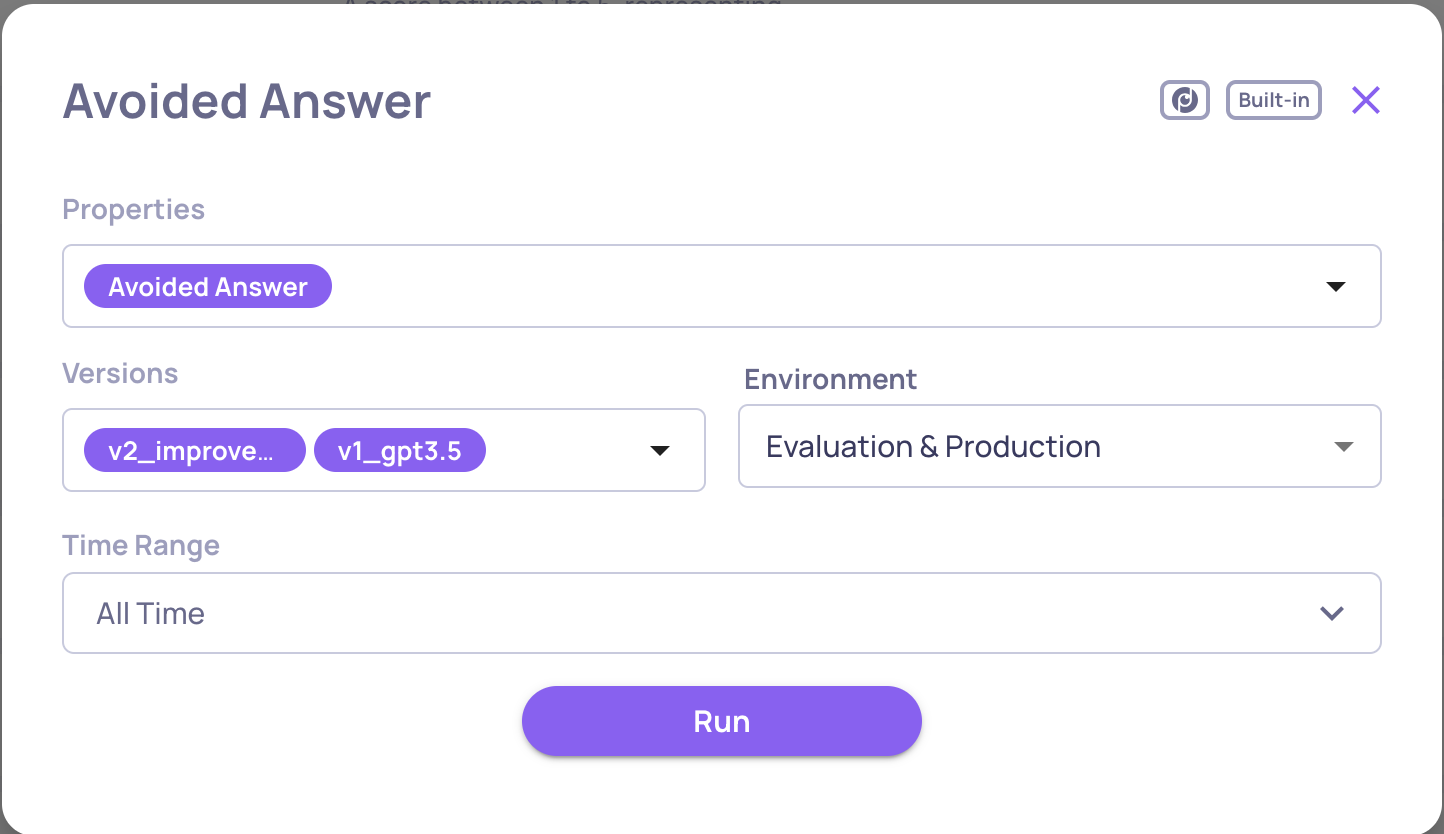

Property Recalculation Options

- You can now recalculate properties based on interaction upload dates (time range), in addition to recalculating across all interactions in selected versions.
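As a rough illustration of the two selection modes, the sketch below picks interactions either by version or by upload time range. Everything in it is hypothetical: the `Interaction` record, the field names, and the sample dates are invented for the example, the bounds are assumed to be inclusive, and the way the app combines the two filters is not specified in these notes.

```python
from datetime import datetime
from typing import Iterable, List, NamedTuple, Optional, Set


class Interaction(NamedTuple):
    # Hypothetical record; field names are illustrative only.
    interaction_id: str
    version: str
    uploaded_at: datetime


def select_for_recalculation(
    interactions: Iterable[Interaction],
    versions: Optional[Set[str]] = None,
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
) -> List[str]:
    """Return the IDs of interactions matching the given filters."""
    selected = []
    for item in interactions:
        if versions is not None and item.version not in versions:
            continue  # Outside the selected versions.
        if start is not None and item.uploaded_at < start:
            continue  # Uploaded before the time range.
        if end is not None and item.uploaded_at > end:
            continue  # Uploaded after the time range.
        selected.append(item.interaction_id)
    return selected


interactions = [
    Interaction("a", "v1", datetime(2024, 1, 5)),
    Interaction("b", "v1", datetime(2024, 1, 15)),
    Interaction("c", "v2", datetime(2024, 1, 16)),
]
by_version = select_for_recalculation(interactions, versions={"v1"})
by_time_range = select_for_recalculation(
    interactions, start=datetime(2024, 1, 10), end=datetime(2024, 1, 31)
)
```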

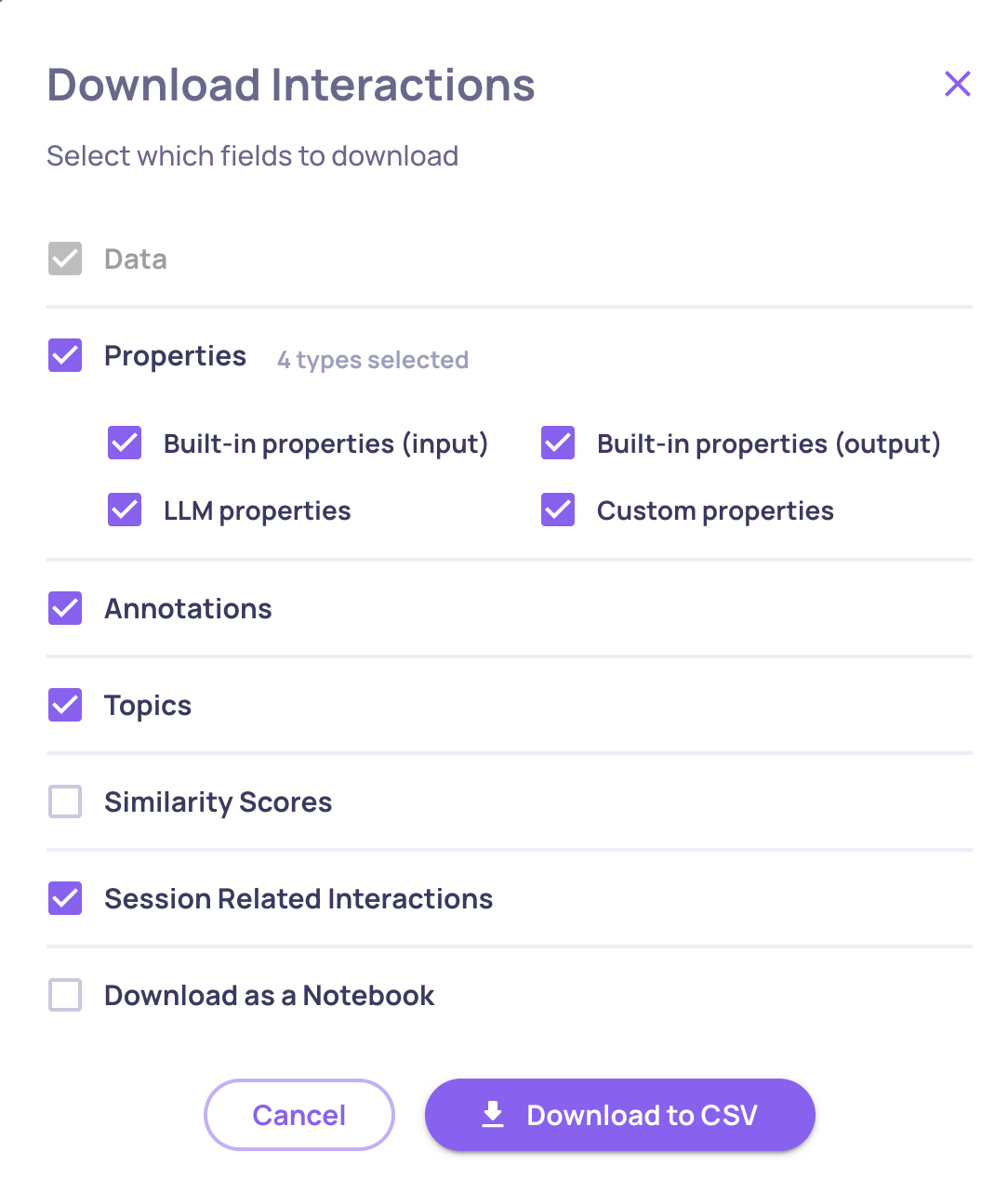

Download All Interactions in a Session (Available in UI & SDK)

In the interaction download flow, we’ve added the option to download all other interactions in a given session. Checking this option when downloading multiple interactions downloads all of the interactions from all the relevant sessions.

You can also download all session-related interactions with the SDK:

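```python
from deepchecks_llm_client.client import DeepchecksLLMClient
from deepchecks_llm_client.data_types import EnvType

# Connect to your Deepchecks deployment.
dc_client = DeepchecksLLMClient(
    host="HOST",
    api_token="API_KEY"
)

# Fetch the listed interactions plus every other interaction in their sessions.
df = dc_client.get_data(
    app_name="APP_NAME",
    version_name="APP_VERSION",
    env_type=EnvType.EVAL,
    user_interaction_ids=['46eaf233-5825-4bad-ad02-0d8dbd94994e', '80ab45da-53f4-45b1-a3b5-94c7afe05bec'],
    return_session_related=True
)
```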
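Conceptually, the session-related option expands the selected interactions to every interaction that shares a session with them. The sketch below shows that expansion on plain Python dictionaries. It is not the SDK's implementation, and the field names (`user_interaction_id`, `session_id`) and sample records are assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Set


def expand_to_sessions(
    interactions: Iterable[Dict[str, str]], selected_ids: Set[str]
) -> List[Dict[str, str]]:
    """Return every interaction that shares a session with a selected one."""
    records = list(interactions)
    # Collect the sessions that contain at least one selected interaction.
    sessions = {
        record["session_id"]
        for record in records
        if record["user_interaction_id"] in selected_ids
    }
    # Keep all interactions from those sessions, selected or not.
    return [record for record in records if record["session_id"] in sessions]


# Hypothetical sample data: two sessions, three interactions.
sample = [
    {"user_interaction_id": "i1", "session_id": "s1"},
    {"user_interaction_id": "i2", "session_id": "s1"},
    {"user_interaction_id": "i3", "session_id": "s2"},
]
expanded = expand_to_sessions(sample, {"i1"})
expanded_ids = [record["user_interaction_id"] for record in expanded]
```

Selecting only `i1` returns `i1` and `i2`, because both belong to session `s1`, while `i3` is left out.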